
Hello! Once a week, we write this email to share the links we thought were worth sharing in the Data Science, ML, AI, Data Visualization, and ML/Data Engineering worlds.

And now…let's dive into some interesting links from this week.

Amazon S3 Tables: Storage optimized for analytics workloads

Amazon S3 Tables give you storage that is optimized for tabular data such as daily purchase transactions, streaming sensor data, and ad impressions in Apache Iceberg format, for easy queries using popular query engines like Amazon Athena, Amazon EMR, and Apache Spark. When compared to self-managed table storage, you can expect up to 3x faster query performance and up to 10x more transactions per second, along with the operational efficiency that is part-and-parcel when you use a fully managed service…

Interviewing Finbarr Timbers on the "We are So Back" Era of Reinforcement Learning

Finbarr Timbers is an AI researcher who writes Artificial Fintelligence, one of the technical AI blogs I’ve been recommending for a long time, and has experience at top AI labs including DeepMind and Midjourney. The goal of this interview was to do a few things: Revisit what reinforcement learning (RL) actually is, its origins, and its motivations. Contextualize the major breakthroughs of deep RL in the last decade, from DQN for Atari to AlphaZero to ChatGPT. How could we have seen the resurgence coming? (See the timeline below for the major events we cover.) Cover modern uses for RL, o1, RLHF, and the future of finetuning all ML models. Address some of the critiques like “RL doesn’t work yet.”

LLMOps Database

A curated knowledge base of real-world LLMOps implementations, with detailed summaries and technical notes…

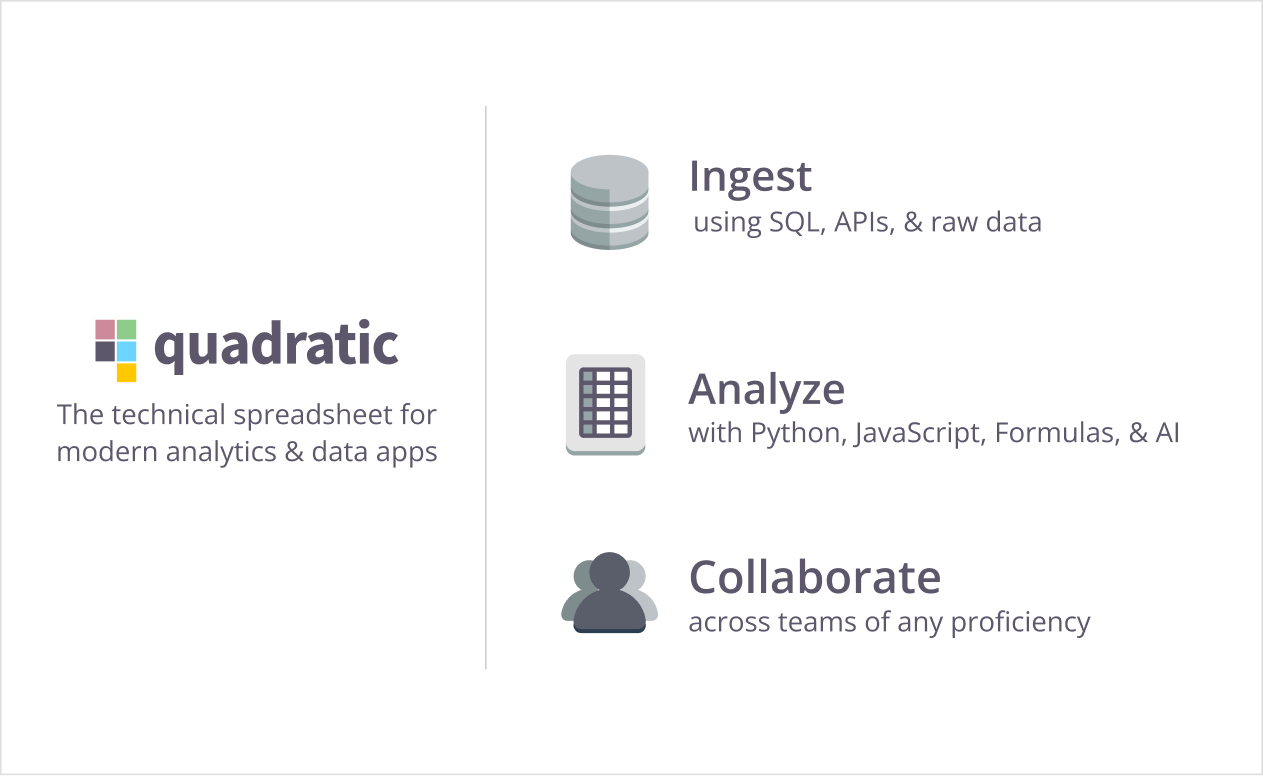

With Quadratic, combine the spreadsheets your organization asks for with the code that matches your team’s code-driven workflows. Powered by code, you can build anything in Quadratic spreadsheets with Python, JavaScript, or SQL, all approachable with the power of AI.

Use the data tool that actually aligns with how your team works with data, from ad-hoc to end-to-end analytics, all in a familiar spreadsheet. Level up your team’s analytics with Quadratic today.

* Want to sponsor the newsletter? Email us for details: team@datascienceweekly.org

Model Validation Techniques, Explained: A Visual Guide with Code Examples

Here, I’ve organized these validation techniques, all 12 of them, in a tree structure, showing how they evolved from basic concepts into more specialized ones. And of course, we will use clear visuals and a consistent dataset to show what each method does differently and why method selection matters…

I read every major AI lab’s safety plan so you don’t have to

A handful of tech companies are competing to build advanced, general-purpose AI systems that radically outsmart all of humanity. Each acknowledges that this will be a highly – perhaps existentially – dangerous undertaking. How do they plan to mitigate these risks?…I tried to write this assuming no prior knowledge. It is aimed at a reader who has heard that AI companies are doing something dangerous, and would like to know how they plan to address that. In the first section, I give a high-level summary of what each framework actually says. In the second, I offer some of my own opinions…

Diffusion Meets Flow Matching: Two Sides of the Same Coin

Flow matching and diffusion models are two popular frameworks in generative modeling. Although the two seem similar, there is some confusion in the community about their exact connection. In this post, we aim to clear up this confusion and show that diffusion models and Gaussian flow matching are the same, although different model specifications can lead to different network outputs and sampling schedules. This is great news: it means you can use the two frameworks interchangeably…

Why hasn't forecasting evolved as far as LLMs have? [Reddit]

Forecasting is still very clumsy and very painful. Even the models built by major companies -- Meta's Prophet and Google's Causal Impact come to mind -- don't really succeed as one-step, plug-and-play forecasting tools. They miss a lot of seasonality, overreact to outliers, and need a lot of tweaking to get right…It's an area of data science where the models that I build on my own tend to work better than the models I can find. LLMs, on the other hand, have reached incredible versatility and usability…Why is that? After all the time we as data scientists have put into forecasting, why haven't we created something that outperforms what an individual data scientist can create? Or -- if I'm wrong, and that does exist -- what tool does that?…

Skimpy - A light weight tool for creating summary statistics from dataframes

skimpy is a light weight tool that provides summary statistics about variables in pandas or Polars data frames within the console or your interactive Python window. Think of it as a super-charged version of pandas’ df.describe()…

Probabilistic weather forecasting with machine learning

We introduce GenCast, a probabilistic weather model with greater skill and speed than the top operational medium-range weather forecast in the world, ENS, the ensemble forecast of the European Centre for Medium-Range Weather Forecasts. GenCast is an ML weather prediction method, trained on decades of reanalysis data…

Disposable environments for ad-hoc analyses

In this blog post, I explore the innovative 'juv' package, which simplifies Python environment management for Jupyter notebooks by embedding dependencies directly within the notebook file. This approach eliminates the need for separate environment files, making notebooks easily shareable and reducing setup complexity. I also discuss integrating 'juv' with 'pyds-cli' to streamline ad-hoc data analyses within organizations, enhancing reproducibility and reducing environment conflicts. Curious about how this could change your data science workflow?…

Universal Semantic Layer: Capabilities, Integrations, and Enterprise Benefits

This article examines how semantic layers fit into modern data architectures and their critical benefits, from API-driven access to enhanced governance, and why they've become essential in today's data stack…

Meta Learning: Addendum or a revised recipe for life

In 2021 I published Meta Learning: How To Learn Deep Learning And Thrive In The Digital World. The book is based on 8 years of my life where nearly every day I thought about how to learn machine learning and how to do machine learning efficiently and at a high level…The more I pursued ML professionally, the more I started to think that the recipe that led me up to this point no longer applied. On one hand, this hasn't impacted my work one bit. I have been an employee for nearly 20 years and that's plenty of time to figure things out. However, I didn't appreciate how big of a role being part of the fast.ai community and continuous learning played in my life. How important following the recipe was for me on a personal level. I share my experience in the hopes that should you find yourself in a similar situation, you might have an easier time balancing your personal growth trajectory and your work…

Successive Halving

There is an experimental technique for cross validation in scikit-learn that revolves around "Successive Halving". In this livestream we discuss how the technique works but also why the approach is still considered experimental at the time of making this recording…
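For readers who have not met the idea before, here is a minimal pure-Python sketch of successive halving: score every candidate on a small budget, keep the best fraction, then repeat with a larger budget. This is our own illustration, not code from the livestream and not scikit-learn's implementation. The function names, the toy scoring function, and the default values are all assumptions made for the example.

```python
import random


def successive_halving(candidates, evaluate, min_resources=10, factor=2):
    """Return the surviving candidate and a log of (resources, n_candidates) per round.

    Illustrative sketch only: `evaluate(candidate, resources)` is a hypothetical
    callback that returns a score (higher is better) for the given budget.
    """
    survivors = list(candidates)
    resources = min_resources
    history = []
    while len(survivors) > 1:
        history.append((resources, len(survivors)))
        # Rank all survivors using the current (cheap) budget.
        ranked = sorted(survivors, key=lambda c: evaluate(c, resources), reverse=True)
        # Keep the top 1/factor of candidates, at least one.
        survivors = ranked[: max(1, len(ranked) // factor)]
        # Spend more resources per candidate in the next round.
        resources *= factor
    return survivors[0], history


def toy_score(candidate, resources):
    """Made-up objective: best at 0.3, with noise that shrinks as resources grow."""
    rng = random.Random(f"{candidate}-{resources}")  # deterministic per (candidate, budget)
    noise = rng.uniform(-1, 1) / (10 * resources)
    return -((candidate - 0.3) ** 2) + noise


candidates = [i / 10 for i in range(8)]  # 0.0, 0.1, ..., 0.7
best, history = successive_halving(candidates, toy_score)
print(best, history)
```

In scikit-learn itself, the corresponding estimators (such as `HalvingGridSearchCV`) are only importable after `from sklearn.experimental import enable_halving_search_cv`, which is one visible sign of the experimental status discussed in the livestream.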

Why You Should Care About AI Agents

So what exactly are AI agents, and how soon (if ever) can we expect them to become ubiquitous? Should their emergence excite us, worry us, or both? We’ve investigated the state-of-the-art in AI agents and where it might be going next…

Jobs where Bayesian statistics is used a lot? [Reddit]

How much Bayesian inference are data scientists generally doing in their day-to-day work? Are there roles in specific areas of data science where that knowledge is needed? Marketing comes to mind but I’m not sure where else. By knowledge of Bayesian inference I mean building hierarchical Bayesian models or more complex models in languages like Stan…

How to be a wise optimist about science and technology?

I believe the central problem of the 21st century is how civilization co-evolves with science and technology. As our understanding of the world deepens, it enables technologies that confer ever more power to both improve and damage the world…This essay emerged from a personal crisis. From 2011 through 2015 much of my work focused on artificial intelligence. But in the second half of the 2010s I began doubting the wisdom of such work, despite the enormous creative opportunity…one needs a big-picture view of how humanity should meet the challenges posed by science and technology, and especially by artificial superintelligence (ASI). This essay attempts to develop such a big-picture view…

* Based on unique clicks.

** Find last week's issue #575 here.

Learning something for your job? Hit reply to get our help.

Looking to get a job? Check out our “Get A Data Science Job” Course

It is a comprehensive course that teaches you everything related to getting a data science job based on answers to thousands of emails from readers like you. The course has 3 sections: Section 1 covers how to get started, Section 2 covers how to assemble a portfolio to showcase your experience (even if you don’t have any), and Section 3 covers how to write your resume.

Promote yourself/organization to ~64,300 subscribers by sponsoring this newsletter. 35-45% weekly open rate.

Thank you for joining us this week! :) Stay Data Science-y! All our best,

Hannah & Sebastian

Invite your friends and earn rewards

If you enjoy Data Science Weekly Newsletter, share it with your friends and earn rewards when they subscribe.

Invite Friends