OpenAI Nonprofit Buyout: Much More Than You Wanted To Know

Last month, I put out a request for experts to help me understand the details of OpenAI’s forprofit buyout. The following comes from someone who has looked into the situation in depth but is not an insider. Mistakes are mine alone.

Why Was OpenAI A Nonprofit In The First Place?

In the early 2010s, the AI companies hadn’t yet discovered scaling laws, and so underestimated the amount of compute (and therefore money) it would take to build AI. DeepMind was the first victim; originally founded on high ideals of prioritizing safety and responsible stewardship of the Singularity, it hit a financial barrier and sold to Google.

This scared Elon Musk, who didn’t trust Google (or any corporate sponsor) with AGI. He teamed up with Sam Altman and others, and OpenAI was born. To avoid duplicating DeepMind’s failure, they founded it as a nonprofit with a mission to “build safe and beneficial artificial general intelligence for the benefit of humanity”.

But like DeepMind, OpenAI needed money. At first, they scraped by with personal donations from Musk and other idealists, but as the full impact of scaling laws became clearer, Altman wanted to form a forprofit arm and seek investment. Musk and Altman disagree on what happened next: Musk says he objected to the profit focus, Altman says Musk agreed but wanted to be in charge. In any case, Musk left, Altman took full control, and OpenAI founded a forprofit subsidiary.

This subsidiary was supposedly a “capped forprofit”, meaning that its investors were capped at 100x return - if someone invested $1 million, they could get a max of $100 million back, no matter how big OpenAI became - this ensured that the majority of gains from a Singularity would go to humanity rather than investors. But a capped forprofit isn’t a real kind of corporate structure; in real life OpenAI handles this through Profit Participation Units, a sort of weird stock/bond hybrid which does what OpenAI claims the capped forprofit model is doing.
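As a rough illustration of the 100x cap described above, here is a minimal Python sketch. It only models the headline rule (an investor's payout is limited to 100 times what they put in); the function name `capped_payout` is made up for this example, and the real Profit Participation Units are surely more complicated than this.

```python
def capped_payout(invested, uncapped_payout, cap_multiple=100):
    """Split a hypothetical payout into the investor's capped share and the excess.

    invested:        amount the investor originally put in
    uncapped_payout: what the investor's stake would pay with no cap
    cap_multiple:    the return cap (100x in the text)
    """
    cap = invested * cap_multiple
    to_investor = min(uncapped_payout, cap)
    excess_above_cap = uncapped_payout - to_investor
    return to_investor, excess_above_cap


# The example from the text: a $1 million investment can return at most $100 million.
investor, excess = capped_payout(1_000_000, 500_000_000)
print(investor, excess)
```

Below the cap, the investor simply keeps the whole payout and the excess is zero.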

Why Is OpenAI No Longer Happy To Be A Nonprofit?

It’s neither unusual nor illegal for nonprofits to own forprofits. Outdoorwear company Patagonia is a typical example. Its billionaire founder gave his shares to a purpose-designed environmentalist trust. They run Patagonia in an environmentally friendly way and spend the profits on environmental protection. In theory, OpenAI could do something similar.

It’s not even illegal for a nonprofit to sell stock in a forprofit that it owns. It only needs to argue that its charitable purpose would be better served by having more money now than by continuing to own the stock.

So why is OpenAI unhappy with its current situation? They’ve already provided us the answer - it makes it harder to sell stock, or makes the stock worth less (so much so that participants in a recent funding round made the money conditional on a change to the structure). But why would it do this? This is the part I’m least sure about, but here are some vague guesses.

The nonprofit board continues to control the company. The original board included idealists who got seats in exchange for early donations to the nonprofit. When this faction got cold feet and fired Altman in November 2023, it highlighted the danger (to investors) of nonprofit control. When Altman came back, he replaced most of the idealists with standard Silicon Valley business types, mitigating the risk. But these people still have a fig-leaf legal obligation to put the good of humanity above shareholder value, and even this fig leaf makes investors nervous.

If the nonprofit board sold 51% of the stock (including governance rights) to outside investors, they could remove this problem. In some sense, that’s what they’re trying to do now. But why can’t they do it naturally - just keep selling stock until they’ve sold 51%? If they didn’t broadcast that they were going to do this, buyers would underpay for the stock, because they would still worry the nonprofit board would stay in charge.
But also, controlling OpenAI is heavily related to their supposed nonprofit mission of ensuring that AI benefits humanity. If they were to sacrifice that control in a seemingly dumb way - whoops, guess that last stock sale put us under 50%, suppose that means we’re not in charge anymore - they could get in trouble for breaching their fiduciary duties. Their odds are better if they just sell the whole thing openly to someone who understands the deal they’re getting and is willing to pay for it.

How Will Altman Turn OpenAI Into A Forprofit?

First, he’ll start a new shell company. Realistically this will also be named OpenAI, but to avoid confusion, let’s call it Altman Skullduggery, Inc. ASI will offer to buy OpenAI LLC from the nonprofit for its fair value (some sources say he plans to bid $40 billion). The board (made of hand-picked Altman loyalists) will agree. ASI (a normal forprofit) will get all of OpenAI’s useful corporate assets, and the nonprofit will get $40 billion, which it can spend on benefitting humanity if it wants.

Where will Altman get $40 billion? He won’t; rather, he’ll offer the nonprofit shares in Altman Skullduggery, Inc.

Why are shares in ASI, a meaningless shell company with no assets, worth anything? Because it will soon own OpenAI, an exciting cutting-edge AI lab!

I am naive to the ways of finance, so this originally struck me as some kind of ridiculous perpetual motion bullshit. But I was eventually convinced it made sense. Suppose that OpenAI is currently underperforming its potential because it’s stuck in an awkward nonprofit governance structure; it ought to be worth $100 billion, but given the awkward governance it’s only worth $30 billion. ASI could bid 40% of its shares.
Then, when OpenAI leaves the nonprofit and its value shoots up from $30 billion to $100 billion, both sides will have won: the nonprofit will have received $40 billion in ASI stock for its $30 billion asset (a profit of $10 billion), and ASI will have received a 60% share in OpenAI for nothing (a profit of $60 billion). Therefore, both sides are happy! I guess maybe this is how all private equity works.

Why can’t I do this same maneuver and get rich with no effort? If I don’t have a plan to increase the value of the company, then OpenAI-in-my-shell-company is worth the same amount as OpenAI now. Therefore, I have to offer 100% of shares in my shell company to afford OpenAI. Therefore, the deal is meaningless - the people who started with 100% of the shares still have 100% of the shares, and I still have zero. Maybe it’s not completely meaningless - I could replace Altman as CEO - but this is bad (I am a less skilled CEO than Altman and nobody would want to make that switch). In the extremely unlikely event that the board did want to make the switch, they could just fire Altman and replace him the usual way (if they dared).

If I did have an exciting plan to increase the value of the company and could convince the board, then this would just be a regular private equity deal. Private equity companies often do get rich, but it’s not literally no effort - they need to make a case that they can increase shareholder value in some way that the current leadership (and other potential bidders) can’t.

How Much Should Altman Pay The Nonprofit For OpenAI?

Why offer more than $0.00? The nonprofit board is packed with his supporters. They’ll vote yes on anything he asks. So why spend money at all?

Because it’s illegal for a nonprofit to sell assets for less than they’re worth. If it wasn’t illegal, then anyone could loot a charity to line their own pockets.
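To make the share-swap arithmetic from the shell-company example concrete, here is a minimal Python sketch. The $30 billion and $100 billion figures are the hypothetical ones from that example, not real valuations, and the function names (`share_swap`, `min_fair_stake`) are invented for illustration. It uses exact fractions so the percentages come out cleanly.

```python
from fractions import Fraction


def share_swap(value_before, value_after, stake_offered):
    """Gains to each side when a shell company pays for an asset with its own shares.

    value_before:  what the asset is worth under the current owner
    value_after:   what it is worth once the shell company owns it
    stake_offered: fraction of the shell company's shares given to the seller
    Returns (seller_gain, bidder_gain) in the same units as the values.
    """
    stake = Fraction(stake_offered)
    seller_gain = stake * value_after - value_before
    bidder_gain = (1 - stake) * value_after
    return seller_gain, bidder_gain


def min_fair_stake(value_before, value_after):
    """Smallest stake that leaves the seller no worse off than keeping the asset."""
    return Fraction(value_before) / Fraction(value_after)


# Hypothetical figures from the example, in billions of dollars.
seller_gain, bidder_gain = share_swap(30, 100, "0.40")
print(seller_gain, bidder_gain)  # nonprofit's profit, ASI's profit

# A bidder with a plan to raise the value can offer less than 100%...
print(min_fair_stake(30, 100))
# ...but a bidder with no plan (value unchanged) must offer every share.
print(min_fair_stake(30, 30))
```

The last two lines are the "why can't I do this and get rich" point in miniature: the bidder only keeps something if the value after the deal exceeds the value before it.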
If board members approved an unfairly low offer, they could be charged with breaching their fiduciary duties (in this case, their duty to carry out the charity’s mission of benefiting humanity). The relevant regulators are the Attorneys General of California (where OpenAI operates) and Delaware (where it is registered). They try not to second-guess company boards’ decisions too much, limiting their intervention to clear fraud without even a fig leaf of honesty. But $0.00 wouldn’t even have that fig leaf.

So Altman needs to figure out the lowest number he can offer that gives the board a fig leaf of accepting a fair offer and trying to benefit humanity. How much would that be? A recent investment round valued OpenAI at $157 billion conditional on it escaping the nonprofit. It must be worth less than that when it’s still in the nonprofit - but maybe not too much less - and ASI should pay somewhere between the nonprofit value and the forprofit value (so that both sides gain from the deal). Altman previously floated $40 billion, which struck most observers as too low, maybe too low to even provide the fig leaf.

What About Elon Musk’s Offer?

Elon Musk recently offered the nonprofit $97.4 billion for OpenAI. He sweetened the deal by guaranteeing that the nonprofit would continue to have a controlling share. Rumor says this was a clever ploy. Musk knew the board was in Altman’s pocket and would turn him down. But he knew it would complicate their task of looking honest to regulators. What honest person would turn down a $97 billion offer only to accept a $40 billion one?

The situation isn’t hopeless. The board could try saying something like “Well, Elon Musk is a crazy person who’s currently dismantling the federal government with a chainsaw; we charitable people acting for the benefit of humanity think it’s worth losing $57 billion to keep him away from the control panel for the machine god.” As fig leaves go, that’s honestly a pretty good one.
This strategy is dicey, and realistically Altman will supplement it by increasing his offer. I heard rumors of a new offer where the nonprofit keeps a controlling interest, but I can’t find a credible source.

So If Altman Increases His Offer, Then It’s Fair, Right?

We’re not sure! The nonprofit’s mission was to create AI in a way that benefits humanity. If they sold the company, they might get $40 billion (or whatever). You can do a lot of good charity work with $40 billion. But the nonprofit isn’t supposed to do generic good charity work. It’s supposed to create AI in a way that benefits humanity.

You can certainly do lots of great things for beneficial AI with $40 billion (I know some AI alignment charities that would love that kind of money - the OpenAI board can send me an email if it wants to be put in contact!) But I don’t know, investing $40 billion in worthy AI-related causes seems a lot less like creating AI than, you know, being OpenAI and actually creating AI.

So separate from the argument that Altman is offering too little, there’s another argument - which judges and the Attorneys General will have to consider - that they shouldn’t be allowed to sell at any price.

Where Does Elon Musk’s Lawsuit Fit In?

During the original nonprofit days, Musk donated $44 million. He says OpenAI violated his rights when they accepted his $44 million for charitable purposes, then used it to become a corporate titan that competed with him. Surprising nobody who knows Elon, the complaint comes on strong:

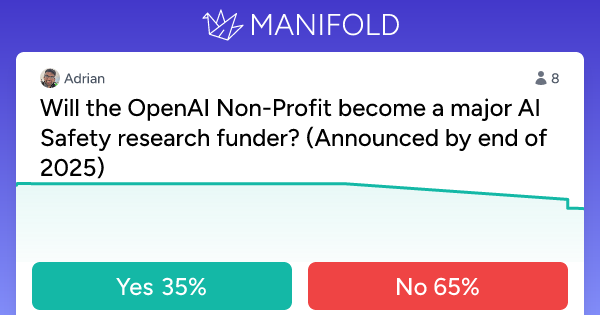

Earlier this month, the judge denied a preliminary injunction. That is, she declined to block OpenAI from doing their forprofit conversion until the trial was over. This was always a long shot and would have required Musk to be overwhelmingly obviously in the right.

But Rob Wiblin and Garrison Lovely say the narrative that “Musk lost” is too simple. The judge ruled that Musk only has standing to sue if he meant for his $44 million donation to be restricted in some way. But she also said that if he did have standing to sue, his case seemed strong on the merits. So she will hold a trial to see whether he has standing, and, if so, likely rule in his favor.

Wiblin estimates a 45% chance that the judge will eventually rule in Musk’s favor. Then what? If the nonprofit has already converted to a forprofit, she might order it to convert back, or change its governance. Or she might just make them give Musk a lot of money.

Even if the judge doesn’t block the conversion, the lawsuit could still cause trouble. If the judge rules that Musk doesn’t have standing but his case is good, the Attorneys General might use the “his case is good” part when making their own analysis of whether to permit the buyout. And if the board members agreed to the forprofit conversion after a judge had said - even in a nonbinding way - that the case against it was good, it would make it easier for someone (eg Musk) to accuse them individually of breach of duty.

If The Nonprofit Became A Real Nonprofit, What Would It Do?

Altman has suggested it would “hire a leadership team and staff to pursue charitable initiatives in sectors such as health care, education, and science.” Whatever it did would have to follow its mission statement of using AI to benefit humanity. I think it would be a stretch to say that they used the AI to get the money and now the money is benefiting humanity, so probably the charitable initiatives would have to involve AI in some way.
Maybe this would look like helping hospitals use AI to treat patients.

Manifold asks whether they might end up funding AI safety efforts. This sort of makes sense - surely this is the most direct way to interpret a mandate of using charity dollars to “make sure AI benefits humanity”. And an obvious commitment to pursuing their mission exactly as described would look good to regulators. But it also might not be as popular with the normies as “health care, education, and science” - and doing popular things would look good to regulators too. If this is on their mind, Altman hasn’t mentioned it. Unless a judge does something crazy, it will be the current nonprofit board (of Altman loyalists) who make this decision.

Does Any Of This Matter For Singularity Believers?

Maybe. If OpenAI successfully converts to a forprofit, it can get more investment. That will make it easier to scale faster and keep its lead. If you don’t want fast scaling, or you don’t want OpenAI to be in the lead, you should root against them.

But if OpenAI successfully converts to a forprofit, then the nonprofit has to reinvent itself as a real charity, funding healthcare and science and maybe if we’re really lucky AI safety. If you’re excited about this funding, you should root for them.

The original goal of OpenAI was to ensure that the singularity created broad-based prosperity for everyone, maybe through a basic income. Whether or not a charity holds 51% (or some other number) of OpenAI shares is relevant to this vision. If OpenAI initiates a singularity and ends up with all the money in the world, then maybe 49% of all the money in the world goes to their investors (creating a caste of mega-plutocrats), but 51% goes to a nonprofit charged with benefiting all humanity (and this is where the basic income comes from?). I do think it’s kind of unlikely that OpenAI ends up creating God yet also remains subject to the Attorney General of California.
But it’s not totally impossible - Robin Hanson has written a lot about how if there are many competing near-peer superintelligences and companies, they might choose to keep existing property and governance structures as referees. In this one unlikely scenario, the exact percent of OpenAI shares held by investors vs. charity might matter a lot.

Finally, the regulators (either the judge of Musk’s lawsuit, or the Attorneys General) might rule that everything about OpenAI sucks and they have to start over. Maybe this would mean firing the board and replacing it with . . . some other board? People who have some strong claim to really care about whether AI benefits all humanity or not? I don’t know, a man can dream. Business Insider discusses an incipient coalition between Meta and various public interest watchdogs pushing this solution:

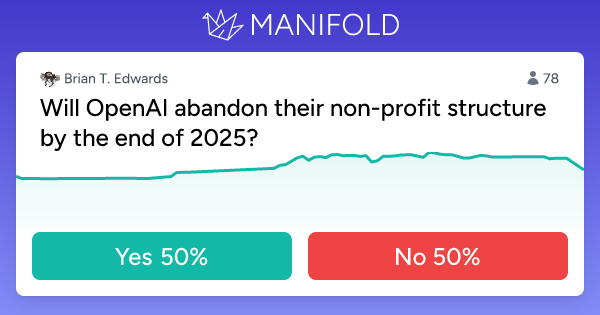

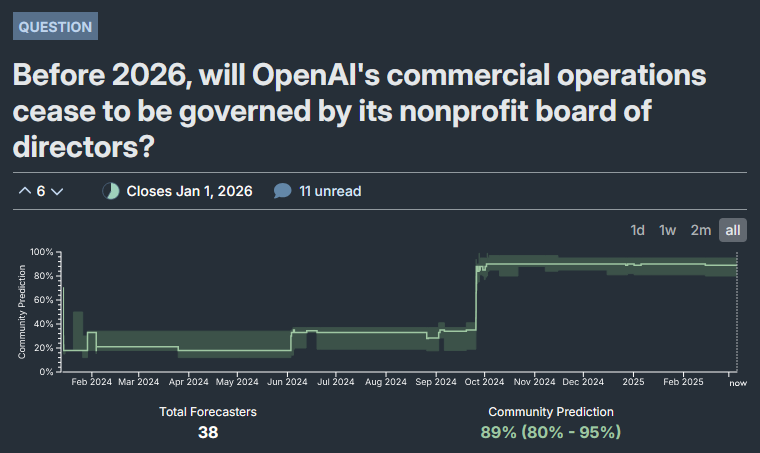

What Do Prediction Markets Say?

This is the biggest Manifold market on the topic. The big drop at the end is when the judge ruled Musk’s case had merit. This Metaculus question looks like the Manifold market, but without the big drop at the end. Are the Manifolders overreacting, or are the Metaculans asleep at the wheel?

Bonus Question 1: Who Are The Attorneys General Of California And Delaware?

California AG Rob Bonta is a former nonprofit coordinator with experience at the ACLU and other activist groups. He is a lifelong Democrat and close ally of Governor Newsom. He has a reputation for being Tough On Guns, sometimes veering into what I consider publicity stunts (when Texas passed a questionably-constitutional bounty for tips on illegal abortions, he tried to own the cons by proposing a questionably-constitutional bounty for tips on illegal guns). In the past, the Newsom administration has sided with AI companies to please its Silicon Valley donors. But Bonta has previously been involved in antitrust lawsuits against Amazon and Google, so he’s not exactly afraid to stand up to Big Tech.

Delaware Attorney General Kathy Jennings, also a Democrat, is a former prosecutor. She wrote a letter to the court hearing Musk’s lawsuit:

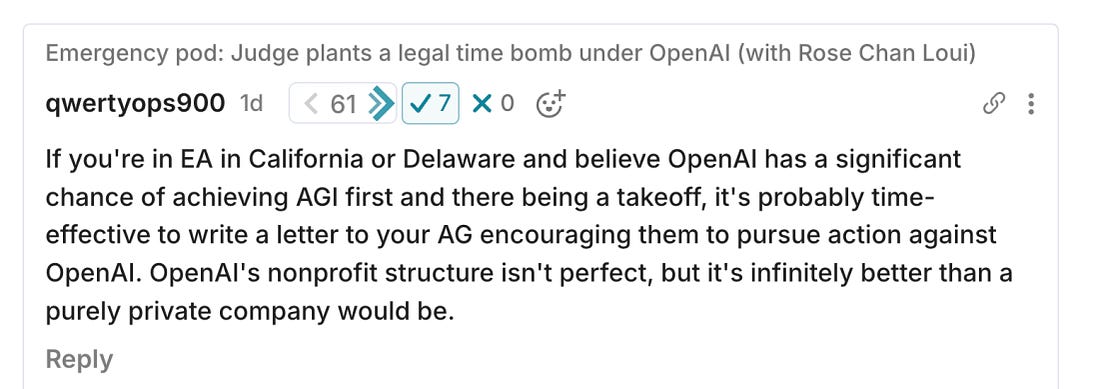

A person who I don’t know claims that maybe writing letters to these people could be meaningful.

Bonus Question 2: What Is Anthropic’s Structure?

OpenAI and Anthropic were both founded by idealistic Singularity believers to ensure AI was used for good. OpenAI tried to implement their commitment by being a nonprofit; Anthropic used a different corporate arrangement.

Anthropic is a public benefit company - much closer to a normal forprofit than OpenAI. It’s run by a board of five people. Two members of the board are picked the normal way by investors. But the other three (a majority!) are picked by a group of five people called the Long Term Benefit Trust. At the beginning of Anthropic, the founders seeded the Trust with trustworthy smart outsiders who seemed interested in the long-term benefit of humanity. Trustees can choose their own replacements without input from investors. At any time, they can use their three board members to form a majority on the board and overrule what everyone else is doing.

How’s it going? Okay. Some of the original LTBT members took jobs in government, and had to quit the LTBT as a conflict of interest. A source close to Anthropic suggests they had trouble finding replacements - most of the altruistically-minded eminent AI people had conflicts of interest of their own. As a result, the LTBT seems to be down to three people, all of whom have great credentials as thoughtful philanthropists but none of whom are especially connected to AI in particular. They seem to be overdue in electing their board members, and we don’t know why.

Zach Stein-Perlman, who runs AI Lab Watch, argues that Maybe Anthropic’s Long-Term Benefit Trust Is Powerless. He points to a rule that a supermajority (with complicated definition, see here) of stockholders can overrule the LTBT.
Anthropic partisans counter that they need some way to deal with the trust losing the plot; many of Anthropic’s shareholders are also people with a special interest in AI safety, and it would be hard to get a majority of these people to overrule the LTBT unless it was important.

I think Anthropic seems to be going pretty well and the Trust hasn’t had any reason to interfere, so we haven’t gotten a chance to see how much power they really wield.
