TypeScript Migrates to Go: What's Really Behind That 10x Performance Claim?

Welcome to the new week!

Almost two weeks ago, Microsoft announced they're porting the TypeScript compiler from JavaScript to Go, promising a staggering 10x performance improvement. This news spread like wildfire across tech communities, with everyone from TypeScript fans to language war enthusiasts chiming in. The announcement leads with impressive performance numbers and ambitious goals, though some intriguing details remain unexplored. We'll tackle those today.

Why today and not a week ago? Well, in Architecture Weekly, we don't want rushed clickbait, aye? I intentionally wanted to wait for the noise to calm down.

Beneath the headline figures lies a story worth unpacking about design choices, performance trade-offs, and the evolution of developer tools. Even if you're not interested in compilers, there are useful lessons here for your system's design, like:

Let's tackle that step by step, starting from the Microsoft article headline.

"A 10x Faster TypeScript" – Well, Not Exactly

First, let's clarify something that might be confusing in Microsoft's announcement title:

Is it really faster? Would your application written in TypeScript be 10x faster once MS releases the new code rewritten in Go?

What's actually getting faster is the TypeScript compiler, not the TypeScript language itself or JavaScript's runtime performance. Your TypeScript code will compile faster, but it won't suddenly execute 10x faster in the browser or Node.js. It's a bit like saying,

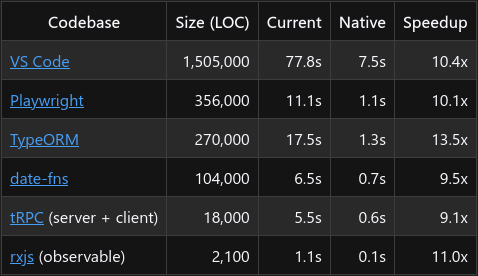

and then clarifying that they only made the manufacturing process faster: the car itself still drives at the same speed. It's still valuable, especially if you've been waiting months for your car, but not quite what the headline suggests.

Beyond the "10x Faster" Headline

Anders Hejlsberg's announcement showcased impressive numbers:

Such remarkable stats deserve a deeper look, as this 10x speedup has multiple contributing factors. It's not simply that "Go is faster than JavaScript".

Let's be honest: whenever you see a "10x faster" claim, you should view it with healthy scepticism. My first reaction was, "OK, what were they doing suboptimally before?" Because 10x improvements don't materialize from thin air. They typically indicate that something wasn't done well enough in the first place, be it the implementation or some design choices.

Understanding Node.js

There's a widespread belief that "Node.js is slow", which is more of a repeated stereotype than a truth. In some instances it can be true, but it doesn't hold as a general statement. If someone says "Node.js is slow", it's almost like saying that C and C++ are slow. Why?

The Architecture of Node.js

Node.js is built on Google's V8 JavaScript engine, the same high-performance engine that powers Chrome. V8 itself is written in C++, and Node.js essentially provides a runtime environment around it. This architecture is key to understanding Node.js performance:

When people talk about Node.js, they often don't realize they're talking about a system where critical operations are executed by highly optimized C/C++ code. Your JavaScript often just orchestrates calls to these native implementations.

Fun fact: Node.js has been among the fastest web server technologies available for many years. When it first appeared, it outperformed many traditional threaded web servers in benchmarks, especially for high-concurrency, low-computation workloads. This wasn't an accident; it was by design.

Memory-Bound vs. CPU-Bound

Node.js was specifically architected for web servers and networked applications, which are predominantly memory-bound and I/O-bound rather than CPU-bound.

Memory-bound operations involve moving data around, transforming it, or storing/retrieving it. Think of:

I/O-bound operations involve waiting for external systems:

For typical web applications, most of the time is spent waiting for these I/O operations to complete. A typical request flow might be:

In this workflow, actual CPU-intensive computation is minimal. Most web applications spend 80-90% of their time waiting for I/O operations to complete. Node.js was optimised precisely for this scenario because:
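To make that waiting-dominated profile concrete, here is a minimal TypeScript sketch. It assumes I/O can be stood in for by timers; `simulatedIo` and `handleAll` are hypothetical names made up for illustration, not Node.js APIs.

```typescript
// Hypothetical stand-in for a database call or HTTP request:
// nothing is computed, the promise just resolves after `ms` milliseconds.
function simulatedIo(ms: number): Promise<number> {
  return new Promise<number>((resolve) => setTimeout(() => resolve(ms), ms));
}

// Starts `count` simulated requests at once and reports the total elapsed time.
async function handleAll(count: number, waitMs: number): Promise<number> {
  const started = Date.now();
  const pending: Promise<number>[] = [];
  for (let i = 0; i < count; i++) {
    pending.push(simulatedIo(waitMs));
  }
  // One thread is enough here: while waiting, it has nothing to compute.
  await Promise.all(pending);
  return Date.now() - started;
}

handleAll(200, 20).then((elapsedMs) => {
  console.log(`200 waits of 20 ms each finished in about ${elapsedMs} ms`);
});
```

Handled one after another, those waits would add up to roughly 4 seconds. Overlapped on a single thread, they should finish in close to the time of one wait. That holds only because each task spends its time waiting rather than computing.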

Here's what many people miss: I/O-intensive operations in Node.js work at nearly C-level speeds. When you make a network request or read a file in Node.js, you're essentially calling C functions with a thin JavaScript wrapper.

This architecture made Node.js revolutionary for web servers. When properly used, a single Node.js process can efficiently handle thousands of concurrent connections, often outperforming thread-per-request models for typical web workloads.

It came essentially from the idea that multitasking, just like for humans, is not always the optimal way of handling work. Synchronising multiple tasks and context switching add overhead and don't always give better results. It all depends on whether a task can be split into smaller pieces that can be done concurrently.

Where Node.js Faces Challenges: CPU-Bound Operations

The real performance challenges for Node.js are CPU-intensive tasks, like (welcome again!) compiling TypeScript. These workloads have fundamentally different characteristics from the web server scenarios for which Node.js was optimized.

CPU-bound operations involve heavy computation with minimal waiting:

In these scenarios, the bottleneck isn't waiting for external systems. It's raw computational power and how efficiently the runtime can execute algorithms.

The Single-Threaded Limitation

JavaScript was designed with a single-threaded event loop model. This model works best for handling concurrent I/O (where most time is spent waiting) but becomes problematic for CPU-intensive operations:

When a CPU-intensive task runs, it monopolizes this single thread. During that time, Node.js can't process other events, handle new requests, or even respond to existing ones. It's effectively blocked until the computation completes.

This is why running a complex algorithm in Node.js can make your entire web server unresponsive: the event loop is busy with computation and can't handle incoming requests.

The Event Loop Dance

Writing efficient CPU-intensive code in JavaScript requires understanding and respecting the event loop. The code must be structured to yield control periodically, allowing other operations to proceed:

This "chunking" approach works but introduces complexity and fundamentally changes how you structure your code. It's a very different programming model from languages with native threading, where you can write straightforward CPU-intensive code without worrying about blocking other operations.

For a complex application like the TypeScript compiler, this dance with the event loop becomes increasingly difficult to manage as the codebase grows.

Compilers: The CPU-Intensive Beast

A compiler is practically the poster child for CPU-intensive workloads. It needs to:

These operations involve complex algorithms, large memory structures, and lots of computation: exactly the kind of work that challenges JavaScript's execution model.

For TypeScript specifically, as the language grew more complex and powerful over the years, the compiler had to handle increasingly sophisticated type checking, inference, and code generation. This progression naturally pushed against the limits of what's efficient in a JavaScript runtime.

Threading Models Matter: Event Loop vs. Native Concurrency

The performance gap between the JavaScript and Go implementations isn't just about raw language speed. It's fundamentally about threading models and how they match the problem domain.

As mentioned, Node.js operates on an event loop model:

This single-threaded approach means that for CPU-intensive work like compiling TypeScript, you need to write code that doesn't monopolize the thread. In practice, this involves breaking work into smaller chunks that can yield control back to the event loop. For a compiler, this creates significant design challenges:
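The chunking mentioned above can be sketched roughly like this. It is a minimal illustration, not how the TypeScript compiler is written: `transform`, `yieldToEventLoop`, and `processInChunks` are hypothetical names, and yielding through a zero-delay timer is just one simple choice.

```typescript
// Hypothetical stand-in for CPU-heavy work on a single item.
function transform(n: number): number {
  let acc = 0;
  for (let i = 0; i < 10000; i++) {
    acc = (acc + n * i) % 1000003;
  }
  return acc;
}

// Hands control back to the event loop so queued timers and I/O callbacks can run.
function yieldToEventLoop(): Promise<void> {
  return new Promise<void>((resolve) => setTimeout(resolve, 0));
}

// Processes `items` in slices of `chunkSize`, yielding after each slice.
async function processInChunks(items: number[], chunkSize: number): Promise<number[]> {
  const results: number[] = [];
  for (let start = 0; start < items.length; start += chunkSize) {
    for (const item of items.slice(start, start + chunkSize)) {
      results.push(transform(item));
    }
    await yieldToEventLoop();
  }
  return results;
}

const workItems: number[] = Array.from({ length: 1000 }, (_, i) => i);
setTimeout(() => console.log("timer ran while work was still in progress"), 0);
processInChunks(workItems, 100).then((results) => {
  console.log(`processed ${results.length} items`);
});
```

Note how the plain loop had to become an `async` function and every caller now has to deal with a promise. That ripple effect is the structural cost the text refers to.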

Go: Native Concurrency with Goroutines

Go, by contrast, offers goroutines, lightweight threads managed by the Go runtime:

This model allows compiler phases to be naturally parallelized with minimal coordination overhead:

In this model, file parsing, type checking, and code generation can all happen concurrently without explicit yield points. The code structure can more naturally follow the logical flow of compiler phases.

Same Code, Different Execution Model

Anders Hejlsberg said they evaluated multiple languages, and Go was the easiest to port the code base into. The TypeScript team has apparently created a tool that generates Go code, resulting in a port that's nearly line-for-line equivalent in many places. That might lead you to think the code is "doing the same thing," but that's a misconception.

The code may look the same but can behave very differently across languages due to their execution models.

In JavaScript:
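A minimal sketch of the JavaScript side, written as TypeScript. It is not the compiler's actual code: `checkFile` and `checkAll` are hypothetical stand-ins for per-file compiler work, and the file sizes are made up.

```typescript
// Hypothetical stand-in for type-checking one file: pure computation, no I/O.
function checkFile(size: number): number {
  let acc = 0;
  for (let i = 0; i < size; i++) {
    acc = (acc + i) % 1000003;
  }
  return acc;
}

// Straightforward loop over files: each one is processed in turn
// on the single JavaScript thread.
function checkAll(fileSizes: number[]): number[] {
  return fileSizes.map(checkFile);
}

const observed = { timerFired: false };
setTimeout(() => {
  observed.timerFired = true;
}, 0);

const checked = checkAll([200000, 200000, 200000]);
console.log(`checked ${checked.length} files, timer fired so far: ${observed.timerFired}`);
// prints "checked 3 files, timer fired so far: false"
```

The timer callback was due immediately, yet it cannot run until `checkAll` returns, because both share the one JavaScript thread.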

In Go:

When you port code from JavaScript to Go without changing its structure, you implicitly change how it executes. Operations that would block the event loop in JavaScript can run concurrently in Go with minimal effort.

This leads to a fascinating insight: when a direct port yields dramatic performance improvements, it might indicate that the original implementation wasn't fully optimized for JavaScript's execution model. Writing truly performant JavaScript means embracing its asynchronous nature and event loop constraints, something that becomes increasingly challenging in complex codebases like a compiler.

The Evolution Problem

When TypeScript started in 2012, the team made reasonable technology choices based on the context at that time:

Over time, TypeScript evolved from a relatively simple superset of JavaScript into a sophisticated language with advanced type features, generics, conditional types, and more. The compiler grew accordingly but remained built on foundations designed for a simpler problem.

This is a classic example of how successful software often faces scaling challenges that weren't anticipated in its early design. As the TypeScript compiler became more complex and was applied to larger codebases, its JavaScript foundations became increasingly restrictive.

Don't you know that from your own experience? How is legacy code created? Day by day.

Outstanding Questions and Future Considerations

While the performance improvements from the Go migration are impressive, several important questions haven't been fully addressed in Microsoft's announcement.

What About Browser Support?

TypeScript doesn't just run on servers and development machines; it's also used directly in browsers via various playground implementations and in-browser IDEs. How will Microsoft address this use case, since Go doesn't run natively in browsers? There are a few potential approaches:

The announcement doesn't clarify their approach, and it's an important detail that will affect the TypeScript ecosystem.

Feature Parity vs. Performance Trade-offs

Achieving performance improvements often involves trade-offs. One common approach is to reduce feature scope or complexity. While Microsoft claims they're maintaining full feature parity, we should watch carefully to see if any subtle behaviours change or if certain edge cases are handled differently. Historical examples from other language migrations show that 100% identical behaviour is challenging to achieve. Some questions to consider:

The TypeScript team has a strong track record of backward compatibility, but a ground-up rewrite inherently carries risks of subtle behavioural changes.

Extensibility and Plugin Ecosystem

TypeScript has a rich ecosystem of plugins and tools that extend the compiler. The migration to Go raises questions about the future of this ecosystem:

These considerations will affect the broader TypeScript ecosystem beyond just compilation performance.

Why This Matters Beyond TypeScript

This case study has broader implications for technology choices:

Looking Forward

As a TypeScript user building Emmett and Pongo, I see this as good news. If I can get the compiler working faster "for free", that's sweet. But on the other hand, I don't see why we need to make such noise and use clickbait as a way of bringing that to the development community. Focusing on 10x without giving enough context just created friction (I mercifully skipped C# developers' cries of "why not C#?!").

That's why I wanted to expand on this use case, as it offers valuable lessons about technology, language choices, performance optimization, and the evolution of successful projects.

The move from JavaScript to Go shouldn't be taken as a confirmation that "Node.js is slow". It's better to look at it as a recognition that different problems call for different tools. JavaScript and Node.js continue to be good at what they were designed for: I/O-intensive web applications with high concurrency needs.

Of course, it'd be better if Microsoft explained that in more detail, rather than making a clickbait claim, but this is the world we live in.

What do you think? Have you faced similar technology evolution challenges in your projects? How did you tackle them? Did you ever get a surprising 10x improvement? How did you deal with that?

I'd love to hear your thoughts in the comments!

Cheers!

Oskar

p.s. Ukraine is still under brutal Russian invasion. A lot of Ukrainian people are hurt, without shelter and need help. You can help in various ways, for instance, directly helping refugees, spreading awareness, and putting pressure on your local government or companies. You can also support Ukraine by donating, e.g. to the Ukraine humanitarian organisation, Ambulances for Ukraine or Red Cross.
