🔹◽️Edge#162: EleutherAI’s GPT-NeoX-20B, one of the largest open-source language models

This is an example of TheSequence Edge, a Premium newsletter that our subscribers receive every Tuesday and Thursday. On Thursdays, we dive deep into one of the freshest research papers or technology frameworks worth your attention.

💥 What's New in AI: EleutherAI's GPT-NeoX-20B, one of the largest open-source language models

We've seen an accelerating race to build massive transformer models: from GPT-2 with 1.5 billion parameters, to Microsoft's Turing NLG with 17 billion, to GPT-3 with 175 billion, to Google's Switch Transformer with 1.6 trillion, and now Wu Dao 2.0 with a mind-blowing 1.75 trillion. Most of these models are not open-source and are difficult to access fully. Just yesterday, in an effort to democratize access to large language models, EleutherAI, in partnership with CoreWeave, released GPT-NeoX-20B, a 20-billion-parameter open-source language model. For those who want to serve GPT-NeoX-20B without managing infrastructure, CoreWeave has partnered with Anlatan, the creators of NovelAI, to create GooseAI, a fully managed inference service delivered by API. Let's dive deeper into the practical implementation of GPT-NeoX-20B and GooseAI.

The History

The research collective EleutherAI was founded in 2020 by Connor Leahy, Sid Black, and Leo Gao with the goal of keeping large language models widely accessible, to aid research into the safe use of AI systems. By comparison, the well-known GPT-3 is still closed to the public at large and is prohibitively expensive to train; both are significant hurdles for researchers who want to study and use it and for businesses building products on top of it. From the beginning, EleutherAI has been deeply committed to tackling AI safety while making large language models more accessible.

In 2021, CoreWeave built a state-of-the-art NVIDIA A100 cluster for distributed training, and it partnered with EleutherAI to train GPT-NeoX-20B on this cluster.

GPT-NeoX-20B

With its beta release on GooseAI on February 2nd, GPT-NeoX-20B is, according to EleutherAI, the largest publicly accessible language model available. At 20 billion parameters, GPT-NeoX-20B is a powerhouse trained on The Pile, EleutherAI's curated collection of datasets. When EleutherAI developed The Pile, an 825 GB dataset, no public datasets suitable for training language models of this size existed. The Pile is now widely used to train many current cutting-edge models, including the Beijing Academy of Artificial Intelligence's Wu Dao (1.75T parameters, multimodal), AI21's Jurassic-1 (178B parameters), Anthropic's language assistant (52B parameters), and Microsoft and NVIDIA's Megatron-Turing NLG (530B parameters). In short, GPT-NeoX-20B is more accessible to developers, researchers, and tech founders because it is fully open-source and less expensive to serve than similar models of its size and quality.
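To put the serving-cost point into rough numbers, the sketch below estimates how much memory the model weights alone occupy at 16-bit precision (2 bytes per parameter), using the nominal parameter counts quoted in this article. This is a back-of-the-envelope assumption, not a figure published by EleutherAI: it ignores activations, caching, and framework overhead, and the names `weight_memory_gb` and `MODELS` are illustrative only.

```python
# Back-of-the-envelope estimate: memory needed just to hold model weights.
# ASSUMPTION: 16-bit weights (2 bytes per parameter); activations, caches,
# and framework overhead are ignored, so these numbers are lower bounds.
BYTES_PER_PARAM_FP16 = 2

# Nominal parameter counts as quoted in this article (illustrative table).
MODELS = {
    "GPT-2": 1.5e9,
    "Turing NLG": 17e9,
    "GPT-NeoX-20B": 20e9,
    "GPT-3": 175e9,
}


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Return the storage needed for the weights, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, params in MODELS.items():
        print(f"{name:>14}: ~{weight_memory_gb(params):,.0f} GB of 16-bit weights")
```

Under these assumptions, GPT-NeoX-20B needs roughly 40 GB just to hold its weights, versus roughly 350 GB for a 175-billion-parameter model like GPT-3, which is one intuitive reason a 20B model is cheaper to host.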

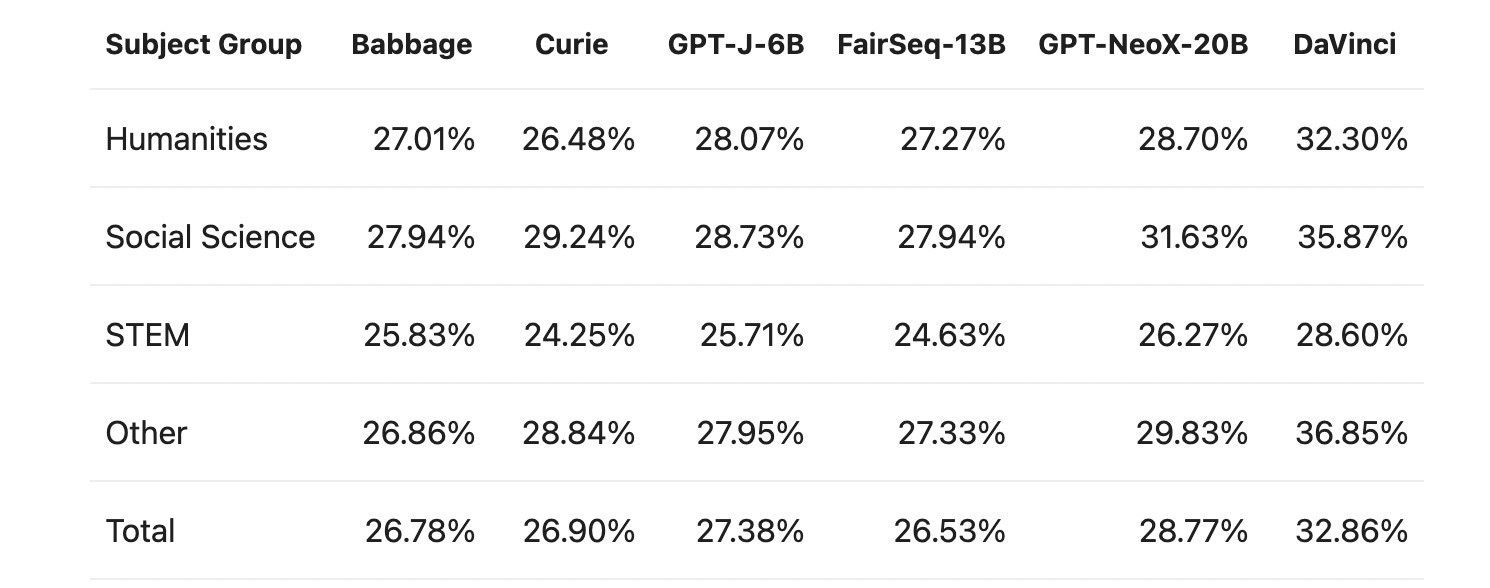

Performance Comparison by Model

The following table compares GPT-NeoX-20B's performance with that of other publicly available NLP models at answering factual questions across a variety of domains. GPT-NeoX-20B outperforms its peers by a statistically significant margin:

Another interesting comparison is factual knowledge by subject group:

GPT-NeoX-20B on GooseAI

Ahead of the model's full public release on February 9th, GooseAI is offering a week-long beta of EleutherAI's GPT-NeoX-20B. GooseAI is a fully managed inference service delivered by API, so anyone who wants to serve GPT-NeoX-20B without managing infrastructure can start using the model through a managed API endpoint today, before the official release. With feature parity with other well-known APIs, GooseAI offers a plug-and-play solution for serving open-source language models at over 70% cost savings, and switching requires changing just one line of code, helping to unlock everything GPT-NeoX-20B has to offer. GooseAI delivers the advantages of CoreWeave Cloud with zero infrastructure overhead, including fast spin-up times and responsive auto-scaling.
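The "one line of code" claim refers to GooseAI's compatibility with existing, OpenAI-style completion APIs. The sketch below shows one plausible reading of that idea using only the Python standard library: the request-building code stays the same and only the base URL changes. The base URLs, the `/engines/{engine}/completions` path, and the engine name `gpt-neo-20b` are assumptions for illustration, not details taken from this article, and `build_completion_request` is a hypothetical helper. The request is built but never sent.

```python
import json
import urllib.request

# ASSUMPTION: illustrative values, not confirmed by this article.
# The "one-line change": point an existing OpenAI-style client at GooseAI.
BASE_URL = "https://api.goose.ai/v1"  # was: "https://api.openai.com/v1"
ENGINE_ID = "gpt-neo-20b"             # hypothetical engine name for GPT-NeoX-20B


def build_completion_request(base_url: str, engine: str, api_key: str,
                             prompt: str, max_tokens: int = 32) -> urllib.request.Request:
    """Build (but do not send) a POST request to an OpenAI-style
    /engines/{engine}/completions endpoint (hypothetical helper)."""
    body = json.dumps({"prompt": prompt, "max_tokens": max_tokens}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/engines/{engine}/completions",
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Everything except BASE_URL is unchanged from the original integration.
req = build_completion_request(BASE_URL, ENGINE_ID, "YOUR_API_KEY", "EleutherAI is")
print(req.get_method(), req.full_url)
# prints: POST https://api.goose.ai/v1/engines/gpt-neo-20b/completions

# Actually sending it would require a valid key and network access:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))
```

Keeping the endpoint in a single constant is what makes the switch a one-line edit; in practice an official client library would usually expose the same setting as a configurable base URL.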

Conclusion

GPT-NeoX-20B offers a glimpse of what the next generation of powerful AI systems could look like, and EleutherAI is working to remove the current barriers to research on understanding such models and making them safe. During the preview period, from February 2nd through February 8th, you can join the community of GPT-NeoX-20B developers on GooseAI and serve the model ahead of its full open-source release on February 9th.
