📝 Guest post: You are probably doing MLOps at a reasonable scale. Embrace it*

In TheSequence Guest Post, our partners explain what ML and AI challenges they help deal with. In this article, neptune.ai discusses hyperscale and reasonable scale companies and how their needs for ML tools differ.

You are probably doing MLOps at a reasonable scale. Embrace it.

Solving the right problem and creating a working model, while still crucial, is no longer enough. At more and more companies, ML needs to be deployed to production to show “real value for the business”. Otherwise, your managers or managers of your managers will start asking questions about the “ROI of our AI investment”. And that means trouble.

The good thing is, many teams, large and small, are past that point, and their models are doing something valuable for the business. The question becomes: How do you actually deploy, maintain and operate those models in production? The answer seems to be MLOps. But what does it mean to have MLOps set up?

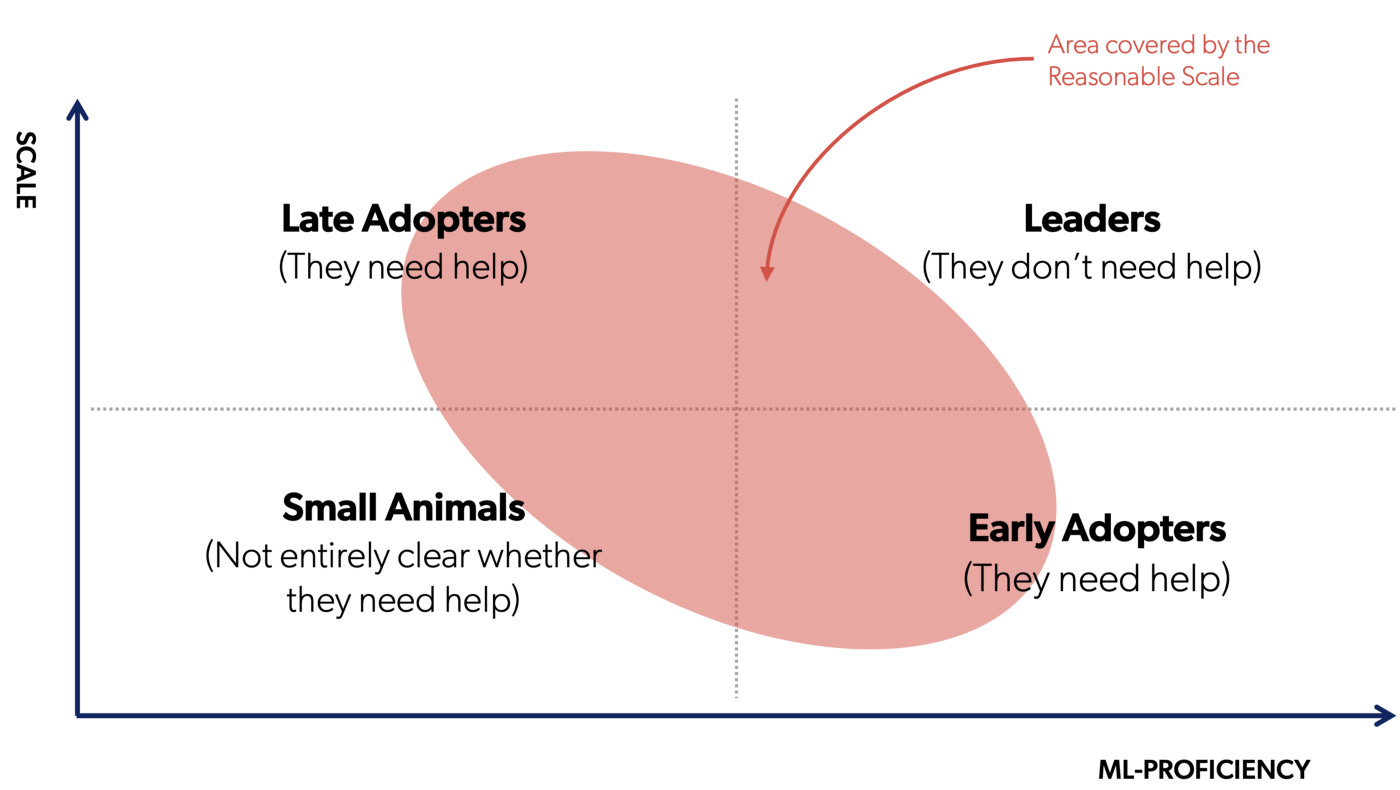

But do you? Do you really need those things, or is it just a “standard industry best practice”?

Most of the good blog posts, whitepapers, conference talks, and tools are created by people from super-advanced, hyperscale companies: companies like Google, Uber, and Airbnb, which have hundreds of people working on ML problems that serve trillions of requests a month. That means most of the best practices you find are naturally biased toward hyperscale.

But 99% of companies are not doing production ML at hyperscale. Most companies are either not doing any production ML yet or do it at a reasonable scale, a term coined last year by Jacopo Tagliabue. Reasonable scale as in five ML people, ten models, millions of requests. Demanding, but nothing crazy and nothing hyperscale.

Ok, so the best practices are biased toward hyperscale. What is wrong with that? The problem starts when a reasonable scale team goes with the “standard industry best practice” and tries to build or buy a full-blown, hyperscale MLOps system.

Hyperscale companies need everything. Reasonable scale companies need to solve their most important current challenges. They need to be smart and pragmatic about what they need right now.

The tricky part is telling your actual needs apart from potential, nice-to-have, future needs. With so many blog articles and conference talks out there, that is hard. Once you are clear about your reality, you are halfway there. And there are examples of pragmatic companies achieving great results by embracing the limitations of reasonable scale MLOps:

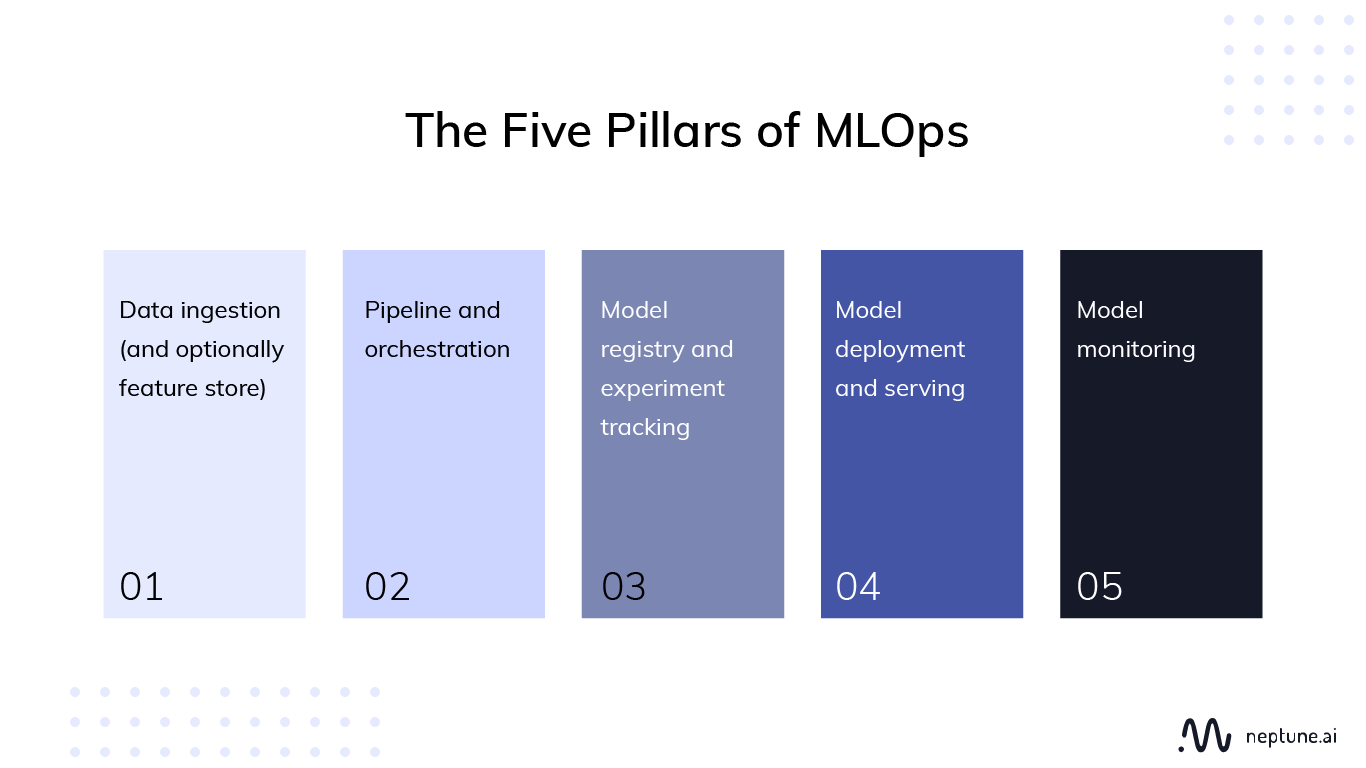

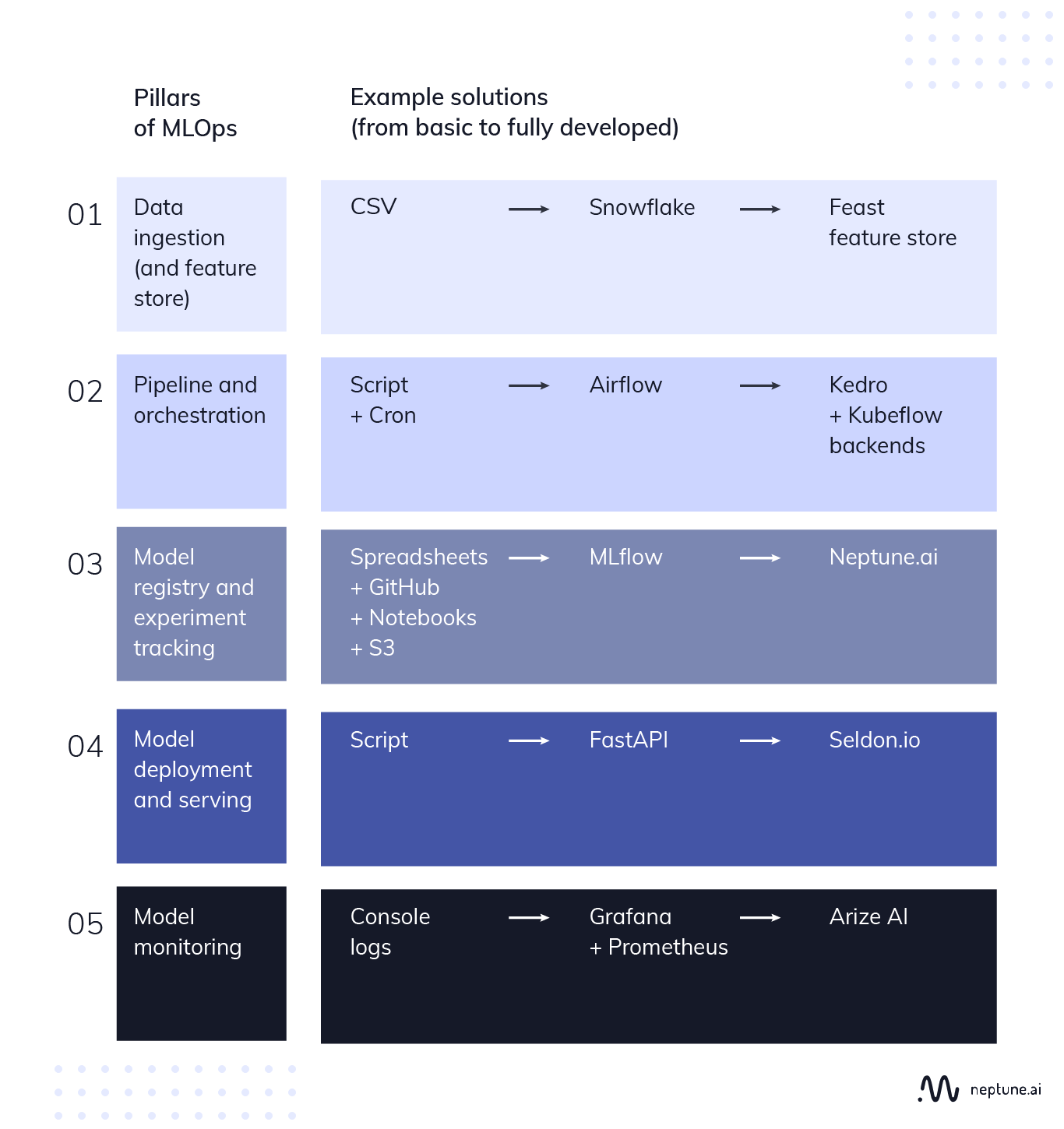

You have probably never heard of them, but their problems and solutions are a lot closer to your use case than that Netflix blog post or Google whitepaper you have open in the other tab.

Ok, so say you want to do it right. What do you do? One thing that is clear(ish) is that there are five main pillars of MLOps that you need to implement somehow:

Each of those can be solved with a simple script or a full-blown solution depending on your needs. The decision boils down to whether you want:

The answer, as always, is “it depends”.

Some teams have a fairly standard ML use case and decide to buy an end-to-end ML platform. The problem is that the further you go from the standard use case, the harder it gets to adjust the platform to your workflow. Everything looks simple and standard at the beginning. Then business needs change, requirements change, and it is not so simple anymore.

And then there is the pricing discussion. Can you justify spending “this much” on an end-to-end enterprise solution when all you really need is 3 out of 10 components? Sometimes you can, and sometimes you cannot.

Because of all that, many teams stay away from end-to-end platforms and decide to build a canonical MLOps stack from point solutions, each of which solves one part very well. Some of those solutions are in-house tools, some are open-source, and some are third-party SaaS or on-prem tools. Depending on their use case, teams may rely on something as basic as bash scripts for most of their ML operations and bring in something more advanced for the one area where they need it. For example:

By pragmatically focusing on the problems you actually have right now, you don’t overengineer solutions for the future. You put the limited resources that you, as a team doing ML at a reasonable scale, have into things that make a difference for your team and business.

Where are we as neptune.ai in all this? We really believe in this pragmatic, reasonable scale MLOps approach. It starts with the content we create, which focuses on ML practitioners from reasonable scale companies solving their real-life problems, and it goes all the way to the product we built. Regardless of how you solve the other components of your MLOps stack, we want you to use neptune to deal with experiment tracking and model registry problems. For example:

Great, neptune will work for you as you grow and change other components of your MLOps stack. That is, of course, if you actually need an experiment tracking tool today. If you can live with spreadsheets or git, go for it. Be pragmatic :)

*This post was written by the neptune.ai team. We thank neptune.ai for their ongoing support of TheSequence.
