Mostly Skeptical Thoughts On The Chatbot Propaganda Apocalypse

People worry about chatbot propaganda. The simplest concern is that you could make chatbots write disinformation at scale. This has created a cottage industry of AI Trust And Safety people making sure their chatbot will never write arguments against COVID vaccines under any circumstances, and a secondary industry of journalists writing stories about how they overcame these safeguards and made the chatbots write arguments against COVID vaccines.

But Alex Berenson already writes arguments against COVID vaccines. He’s very good at it, much better than I expect chatbots to be for many years. Most people either haven’t read his arguments, or have incidentally come across one or two things from his years-long corpus. The limiting factor on your exposure to arguments against COVID vaccines isn’t the existence of arguments against COVID vaccines. It’s the degree to which the combination of the media’s coverage decisions and your viewing habits causes you to see those arguments. A million mechanical Berensons churning out a million times the output wouldn’t affect that; even one Berenson already churns out more than most people ever read.

Could a million mechanical disinformers do somewhat better than one? There’s room for tailored arguments: an anti-vax argument that appeals to liberals, one that appeals to conservatives, one that appeals to parents, one that appeals to the elderly, salami-slicing demographics like any other advertiser would. But the returns diminish pretty quickly here. And there are already many people writing targeted versions: Berenson, Marjorie Taylor Greene, and RFK Jr. already post unique anti-vaccine content tailored to different audiences. Once you have ten people writing differently-pitched arguments on a topic, do another hundred add very much?

So even granting that disinformation is very bad, I can’t bring myself to get too worried about chatbots writing it. But some people have more sophisticated concerns.
Philosophy Bear discusses a broader chatbot propaganda apocalypse. I’m basing the rest of this post on the way I think about his argument, which might not be exactly the same as his actual argument; any errors in reasoning here are mine, not his. I think of it as separating into two scenarios:

Medium Bad Scenario: Chatbots will show up in your Twitter replies and DMs, posing as friendly people trying to inform you of the dangers of COVID vaccines. If you bite, they’ll hold your hand as they walk you through anti-vaccine arguments, answering your questions and responding to your objections. Not only does this mean the disinformation will come to you (instead of you having to go to it), but it will directly target the weaknesses in your arguments and the places you’re most uncertain.

Very Bad Scenario: Half of your online friends (the people you’ve known for months or years, the people whose Twitter and Instagram accounts you follow and trust) are secretly propagandabots, designed by some company or movement to catch your interest. Most of their content will be really good. But every so often they’ll drop in a reference to how their grandmother got a COVID vaccine and died instantly, and the ordinary social reasoning we use to figure out what our friends and role models think will be hopelessly poisoned.

Bear is a leftie, and thinks about this through a class-based lens:

…but it’s easy to see how anyone of any political stripe would have cause for concern. I worry about this more than I worry about Berenson-bot, but I don’t feel the same kind of panic Philosophy Bear does. Overall I think it will happen to a very limited degree or not at all:

1: There Are Already Plenty Of Social Anti-Bot Filters

In a sense, I’m living in the chatbot apocalypse already. As a famous blogger, I live in a world where hordes of people with mediocre arguing skills try to fake shallow friendships with me to convince me to support things. But I can almost completely block it out. I do sometimes engage, but mostly with people I know personally, or through some kind of vague community social proof, or because I’ve appreciated other original and interesting things someone has said. Other famous people have set their social media to only allow replies from people they follow, or from other bluechecks, or they use some other screening method that mostly works for them. I don’t think famous people get convinced of weird stuff more often than the rest of us, even though they labor under this disadvantage.

Even non-famous people seem to have sorted into insular social media communities. The best websites (hopefully including the ACX comments section) feel more like a village, where people know each other at least a little. These groups can develop arcane jargons that make them impenetrable to outsiders, and standards of discourse that off-the-shelf chatbots probably can’t replicate. “Hordes of annoying people want to debate you on social media without providing real value” is a problem people have been dealing with since long before GPT, and they have often found good solutions.

Maybe this is too glib. I do sometimes see people respond to random bad-faith objections in their Twitter replies. But these people are already in Hell. I don’t know how chatbots can add to or subtract from their suffering.
Still, this is a hole in my mental map now, and maybe a place where chatbots can cause mischief.

2: …And Technological Anti-Bot Filters

I’m not sure how long CAPTCHAs will work for. It wouldn’t surprise me if they kept working for a while: even if some advanced AI somewhere can defeat all possible CAPTCHAs, propaganda-botnet owners might not be able to deploy it at scale. Still, we should be prepared for a world where they’re not enough.

Sites could ask for proof of humanity. I don’t know how this will work in the future: driver’s licenses can be faked, videos can be spoofed. Worst case scenario, I think megacorporations like Google and Facebook could offer this as a service: so-and-so has a Gmail account or Facebook page and has gotten lots of normal-looking messages over many years. If nothing else works, sites could verify through payment, something like Twitter Blue. Propagandabot owners could pay for one account, but not for separate accounts for each of the thousands of people they want to reach. And if one account started messaging thousands of people, this would be suspicious activity that moderators could detect and remove.

The argument isn’t that these particular solutions would definitely work. It’s that once we approach the point where, in Philosophy Bear’s words, you should “log off and start forming connections and organizations”, social media corporations will have strong incentives to take draconian anti-bot measures, and users will have strong incentives to accept them even if they’re annoying. Since these measures don’t seem impossible in principle, they’ll probably happen. I’m more worried about corporations and governments using bots as an excuse to strip users of privacy than I am about there being no privacy-stripping solution that maintains a usable online environment.

3: Fear Of Backlash Will Limit Adoption

In order to be dangerous, chatbots will need to build close personal relationships with you, then exploit them to change your opinions.
But if I learned that my Internet friend who I’d talked to every day for a year was actually a chatbot run by Pepsi trying to make me buy more Pepsi products, I would never buy another can of Pepsi in my life. It would be less reasonable to do this for e.g. a pro-communism bot: communism is a large and diverse movement, and just because some pro-communism AI hacker group happens to be terrible doesn’t mean the workers shouldn’t control the means of production. But good luck hoping people will be able to put aside their anger and betrayal and appreciate this.

Israel has a program called Hasbara where they get volunteers to support Israel in Internet comment sections. I know this because every time someone is pro-Israel in an Internet comment section, other people accuse them of being a Hasbara stooge. I don’t know if this program has produced enough value for Israel to justify the backlash.

Don’t get me wrong: I think companies, PR firms, and governments will have chatbots that push their messages. But I think they might shy away from the full strategy of trying to become your friend under false pretenses.

4: Propagandabots Spreading Disinformation Is Probably The Opposite Of What You Should Worry About

News articles about this problem always start with the same scenario: what if someone made a propagandabot to spread COVID disinformation, i.e. the most unpopular topic in the world, which every government and social media corporation and AI corporation is itching to censor?

Here’s a crazy thought: maybe the side that has 10x more people on it and 100x more support from the relevant actors is the one that will win the race to use this technology. You can already get an off-the-shelf chatbot that mouths liberal pieties; I’m not sure there are any that are much good at disinformation production. The liberal-piety-bots will be on version 10.0 before the first jailbroken disinformation-bot comes out.
So the establishment has a big propagandabot advantage even before the social media censors ban disinfo-bots while coming up with some peaceful-coexistence solution for establishment-bots. So there’s a strong argument (which I’ve never seen anyone make) that the biggest threat from propaganda bots isn’t the spread of disinformation, but their use as a force multiplier for locking in establishment narratives and drowning out dissent. If this doesn’t worry you in the US, at least let it worry you in China, whose government already hires people to parrot propaganda at people on social media.

Maybe only disinformation-spreaders would be evil enough to use the bad kind of propaganda-bot, the one that pretends to be your friend? I admit this is possible. But the quantity of spam text messages I have gotten from Nancy Pelosi, ever since making the terrible mistake of donating to a candidate on ActBlue one single solitary time, makes me skeptical of claims about the establishment’s unwillingness to use scummy annoying propaganda techniques.

5: Realistically This Will All Be Crypto Scams

Sorry, I just thought of this one, but it’s obviously true. All of this talk of politics and propaganda and misinformation and censorship ignores the fact that the most likely main use of any new chatbot technology will be the same as that of existing spambots, which is promoting crypto scams.

In fact, political propaganda is one of the worst subjects to use bots for. On the really big debates (communism vs. capitalism, woke vs. anti-woke, mRNA vs. ivermectin) people rarely change their minds, even under pressure from friends. But those are the kinds of debates people enjoy having, the ones that define the sphere of Internet Debate. Much more promising for chatbot owners is promoting some Ponzi scheme, or making people have slightly more positive opinions towards some corporation that hired a good PR agency.

Bots will crowd out other bots.
If they’re trying the “invest a lot of effort into becoming your friend” strategy, the average person can only have so many online friends, and some of those will be non-bots. Even if the bots are becoming influencers, the average person only reads so many. If someone has room for five bot friends and twenty bot influencers, probably most of those will go to non-political topics. Honestly it’s cute that we nerds leap to thinking about political propaganda as the first thing we would use an evil chatbot for.

Also, realistically the bots will all be hot women, and anyone who isn’t a hot woman will be safe and confirmed human. “But wouldn’t they use some bots who aren’t hot women, to trick people who have already learned this heuristic?” You might think so, but you might also think that the spam fake Facebook friend requests I get would try this, and they never do.

6: I Do Think This Might Decrease Serendipitous Friendship, Though

One time a hot woman messaged me on Facebook saying she wanted to be my friend. I almost blocked her (obvious spam, right?), but she was able to say “. . . because I read your blog” before I hit the block button. I figured this was beyond a spambot’s ability, so we kept talking and had a good conversation. (Obviously this story should end with “. . . and now we’re married”, but it’s not that good an anecdote and I met my wife in a much less dramatic way, sorry.)

If chatbots get good, I expect to block more people in those kinds of situations, even when they do say things like “I read your blog”. I expect to be more reluctant to open new conversations, start new friendships with people I only know through a name and profile picture, and debate people I don’t know in comments sections.

This might not be a strong effect. This will be something people don’t like, and both normal human social organization instincts and social media corporate giants will try to find ways around it.
But it might make the difference in some marginal cases, like the one above.

7: You Can Solve For The Equilibrium

How seriously should we take these comics? The worse chatbots are (compared to humans) as friends, influencers, and debate partners, the less we have to worry about. But the better chatbots are as friends, influencers, and debate partners, the more upside there could be. I don’t want to speculate on exactly how this would work: it gets too close to the original idea of the Singularity in the sense of “a point where crazy things are happening so fast it’s not worth trying to predict”.

Conclusion And Predictions

I’m nervous writing this, because I remember the halcyon days of the early 2000s, when we all assumed the Internet would be a force for reason and enlightenment. Surely if everyone were just allowed to debate everyone else, without intervening barriers of race or class or religion, the best arguments would rise to the top and we would enter a new utopia of universal agreement. The scale at which this project failed makes me reluctant to ever speculate again about anything regarding online discourse going well. Maybe in the 2030s, the idea that propagandabots would be either easily dispatched, or else model netizens writing good content, will seem just as naive as the early 2000s vision.

And the chatbot propaganda apocalypse is a popular thing to believe in without any clear definition, and there will surely be some celebrated cases of chatbots causing mischief, so I’m setting myself up to fail here by the standards I mentioned in Nostradamus to Fukuyama. Still, I do want to go on record as doubting the strongest form of this thesis.

As for predictions:

You're currently a free subscriber to Astral Codex Ten.

Older messages

Book Review Contest Rules 2023

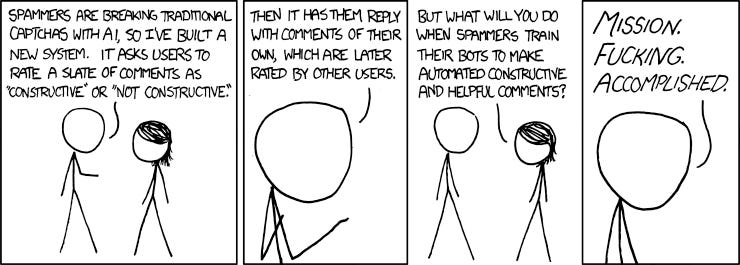

Thursday, February 2, 2023

...

Response To Alexandros Contra Me On Ivermectin

Wednesday, February 1, 2023

...

Mantic Monday 1/30/2023

Tuesday, January 31, 2023

One million Metaculi, fake stocks, scandal markets again

Open Thread 261

Monday, January 30, 2023

...

Janus' Simulators

Thursday, January 26, 2023

This post isn't about AI, but bear with me

You Might Also Like

Bank Beliefs

Monday, March 10, 2025

Writing of lasting value Bank Beliefs By Caroline Crampton • 10 Mar 2025 View in browser View in browser Two Americas, A Bank Branch, $50000 Cash Patrick McKenzie | Bits About Money | 5th March 2025

Dismantling the Department of Education.

Monday, March 10, 2025

Plus, can someone pardoned of a crime plead the Fifth? Dismantling the Department of Education. Plus, can someone pardoned of a crime plead the Fifth? By Isaac Saul • 10 Mar 2025 View in browser View

Vote now for the winners of the Inbox Awards!

Monday, March 10, 2025

We've picked 18 finalists. Now you choose the winners. ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏ ͏

⚡️ ‘The Electric State’ Is Better Than You Think

Monday, March 10, 2025

Plus: The outspoken rebel of couch co-op games is at it again. Inverse Daily Ready Player One meets the MCU in this Russo Brothers Netflix saga. Netflix Review Netflix's Risky New Sci-Fi Movie Is

Courts order Trump to pay USAID − will he listen?

Monday, March 10, 2025

+ a nation of homebodies

Redfin to be acquired by Rocket Companies in $1.75B deal

Monday, March 10, 2025

Breaking News from GeekWire GeekWire.com | View in browser Rocket Companies agreed to acquire Seattle-based Redfin in a $1.75 billion deal that will bring together the nation's largest mortgage

Musk Has Triggered A Corporate Deregulation Bomb

Monday, March 10, 2025

A Delaware bill would award Elon Musk $56 billion, shield corporate executives from liability, and strip away voting power from shareholders. Forward this email to others so they can sign up “

☕ Can’t stop, won’t stop

Monday, March 10, 2025

Why DeepSeek hasn't slowed Nvidia's roll. March 10, 2025 View Online | Sign Up Tech Brew Presented By Notion It's Monday. So much is happening all the time, so you'd be forgiven for

Trump's war on the First Amendment

Monday, March 10, 2025

Plus: Giant white houses everywhere, a woman in chains, and love. View this email in your browser March 10, 2025 Trump, in a navy suit and red tie, is seen from the shoulders up. His mouth is open in

Veterans Administration therapists forced to provide mental health counseling in open cubicles

Monday, March 10, 2025

As part of the Trump administration's frenzied push to end remote work arrangements for federal government workers, the Veterans Administration (VA) is forcing therapists to provide mental health