📝 Guest Post: Stop Hallucinations From Hurting your LLM Powered Apps*

Large language model (LLM) hallucinations pose a major threat to the successful adoption of the new wave of LLM apps. In this post, the Galileo team dives into how one can prevent hallucinations from creeping in, as well as some metrics developed by the researchers at Galileo to quantify potential LLM hallucinations. They also introduce free access to the Galileo LLM Studio, powered by research-backed mechanisms to combat LLM hallucinations.

Leveraging large language models to build useful and interactive products has never been easier. This has sparked a new wave of ‘LLM developers’ who combine LLMs (open source or closed source), frameworks such as LangChain, LlamaIndex and LangFlow, and perhaps a vector database to quickly spin up an LLM-powered product. A large number of startups, mid-market businesses, and large enterprises have been racing in this direction.

Given this, it becomes increasingly important to be mindful of a huge bottleneck in LLM adoption: hallucinations, that is, models being overconfidently incorrect in their predictions. We have already seen this crop up in Google’s Bard launch, Microsoft’s launch of the Tay chatbot a few years ago, and numerous similar examples recently. This poses not just a reputational risk to the business, but also a societal risk, with disinformation spreading and biased viewpoints proliferating.

What are LLM hallucinations?

LLMs hallucinate when their predictions are based on insufficient or inaccurate training data. For instance, GPT-4 was trained on data up to September 2021. When prompted with questions about events after that date, it has a high likelihood of producing a hallucinated response. A research paper from the Center for Artificial Intelligence Research (CAiRE) defines a hallucination from an LLM as “when the generated content is nonsensical or unfaithful to the provided source content”.
A popular example of this is GPT-4 being asked “Who owns Silicon Valley Bank?”. The response is “Silicon Valley Bank (SVB) is not owned by a single individual or entity. It is a subsidiary of SVB Financial Group, a publicly traded company (NASDAQ: SIVB). The ownership of SVB Financial Group is distributed among individual and institutional shareholders who own its stocks. As a publicly traded company, its ownership can change frequently as shares are bought and sold on the stock market.” In this case, GPT-4 has no knowledge of the recent SVB collapse. To mitigate disinformation from this ‘hallucinated’ response, OpenAI recently added the ‘As of my knowledge cutoff in September 2021,’ prefix ahead of such responses.

Why LLM hallucinations occur

LLMs are, at the end of the day, large neural networks that predict the next token in a sequence; this could be the next character, sub-word or word. In mathematical terms, given a sequence of tokens $T_1, T_2, \ldots, T_N$, the LLM learns the probability distribution of the next token $T_{N+1}$ conditioned on the previous tokens:

$$P(T_{N+1} \mid T_1, T_2, \ldots, T_N)$$

There are two factors that can strongly influence LLM hallucination:

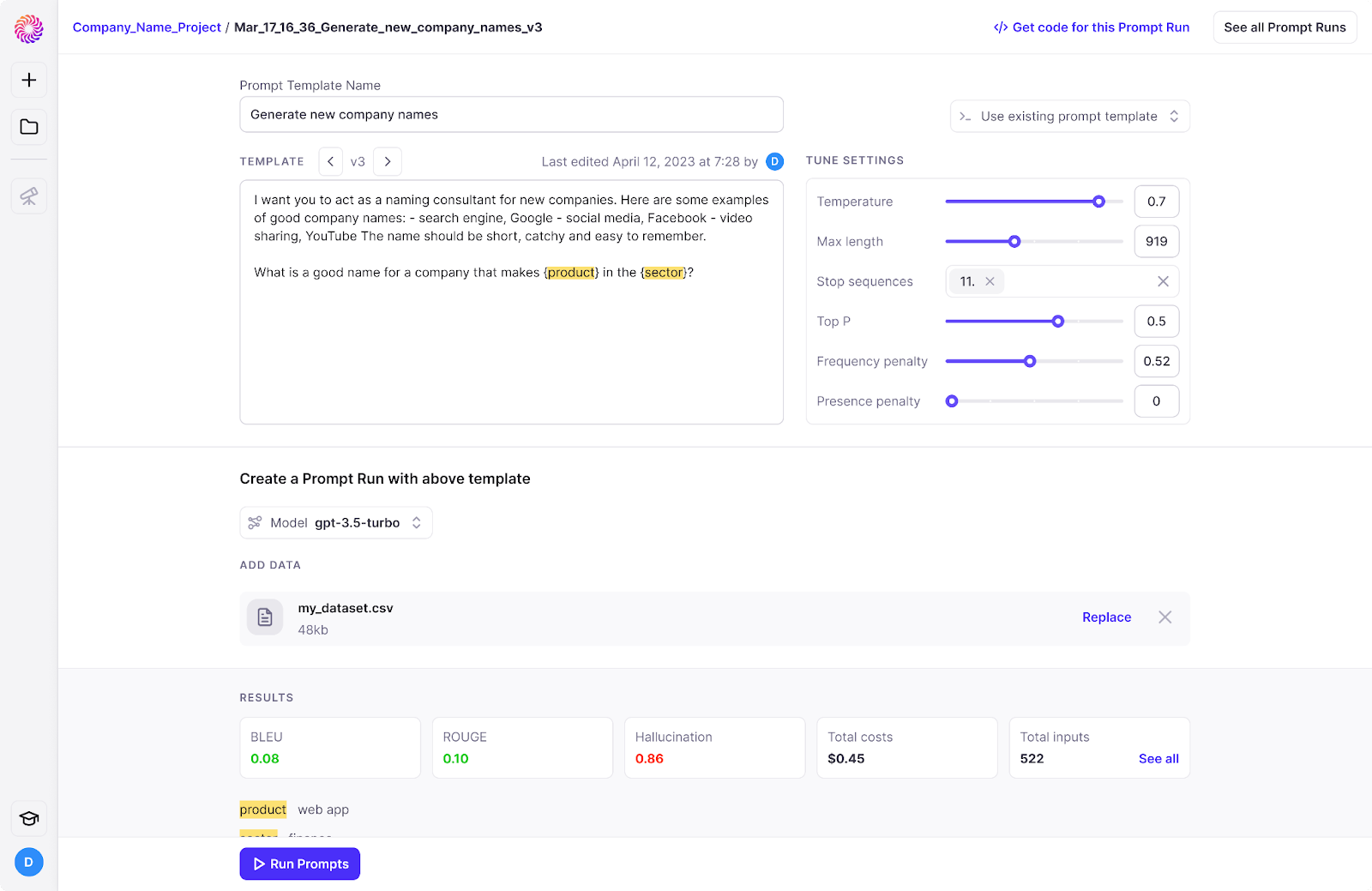

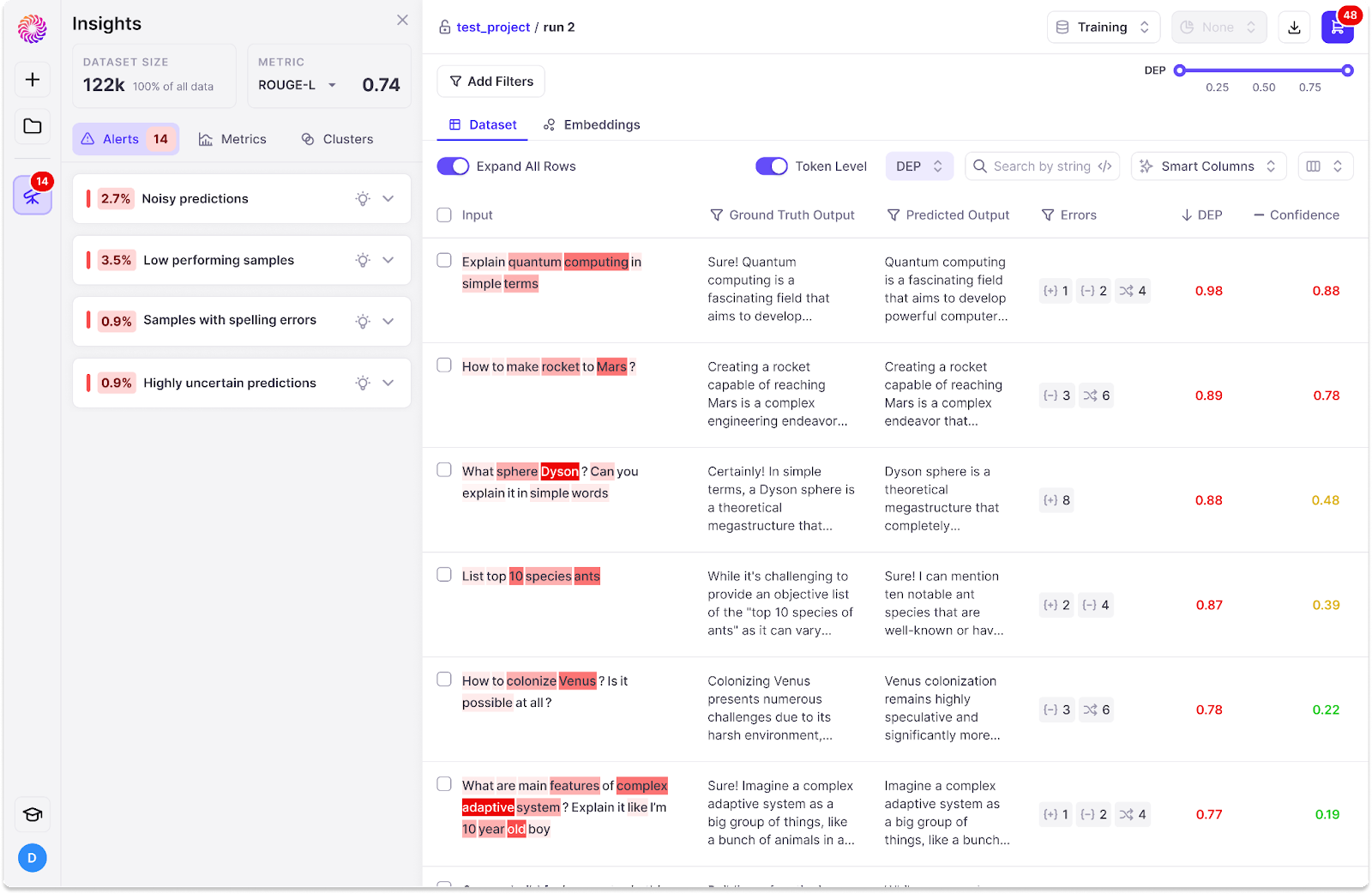
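To make the next-token distribution $P(T_{N+1} \mid T_1, \ldots, T_N)$ concrete, here is a minimal sketch of how raw model scores (logits) are typically turned into probabilities with a softmax. The four-word vocabulary and the logit values are made up for illustration and do not come from any real model.

```python
import math

def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical vocabulary and logits for the next token after some prompt.
vocab = ["Paris", "London", "Berlin", "Rome"]
logits = [4.0, 1.0, 0.5, 0.2]

probs = softmax(logits)
next_token_distribution = dict(zip(vocab, probs))

# Greedy decoding picks the highest-probability token.
most_likely = max(next_token_distribution, key=next_token_distribution.get)

for token, p in next_token_distribution.items():
    print(f"{token}: {p:.3f}")
print("greedy choice:", most_likely)
```

Note that the model always emits some token from this distribution. A high probability reflects patterns in the training data, not a check against ground truth, which is how a model can be overconfidently incorrect.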

Quantifying LLM Hallucinations

The best ways to reduce LLM hallucinations are by

To take this a step further, the researchers at Galileo have developed promising metrics for quantifying hallucination.
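This post does not spell out how Galileo's metrics are computed, so the sketch below is not their method. It only illustrates one generic, commonly used uncertainty signal: the average entropy of the next-token distributions produced while generating a response. The function names and the example distributions are hypothetical.

```python
import math

def token_entropy(probs):
    """Shannon entropy (in nats) of a single next-token distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def mean_entropy(distributions):
    """Average entropy across all generation steps of one response."""
    return sum(token_entropy(d) for d in distributions) / len(distributions)

# Hypothetical per-step distributions for two generated responses.
confident = [[0.97, 0.01, 0.01, 0.01], [0.90, 0.05, 0.03, 0.02]]
uncertain = [[0.25, 0.25, 0.25, 0.25], [0.40, 0.30, 0.20, 0.10]]

print(f"confident response: {mean_entropy(confident):.3f} nats")
print(f"uncertain response: {mean_entropy(uncertain):.3f} nats")
```

A higher average entropy suggests the model was less sure of its output, which can be a useful flag for review. It is only a proxy: a model can also be confidently wrong, so low entropy alone does not guarantee a faithful answer.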

Introducing the Galileo LLM Studio

Building high-performing LLM-powered apps requires careful debugging of prompts and training data. The Galileo LLM Studio provides powerful tools to do just that, powered by research-backed mechanisms to combat LLM hallucinations, and it is 100% free for the community to use.

Conclusion

If you are interested in trying the Galileo LLM Studio, join the waitlist along with thousands of developers building exciting LLM-powered apps. The problem of model hallucinations poses a serious threat to adopting LLMs in applications at scale for everyday use. By focusing on ways to quantify the problem, as well as baking in safeguards, we can build safer, more useful products for the world and truly unleash the power of LLMs.

References & Acknowledgments

The calibration and building blocks of Galileo's LLM hallucination metric are the outcome of numerous techniques and experiments, with references to (but not limited to) the following papers and artifacts:

*This post was written by the Galileo team. We thank Galileo for their support of TheSequence.
