The Generalist - What to Watch in AI

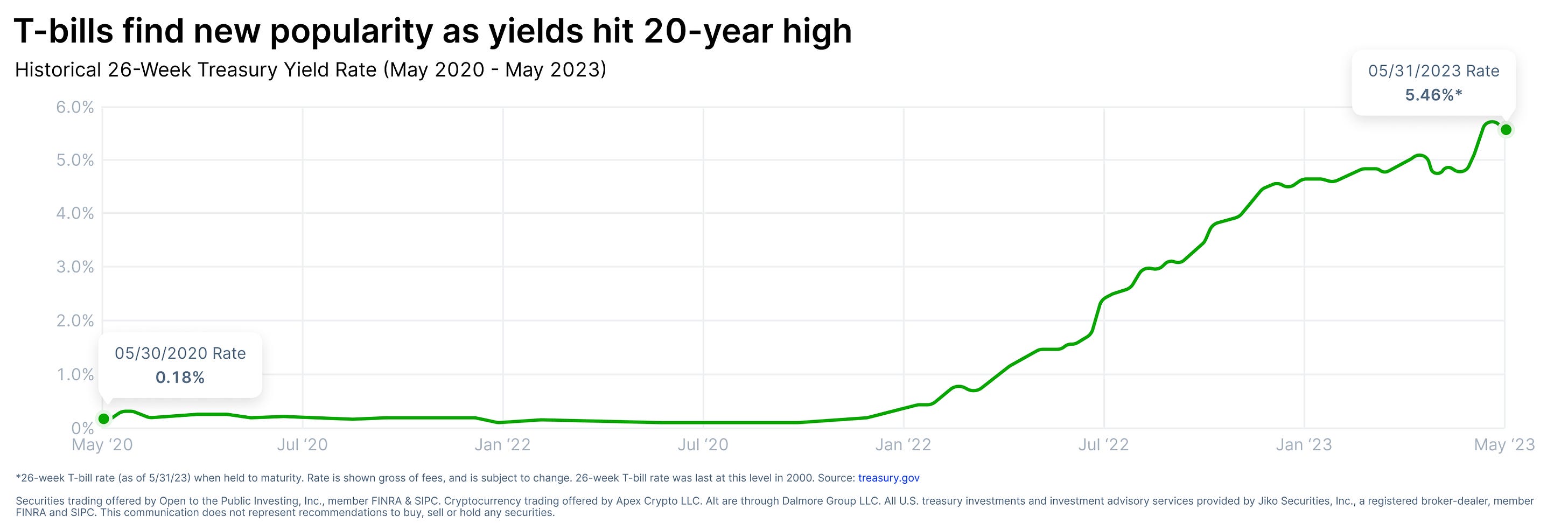

What to Watch in AI

Investors from Sequoia, USV, Kleiner Perkins, and more select the AI startups you should keep an eye on.

Friends,

Artificial intelligence is the technology story of the year; it may prove to be the defining narrative of our decade. Since our last edition of this series, the sector has continued to attract capital, talent, and attention. Not all attention has been positive, of course. Though there’s broad excitement about the capabilities of the technology, the past four months have seen industry heavyweights express their concern and regulators begin to devise some palisades. The following months and years look set to determine how fully AI impacts our lives, creating new winners and losers on a global scale.

Our “What to Watch” series aims to help readers prepare for the coming age and see the future more clearly. It is a starting point for those that wish to understand the technologies bubbling up at the AI frontier and capitalize on the change occurring. To do so, we ask a selection of AI’s most impressive investors and founders to surface the startups they consider most promising.

In this latest edition, our most comprehensive yet, you’ll learn how AI startups are assisting with human fertility, helping in our factories, accelerating corporate processes, and much more. These are our contributors’ picks.

Note: Long-time readers will know we intentionally don’t preclude investors from mentioning companies they’ve backed. The benefits of increased knowledge and “skin in the game” outweigh the risk of facile book-talking. Across contributors, we do our utmost to select for expertise, originality, and thoughtfulness.

A quick ask: If you liked this piece, I’d be grateful if you’d consider tapping the “heart” ❤️ above. It helps us understand which pieces you enjoy most, and supports our work. Thank you!

Brought to you by Public

Lock that rate down. Right now, you can take advantage of some of the highest Treasury yields since the early 2000s on Public. It only takes a few minutes to create your account, purchase government-backed Treasury bills, and start generating a historic 5%+ yield on your cash.

Actionable insights

If you only have a few minutes to spare, here’s what investors, operators, and founders should know about AI’s most exciting startups.

Alife

Improving IVF outcomes with AI

In any fertility procedure, there are designated moments of human decision-making. Two of the most relevant of these in IVF are “ovarian stimulation” and “embryo selection.”

“Ovarian stimulation” refers to determining the dosage of medication patients receive to stimulate the growth of follicles in the ovaries and when to deliver the trigger shot that stimulates the release of the eggs from those follicles. Timing the trigger shot is critical – too early and you can get premature eggs; too late and you can get post-mature eggs or not as many as possible. “Embryo selection” means choosing which fertilized egg to use and implant.

Currently, clinicians and embryologists, as in most of medicine, use a combination of their own experience and training, morphological grading systems, and trial and error to decide. If the dosage or timing is off on one cycle, they adjust it on the next. Many doctors are fantastic at this, but the result is a system in which skill varies widely and weighs heavily on outcomes. For fertility, a significantly supply-constrained market, this means sky-high prices, particularly to see the best of the best, and widely varying results across the field.

Alife builds AI-powered tools to improve in vitro fertilization (IVF) outcomes. The company gives practitioners superpowers to augment their decision-making with AI tools that leverage large datasets of inputs and outcomes. Now, through a simple interface, doctors can input a patient’s characteristics and get precise recommendations at critical moments in the fertility process, taken from the outcomes of thousands of previous cycles. The datasets come from large collected resources of patient outcomes that already exist and, in turn, get better with each patient that uses the Alife products. These tools will change the nature of the fertility industry.
Alife’s studies indicate that their machine learning model can help doctors optimize the trigger shot in the 50% of patients triggered too early or too late and help retrieve up to three more mature eggs, two more fertilized eggs, and one more embryo on average. Alife’s products can significantly broaden access to fertility treatments, bringing down the cost per patient by lowering required drug dosages and improving the success rate of each expensive IVF cycle. And they will flatten the playing field of doctors, allowing those with less firsthand experience to access broader knowledge and inputs. Eventually, you can imagine Alife’s tools providing all input for judgment moments in a process and allowing practitioners outside of doctors to perform cycles, significantly changing the sector’s cost structure and availability.

More importantly, data-driven precision medicine that augments – or eventually replaces – a person’s judgment with personalized recommendations is not unique to IVF. Across medicine, there are thousands and thousands of moments like this and an opportunity to leverage data to dramatically transform outcomes and access to critical procedures and treatments.

– Rebecca Kaden, General Partner at Union Square Ventures

Glean

Enterprise search and beyond

Finding the exact information you need at work, right when you need it, should be fast and easy. With the endless number of applications each person uses to get their job done, and the amount of data and documents generated as a result, this isn’t always the case. The exponential rise in “knowledge” and the increasingly distributed nature of work have increased the time needed to find existing knowledge. In other words, “searching for stuff” at work is fairly broken.

To help employers solve this problem, Arvind Jain and his team built Glean, an AI-powered unified search platform for the workplace.
It equips employees with an intuitive work assistant that helps them find exactly what they need, when they need it, and proactively discover the things they should know. The company’s mission from the beginning was simple: to help people find all the answers to workplace questions faster, with less frustration and wasted time. But what has resulted since goes well beyond the realm of search.

For example, Glean doesn’t just search across every single one of your workplace apps and knowledge bases (Slack, Teams, Google Drive, Figma, Dropbox, Coda, etc.); it also understands natural language and context, personalizing each of its user interactions based on people’s roles and inter/intra-company relationships. It intelligently surfaces your company’s most popular and verified information to help you discover what your team knows and stay on the same page – all in a permissions-aware fashion.

As organizations become more distributed and knowledge becomes more fragmented, an intuitive work assistant like Glean is no longer a nice-to-have but a critical tool in driving employee productivity. What the company has developed will break down the silos that slow progress and create more positive and productive work experiences.

Additionally, Glean’s search technology positions it to bring generative AI to the workplace while adhering to enterprises’ strict permissions and data governance requirements. Today, one of the key obstacles preventing enterprises from shipping AI applications to production is their inability to enforce appropriate governance controls (e.g., “Does my application understand what the end user is allowed to see and not see?”; “Is the inference done on my servers or OpenAI’s servers?”; “What source data led to a given model output and who owns it?”).
By being plugged into an enterprise’s internal environment with real-time data permissions, Glean has emerged as an ideal solution to help enterprises solve governance at scale and confidently leverage their internal data for both model training and inference – serving the role of an enterprise-grade AI data platform/vector store. Over the fullness of time, we believe every company will have its own AI-enabled copilot personalized to understand the nuances of the business and its employees. And we believe Glean is well on its way to capturing this exact opportunity.

– Josh Coyne, Partner at Kleiner Perkins

Lance

Storage and management for multi-modal data

We’ve all played with Midjourney, and most of us have seen the GPT-4 napkin-to-code demo. Midjourney (text-to-image) and GPT-4 (image-to-text/code) illustrate what’s possible when models become multi-modal, bridging the gap across different forms of media like text, images, and audio. While most of the current wave of AI hype has been centered around text-based models, multi-modal models are the key to building more accurate representations of the world as we know it.

As we unlock the next wave of AI applications in industries like robotics, healthcare, manufacturing, entertainment, and advertising, more and more companies will build on top of multi-modal models. Players like Runway and Flair.ai are good examples of emerging leaders in their respective spaces that have seen massive user demand for their products, while incumbents like Google have started releasing similar multi-modal capabilities.

But working with multi-modal models presents a challenge: how do you store and manage the data? Legacy storage formats like Parquet aren’t optimized for unstructured data, so ML teams struggle with slow performance for data loading, analytics, evals, and debugging. In addition, the lack of a single source of truth makes ML workflows much more error-prone in subtle ways.
Lance is one company that has recently emerged to tackle this challenge. Companies like Midjourney and WeRide are in the process of converting petabyte-scale datasets to the Lance format and have seen meaningful improvements in performance versus legacy formats like Parquet and TFRecords, as well as an order of magnitude reduction in incremental storage costs.

Lance isn’t stopping at storage – they’ve recognized the need to rebuild the entire data management stack to better fit the world we are moving toward, a world in which unstructured, multi-modal data becomes an organization’s most valuable asset. Their first platform offering, LanceDB (now in private beta), provides a seamless embedded experience for developers who want to build multi-modal capabilities into their applications.

Lance is just one example of a company bringing developers into the multi-modal future – I couldn’t be more excited to see what other technologies emerge to push the boundaries of multi-modal applications. With the pace at which AI is advancing, it won’t be long before that future becomes a reality.

– Saar Gur, General Partner at CRV

Abnormal Security

Stemming the tide of AI-enhanced cyber attacks

I am an unabashed optimist about generative AI, but not a naive one. For example, I’m concerned about a huge spike in “social engineering” attacks such as spear-phishing, which typically uses email to extract sensitive information. Incidents have radically increased since ChatGPT exploded onto the scene last year.

According to Abnormal Security, the number of attacks per 1,000 people has jumped from below 500 to more than 2,500 in the past year. And the sophistication of attacks is skyrocketing. Just as any student can use ChatGPT to write a perfectly good essay, it can also be used to churn out fraudulent messages that are grammatically perfect and dangerously personalized, without so much as a Google search.
According to the FBI, such targeted “business email compromise” attacks have caused more than $50 billion in losses since 2013. And it’s going to get worse. Every day, untold numbers of cyber-criminals and other bad actors get their hands on blackhat tools like “WormGPT,” a chatbot designed to mine malware data to craft the most convincing and scalable fraud campaigns.

Fortunately, Abnormal co-founders Evan Reiser and Sanjay Jeyakumar are hard at work using AI to combat this threat. Think of it as using AI to defend against AI. Historically, email security systems scanned for signatures of known-bad behavior, like a particular IP address or attempts to access employees’ personally identifiable information (PII). Using the power of AI, Abnormal flips this on its head. Since AI-enhanced attacks are designed to seem legitimate, Abnormal’s approach is to understand known-good behavior so well that even subtle departures become visible.

The company uses large language models to build a detailed representation of an organization’s internal and external digital workings, such as which people typically talk to each other and what they may interact around. If my partner Reid Hoffman sent me an email that said, “Hey, please send me the latest deck for Inflection.AI,” Abnormal’s AI engine would quickly notice that Reid seldom starts sentences with “Hey” and rarely sends one-sentence notes – and that he has never once asked me to send him documents about Inflection. (As a co-founder and board member of the company, he would have more access to decks than I would!)

Not surprisingly, Abnormal has seen accelerating enterprise customer demand as security concerns around generative AI have risen.

I find Abnormal’s success particularly gratifying, given how quickly it has harnessed AI to counter a problem accelerated by AI. Bad actors often enjoy a lengthy first-mover advantage in times of disruptive technological change.
After all, they can exploit innovations without worrying about product quality, security, or regulators, who have yet to lay down new laws. (The history of spam and ransomware provides interesting case studies.) At the same time, technology startups are understandably focused on developing powerful new use cases for their innovations rather than stopping illegal or destructive ones.

But like everything to do with AI, the potential cyber damage from its misuse is staggering. Thanks to the foresight of the Abnormal team, the new normal for cybercriminals may prove to be at least a little less accommodating.

– Saam Motamedi, Partner at Greylock

Dust

Augmenting knowledge workers

It’s obvious that Large Language Models (LLMs) will increase the productivity of knowledge workers. But it’s still unclear exactly how. Dust is on a mission to figure that out. Since LLMs won’t be of much help in the enterprise if they don’t have access to internal data, Dust has built a platform that indexes and embeds companies’ internal data (Notion, Slack, Drive, GitHub), keeps it updated in real time, and exposes it to LLM-backed products.

Dust co-founders Gabriel Hubert and Stanislas Polu sold a company to Stripe and worked there for five years. They witnessed firsthand how fast-growing companies can struggle with scale. They’ve seen what they call “information debt” creep in, and they’re now focused on applying LLMs to solve some of the major pain points associated with that. They’re currently exploring the following applications on top of their platform:

It’s a lot, but Dust’s founders believe most of these streams will ultimately contribute to one coherent product. They’re still in the early days of their exploration and are forming the final focused picture of what Dust will be. Based on their initial iterations, they believe they’ve confirmed their core hypothesis: that knowledge workers can be augmented (not replaced) with LLM applications that have access to company data, and a new kind of “team operating system” can be built for that.

– Konstantine Buhler, Partner at Sequoia

Labelbox

Unlocking business data

The “rise of big data” has been happening for over 20 years, and although companies continue to ingest more data than ever, many still struggle to use it to generate insights from their AI models. Data processing and annotation remain the most tedious and expensive part of the AI process but also the most important for quality outcomes. Even with the rise in pre-trained large language models, enterprises need to focus on using their proprietary data (across multiple modalities) to create production AI that leads to differentiated services, insights, and increased operational efficiencies.

Labelbox solves this challenge by simplifying how companies feed their datasets into AI models. It helps data and ML teams find the correct data, process and annotate it, push models into production, and continuously measure and improve performance.

Labelbox’s new platform takes advantage of the generative AI movement. Model Foundry lets teams rapidly experiment with AI foundation models from all major closed and open-source providers and pre-label data in just a few clicks. In doing so, they can learn which model performs best on their data. Model Foundry auto-generates detailed performance metrics for every experiment run while versioning and snapshotting outcomes. Its impact can be profound.
Traditionally, humans take days to complete a straightforward but time-consuming task like classifying e-commerce listings with multiple paragraphs of text. With GPT-4, however, that task can be performed within hours. Model Foundry allows businesses to discover these efficiencies themselves.

This is far from the only example. Early results show that over 88% of labeling tasks can be meaningfully accelerated by one or more foundation models. Instead of coding and building pipelines to feed your data to models, Labelbox enables anyone to pre-label data with a few clicks. It is built to empower teams to work collaboratively and draw in cross-functional expertise to maintain human supervision for data quality assurance. This functionality democratizes access to AI by allowing ML experts and business SMEs to easily evaluate models, enrich datasets, and collaborate to build intelligent applications.

Labelbox has proven to significantly reduce costs and increase model quality for many of the world’s largest enterprises, including Walmart, Procter & Gamble, Genentech, and Adobe.

For enterprises, the race is now on to unleash the power of these foundation models on their proprietary data to solve business problems. We are excited to see how Labelbox will help businesses unlock their data and deliver better products at much higher efficiencies.

– Robert Kaplan, Partner at SoftBank

Puzzler

Respond to this email for a hint.

The right honorable Austin V snaffled the answer first. He was joined by Shashwat N, Bruce G, Krishna N, Michael S, Rohit B, Laura F, John G, Emerson K, Adam G, Scott M, Michael O, Carter G, Saagar B, Gary J, Morihiko Y, Ariel B, and Rob N. Well played to all for their response to this riddle:

The answer? A library. 📚

Until next time,

Mario
