Inside Discord’s reform movement for banned users

Here’s this week’s free column — in which I embed with Discord as it rolls out a new policy intended to rehabilitate the large number of teenage trolls on the platform. Do you value independent reporting on social networks and the trust and safety teams that keep them running? If so, your support would mean a lot to us. Upgrade your subscription today and we’ll email you first with all our scoops — like this week’s interview with a former Twitter employee that Elon Musk fired for criticizing him. ➡️

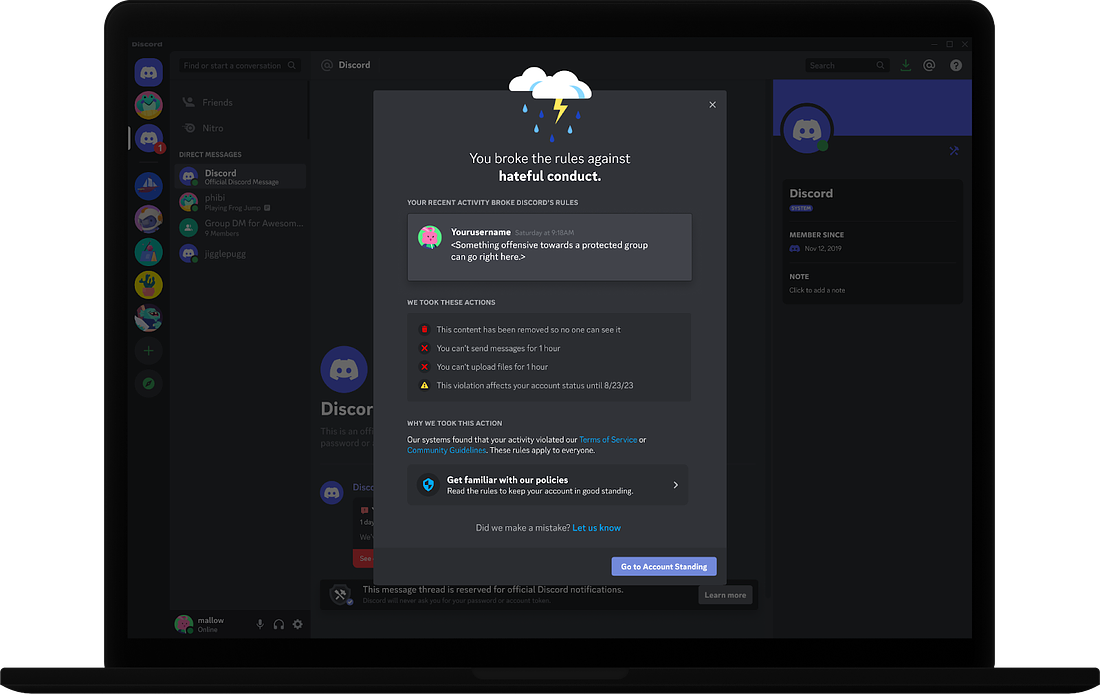

Most platforms ban their trolls forever. Discord wants to rehabilitate them

Discord's new warning screen (Discord)

Today, let’s talk about how the traditional platform justice system is seeing signs of a new reform movement. If it’s successful at Discord, its backers hope that the initiative could lead to better behavior around the web.

I.

Discord’s San Francisco campus is a tech company headquarters like many others, with its open-plan office, well-stocked micro-kitchens and employees bustling in and out of over-booked conference rooms.

But step through the glass doors at its entrance and it is immediately apparent that this is a place built by gamers. Arcade-style art decks the walls, various games hide in corners, and on Wednesday afternoon, a trio of employees sitting in a row were competing in a first-person shooter.

Video games are designed for pure fun, but the community around those games can be notoriously toxic. Angry gamers hurl slurs, doxx rivals, and in some of the most dangerous cases, summon SWAT teams to their targets’ homes.

For Discord, which began as a tool for gamers to chat while playing together, gamers are both a key constituency and a petri dish for understanding the evolution of online harms. If it can hurt someone, there is probably an angry gamer somewhere trying it out.

By now, of course, eight-year-old Discord hosts much more than gaming discussions. By 2021, it reported more than 150 million monthly users, and its biggest servers now include ones devoted to music, education, science, and AI art.

Along with the growing user base have come high-profile controversies over what users are doing on its servers. In April, the company made headlines when leaked classified documents from the Pentagon were found circulating on the platform.
Discord faced previous scrutiny over its use in 2017 by white nationalists planning the “Unite the Right” rally in Charlottesville, VA, and later when the suspect in a racist mass shooting in Buffalo, NY was found to have uploaded racist screeds to the platform.

Most of the problematic posts on Discord aren’t nearly that grave, of course. As on any large platform, Discord fights daily battles against spam, harassment, hate speech, porn, and gore. (At the height of crypto mania, it also became a favored destination for scammers.)

Most platforms deal with these issues with a variation of a three-strikes-and-you’re-out policy. Break the rules a couple times and you get a warning; break them a third time and your account is nuked. In many cases, strikes are forgiven after some period of time — 30 days, say, or 90.

The nice thing about this policy from a tech company’s perspective is that it’s easy to communicate, and it “scales.” You can build an automated system that issues strikes, reviews appeals, and bans accounts without any human oversight at all.

At a time when many tech companies are pulling back on trust and safety efforts, a policy like this has a lot of appeal.

II.

When Discord’s team reviewed its own policies around warning and suspending users, though, it found the system wanting.

One, a three-strikes policy isn’t proportionate. It levies the same penalty for both minor infractions and major violations.

Two, it doesn’t rehabilitate. Most users who receive strikes probably don’t deserve to be permanently banned, but if you want them to stay you have to figure out how to educate them.

Three, most platform disciplinary systems lack nuance. If a teenage girl posts a picture depicting self-harm, Discord will remove the picture under its policies. But the girl doesn’t need to be banned from social media — she needs to be pointed toward resources that can help her.

On top of all that, Discord had one additional complication to consider.
Half of its users are 13 to 24 years old; a substantial portion of its base are teenagers. Teenagers are inveterate risk-takers and boundary pushers, and Discord was motivated to build a system that would rein in their worst impulses and — in the best-case scenario — turn them into upstanding citizens of the internet.

This is the logic that went into Discord’s new warning system, which it announced today. The company explained the changes in a blog post:

A system like this isn’t totally novel; Instagram takes a similar approach. Where Discord goes further is in its system of punishments. Rather than simply give users a strike, it limits their behavior on the platform based on their violation. If you post a bunch of gore in a server, Discord will temporarily limit your ability to upload media. If you raid someone else’s server and flood it with messages, Discord will temporarily shut off your ability to send messages.

“As an industry we’ve had a lot of hammers at our disposal. We’re trying to introduce more scalpels into our approach,” John Redgrave, Discord’s vice president of trust and safety, told me in an interview. “That doesn’t just benefit Discord — it benefits all platforms, if users can actually change their behavior.”

And when someone does cross the line repeatedly, Discord will strive not to ban the user forever. Instead, it will ban them for one year — a drastic reduction in sentencing for an industry in which lifetime bans are the norm.

It’s a welcome acknowledgement of the importance of social networks in the lives of people online, particularly young people — and a rare embrace of the idea that most wayward users can be rehabilitated, if only someone would take the time to try.

“We really want to give people who have had a bad day the chance to change,” Savannah Badalich, Discord’s senior director of policy, told me.

The new system has already been tested in a small group of servers and will begin rolling out in the coming weeks, Badalich said. Along with the new warning system, the company is introducing a feature called Teen Safety Assist that is enabled by default for younger users. When switched on, it scans incoming messages from strangers for inappropriate content and blurs potentially sensitive images in direct messages.

III.

On Wednesday afternoon, Discord let me sit in on a meeting with Redgrave, Badalich, and four other members of its 200-person trust and safety team.
The subject: could the warning system it had just announced for individual users be adapted for servers as well?

After all, sometimes problem usage at Discord goes beyond individual users. Servers violate policies too, and now that the warning system for individuals has rolled out, the company is turning its attention to group-based harms.

I appreciated the chance to sit in on the meeting, which was on the record, since the company is still in the early stages of building a solution. As in most subjects related to content moderation, untangling the various equities involved can be very difficult.

In this case, members of the team had to decide who was responsible for what happened in a server gone bad. If your first thought was “the server’s owner,” that was mine too. But sometimes moderators get mad at server owners, and retaliate against them by posting content that breaks Discord’s rules — a kind of scorched-earth policy aimed at getting the server banned.

Alright, then. Perhaps moderators should be considered just as responsible for harms in a server as the owner? Well, it turns out that Discord doesn’t have a totally consistent definition of who counts as an active moderator. Some users are automatically given moderator permissions when they join a server. If the server goes rogue and the “moderator” has never posted in the server, why should they be held accountable?

Moreover, team members said, some server owners and moderators are often unfamiliar with Discord’s community guidelines. Others might know the rules but weren’t actually aware of the bad behavior in a server — either because it’s too big and active to read every post, or because they haven’t logged in lately.

Finally, this set of questions applies to the majority of servers where harm has occurred incidentally. Discord also has to consider the smaller but significant number of servers that are set up to do harm — such as by gathering and selling child sexual abuse material.
Those servers require much different assumptions and enforcement mechanisms, the team agreed.

All of it can feel like an impossible knot to untangle. But in the end, the team members found a way forward: analyzing a combination of server metadata, along with the behavior of server owners, moderators and users, to diagnose problem servers and attempt to rehabilitate them.

It wasn’t perfect — nothing in trust and safety ever is.

“The current system is a fascinating case of over- and under-enforcement,” one product policy specialist said, only half-joking. “What we’re proposing is a somewhat different case of over- and under-enforcement.”

Still, I left Discord headquarters that day confident that the company’s future systems would improve over time. Too often, trust and safety teams get caricatured as partisan scolds and censors. Visiting Discord offered a welcome reminder that they can be innovators, too.

On the podcast this week: Three ways of understanding what’s going on inside the black box of a large language model. Then: I’m sorry but we discussed the Marc Andreessen thing. And finally, Brent Seales joins us to discuss the Vesuvius Challenge — a fascinating and recently successful experiment in which a 21-year-old college student used AI to begin decoding an ancient scroll.

Apple | Spotify | Stitcher | Amazon | Google

Governing

Industry

Those good posts

For more good posts every day, follow Casey’s Instagram stories.

Talk to us

Send us tips, comments, questions, and restorative justice for banned trolls: casey@platformer.news and zoe@platformer.news.

By design, the vast majority of Platformer readers never pay anything for the journalism it provides. But you made it all the way to the end of this week’s edition — maybe not for the first time. Want to support more journalism like what you read today?
