Astral Codex Ten - Your Book Review: How Language Began

[This is one of the finalists in the 2024 book review contest, written by an ACX reader who will remain anonymous until after voting is done. I’ll be posting about one of these a week for several months. When you’ve read them all, I’ll ask you to vote for a favorite, so remember which ones you liked]

I. THE GOD

You may have heard of a field known as "linguistics". Linguistics is supposedly the "scientific study of language", but this is completely wrong. To borrow a phrase from elsewhere, linguists are those who believe Noam Chomsky is the rightful caliph. Linguistics is what linguists study.

I'm only half-joking, because Chomsky’s impact on the study of language is hard to overstate. Consider the number of times his books and papers have been cited, a crude measure of influence that nonetheless gives us a sense of this. At the current time, his Google Scholar page says he's been cited over 500,000 times. That’s a lot. It isn’t atypical for a hard-working professor at a top-ranked institution to have maybe 20,000 citations (= 0.04 Chomskys) after a career’s worth of work, with many people helping them do research and write papers. Generational talents do better, but usually not by more than a factor of 5 or so. Consider a few more citation counts:

Yes, fields vary in ways that make these comparisons not necessarily fair: fields have different numbers of people, citation practices vary, and so on. There is also probably a considerable recency bias; for example, most biologists don’t cite Darwin every time they write a paper whose content relates to evolution. But 500,000 is still a mind-bogglingly huge number. Not many academics do better than Chomsky citation-wise. But there are a few, and you can probably guess why:

…well, okay, maybe I don’t entirely get Foucault’s number. Every humanities person must have an altar to him by their bedside or something.

Chomsky has been called “arguably the most important intellectual alive today” in a New York Times review of one of his books, and was voted the world’s top public intellectual in a 2005 poll. He’s the kind of guy who gets long and gushing introductions before his talks (this one is nearly twenty minutes long). All of this is just to say: he’s kind of a big deal.

This is what he looks like. According to Wikipedia, the context for this picture is: “Noam Chomsky speaks about humanity's prospects for survival”

Since around 1957, Chomsky has dominated linguistics. And this matters because he is kind of a contrarian with weird ideas. Is language for communicating? No, it’s mainly for thinking: (What Kind of Creatures Are We? Ch. 1, pg. 15-16)

Should linguists care about the interaction between culture and language? No, that’s essentially stamp-collecting: (Language and Responsibility, Ch. 2, pg. 56-57)

Did the human capacity for language evolve gradually? No, it suddenly appeared around 50,000 years ago after a freak gene mutation: (Language and Mind, third edition, pg. 183-184)

I think all of these positions are kind of insane for reasons that we will discuss later. (Side note: Chomsky’s proposal is essentially the hard takeoff theory of human intelligence.)

Most consequential of all, perhaps, are the ways Chomsky has influenced (i) what linguists mainly study, and (ii) how they go about studying it. Naively, since language involves many different components (including sound production and comprehension, intonation, gestures, and context, among many others), linguists might want to study all of these. While they do study all of these, Chomsky and his followers view grammar as by far the most important component of humans’ ability to understand and produce language, and accordingly make it their central focus.

Roughly speaking, grammar refers to the set of language-specific rules that determine whether a sentence is well-formed. It goes beyond specifying word order (or ‘surface structure’, in Chomskyan terminology), since one needs to know more than just where words are placed in order to modify or extend a given sentence. Consider a pair of sentences Chomsky uses to illustrate this point in Aspects of the Theory of Syntax (pg. 22), his most cited work:

(1a) I expected John to be examined by a specialist.

(2a) I persuaded John to be examined by a specialist.

The words “expected” and “persuaded” appear in the same location in each sentence, but imply different ‘latent’ grammatical structures, or ‘deep structures’. One way to show this is to observe that a particular way of rearranging the words produces a sentence with the same meaning in the first case (1a = 1b), and a different meaning in the second (2a != 2b):

(1b) I expected a specialist to examine John.

(2b) I persuaded a specialist to examine John.

In particular, the target of persuasion is “John” in the case of (2a), and “the specialist” in the case of (2b).
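The expected/persuaded contrast can be made concrete with a toy model. The Python sketch below is a minimal illustration under strong simplifying assumptions, not Chomsky's formalism: it assumes every sentence has the frame “I <verb> <noun phrase> to <embedded event>”, represents the embedded examining event identically whether it is worded actively or passively, and simply hard-codes that “expect” takes only a proposition while “persuade” takes a person plus a proposition. The names `Examine` and `logical_form` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Examine:
    """The embedded event. Active and passive wordings map to the same value."""
    agent: str
    patient: str


def logical_form(verb: str, np_after_verb: str, event: Examine) -> tuple:
    """Toy reading of 'I <verb> <np_after_verb> to <event>' (hypothetical helper).

    'expect' takes only a proposition, so the noun phrase after the verb
    plays no separate role. 'persuade' takes a person *and* a proposition,
    so the noun phrase after the verb is the one being persuaded.
    """
    if verb == "expect":
        return ("expect", "I", event)
    if verb == "persuade":
        return ("persuade", "I", np_after_verb, event)
    raise ValueError(f"unknown verb: {verb}")


# In all four sentences, the specialist examines John.
event = Examine(agent="a specialist", patient="John")

s1a = logical_form("expect", "John", event)            # (1a) ...expected John to be examined...
s1b = logical_form("expect", "a specialist", event)    # (1b) ...expected a specialist to examine John.
s2a = logical_form("persuade", "John", event)          # (2a) ...persuaded John to be examined...
s2b = logical_form("persuade", "a specialist", event)  # (2b) ...persuaded a specialist to examine John.

print(s1a == s1b)  # True: same meaning
print(s2a == s2b)  # False: a different person is persuaded
```

Under these assumptions, swapping which noun phrase follows the verb leaves the meaning of the “expect” sentences unchanged but changes who gets persuaded, which is the sense in which identical surface positions can hide different deep structures.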
A full Chomskyan treatment of sentences like this would involve hierarchical tree diagrams, which permit a precise description of deep structure.

You may have encountered the famous sentence: “Colorless green ideas sleep furiously.” It first appeared in Chomsky’s 1957 book Syntactic Structures, and the point is that even nonsense sentences can be grammatically well-formed, and that speakers can quickly assess the grammatical correctness of even nonsense sentences that they’ve never seen before. To Chomsky, this is one of the most important facts to be explained about language.

A naive response to Chomsky’s preoccupation with grammar is: doesn’t real language involve a lot of non-grammatical stuff, like stuttering and slips of the tongue and midstream changes of mind? Of course it does, and Chomsky acknowledges this. To address this point, Chomsky has to move the goalposts in two important ways. First, he famously distinguishes competence from performance, and identifies the former as the subject of any serious theory of language: (Aspects of the Theory of Syntax, Ch. 1, pg. 4)

Moreover, he claims that grammar captures most of what we should mean when we talk about speakers’ linguistic competence: (Aspects of the Theory of Syntax, Ch. 1, pg. 24)

Another way Chomsky moves the goalposts is by distinguishing E-languages, like English and Spanish and Japanese, from I-languages, which only exist inside human minds. He claims that serious linguistics should be primarily interested in the latter. In a semi-technical book summarizing Chomsky’s theory of language, Cook and Newson write: (Chomsky’s Universal Grammar: An Introduction, pg. 13)

Not only should linguistics primarily be interested in studying I-languages, but to try and study E-languages at all may be a fool’s errand: (Chomsky’s Universal Grammar: An Introduction, pg. 13)

I Am Not A Linguist (IANAL), but this redefinition of the primary concern of linguistics seems crazy to me. Is studying a language like English as it is actually used really of no particular empirical significance? And this doesn’t seem to be a one-off bit of hyperbole, but a representative claim. Cook and Newson continue: (Chomsky’s Universal Grammar: An Introduction, pg. 14)

So much for what linguists ought to study. How should they study it? The previous quote gives us a clue.

Especially in the era before Chomsky (BC), linguists were more interested in description. Linguists were, at least in one view, people who could be dropped anywhere in the world, and emerge with a tentative grammar of the local language six months later. (A notion like this is mentioned early in this video.) Linguists catalog the myriad strange details of human languages, like the fact that some languages don’t appear to have words for relative directions, or “thank you”, or “yes” and “no”.

After Chomsky's domination of the field (AD), there were a lot more theorists. While you could study language by going out into the field and collecting data, this was viewed as not the only, and maybe not even the most important, way to work. Diagrams of sentences proliferated. Chomsky, arguably the most influential linguist of the past hundred years, has never done fieldwork.

In summary, to Chomsky and many of the linguists working in his tradition, the scientifically interesting component of language is grammatical competence, and real linguistic data only indirectly reflects it.

All of this matters because the dominance of Chomskyan linguistics has had downstream effects in adjacent fields like artificial intelligence (AI), evolutionary biology, and neuroscience. Chomsky has long been an opponent of the statistical learning tradition of language modeling, essentially claiming that it does not provide insight into what humans know about languages, and that engineering success probably can’t be achieved without explicitly incorporating important mathematical facts about the underlying structure of language. Chomsky’s ideas have motivated researchers to look for a “language gene” and “language areas” of the brain. Arguably, no one has yet found either, but more on that later.
How Chomsky attained this stranglehold on linguistics is an interesting sociological question, but not our main concern in the present work². The intent here is not to pooh-pooh Chomsky, either; brilliant and hard-working people are often wrong on important questions. Consider that his academic career began in the early 1950s (over 70 years ago!), when our understanding of language, anthropology, biology, neuroscience, and artificial intelligence, among many other things, was substantially more rudimentary.

Where are we going with this? All of this is context for understanding the ideas of a certain bomb-throwing terrorist blight on the face of linguistics: Daniel Everett. How Language Began is a book he wrote about, well, what language is and how it began. Everett is the anti-Chomsky.

II. THE MISSIONARY

We all love classic boy-meets-girl stories. Here’s one: boy meets girl at a rock concert, they fall in love, the boy converts to Christianity for the girl, then the boy and girl move to the Amazon jungle to dedicate the rest of their lives to saving the souls of an isolated hunter-gatherer tribe.

Daniel Everett is the boy in this story. The woman he married, Keren Graham, is the daughter of Christian missionaries and had formative experiences living in the Amazon jungle among the Sateré-Mawé people. At seventeen, Everett became a born-again Christian; at eighteen, he and Keren married; and over the next few years, they started a family and prepared to become full-fledged missionaries like Keren’s parents.

First, Everett studied “Bible and Foreign Missions” at the Moody Bible Institute in Chicago. After he finished his degree in 1975, the natural next step was to train more specifically to follow in the footsteps of Keren’s parents. In 1976, he and his wife enrolled in the Summer Institute of Linguistics (SIL) to learn translation techniques and more viscerally prepare for life in the jungle:

Everett apparently had a gift for language-learning. This led SIL to invite Everett and his wife to work with the Pirahã people (pronounced pee-da-HAN), whose unusual language had thwarted all previous attempts to learn it. In 1977, Everett’s family moved to Brazil, and in December they met the Pirahã for the first time. As an SIL-affiliated missionary, Everett’s explicit goals were to (i) translate the Bible into Pirahã, and (ii) convert as many Pirahã as possible to Christianity. But Everett’s first encounter with the Pirahã was cut short for political reasons: (Don’t Sleep There Are Snakes, Ch. 1, pg. 13-14)

Everett became a linguist proper sort of by accident, mostly as an excuse to continue his missionary work. But he ended up developing a passion for it. In 1980, he completed Aspects of the Phonology of Pirahã, his master’s thesis at UNICAMP (the State University of Campinas, in Brazil). He went on to get a PhD in linguistics, also from UNICAMP, and in 1983 finished The Pirahã Language and Theory of Syntax, his dissertation. He continued studying the Pirahã and working as an academic linguist after that. In all, Everett spent around ten years of his life living with the Pirahã, spread out over some thirty-odd years. As he notes in Don’t Sleep, There Are Snakes: (Prologue, pg. xvii-xviii)

Everett did eventually learn their language, and it’s worth taking a step back to appreciate just how hard that task was. No Pirahã spoke Portuguese, apart from some isolated phrases they used for bartering. They didn’t speak any other language at all, just Pirahã. How do you learn another group’s language when you have no languages in common? The technical term is monolingual fieldwork. But this is just a fancy label for some combination of pointing at things, listening, crude imitation, and obsessively transcribing whatever you hear. For years.

It doesn’t help that the Pirahã language seems genuinely hard to learn in a few different senses. First, it is probably conventionally difficult for Westerners to learn, since it is a tonal language (two tones: high and low) with a small number of phonemes (building-block sounds) and a few unusual sounds³. Second, there is no written language. Third, the language has a variety of ‘channels of discourse’, or ways of talking specialized for one or another cultural context. One of these is ‘whistle speech’; Pirahãs can communicate purely in whistles. This feature appears to be extremely useful during hunting trips: (Don’t Sleep, There Are Snakes, Ch. 11, pg. 187-188)

Fourth, important aspects of the language reflect core tenets of Pirahã culture in ways that one might not a priori expect. Everett writes extensively about the ‘immediacy of experience principle’ of Pirahã culture, which he summarizes as the idea that: (Don’t Sleep, There Are Snakes, Ch. 7, pg. 132)

One way the language reflects this is that the speaker must specify how they know something by affixing an appropriate suffix to verbs: (Don’t Sleep, There Are Snakes, Ch. 12, pg. 196)

Everett also convincingly links this cultural principle to the lack of Pirahã number words and creation myths. On the latter topic, Everett recalls the following exchange: (Don’t Sleep, There Are Snakes, Ch. 7, pg. 134)

And all of this is to say nothing of the manifold perils of the jungle: malaria, typhoid fever, dysentery, dangerous snakes, insects, morally gray river traders, and periodic downpours. If Indiana Jones braved these conditions for years, we would consider his stories rousing adventures. Everett did this while also learning one of the most unusual languages in the world.

By the way, he did eventually sort of achieve his goal of translating the Bible. Armed with a solid knowledge of Pirahã, he was able to translate the New Testament’s Gospel of Mark. Since the Pirahã have no written language, he provided them with a recorded version, but did not get the reaction he expected: (Don’t Sleep, There Are Snakes, Ch. 17, pg. 267-268)

One reaction to hearing the gospel caught Everett even more off-guard: (Don’t Sleep, There Are Snakes, Ch. 17, pg. 269)

But the Pirahã had an even more serious objection to Jesus: (Don’t Sleep, There Are Snakes, Ch. 17, pg. 265-266)

In the end, Everett never converted a single Pirahã. But he did even worse than converting zero people: he lost his own faith after coming to believe that the Pirahã had a good point. After keeping this to himself for many years, he revealed his loss of faith to his family, which led to a divorce and his children breaking contact with him for a number of years afterward.

But Everett losing his faith in the God of Abraham was only the beginning. Most importantly for us, he also lost his faith in the God of Linguistics: Noam Chomsky.

III. THE WAR

In 2005, Everett’s paper “Cultural constraints on grammar and cognition in Pirahã: Another look at the design features of human language” was published in the journal Current Anthropology. An outsider might expect an article like this, which made a technical observation about the apparent lack of a property called ‘recursion’ in the Pirahã language, to receive an ‘oh, neat’ sort of response. Languages can be pretty different from one another, after all. Mandarin generally doesn’t mark plurals on nouns. Spanish sentences can omit an explicit subject. This is one of those kinds of things. But the article ignited a firestorm of controversy that follows Everett to this day. Praise for Everett and his work on recursion in Pirahã:

Apparently he struck a nerve. And there is much more vitriol like this; see Pullum for the best (short) account of the beef I’ve found, along with sources for each quote except the last. On the whole affair, he writes:

I’m not going to rehash all of the details, but the conduct of many in the pro-Chomsky faction is pretty shocking. Highly recommended reading. Substantial portions of the books The Kingdom of Speech and Decoding Chomsky are also dedicated to covering the beef and related issues, although I haven’t read them.

What’s going on? Assuming Everett is indeed acting in good faith, why did he get this reaction? As I said in the beginning, linguists are those who believe Noam Chomsky is the rightful caliph. Central to Chomsky’s conception of language is the idea that grammar reigns supreme, and that human brains have some specialized structure for learning and processing grammar. In the writing of Chomsky and others, this hypothetical component of our biological endowment is sometimes called the faculty of language in the narrow sense (FLN); this distinguishes it from other (e.g., sensorimotor) capabilities relevant for practical language use. A paper by Hauser, Chomsky, and Fitch titled “The Faculty of Language: What Is It, Who Has It, and How Did It Evolve?” was published in the prestigious journal Science in 2002, just a few years earlier. The abstract contains the sentence:

Some additional context is that Chomsky had spent the past few decades simplifying his theory of language. A good account of this is provided in the first chapter of Chomsky’s Universal Grammar: An Introduction. By 2002, arguably not much was left: the core claims were that (i) grammar is supreme, and (ii) all grammar is recursive and hierarchical. More elaborate aspects of previous versions of Chomsky’s theory, like the idea that each language might be identified with different parameter settings of some ‘global’ model constrained by the human brain (the core idea of the so-called ‘principles and parameters’ formulation of universal grammar), were by now viewed as helpful and interesting but not necessarily fundamental.

Hence, it stands to reason that evidence suggesting not all grammar is recursive could be perceived as a significant threat to the Chomskyan research program. If not all languages had recursion, then what would be left of Chomsky’s once-formidable theoretical apparatus?

Everett’s paper inspired a lively debate, with many arguing that he is lying, or misunderstands his own data, or misunderstands Chomsky, or some combination of all of those things. The most famous anti-Everett response is “Pirahã Exceptionality: A Reassessment” by Nevins, Pesetsky, and Rodrigues (NPR), which was published in the prestigious journal Language in 2009. This paper got a response from Everett, which led to an NPR response-to-the-response. To understand how contentious even the published form of this debate became, I reproduce in full the final two paragraphs of NPR’s response-response:

Two observations here. First, another statement about “serious” linguistics; why does that keep popping up? Second, wow. That’s the closest you can come to cursing someone out in a prestigious journal. Polemics aside, what’s the technical content of each side’s argument? Is Pirahã recursive or not? Much of the debate appears to hinge on two things:

Everett generally takes recursion to refer to the following property of many natural languages: one can construct sentences or phrases from other sentences and phrases. For example:

“The cat died.” -> “Alice said that [the cat died].” -> “Bob said that [Alice said that [the cat died]].”

In the above example, we can in principle generate infinitely many new sentences by writing “Z said that X,” where X is the previous sentence and Z is some name. For clarity’s sake, one should probably distinguish between different ways to generate new sentences or phrases from old ones; Pullum mentions a few in the context of assessing Everett’s Pirahã recursion claims:
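The “Z said that X” rule from the example above can be sketched in a few lines of Python. This is only an illustration of the rule as stated, with hypothetical function names (`embed`, `as_sentence`, `chain`) and arbitrarily chosen speakers; it models a toy grammar that has this one rule and says nothing about whether any particular language, Pirahã included, has it. Because each application wraps the previous sentence inside a strictly longer one, such a grammar has no longest sentence.

```python
from itertools import cycle, islice


def embed(clause: str, speaker: str) -> str:
    """One application of the 'Z said that X' rule: the old clause becomes X."""
    return f"{speaker} said that {clause}"


def as_sentence(clause: str) -> str:
    """Capitalize and punctuate a clause for display."""
    return clause[0].upper() + clause[1:] + "."


def chain(base: str, speakers: list[str], depth: int) -> list[str]:
    """Apply the rule `depth` times, cycling through the given speakers."""
    clauses = [base]
    for name in islice(cycle(speakers), depth):
        clauses.append(embed(clauses[-1], name))
    return [as_sentence(c) for c in clauses]


sentences = chain("the cat died", ["Alice", "Bob"], depth=2)
for s in sentences:
    print(s)
# The cat died.
# Alice said that the cat died.
# Bob said that Alice said that the cat died.

# Every application yields a strictly longer sentence, so for any depth
# there is always a longer sentence at depth + 1.
longer = chain("the cat died", ["Alice", "Bob"], depth=50)
assert all(len(a) < len(b) for a, b in zip(longer, longer[1:]))
```

Nothing in the sketch bounds `depth`; any practical limit on sentence length would come from memory or patience, not from the rule itself.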

Regardless of the details, a generic prediction should be that there is no longest sentence in a language whose grammar is recursive. This doesn’t mean that one can say an arbitrarily long sentence in real life⁴. Rather, one can say that, given a member of some large set of sentences, one can always extend it. Everett takes the claim “All natural human languages have recursion.” to mean that, if there exists a natural human language without recursion, the claim is false. Or, slightly more subtly, if there exists a language which uses recursion so minimally that linguists have a hard time determining whether a corpus of linguistic data falsifies it or not, sentence-level recursion is probably not a bedrock principle of human languages. I found the following anecdote from a 2012 paper of Everett’s enlightening:

He does explicitly claim (in the aforementioned paper and elsewhere) that Pirahã probably has a longest sentence, which is about the most generic anti-recursion statement one can make. Chomsky and linguists working in his tradition sometimes write in a way consistent with Everett’s conception of recursion, but sometimes don’t. For example, consider this random 2016 blogpost I found by a linguist in training:

To be clear, this usage of ‘recursion’ seems consistent with how many other Chomskyan linguists have used the term. And with all due respect to these researchers, I find this notion of recursion completely insane, because it would imply (i) that any language with more than one word in its sentences has recursion, and (ii) that all sentences are necessarily constructed recursively. The first implication means that “All natural human languages have recursion.” reduces to the vacuously true claim that “All languages allow more than one word in their sentences.”⁵ The second idea is more interesting, because it relates to how the brain constructs sentences, but as far as I can tell this claim cannot be tested using purely observational linguistic data. One would have to do some kind of experiment to check the order in which subjects mentally construct sentences, and ideally make brain activity measurements of some sort.

Aside from sometimes involving a strange notion of recursion, another feature of the Chomskyan response to Everett relates to the distinction we discussed earlier between so-called E-languages and I-languages. Consider the following exchange from a 2012 interview with Chomsky:

Chomsky makes claims like this elsewhere too. The argument is that, even if there were a language without a recursive grammar, this is not inconsistent with his theory, since his theory is not about E-languages like English or Spanish or Pirahã. His theory only makes claims about I-languages, or equivalently about our innate language capabilities.

But this is kind of a dumb rhetorical move. Either the theory makes predictions about real languages or it doesn’t. The statement that some languages in the world are arguably recursive is not a prediction; it’s an observation, and we didn’t need the theory to make it. What does it mean for the grammar of I-languages, which exist only inside our heads, to be recursive? How do we test this? Can we test it by doing experiments involving real linguistic data, or not? If not, are we even still talking about language?

To this day, as one might expect, not everyone agrees with Everett that (i) Pirahã lacks a recursive hierarchical grammar, and that (ii) such a discovery would have any bearing at all on the truth or falsity of Chomskyan universal grammar. Given that languages can be pretty weird, among other reasons, I am inclined to side with Everett here.

But where does that leave us? We do not just want to throw bombs and tell everyone their theories are wrong. Does Everett have an alternative to the Chomskyan account of what language is and where it came from? Yes, and it turns out he’s been thinking about this for a long time. How Language Began is his 2017 offering in this direction.

IV. THE BOOK

So what is language, anyway? Everett writes: (How Language Began, Ch. 1, pg. 15)

Okay, so far, so good. To the uninitiated, it looks like Everett is just listing all of the different things that are involved in language; so what? The point is that language is more than just grammar. He goes on to say this explicitly: (How Language Began, Ch. 1, pg. 16)

His paradigmatic examples here are Pirahã and Riau Indonesian, which appears to lack a hierarchical grammar, and which moreover apparently lacks a clear noun/verb distinction. You might ask: what does that even mean? I’m not 100% sure, since the linked Gil chapter appears formidable, but Wikipedia gives a pretty good example in the right direction:

Is “chicken” the subject of the sentence, the object of the sentence, or something else? Well, it depends on the context. What’s the purpose of language? Communication: (How Language Began, Introduction, pg. 5)

Did language emerge suddenly, as it does in Chomsky’s proposal, or gradually? Very gradually: (How Language Began, Introduction, pg. 7-8)

So far, we have a bit of a nothingburger. Language is for communication, and probably (like everything else!) emerged gradually over a long period of time. While these points are interesting as a contrast to Chomsky, they are not that surprising in and of themselves.

But Everett’s work goes beyond bolstering common-sense ideas about language origins. Two points he discusses at length are worth briefly exploring here. First, he offers a much more specific account of the emergence of language than Chomsky does, drawing on a mix of evidence from paleoanthropology, evolutionary biology, linguistics, and more. Second, he pretty firmly takes the anti-Chomsky view on whether language is innate: (Preface, pg. xv)

These two points are not unrelated. Everett’s core idea is that language should properly be thought of as an invention rather than an innate human capability. You might ask: who invented it? Who shaped it? Lots of people, collaboratively, over a long time. In a word, culture. As Everett notes in the preface, “Language is the handmaiden of culture.” In any case, let’s discuss these points one at a time. First: the origins of language. There are a number of questions one might want to answer about how language began:

To Everett, the most important feature of language is not grammar or any particular properties of grammar, but the fact that it involves communication using symbols. What are symbols? (Ch. 1, pg. 17)

There are often rules for arranging symbols, but given how widely they can vary in practice, Everett views such rules as interesting but not fundamental. One can have languages with few rules (e.g., Riau) or complex rules (e.g., German); the key requirement for a language is that symbols are used to convey meaning. Where did symbols come from? To address this question, Everett adapts a theory due to the (in his view underappreciated) American polymath Charles Sanders Peirce: semiotics, the theory of signs. What are signs? (Ch. 1, pg. 16)

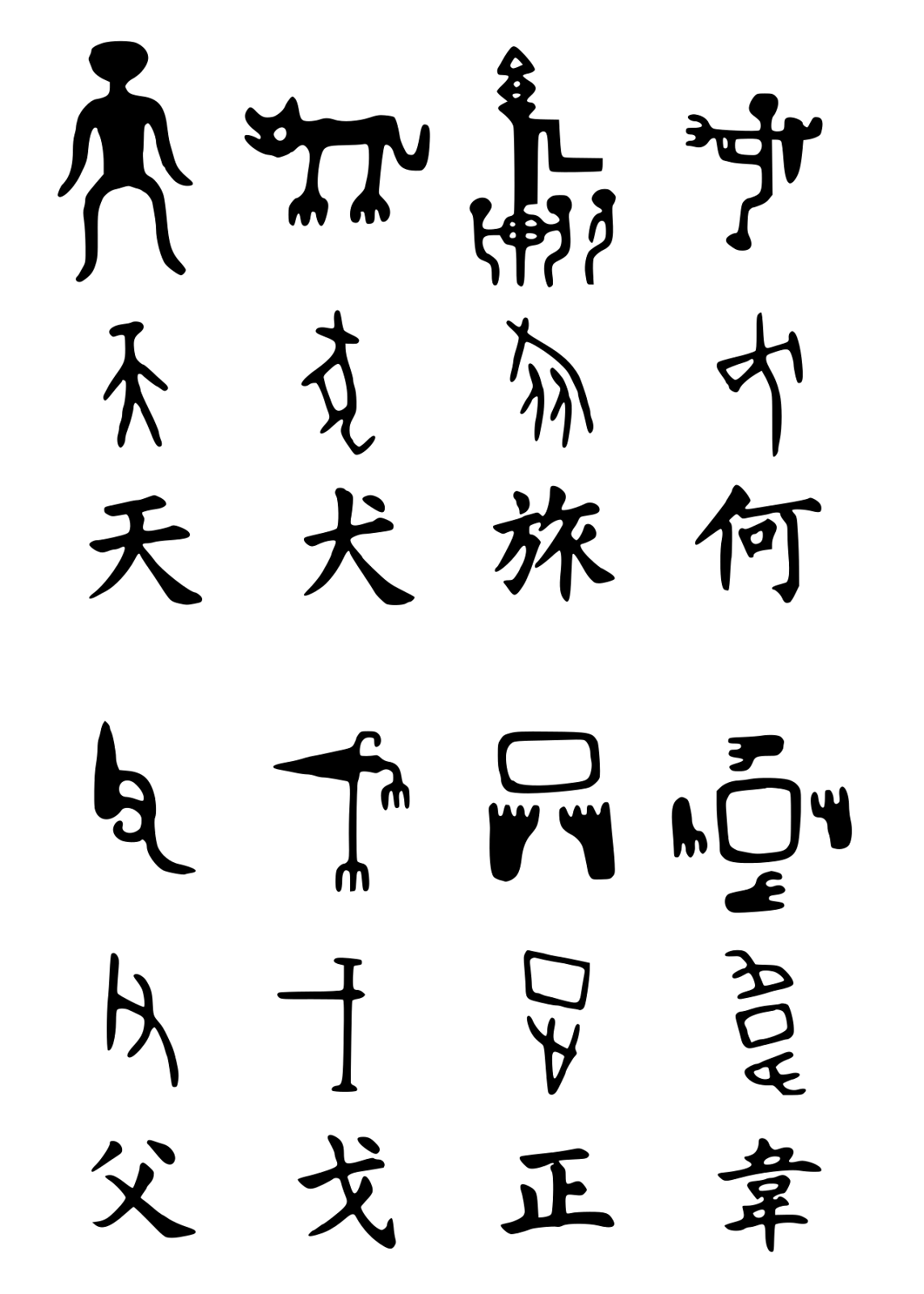

Everett, in the tradition of Peirce, distinguishes between several types of signs. The distinction is based on (i) whether the pairing is intentional, and (ii) whether the form of the sign is arbitrary. Indexes are non-intentional, non-arbitrary pairings of form and meaning (think: dog paw print). Icons are intentional, non-arbitrary pairings of form and meaning (think: a drawing of a dog paw print). Symbols are intentional, arbitrary pairings (think: the word “d o g” refers to a particular kind of real animal, but does not resemble anything about it).

Everett argues that symbols did not appear out of nowhere, but rather arose from a natural series of abstractions of concepts relevant to early humans. The so-called ‘semiotic progression’ that ultimately leads to symbols looks something like this:

indexes (dog paw print) -> icons (drawing of dog paw print) -> symbols (“d o g”)

This reminds me of what little I know about how written languages changed over time. For example, many Chinese characters used to look a lot more like the things they represented (icon-like), but became substantially more abstract (symbol-like) over time:
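The two-feature scheme for signs described above can be tabulated directly. The Python sketch below assumes only the three named categories and the (intentional, arbitrary) features given for each; `Sign`, `FEATURES`, and `classify` are hypothetical names. Writing it out shows that each step of the semiotic progression flips exactly one feature, and that one combination (non-intentional but arbitrary) is left without a name in this summary.

```python
from enum import Enum
from typing import Optional


class Sign(Enum):
    INDEX = "index"    # e.g., a dog paw print
    ICON = "icon"      # e.g., a drawing of a dog paw print
    SYMBOL = "symbol"  # e.g., the word "dog"


# (intentional, arbitrary) for each named sign type, as summarized above.
FEATURES = {
    Sign.INDEX: (False, False),
    Sign.ICON: (True, False),
    Sign.SYMBOL: (True, True),
}


def classify(intentional: bool, arbitrary: bool) -> Optional[Sign]:
    """Look up the sign type for a pairing of form and meaning.

    Returns None for (non-intentional, arbitrary), a combination the
    three-way scheme described here does not name.
    """
    for sign, feats in FEATURES.items():
        if feats == (intentional, arbitrary):
            return sign
    return None


# The 'semiotic progression': each step changes exactly one feature.
PROGRESSION = [Sign.INDEX, Sign.ICON, Sign.SYMBOL]
for before, after in zip(PROGRESSION, PROGRESSION[1:]):
    changed = [
        name
        for name, a, b in zip(("intentional", "arbitrary"), FEATURES[before], FEATURES[after])
        if a != b
    ]
    print(f"{before.value} -> {after.value}: becomes {changed[0]}")
# index -> icon: becomes intentional
# icon -> symbol: becomes arbitrary
```

Treating the features as booleans is itself a simplification; as the review notes, the boundaries between these categories appear to be gradual in practice.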

For a given culture and concept, the icon-to-symbol transition could’ve happened any number of ways. For example, early humans could’ve mimicked an animal’s cry to refer to it (icon-like, since this evokes a well-known physical consequence of some animal’s presence), but then gradually shifted to making a more abstract sound (symbol-like) over time. The index (non-intentional, non-arbitrary) to icon transition must have happened even earlier. This refers to whatever process led early humans to, for example, mimic a given animal’s cry in the first place, or to draw people on cave walls, or to collect rocks that resemble human faces.

Is there a clear boundary between indexes, icons, and symbols? It doesn’t seem like it, since things like Chinese characters changed gradually over time. But Everett doesn’t discuss this point explicitly.

Why did we end up with certain symbols and not others? Well, there’s no good a priori reason to prefer “dog” over “perro” or “adsnofnowefn”, so Everett attributes the selection mostly to cultural forces. Everett suggests these forces shape language alongside practical considerations, like the fact that, all else being equal, we prefer words that are not hundreds of characters long, because they would be too annoying to write or speak.

When did language, in the sense of communication using symbols, begin? Everett makes two kinds of arguments here. One kind of argument is that certain feats are hard enough that they probably required language in this sense. Another kind of argument relates to how we know human anatomy has physically changed on evolutionary time scales. The feats Everett talks about are things like traveling long distances across continents, possibly even in a directed rather than random fashion; manufacturing nontrivial hand tools (e.g., Oldowan and Mousterian); building complex settlements (e.g., the one found at Gesher Benot Ya'aqov); controlling fire; and using boats to successfully navigate treacherous waters.
Paleoanthropological evidence suggests that, long before sapiens arose, our predecessors Homo erectus did all of these things. Everett argues that they might have had language over one million years ago⁶. This differs from Chomsky’s proposal by more than an order of magnitude, time-wise, and portrays language as something not necessarily unique to modern humans. In Everett’s view, Homo sapiens probably improved on the language technology bestowed upon them by their erectus ancestors, but did not invent it.

Everett’s anatomy arguments relate mainly to the structure of the head and larynx (our ‘voice box’, an organ that helps us flexibly modulate the sounds we produce). Over the past two million years, our brains got bigger, our face and mouth became more articulate, our larynx changed in ways that gave us a clearer and more elaborate inventory of sounds, and our ears became better tuned to hearing those sounds. Here’s the kind of thing Everett writes on this topic: (Ch. 5, pg. 117)

Pretty neat and not something I would’ve thought about. What aspects of biology best explain all of this? Interestingly, at no point does Everett require anything like Chomsky’s faculty of language; his view is that language was primarily enabled by early humans being smart enough to make a large number of useful symbol-meaning associations, and social enough to perpetuate a nontrivial culture. Everett thinks cultural pressures forced humans to evolve bigger brains and better communication apparatuses (e.g., eventually giving us modern hyoid bones to support clearer speech), which drove culture to become richer, which drove yet more evolution, and so on.

Phew. Let’s go back to the question of innateness before we wrap up. Everett’s answer to the innateness question is complicated and in some ways subtle. He agrees that certain features of the human anatomy evolved to support language (e.g., the pharynx and ears). He also agrees that modern humans are probably much better than Homo erectus at working with language, if indeed Homo erectus did have language. He mostly seems to take issue with the idea that some region of our brain is specialized for language. Instead, he thinks that our ability to produce and comprehend language is due to a mosaic of generally useful cognitive capabilities, like our ability to remember things for relatively long times, our ability to form and modify habits, and our ability to reason under uncertainty. This last capability seems particularly important since, as Everett points out repeatedly, most language-based communication is ambiguous, and it is important for participants to exploit cultural and contextual information to more reliably infer the intended messages of their conversation partners. Incidentally, this is a feature of language Chomskyan theory tends to neglect⁷.

Can’t lots of animals do all those things? Yes. Everett views the difference as one of degree, not necessarily of kind.
What about language genes like FOXP2 and putative language areas like Broca’s and Wernicke’s areas? What about specific language impairments? Aren’t they clear evidence of language-specific human biology? Well, FOXP2 appears to be more related to speech control, which is a motor task. Broca’s and Wernicke’s areas are both involved in coordinating motor activity unrelated to speech. Specific language impairments, contrary to their name, also involve some other kind of deficit in the cases Everett knows of.
I have to say, I am not 100% convinced by the brain arguments. I mean, come on, look at the videos of people with Broca’s aphasia or Wernicke’s aphasia. Sure, I buy that Broca’s and Wernicke’s areas (or whatever other putative language areas are out there) are active during non-language-related behavior, or that they represent non-language-related variables. But this is also true of literally every other area we know of in the brain, including well-studied sensory areas like the primary visual cortex. It’s no longer news when people find variable X encoded in region Y-not-typically-associated-with-X. Still, I can’t dismiss Everett’s claim that there is no language-specific brain area. At this point, it’s hard to tell. The human brain is complicated, and there remains much that we don’t understand.
Overall, Everett tells a fascinatingly wide-ranging and often persuasive story. If you’re interested in what language is and how it works, you should read How Language Began. There’s a lot of interesting stuff in there I haven’t talked about, especially for someone unfamiliar with at least one of the areas Everett covers (evolution, paleoanthropology, theoretical linguistics, neuroanatomy, …). Especially fun are the chapters on aspects of language I don’t hear people talk about as much, like gestures and intonation. As I’ve tried to convey, Everett is well-qualified to write something like this, and has been thinking about these topics for a long time.
He’s the kind of linguist most linguists wish they could be, and he’s worth taking seriously, even if you don’t agree with everything he says.
V. THE REVELATIONS
I want to talk about large language models now. Sorry. But you know I had to do this. Less than two years ago at the time of writing, the shocking successes of ChatGPT put many commentators in an awkward position. Beyond all the quibbling about details (Does ChatGPT really understand? Doesn’t it fail at many tasks trivial for humans? Could ChatGPT or something like it be conscious?), the brute empirical fact remains that it handles language comprehension and generation pretty well. And this is despite the conception of language underlying it (language use as a statistical learning problem, with no sentence diagrams or grammatical transformations in sight) being somewhat antithetical to the Chomskyan worldview.
Chomsky has frequently criticized the statistical learning tradition, with his main criticisms seeming to be that (i) statistical learning produces systems with serious defects, and (ii) succeeding at engineering problems does not tell us anything interesting about how the human brain handles language. These are reasonable criticisms, but I think they are essentially wrong. Statistical approaches succeeded where more directly Chomsky-inspired approaches failed, and it was never close. Large language models (LLMs) like ChatGPT are not perfect, but they’re getting better all the time, and the onus is on the critics to explain where they think the wall is. It’s conceivable that a completely orthogonal system designed according to the principles of universal grammar could outperform LLMs built according to the current paradigm, but this possibility is becoming vanishingly unlikely. Why do statistical learning systems handle language so well?
If Everett is right, the answer is in part because (i) training models on a large corpus of text and (ii) providing human feedback both give models a rich collection of what is essentially cultural information to draw upon. People like talking with ChatGPT not just because it knows things, but because it can talk like them. And that is only possible because, like humans, it has witnessed and learned from many, many, many conversations between humans. Statistical learning also allows these systems to appreciate context and reason under uncertainty, at least to some extent, since both of these are crucial factors in many of the conversations that appear in training data. These capabilities would be extremely difficult to implement by hand, and it’s not clear how a more Chomskyan approach would handle them, even if some kind of universal-grammar-based latent model otherwise worked fairly well.
Chomsky’s claim that engineering success does not necessarily produce scientific insight is not uncommon, but a large literature speaks against it. And funnily enough, given that he is ultimately interested in the mind, engineering successes have provided some of our most powerful tools for interrogating what the mind might look like. The key point is that artificial systems engineered to perform some particular task well are not black boxes; we can look inside them and tinker as we please. Studying the internal representations and computations of such networks has provided neuroscience with crucial insights in recent years, and such approaches are particularly helpful given how costly neuroscience experiments (which might involve, e.g., training animals and buying expensive recording equipment) can be. Lots of recent computational neuroscience follows this blueprint: build a recurrent neural network to solve a task neuroscientists study, train it somehow, then study its internal representations to generate hypotheses about what the brain might be doing.
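The train-then-look-inside blueprint can be illustrated with a deliberately tiny, text-based stand-in. The sketch below is an assumption-laden toy, not a recurrent network and not how LLMs are actually probed: it “trains” on a made-up four-sentence corpus by counting which words appear immediately before and after each word (plain statistical learning, no grammar rules), then inspects the resulting representations by comparing words with cosine similarity, an idea usually called distributional similarity.

```python
from collections import Counter, defaultdict
from math import sqrt
from typing import Dict, List


def train_context_counts(sentences: List[str]) -> Dict[str, Counter]:
    """'Training': for each word, count which words immediately precede and follow it."""
    contexts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in sentences:
        # Sentence boundary markers give first and last words a neighbor too.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for i in range(1, len(tokens) - 1):
            word = tokens[i]
            contexts[word]["prev:" + tokens[i - 1]] += 1
            contexts[word]["next:" + tokens[i + 1]] += 1
    return contexts


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# A hypothetical toy corpus; real language models see vastly more text.
corpus = [
    "the dog chased the cat",
    "the cat chased the mouse",
    "the dog saw the cat",
    "a mouse saw a dog",
]
reps = train_context_counts(corpus)

# "Look inside": compare a noun with another noun, and a noun with a verb.
print(round(cosine(reps["dog"], reps["cat"]), 2))
print(round(cosine(reps["dog"], reps["chased"]), 2))
```

On this corpus the two nouns end up with similar context vectors, while the noun and the verb share no context features at all, so something like a grammatical category shows up in the learned statistics even though nothing in the code mentions nouns or verbs.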
In principle, (open-source) LLMs and their internal representations can be interrogated in precisely the same way. I’m not sure what’s been done already, but I’m confident that work along these lines will become more common in the near future. Given that high-quality recordings of neural dynamics during natural language use are hard to come by, studying LLMs might be essential for understanding the neural computations underlying human language.
When we peer inside language-competent LLMs, what will we find? This is a topic Everett doesn’t have much to say about, and on which Chomsky might actually be right. Whether we’re dealing with the brain or artificial networks, we can talk about the same thing at many different levels of description. In the case of the brain, we might talk in terms of interacting molecules, networks of electrically active neurons, or many other effective descriptions. In the case of artificial networks, we can talk about individual ‘neurons’, or about some higher-level description that better captures the essential character of the underlying algorithm. Maybe LLMs, at least when trained on data from languages whose underlying rules can be parsimoniously described using universal grammar, effectively exploit sentence diagrams or construct recursive hierarchical representations of sentences using an operation like Merge. If anything like that is true, formalisms like Chomsky’s may still provide a useful way of talking about what LLMs do. Such descriptions might be said to capture the ‘mind’ of an LLM, since from a physicalist perspective the ‘mind’ is just a useful way of talking about a complex system of interacting neurons.
Regardless of who’s right and who’s wrong, the study of language is certainly interesting, and we have a lot more to learn. Something Chomsky wrote in 1968 seems like an appropriate summary of the way forward: (Language and Mind, pg. 1)

1 Chomsky 1991b refers to “Linguistics and adjacent fields: a personal view”, a chapter of The Chomskyan Turn. I couldn’t access the original text, so this quote-of-a-quote will have to do.
2 Chomsky’s domination of linguistics is probably due to a combination of factors. First, he is indeed brilliant and prolific. Second, Chomsky’s theories promised to ‘unify’ linguistics and make it more like physics and other ‘serious’ sciences; for messy fields like linguistics, I assume this promise is extremely appealing. Third, he helped create and successfully exploited the cognitive zeitgeist that for the first time portrayed the mind as something that can be scientifically studied in the same way that atoms and cells can. Moreover, he was one of the first to make interesting connections between our burgeoning understanding of fields like molecular biology and neuroscience on the one hand, and language on the other. Fourth, Chomsky was not afraid to get into fights, which can be beneficial if you usually win.
3 One such sound is the bilabial trill, which kind of sounds like blowing a raspberry.
4 This reminds me of a math joke.
5 Why is this vacuously true? If, given some particular notion of ‘sentence’, the sentences of any language could only have one word at most, we would just define some other notion of ‘word collections’.
6 He and archaeologist Lawrence Barham provide a more self-contained argument in this 2020 paper.
7 A famous line at the beginning of Chomsky’s Aspects of the Theory of Syntax goes: “Linguistic theory is concerned primarily with an ideal speaker-listener, in a completely homogeneous speech community, who knows its language perfectly and is unaffected by such grammatically irrelevant conditions as memory limitations, distractions, shifts of attention and interest, and errors (random or characteristic) in applying his knowledge of the language in actual performance.”