
Hello! Once a week, we write this email to share the links we thought were worth sharing in the Data Science, ML, AI, Data Visualization, and ML/Data Engineering worlds.

And now…let's dive into some interesting links from this week.

The Rise of the Declarative Data Stack

Data stacks have come a long way, evolving from monolithic, one-size-fits-all systems like Oracle/SAP to today's modular open data stacks. This raises the question: what's next? And why is the current stack not meeting our needs? As we see more analytics engineering and software best practices, embracing codeful, Git-based, and more CLI-based workflows, the future looks more code-first, not only for SQL transformations but across the entire data stack. From ingestion to transformation, orchestration, and measures in dashboards, everything is defined declaratively. But what does this shift towards declarative data stacks mean? How does it change how we build and manage data stacks? And what are the implications for us data professionals? Let's find out in this article…

Alternatives to cosine similarity

Cosine similarity is the recommended way to compare vectors, but what other distance functions are there? And are any of them better?…Last month we looked at how cosine similarity works and how we can use it to calculate the "similarity" of two vectors. But why choose cosine similarity over any other distance function? Why not use Euclidean distance, or Manhattan, or Chebyshev? In this article we'll dig into some alternative methods for comparing vectors, and see how they compare to cosine similarity. Are any of them faster? Are any of them more accurate?…
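For readers who want a quick feel for the functions named above, here is a minimal sketch using their textbook definitions in plain Python. It is not taken from the linked article, and the function names are our own. Note that cosine similarity is a similarity (higher means closer), while the other three are distances (lower means closer).

```python
import math

def cosine_similarity(a, b):
    # Dot product divided by the product of the vector lengths.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def euclidean(a, b):
    # Straight-line (L2) distance.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def manhattan(a, b):
    # Sum of absolute coordinate differences (L1).
    return sum(abs(x - y) for x, y in zip(a, b))

def chebyshev(a, b):
    # Largest absolute coordinate difference (L-infinity).
    return max(abs(x - y) for x, y in zip(a, b))
```

Cosine similarity ignores vector length, so `[1, 2, 3]` and `[2, 4, 6]` count as identical in direction, while the three distances all report them as different points.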

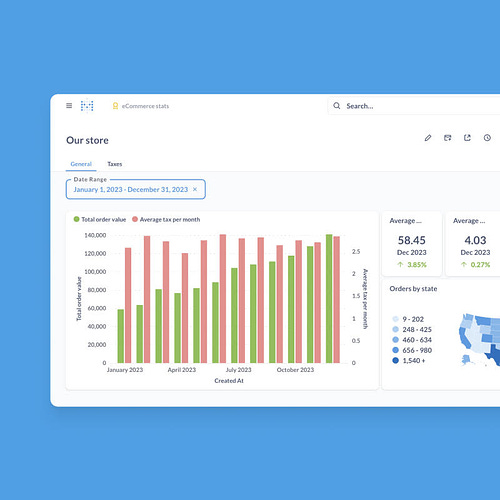

Metabase’s intuitive BI tools empower your team to effortlessly report and derive insights from your data. Compatible with your existing data stack, Metabase offers both self-hosted and cloud-hosted (SOC 2 Type II compliant) options. In just minutes, most teams connect to their database or data warehouse and start building dashboards, no SQL required. With a free trial and super affordable plans, it's the go-to choice for venture-backed startups and over 50,000 organizations of all sizes. Empower your entire team with Metabase. Read more.

* Want to sponsor the newsletter? Email us for details --> team@datascienceweekly.org

Why do Random Forests Work? Understanding Tree Ensembles as Self-Regularizing Adaptive Smoothers

Despite their remarkable effectiveness and broad application, the drivers of success underlying ensembles of trees are still not fully understood. In this paper, we highlight how interpreting tree ensembles as adaptive and self-regularizing smoothers can provide new intuition and deeper insight to this topic. We use this perspective to show that, when studied as smoothers, randomized tree ensembles not only make predictions that are quantifiably more smooth than the predictions of the individual trees they consist of, but also further regulate their smoothness at test-time based on the dissimilarity between testing and training inputs…

What life science can learn from self-driving

As the self-driving industry matures, there’s an ongoing migration of excitement and talent to other applications of AI, including the life sciences. This talent cross-pollination will lead to a shared language and a transfer of lessons between the two efforts. We believe the history of self-driving can serve as a guide: AI in life science will go through a similar period of inflated expectations, followed by the accumulation of gradual successes that redefine the industry…Based on our experience, we suggest four lessons learned by the self-driving industry that we believe also apply to AI for drug discovery and development…

How WHOOP Built and Launched a Reliable GenAI Chatbot

We’re suckers for a good self-serve data product, especially when it’s powered by GenAI. So we were thrilled when Matt sat down with our Field CTO Shane Murray at the 18th annual CDOIQ Symposium to share how his team is leveraging LLMs to deliver reliable insights to stakeholders throughout the organization. Here are a few key insights from their conversation…

A Primer on the Inner Workings of Transformer-based Language Models

This primer provides a concise technical introduction to the current techniques used to interpret the inner workings of Transformer-based language models, focusing on the generative decoder-only architecture. We conclude by presenting a comprehensive overview of the known internal mechanisms implemented by these models, uncovering connections across popular approaches and active research directions in this area…

The Prompt() Function: Use the Power of LLMs with SQL

This democratization of AI has reached a stage where integrating small language models (SLMs) like OpenAI’s gpt-4o-mini directly into a scalar SQL function has become practicable from both cost and performance perspectives…Therefore we’re thrilled to announce the prompt() function, which is now available in Preview on MotherDuck. This new SQL function simplifies using LLMs and SLMs with text to generate, summarize, and extract structured data without the need for separate infrastructure…

Multi Objective Optimisation in Suggestions Ranking at Flipkart

At the scale of Flipkart’s massive active user base, our autosuggest system prompts about a billion Search queries each day. This increases manifold during sale events such as The Big Billion Days. Autosuggest is a typical Information Retrieval (IR) system that matches, ranks, and presents search queries as suggestions to reduce the users’ typing effort and make their shopping journey easier…We realized that ranking of suggestions should also optimize for funnel metrics along with user preference to offer an optimal end-to-end shopping experience to the user…As part of understanding the solution, it is important to understand the ranking stack…

Last Mile Data Processing with Ray

Our mission at Pinterest is to bring everyone the inspiration to create the life they love. Machine Learning plays a crucial role in this mission. It allows us to continuously deliver high-quality inspiration to our 460 million monthly active users, curated from billions of pins on our platform. Behind the scenes, hundreds of ML engineers iteratively improve a wide range of recommendation engines that power Pinterest, processing petabytes of data and training thousands of models using hundreds of GPUs…In this blogpost, we will share our assessment of the ML developer velocity bottlenecks and delve deeper into how we adopted Ray, the open source framework to scale AI and machine learning workloads, into our ML Platform to improve dataset iteration speed from days to hours, while improving our GPU utilization to over 90%…

Marketing Mix Modeling (MMM): How to Avoid Biased Channel Estimates

“How will sales be impacted by an X Dollar investment in each marketing channel?” This is the causal question a Marketing-Mix-Model should answer in order to guide companies in deciding how to attribute their marketing channel budgets in the future…In this article, I want to address this issue and give guidance on how to determine which variables should and should not be taken into account in your MMM…

Is Augmentation Effective in Improving Prediction in Imbalanced Datasets?

In this paper, we challenge the common assumption that data augmentation is necessary to improve predictions on imbalanced datasets. Instead, we argue that adjusting the classifier cutoffs without data augmentation can produce similar results to oversampling techniques. Our study provides theoretical and empirical evidence to support this claim…

Meaning and Intelligence in Language Models: From Philosophy to Agents in a World

In this talk, I want to take a look backward at where language models came from and why they were so slow to emerge, a look inward to give my thoughts on meaning, intelligence, and what language models understand and know, and a look forward at what we need to build intelligent language-using agents in a world. I will argue that material beyond language is not necessary to having meaning and understanding, but it is very useful in most cases, and that adaptability and learning are vital to intelligence, and so the current strategy of building from huge curated data will not truly get us there, even though LLMs have so many good uses…

Unit Disk Uniform Sampling

Discover the optimal transformations to apply on the standard [0,1] uniform random generator for uniformly sampling a 2D disk…This article focuses on uniformly sampling the 2D unit disk and visualizing how transformations applied to a standard [0,1] uniform random generator create different distributions. We’ll also explore how these transformations, though yielding the same distribution, affect Monte Carlo integration by introducing distortion, leading to increased variance…
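As a rough illustration of the idea, the sketch below shows two standard ways to turn a [0,1] uniform generator into uniform samples on the unit disk. These are common textbook approaches and may not match the exact transformations the article covers; the function names are our own. The square root in the polar version matters: using the raw uniform value as the radius would cluster points near the center.

```python
import math
import random

def sample_disk_polar(rng):
    # Radius is the square root of a uniform draw so that area,
    # not radius, is uniformly distributed.
    r = math.sqrt(rng.random())
    theta = 2.0 * math.pi * rng.random()
    return r * math.cos(theta), r * math.sin(theta)

def sample_disk_rejection(rng):
    # Draw from the enclosing square [-1, 1] x [-1, 1] and retry
    # until the point falls inside the disk.
    while True:
        x = 2.0 * rng.random() - 1.0
        y = 2.0 * rng.random() - 1.0
        if x * x + y * y <= 1.0:
            return x, y

rng = random.Random(0)  # fixed seed, chosen only for repeatability
polar_points = [sample_disk_polar(rng) for _ in range(20000)]
rejection_points = [sample_disk_rejection(rng) for _ in range(20000)]
```

A quick sanity check for either sampler: about a quarter of the points should land within radius 0.5, since that inner disk covers a quarter of the total area.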

Statistics should serve the public not just governments

The UK has a well-respected and extensive official statistics system, but does this system lean too heavily towards the needs of government at the expense of public use? Outlining a vision of statistics that function in an official capacity as well as meeting the problem-oriented needs of the public, Paul Allin sets out why the Royal Statistical Society is launching a new campaign for public statistics…


* Based on unique clicks.

** Find last week's issue #568 here.

Looking to get a job? Check out our “Get A Data Science Job” Course

It is a comprehensive course that teaches you everything related to getting a data science job based on answers to thousands of emails from readers like you. The course has 3 sections: Section 1 covers how to get started, Section 2 covers how to assemble a portfolio to showcase your experience (even if you don’t have any), and Section 3 covers how to write your resume.

Promote yourself/organization to ~63,700 subscribers by sponsoring this newsletter. 35-45% weekly open rate.

Thank you for joining us this week! :) Stay Data Science-y! All our best,

Hannah & Sebastian
