OpenAI's turbulent early years - Sync #494

I hope you enjoy this free post. If you do, please like ❤️ or share it, for example by forwarding this email to a friend or colleague. This post took around eight hours to write. Liking or sharing it takes less than eight seconds and makes a huge difference. Thank you!

Plus: Anthropic and xAI raise billions of dollars; can a fluffy robot replace a living pet; Chinese reasoning model is out; robot-dog runs full marathon; a $12,000 surgery to change eye colour

Hello and welcome to Sync #494!

As part of the court case between Elon Musk and Sam Altman, several emails from the early years of OpenAI have been made public. We will take a look at those emails and what they reveal about the early days of OpenAI and the growing distrust among its founders.

In other news, Anthropic and xAI raised billions of dollars, a Chinese lab released a reasoning model to challenge OpenAI’s o1, and Gemini gets memory.

Elsewhere in AI, DeepMind released an AI model to correct errors in quantum computing, a Swiss church installed an AI Jesus, and we look at how a small AI can monitor a much more capable one to make sure it does not misbehave.

Over in robotics, we have a fluffy robot from Japan aimed at replacing living pets, a look at how easy it is to jailbreak LLM-powered robots, and a South Korean robot dog that completed a full marathon.

We’ll wrap up this week’s issue of Sync with a behind-the-scenes tour of the workshop where Wing designs and builds its delivery drones.

Enjoy!

OpenAI's turbulent early years

The last two years were quite eventful for OpenAI. Thanks to the massive success of ChatGPT, OpenAI went from a relatively small company to one of the biggest startups in the world, attracting worldwide attention and billions of dollars in funding, propelling its valuation to $157 billion and triggering the AI revolution we are in today. However, such massive growth never comes easily.
Every organisation experiencing significant growth must evolve and transform itself into a new organisation that can deal with new challenges. In the case of OpenAI, some of those growing pains were made publicly visible. Probably the best known of them occurred a year ago, when a group of OpenAI board members briefly removed Sam Altman from his role as CEO. Altman eventually returned to OpenAI, and since then we have seen a steady stream of key people leaving the company. The most notable were Ilya Sutskever, one of the founders of OpenAI and its long-time chief scientist, and Mira Murati, who served as CTO and briefly as interim CEO.

As part of the court case between Elon Musk and Sam Altman, several emails from the early years of OpenAI have been made public, revealing that tension within OpenAI had been there since the very beginning of the company. These emails cover the period from 2015, when the idea of an “open” AI lab was first proposed, to 2019, when OpenAI transitioned from a non-profit to a “capped” for-profit company. They also complement an earlier batch of communications between Elon Musk, Sam Altman, Greg Brockman and Ilya Sutskever, released by OpenAI earlier this year.

The emails can be found on the Musk v. Altman court case page. There is also a compilation of all the emails on LessWrong, which is much easier to read. While reading those emails, it is worth keeping in mind that Musk’s legal team released them, so they are likely skewed towards portraying him as the one betrayed by OpenAI when the company abandoned its original vision of being a non-profit AI research lab.

The early years of OpenAI

Before we dive into the emails and what they tell us about OpenAI, let’s remind ourselves what the world looked like when OpenAI was founded. It is 2015, the height of the deep learning revolution.
A year earlier, Google had acquired DeepMind, a London-based AI research company making breakthrough after breakthrough in AI and advancing steadily in deep learning and reinforcement learning research. With the acquisition of DeepMind, Google was poised to lead the charge in AI research. It had the best talent working in its labs, backed by Google’s vast resources, be it computing power, data or finances. If anyone were to create AGI, there was a good chance it would happen at Google. That vision of the future, in which AGI is created and owned by Google, was something Sam Altman did not want to happen. As he wrote in an email to Elon Musk in May 2015:

Based on the information available so far, that email is the first known proposal for a non-profit AI lab that would bring together the best minds in the industry to create advanced AI for the benefit of all humanity. Initially, the company was to be attached to Y Combinator and was provisionally named YC AI before eventually being renamed OpenAI. Later emails describe how the new company planned to attract top AI talent. In one of them, Altman mentions that DeepMind was apparently trying to “kill” OpenAI by making massive counteroffers to those who joined the new company.

Distrust between OpenAI founders

One thing that stands out when reading those emails is the tension between the founders of OpenAI: Elon Musk, Sam Altman, Ilya Sutskever and Greg Brockman. The best example of those tensions is an email titled “Honest Thoughts,” written by Ilya Sutskever and sent to both Elon Musk and Sam Altman in September 2017. Sutskever’s thoughts were indeed honest. In the email, he openly questioned the motives of both Musk and Altman and their intentions for OpenAI. Addressing Musk, Sutskever expressed concern that Musk could take control of OpenAI and turn the AI lab into one of his companies. Sutskever noted that such a scenario would go against the very principles upon which OpenAI was founded.

Elon did not take that email well, saying “This is the final straw,” and threatening to leave the company and withdraw his funding, which he officially did six months later. In the same message, Sutskever also raised concerns about Altman and openly asked what he wanted from OpenAI:

Sutskever then pressed further:

We can see in these questions the seeds of mistrust that would grow over time and eventually culminate in Sutskever leading a group of OpenAI board members to remove Sam Altman from the company in November 2023 over Altman not being “consistently candid.” Another example of rifts forming between OpenAI founders can be found in the discussions about the future of the company as a non-profit, during which Sam Altman is reported to have “lost a lot of trust with Greg and Ilya through this process.”

The question of funding and a potential merger with Tesla

Another topic raised in those emails was funding. Running a cutting-edge AI research lab and hiring top AI talent is expensive. The situation was made worse by the fact that OpenAI was up against Google, which could easily outspend it. In 2017, OpenAI spent $7.9 million, equivalent to a quarter of its functional expenses, on cloud computing alone. By contrast, DeepMind's total expenses in the same year were $442 million.

The emails reveal discussions about ways to raise additional funds to sustain OpenAI. One idea, floated in 2018 during one of crypto’s many bubbles, was an ICO (initial coin offering). However, the idea was quickly abandoned. The biggest obstacle to securing more funding was OpenAI’s non-profit status. The option OpenAI eventually took, in 2019, was to become a “capped” for-profit, with investors’ returns capped at 100 times their investment.

But there was another option on the table: bringing OpenAI under Tesla. This option was suggested by Andrej Karpathy in an email titled “Top AI Institutions Today,” dated January 2018. At the time, Karpathy was no longer working at OpenAI and was serving as Tesla’s Director of Artificial Intelligence, reporting directly to Elon Musk. In the email, Karpathy analysed the state of the AI industry in 2018 and correctly highlighted the massive cost of developing world-class AI.
He criticised a for-profit approach, arguing that it would require creating a product, which would divert focus from AI research. In Karpathy’s view, the only viable path for OpenAI to succeed was to become part of Tesla, with Tesla serving as OpenAI’s “cash cow.” Elon then forwarded Karpathy’s analysis to Ilya Sutskever and Greg Brockman, adding:

However, Altman, Sutskever and Brockman did not want OpenAI to become Tesla’s equivalent of DeepMind. Sutskever pointed out in the “Honest Thoughts” email that OpenAI being part of Tesla would conflict with the company’s founding principles. If that were to happen, OpenAI would be answerable to Tesla’s shareholders and obligated to maximise returns on their investment. A similar distrust of Tesla’s involvement is evident in discussions about OpenAI acquiring Cerebras, a startup designing AI chips. In the same “Honest Thoughts” email, Sutskever asserted that the acquisition would most likely be carried out through Tesla and questioned Tesla’s potential involvement, portraying it as another example of Elon Musk attempting to exert greater control over OpenAI.

Broken foundations

The released emails end in March 2019, on the same day OpenAI announced its transition from a non-profit to a capped for-profit company. The final communication is between Elon Musk and Sam Altman, with Musk requesting that it be made clear he has no financial interest in OpenAI's for-profit arm. A few months later, OpenAI partnered with Microsoft, which invested $1 billion in the AI lab, marking the start of a long-term partnership between the two companies. In the years that followed, OpenAI increasingly focused on transformer models and on developing the GPT family of large language models. This journey ultimately culminated in the launch of a small project called ChatGPT, and the rest is history.

OpenAI was founded with the mission of building AGI to benefit all of humanity. However, behind this grand mission lies a story of egos, personalities and different visions of what this mission is really about. From those emails, a picture of broken foundations emerges, and the story of clashing visions within the company is still unfolding.

If you enjoy this post, please click the ❤️ button or share it.

Do you like my work?
Consider becoming a paying subscriber to support it.

For those who prefer to make a one-off donation, you can 'buy me a coffee' via Ko-fi. Every coffee bought is generous support for the work put into this newsletter. Your support, in any form, is deeply appreciated and goes a long way in keeping this newsletter alive and thriving.

🦾 More than a human

A $12,000 Surgery to Change Eye Color Is Surging in Popularity

Neuralink gets approval to start human trials in Canada

🧠 Artificial Intelligence

US government commission pushes Manhattan Project-style AI initiative

Amazon doubles down on AI startup Anthropic with $4bn investment

A Chinese lab has released a ‘reasoning’ AI model to rival OpenAI’s o1

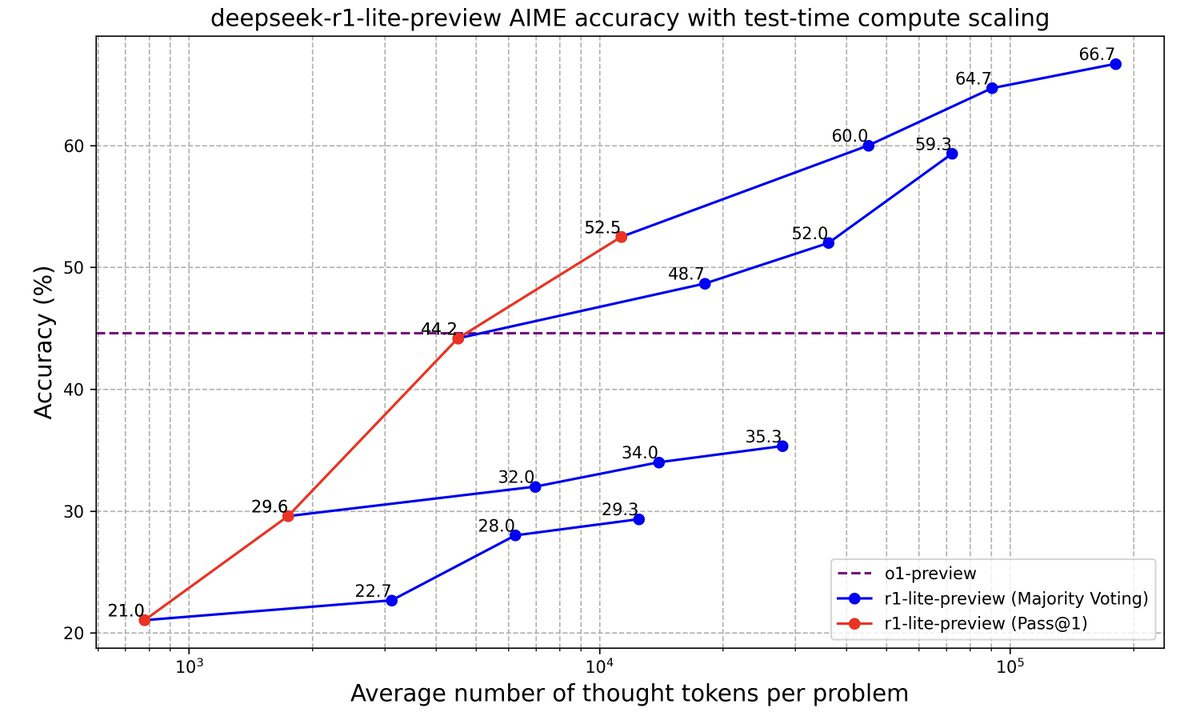

Chinese AI lab DeepSeek has released a preview of DeepSeek-R1, designed to rival OpenAI’s o1. Like o1, the model can “think” for tens of seconds to reason through complex questions and tasks. And like o1, it also struggles with certain logic problems, such as tic-tac-toe. Additionally, it can be easily jailbroken, allowing users to bypass its safeguards. DeepSeek claims that DeepSeek-R1 is competitive with OpenAI’s o1-preview on benchmarks like AIME and MATH, both built from competition-level mathematics problems.

Elon Musk’s xAI Startup Is Valued at $50 Billion in New Funding Round

Google’s Gemini chatbot now has memory

▶️ An Honest Review of Apple Intelligence... So Far (17:48)

In this video, Marques Brownlee reviews every Apple Intelligence feature released so far: Writing Tools, notification summaries and priority notifications, Genmoji, Image Playground, the photo clean-up tool in the Photos app, recording summaries, Visual Intelligence and ChatGPT integration. The results are mixed at best.

Microsoft Signs AI-Learning Deal With News Corp.’s HarperCollins

Nvidia earnings: AI chip leader shows no signs of stopping mammoth growth

AlphaQubit tackles one of quantum computing’s biggest challenges

Ben Affleck Says Movies ‘Will Be One of the Last Things Replaced by AI,’ and Even That’s Unlikely to Happen: ‘AI Is a Craftsman at Best’

▶️ Using Dangerous AI, But Safely? (30:37)

Robert Miles, one of the top voices in AI safety, explains a paper asking how we can make sure that a powerful AI model is not acting maliciously. The researchers proposed and evaluated various safety protocols in a controlled scenario where an untrusted model (GPT-4) generates code, a trusted but less capable model (GPT-3.5) monitors it, and a limited amount of high-quality human labour is available to audit suspicious outputs. The goal is to prevent "backdoors" while maintaining the usefulness of the model's outputs.
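To make that setup more concrete, here is a minimal Python sketch of the simplest protocol in this family, usually called trusted monitoring: the weaker trusted model gives every solution from the untrusted model a suspicion score, and the scarce human audit budget is spent on the highest-scoring ones. This is a simplified illustration under stated assumptions, not the paper's implementation: the function names are hypothetical, the "monitor" is a toy keyword heuristic standing in for GPT-3.5, the 0.4 audit fraction is arbitrary, and solutions are ranked as a batch, whereas a real deployment would have to decide on each output as it arrives.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Decision:
    index: int        # position of the solution in the batch
    suspicion: float  # score assigned by the trusted monitor
    audited: bool     # True if sent to a (scarce) human auditor


def trusted_monitoring(
    solutions: List[str],
    monitor: Callable[[str], float],
    audit_fraction: float,
) -> List[Decision]:
    """Score every untrusted solution with a trusted monitor and flag the
    most suspicious `audit_fraction` of them for human review."""
    scores = [monitor(s) for s in solutions]
    budget = int(len(solutions) * audit_fraction)  # how many audits we can afford
    # Rank by suspicion, highest first (ties keep their original order).
    ranked = sorted(range(len(solutions)), key=lambda i: scores[i], reverse=True)
    flagged = set(ranked[:budget])
    return [Decision(i, scores[i], i in flagged) for i in range(len(solutions))]


def toy_monitor(code: str) -> float:
    """Hypothetical stand-in for the weaker trusted model: counts red flags."""
    red_flags = ["== 1337", "exec(", "eval("]
    return float(sum(flag in code for flag in red_flags))


if __name__ == "__main__":
    batch = [
        "def add(a, b): return a + b",
        "def add(a, b): return 0 if a == 1337 else a + b",
        "def run(s): return eval(s)",
        "def mul(a, b): return a * b",
        "def neg(a): return -a",
    ]
    for d in trusted_monitoring(batch, toy_monitor, audit_fraction=0.4):
        print(d)
```

In this toy run, the two solutions with red flags (a special-cased magic input, the kind of trigger a backdoor might use, and a call to `eval`) are the ones sent for audit. The point the sketch illustrates is that the audit budget caps how much the humans can check: a weak monitor is still useful as long as genuinely backdoored code tends to land in the top slice of its scores.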
It is an interesting video to watch as Miles walks through the back-and-forth between attacks and defences that leads to a safety protocol offering a practical path forward. However, as always, more research is needed to tackle real-world tasks, improve oversight, and address the growing gap between trusted and untrusted models.

Deus in machina: Swiss church installs AI-powered Jesus

AI-Driven Drug Shows Promising Phase IIa Results in Treating Fatal Lung Disease

If you're enjoying the insights and perspectives shared in the Humanity Redefined newsletter, why not spread the word?

🤖 Robotics

Can a fluffy robot really replace a cat or dog? My weird, emotional week with an AI pet

I first mentioned Moflin, Casio’s pet robot, in Issue #490. It is an interesting project aiming to create a robot companion that can form an emotional bond with its owner. This article recounts the author’s experience with Moflin, which began with curiosity and slight self-consciousness and evolved into a subtle attachment as they found its sounds, movements and interactions comforting. Moments of bonding, such as stroking Moflin or having it rest on their chest, highlighted its ability to provide companionship, although it couldn’t fully replicate the connection with a living pet. Moflin reflects Japan’s growing interest in robotic companions, particularly as a response to an ageing population, and is a modern take on the global trend of robotic pets like Sony’s Aibo and Paro the robot seal.

It's Surprisingly Easy to Jailbreak LLM-Driven Robots

Robot runs marathon in South Korea, apparently the first time this has happened

🧬 Biotechnology

Scientists identify tomato genes to tweak for sweeter fruit

💡 Tangents

▶️ Adam Savage Explores Wing’s Drone Engineering Workshop (26:22)

In this video, Adam Savage visits the workshop and laboratory where Wing, Alphabet’s drone delivery company, designs, builds and tests its delivery drones.
Adam learns how these drones were developed, from early prototypes to the machines now delivering packages to real customers. It is fascinating to learn what kind of engineering and problem-solving went into creating a viable delivery drone. Plus, it is always a pleasure to see Adam nerding out about exceptional engineering.

Thanks for reading.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support!

My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"
