
Hello! Once a week, we write this email to share the links we thought were worth sharing in the Data Science, ML, AI, Data Visualization, and ML/Data Engineering worlds.

And now…let's dive into some interesting links from this week.

AI decodes the calls of the wild

Over the past year, AI-assisted studies have found that both African savannah elephants (Loxodonta africana) and common marmoset monkeys (Callithrix jacchus) bestow names on their companions. Researchers are also using machine-learning tools to map the vocalizations of crows. As the capability of these computer models improves, they might be able to shed light on how animals communicate, and enable scientists to investigate animals’ self-awareness — and perhaps spur people to make greater efforts to protect threatened species…

Taming LLMs - A Practical Guide to LLM Pitfalls with Open Source Software

The current discourse around Large Language Models (LLMs) tends to focus heavily on their capabilities while glossing over fundamental challenges. Conversely, this book takes a critical look at the key limitations and implementation pitfalls that engineers and technical product managers encounter when building LLM-powered applications. Through practical Python examples and proven open source solutions, it provides an introductory yet comprehensive guide for navigating these challenges. The focus is on concrete problems - from handling unstructured output to managing context windows - with reproducible code examples and battle-tested open source tools. By understanding these pitfalls upfront, readers will be better equipped to build products that harness the power of LLMs while sidestepping their inherent limitations…

GPU Programming Glossary

Running many thousands of GPUs means spending a lot of time knee deep in the CUDA-swamp and other strange places. In the hope that we can help other people navigate this, we're releasing the first version of a GPU Glossary…

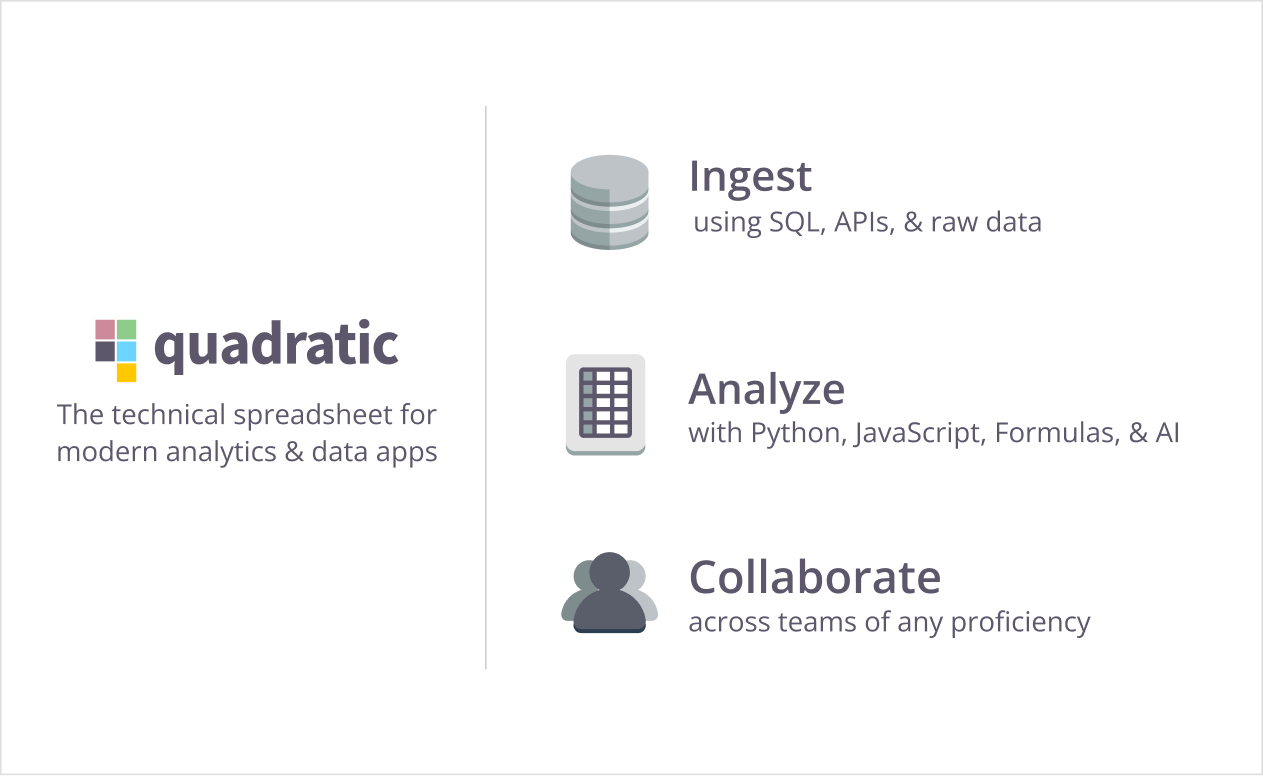

With Quadratic, combine the spreadsheets your organization asks for with the code that matches your team’s code-driven workflows. Powered by code, you can build anything in Quadratic spreadsheets with Python, JavaScript, or SQL, all approachable with the power of AI.

Use the data tool that actually aligns with how your team works with data, from ad-hoc to end-to-end analytics, all in a familiar spreadsheet. Level up your team’s analytics with Quadratic today.

* Want to sponsor the newsletter? Email us for details --> team@datascienceweekly.org

Harvard Is Releasing a Massive Free AI Training Dataset Funded by OpenAI and Microsoft

Harvard University announced Thursday it’s releasing a high-quality dataset of nearly 1 million public-domain books that could be used by anyone to train large language models and other AI tools. The dataset was created by Harvard’s newly formed Institutional Data Initiative with funding from both Microsoft and OpenAI. It contains books scanned as part of the Google Books project that are no longer protected by copyright…

The State of Robot Motion Generation

This paper reviews the large spectrum of methods for generating robot motion proposed over the 50 years of robotics research culminating in recent developments. It crosses the boundaries of methodologies, typically not surveyed together, from those that operate over explicit models to those that learn implicit ones. The paper discusses the current state-of-the-art as well as properties of varying methodologies, highlighting opportunities for integration…

Is studying Data Science still worth it? [Reddit Discussion]

Hi everyone, I’m currently studying data science, but I’ve been hearing that the demand for data scientists is decreasing significantly. I’ve also been told that many data scientists are essentially becoming analysts, while the machine learning side of things is increasingly being handled by engineers. Does it still make sense to pursue a career in data science or should I switch to computer science? Also, are machine learning engineers still building models or are they mostly focused on deploying them?…

Ways to use torch.compile

On the surface, the value proposition of torch.compile is simple: compile your PyTorch model and it runs X% faster. But after having spent a lot of time helping users from all walks of life use torch.compile, I have found that actually understanding how this value proposition applies to your situation can be quite subtle! In this post, I want to walk through the ways to use torch.compile, and within these use cases, what works and what doesn't…

I want to achieve mathematical superintelligence, so why am I doing LLMs now?

I’ve recently defended my PhD, which is on AI for formal mathematics…Now I’m a full-time researcher at Mistral AI, working on reasoning. A significant shift in my work is that now I spend my working hours on informal reasoning rather than formal mathematics inside proof assistants, although I maintain some connections with academia and advise on formal mathematics projects. To justify this shift in focus (mainly for myself), let me try to lay down some thoughts…

LLM Agents in Production: Architectures, Challenges, and Best Practices

Today’s blog is about agents in production so this post focuses on some of the core case studies and how specific companies developed and deployed agent(ic) application(s)…

Parallel and Asynchronous Programming in Shiny with future, promise, future_promise, and ExtendedTask

In this post, we’ll dive into parallel and asynchronous programming, why it matters for {shiny} developers, and how you can implement it in your next app…

Reward Hacking in Reinforcement Learning

Reward shaping in Reinforcement Learning (RL) is challenging. Reward hacking occurs when an RL agent exploits flaws or ambiguities in the reward function to obtain high rewards without genuinely learning the intended behaviors or completing the task as designed. In recent years, several related concepts have been proposed, all referring to some form of reward hacking…

On proof and progress in mathematics

In response to Jaffe and Quinn [“Theoretical mathematics”: Toward a cultural synthesis of mathematics and theoretical physics], the author discusses forms of progress in mathematics that are not captured by formal proofs of theorems, especially in his own work in the theory of foliations and geometrization of 3-manifolds and dynamical systems…

Breaking the rules with expression expansion

In a social media post we made, we asked you a question. Doesn’t the expression .struct.unnest break one of Polars’ most fundamental principles, in which one expression must produce exactly one column as output? While this article will give a detailed answer to this question, we can spoil the final conclusion for you: everything will be fine in the end…

Introduction to GIS Programming

This course offers a comprehensive exploration of GIS programming, centered around the Python programming language. Throughout the semester, students will master the use of Python libraries and frameworks essential for processing, analyzing, and visualizing geospatial data…

I'm a self-taught DE who weaseled my way into the tech world over 10 years ago. AMA! [Reddit Discussion]

I've been a senior-level Data Engineer for years now, and an odd success story considering I have no degree and barely graduated high school. AMA…

Understanding Categorical Cross-Entropy Loss, Binary Cross-Entropy Loss, Softmax Loss, Logistic Loss, Focal Loss and all those confusing names

People like to use cool names which are often confusing. When I started playing with CNN beyond single label classification, I got confused with the different names and formulations people write in their papers, and even with the loss layer names of the deep learning frameworks such as Caffe, Pytorch or TensorFlow. In this post I group up the different names and variations people use for Cross-Entropy Loss. I explain their main points, use cases and the implementations in different deep learning frameworks…
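To make the naming overlap concrete, here is a minimal sketch of our own (it is not taken from the linked post, and the function names are illustrative only). It uses plain Python to show that "logistic loss" / binary cross-entropy on a single logit `z` gives the same number as "softmax loss" / categorical cross-entropy on the two logits `[0, z]`.

```python
import math


def softmax(logits):
    """Turn a list of raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(logits, target_index):
    """Softmax followed by negative log-likelihood of the true class."""
    probs = softmax(logits)
    return -math.log(probs[target_index])


def sigmoid(z):
    """Logistic function mapping a raw score to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def binary_cross_entropy(logit, target):
    """Sigmoid followed by cross-entropy for a 0/1 target."""
    p = sigmoid(logit)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


# Two names, one quantity: compare both losses on the same example.
z = 2.0
bce = binary_cross_entropy(z, 1)
cce = categorical_cross_entropy([0.0, z], 1)
print(abs(bce - cce) < 1e-9)
```

The two agree because the softmax over `[0, z]` assigns the second class probability `e^z / (1 + e^z)`, which is exactly `sigmoid(z)`.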

* Based on unique clicks.

** Find last week's issue #576 here.

Learning something for your job? Hit reply to get our help.

Looking to get a job? Check out our “Get A Data Science Job” Course

It is a comprehensive course that teaches you everything related to getting a data science job based on answers to thousands of emails from readers like you. The course has 3 sections: Section 1 covers how to get started, Section 2 covers how to assemble a portfolio to showcase your experience (even if you don’t have any), and Section 3 covers how to write your resume.

Promote yourself/organization to ~64,300 subscribers by sponsoring this newsletter. 35-45% weekly open rate.

Thank you for joining us this week! :) Stay Data Science-y! All our best,

Hannah & Sebastian
