Uber's service migration strategy circa 2014. @ Irrational Exuberance

Hi folks,

This is the weekly digest for my blog, Irrational Exuberance. Reach out with thoughts on Twitter at @lethain, or reply to this email.

Posts from this week:

- Uber's service migration strategy circa 2014.

- Service onboarding model for Uber (2014).

Uber's service migration strategy circa 2014.

In early 2014, I joined as an engineering manager for Uber’s Infrastructure team. We were responsible for a wide range of things, including provisioning new services. While the overall team I led grew significantly over time, the subset working on service provisioning never grew beyond four engineers.

Those four engineers successfully migrated 1,000+ services onto a new, future-proofed service platform. More importantly, they did it while absorbing the majority, although certainly not the entirety, of the migration workload onto that small team rather than spreading it across the 2,000+ engineers working at Uber at the time. Their strategy serves as an interesting case study of how a team can drive strategy, even without any executive sponsor, by focusing on solving a pressing user problem, and providing effective ergonomics while doing so.

Note that after this introductory section, the remainder of this strategy will be written from the perspective of 2014, when it was originally developed.

More than a decade after this strategy was implemented, we have an interesting perspective from which to evaluate its impact. It’s fair to say that it had some meaningful, negative consequences by allowing the widespread proliferation of new services within Uber. Those services contributed to a messy architecture that had to go through cycles of internal cleanup over the following years.

As the principal author of this strategy, I’ve learned a lot from meditating on the fact that this strategy was wildly successful, that I think Uber is better off for having followed it, and that it also meaningfully degraded Uber’s developer experience over time. There’s both good and bad here; with a wide enough lens, all evaluations get complicated.

This is an exploratory, draft chapter for a book on engineering strategy that I’m brainstorming in #eng-strategy-book. As such, some of the links go to other draft chapters, both published drafts and very early, unpublished drafts.

Reading this document

To apply this strategy, start at the top with Policy. To understand the thinking behind this strategy, read sections in reverse order, starting with Explore, then Diagnose and so on. Relative to the default structure, this document makes one tweak, folding the Operation section in with Policy.

More detail on this structure in Making a readable Engineering Strategy document.

Policy & Operation

We’ve adopted these guiding principles for extending Uber’s service platform:

- Constrain manual provisioning allocation to maximize investment in self-service provisioning. The service provisioning team will maintain a fixed allocation of one full-time engineer on manual service provisioning tasks. We will move the remaining engineers to work on automation to speed up future service provisioning. This will degrade manual provisioning in the short term, but the alternative is permanently degrading provisioning by the influx of new service requests from newly hired product engineers.

- Self-service must be safely usable by a new hire without Uber context. It is possible today to make a Puppet or Clusto change while provisioning a new service that negatively impacts the production environment. This must not be true in any self-service solution.

- Move to structured requests, and out of tickets. Missing or incorrect information in provisioning requests creates significant delays in provisioning. Further, collecting this information is the first step of moving to a self-service process. As such, we can get paid twice by reducing errors in manual provisioning while also creating the interface for self-service workflows.

- Prefer initializing new services with good defaults rather than requiring user input. Most new services are provisioned for new projects with strong timeline pressure but little certainty on their long-term requirements. These users cannot accurately predict their future needs, and expecting them to do so creates significant friction.

  Instead, the provisioning framework should suggest good defaults, and make it easy to change the settings later when users have more clarity. The gate from development environment to production environment is a particularly effective one for ensuring settings are refreshed.
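To make the "structured requests" and "good defaults" principles concrete, here is a minimal Python sketch of what a provisioning request schema could look like. The field names, the defaults, and the `missing_fields` helper are all hypothetical illustrations; the strategy does not specify the actual schema.

```python
from dataclasses import dataclass, fields

# Hypothetical required fields; the real schema is not described in the strategy.
REQUIRED = ("service_name", "owning_team", "oncall_contact")


@dataclass
class ProvisioningRequest:
    # Required: a blank value here is what causes back and forth in tickets.
    service_name: str = ""
    owning_team: str = ""
    oncall_contact: str = ""
    # Assumed defaults that the requester can revisit later,
    # for example at the gate from development to production.
    instance_count: int = 2
    memory_mb: int = 512


def missing_fields(request: ProvisioningRequest) -> list[str]:
    """Return the required fields that were left blank, in schema order."""
    return [
        f.name
        for f in fields(request)
        if f.name in REQUIRED and not getattr(request, f.name).strip()
    ]


request = ProvisioningRequest(service_name="rider-profile")
print(missing_fields(request))  # fields still needed before provisioning starts
```

Rejecting a request at submission time, rather than discovering the gap midway through provisioning, is the point of moving out of free-form tickets.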

We are materializing those principles into this sequenced set of tasks:

- Create an internal tool that coordinates service provisioning, replacing the process where teams request new services via Phabricator tickets. This new tool will maintain a schema of required fields that must be supplied, with the aim of eliminating the majority of back and forth between teams during service provisioning.

  In addition to capturing necessary data, this will also serve as our interface for automating various steps in provisioning without requiring future changes in the workflow to request service provisioning.

- Extend the internal tool to generate Puppet scaffolding for new services, reducing the potential for errors in two ways. First, the data supplied in the service provisioning request can be directly included into the rendered template. Second, this will eliminate most human tweaking of templates, where typos can create issues.

- Port allocation is a particularly high-risk element of provisioning, as reusing a port can break routing to an existing production service. As such, this will be the first area we fully automate, with the provisioning service supplying the allocated port rather than requiring requesting teams to provide an already allocated port.

  Doing this will require moving the port registry out of a Phabricator wiki page and into a database, which will allow us to guard access with a variety of checks.

- Manual assignment of new services to servers often leads to new services being allocated to already heavily utilized servers. We will replace the manual assignment with an automated system, and do so with the intention of migrating to the Mesos/Aurora cluster once it is available for production workloads.
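As an illustration of why a database-backed port registry is safer than a wiki page, here is a small Python sketch using SQLite from the standard library. The table layout, the port range, and the function names are assumptions made for this example, not a description of the tool Uber built.

```python
import sqlite3

# Hypothetical port range reserved for services.
PORT_RANGE = range(9000, 9100)


def open_registry(path=":memory:"):
    conn = sqlite3.connect(path)
    # The primary key on `port` means the database itself rejects a reused port.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS ports ("
        "port INTEGER PRIMARY KEY, service TEXT NOT NULL UNIQUE)"
    )
    return conn


def allocate_port(conn, service):
    """Return the port for `service`, allocating the lowest free one if needed."""
    with conn:  # commits on success, rolls back on error
        row = conn.execute(
            "SELECT port FROM ports WHERE service = ?", (service,)
        ).fetchone()
        if row:
            return row[0]  # already allocated, so repeat requests are harmless
        used = {port for (port,) in conn.execute("SELECT port FROM ports")}
        for port in PORT_RANGE:
            if port not in used:
                conn.execute(
                    "INSERT INTO ports (port, service) VALUES (?, ?)",
                    (port, service),
                )
                return port
    raise RuntimeError("no free ports left in the configured range")


registry = open_registry()
print(allocate_port(registry, "rider-profile"))
print(allocate_port(registry, "trip-history"))
```

Because the provisioning service supplies the port, requesting teams never pick a number themselves, and the uniqueness constraint acts as a last line of defense if some other code path tries to insert a duplicate.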

Each week, we’ll review the size of the service provisioning queue, along with the service provisioning time to assess whether the strategy is working or needs to be revised.

Prolonged strategy testing

Although I didn’t have a name for this practice in 2014 when we created and implemented this strategy, the preceding paragraph captures an important truth of team-led, bottom-up strategy: the entire strategy was implemented in a prolonged strategy testing phase.

This is true of all low-altitude, bottom-up strategy, because you don’t have the authority to mandate compliance. An executive’s high-altitude strategy can be enforced despite not working due to their organizational authority, but a team’s strategy will only endure while it remains effective.

Refine

In order to refine our diagnosis, we’ve created a systems model for service onboarding. This will allow us to simulate a variety of different approaches to our problem, and determine which approach, or combination of approaches, will be most effective.

As we exercised the model, it became clear that:

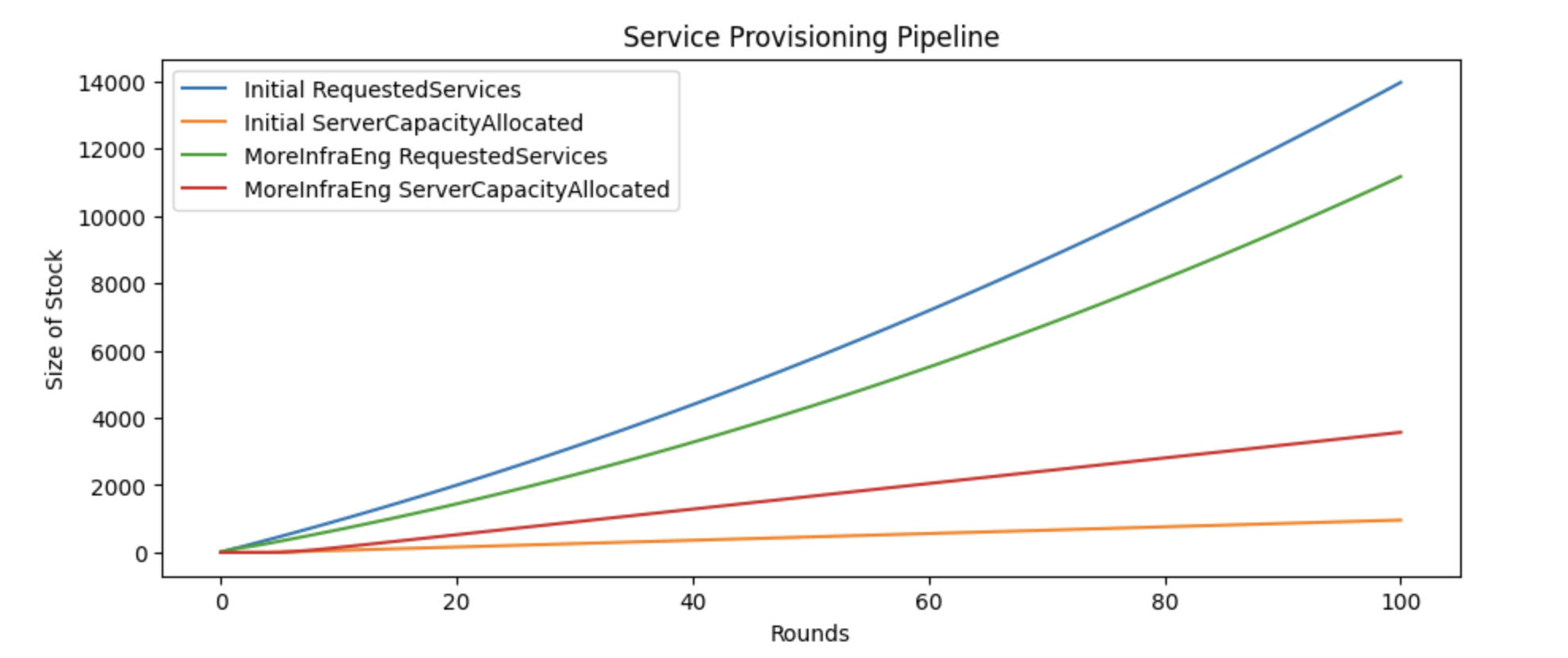

- we are increasingly falling behind,

- hiring onto the service provisioning team is not a viable solution, and

- moving to a self-service approach is our only option.

While the model writeup justifies each of those statements in more detail,

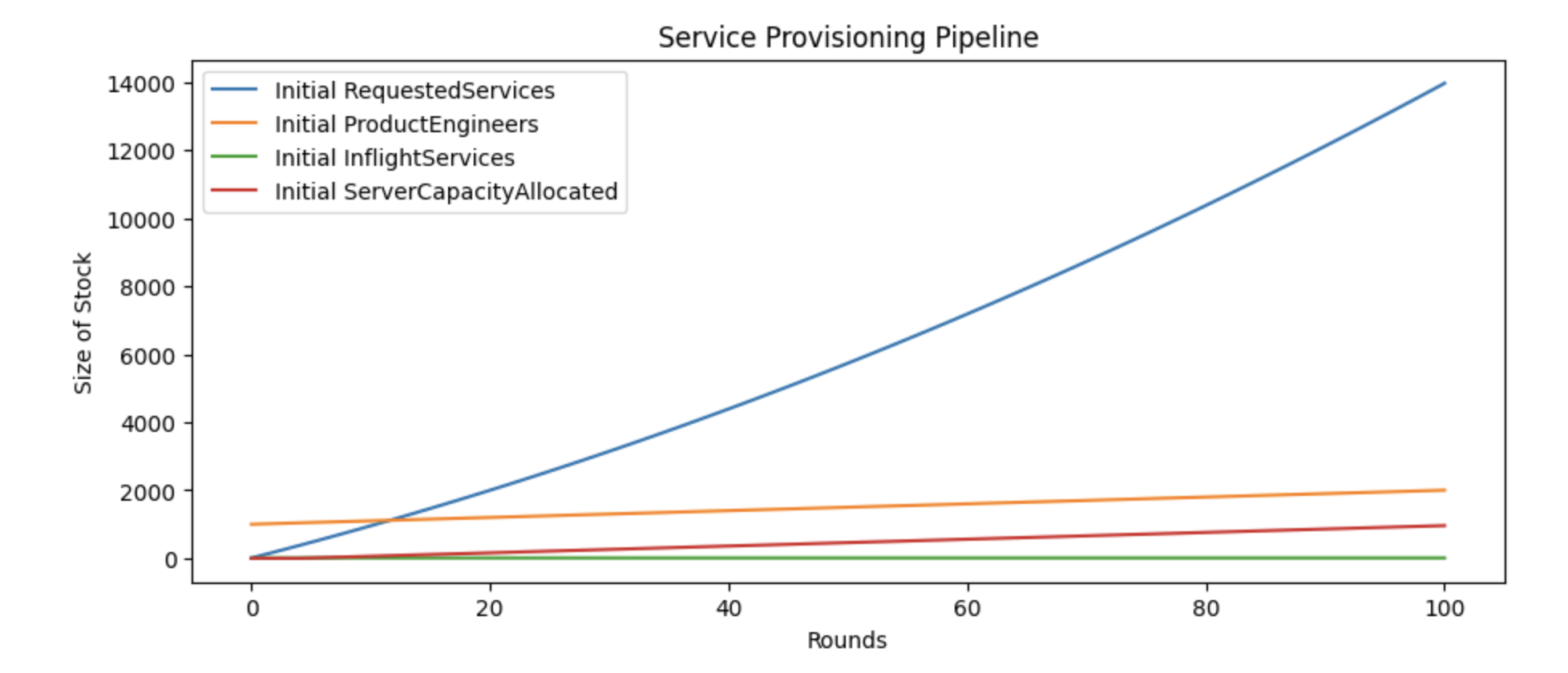

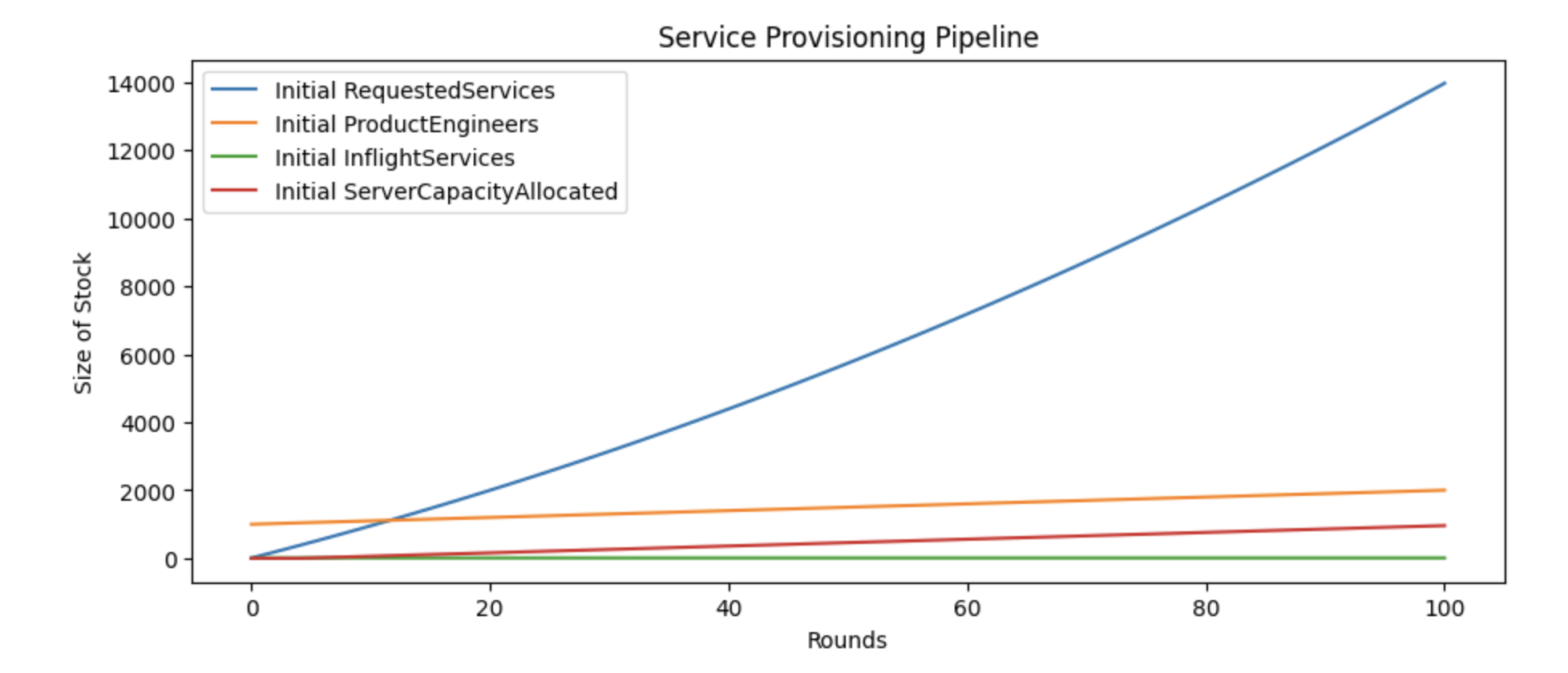

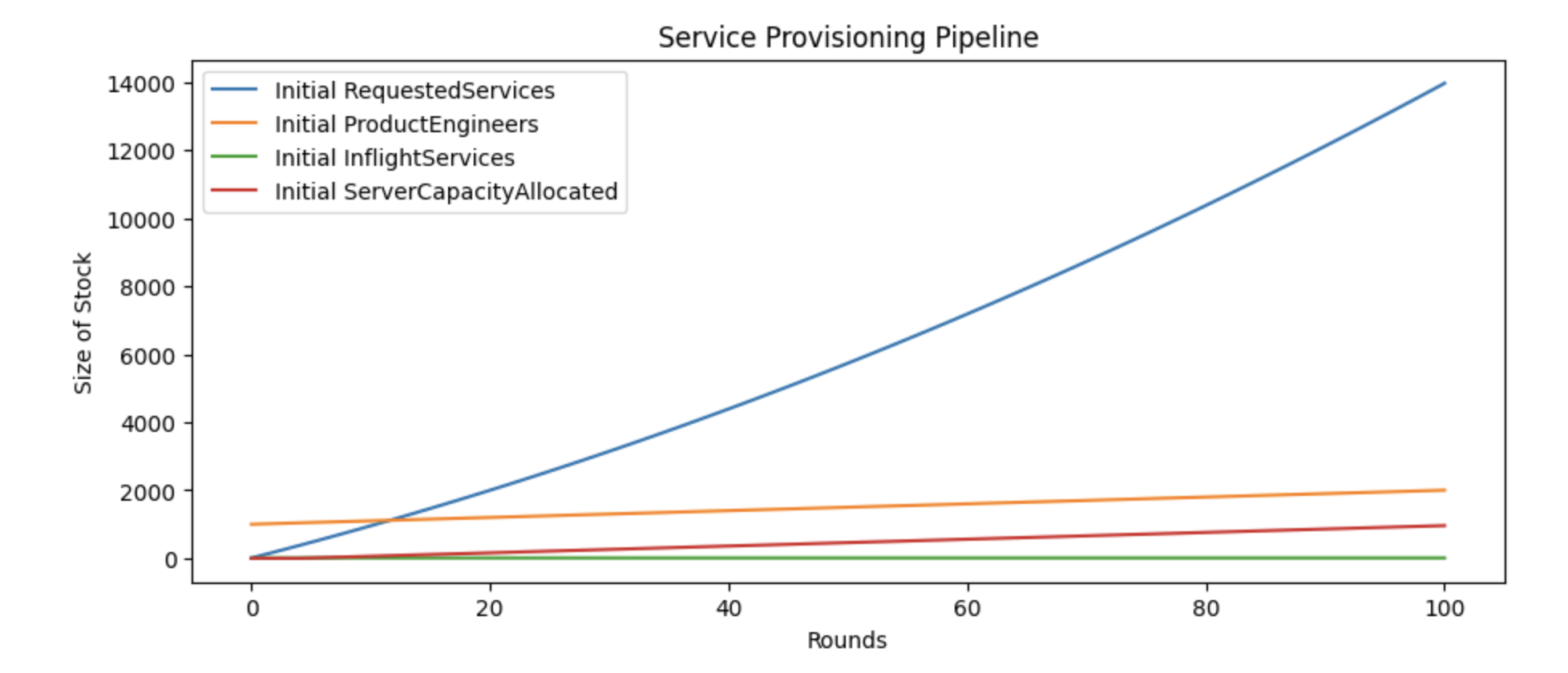

we’ll include two charts here. The first chart shows the status quo,

where new service provisioning requests, labeled as Initial RequestedServices, quickly accumulate into a backlog.

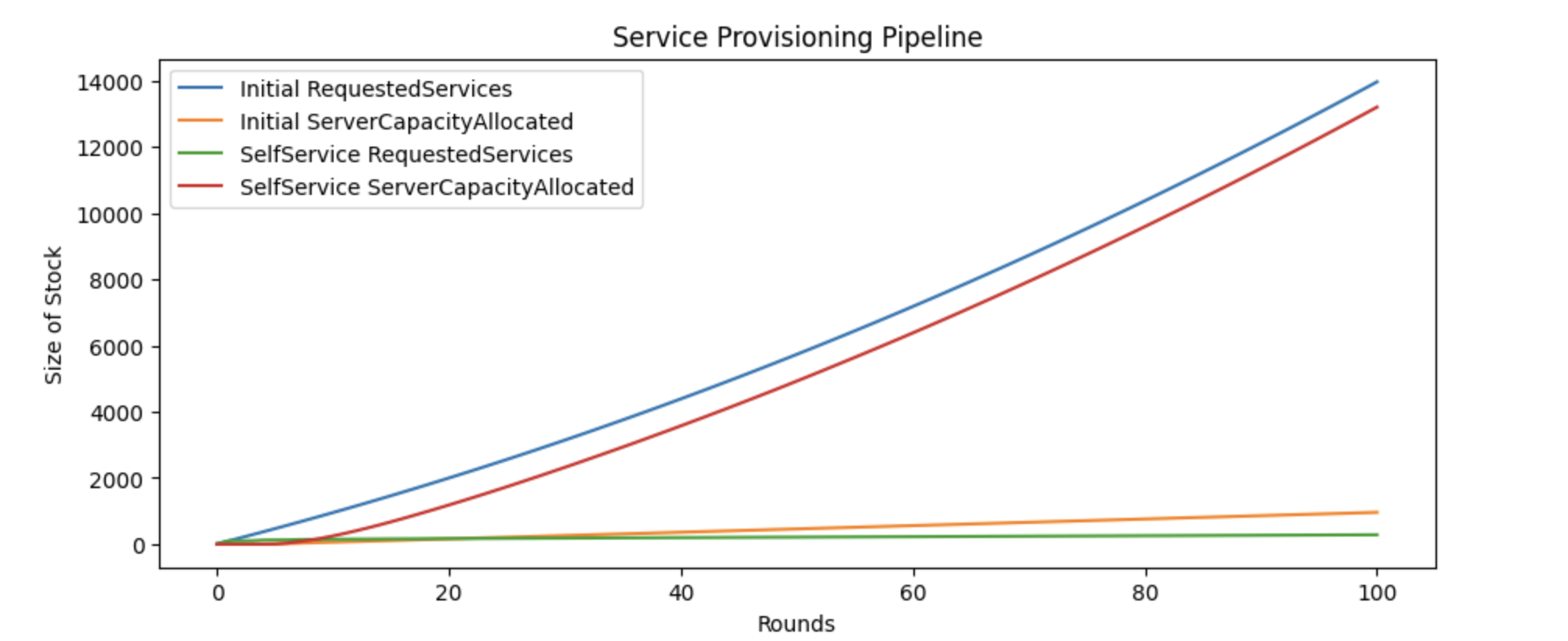

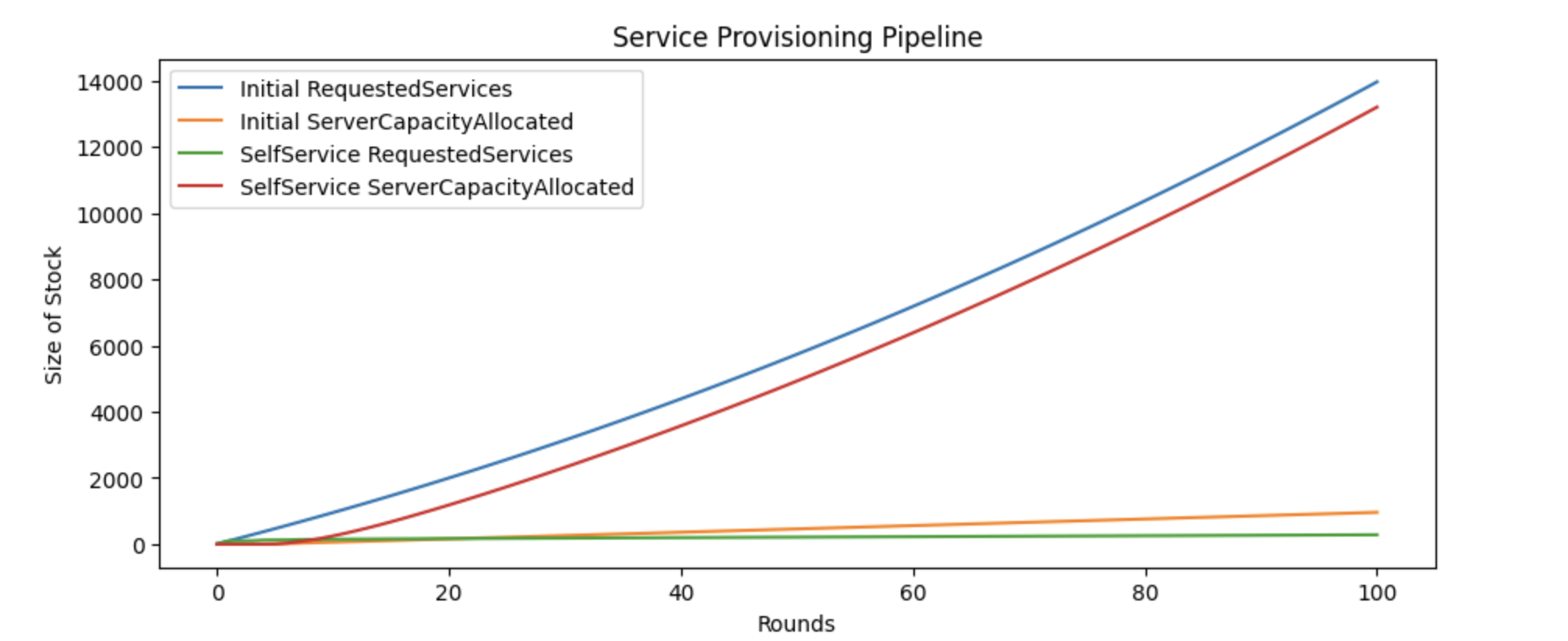

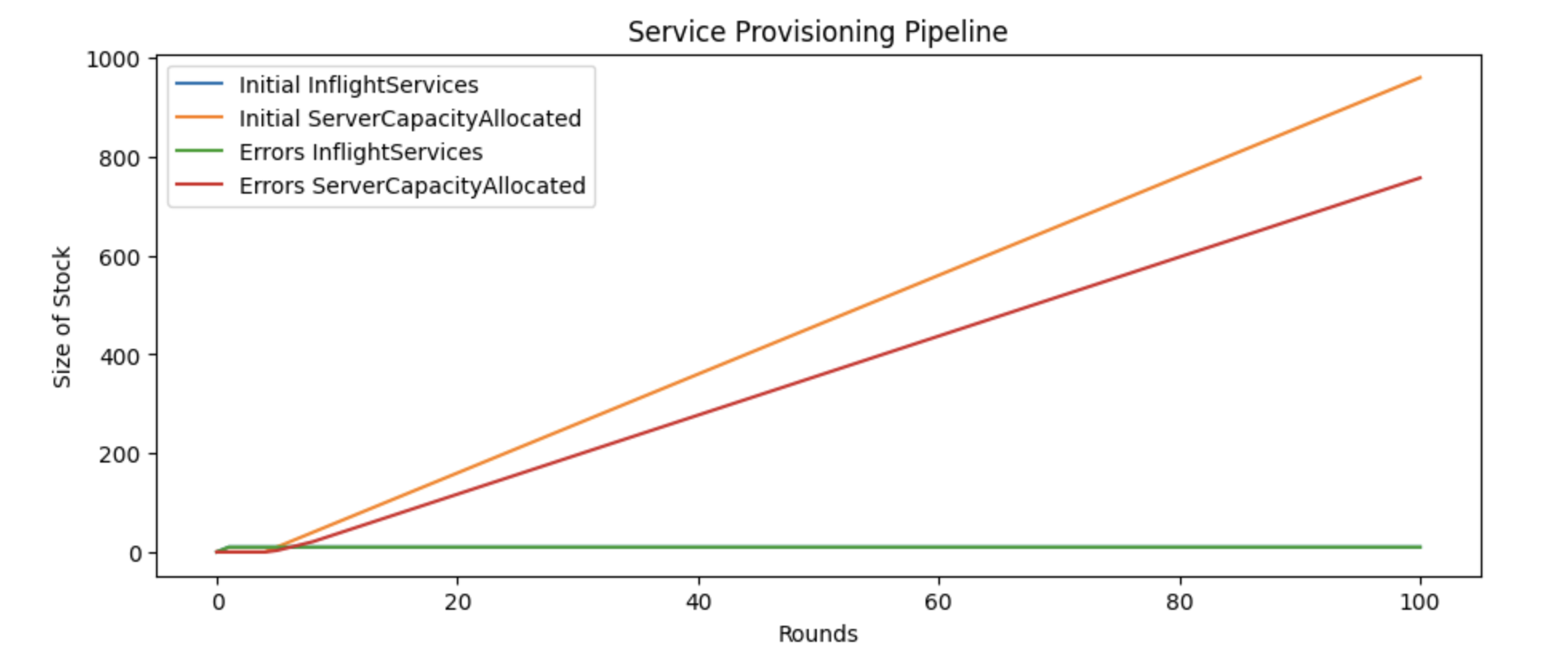

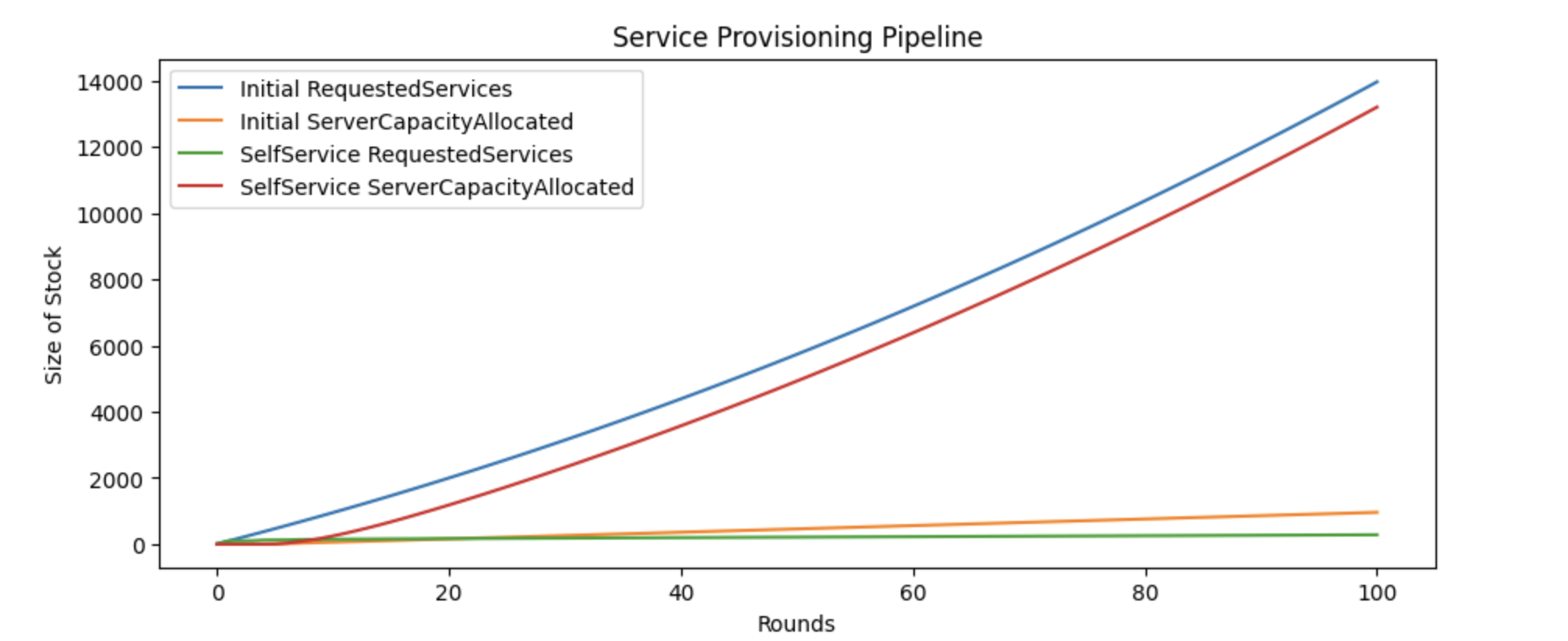

Second, we have a chart comparing the outcomes between the current status quo and a self-service approach. In that chart, you can see that the service provisioning backlog in the self-service model remains steady, as represented by the SelfService RequestedServices line. Of the various attempts to find a solution, none of the others showed promise, including eliminating all errors in provisioning and increasing the team’s capacity by 500%.

Diagnose

We’ve diagnosed the current state of service provisioning at Uber as:

- Many product engineering teams are aiming to leave the centralized monolith, which is generating two to three service provisioning requests each week. We expect this rate to increase roughly linearly with the size of the product engineering organization.

  Even if we disagree with this shift to additional services, there’s no team responsible for maintaining the extensibility of the monolith, and working in the monolith is the number one source of developer frustration, so we don’t have a practical counter proposal to offer engineers other than provisioning a new service.

- The engineering organization is doubling every six months. Consequently, a year from now, we expect eight to twelve service provisioning requests every week.

- Within infrastructure engineering, there is a team of four engineers responsible for service provisioning today. While our organization is growing at a similar rate as product engineering, none of that additional headcount is being allocated directly to the team working on service provisioning. We do not anticipate this changing.

  Some additional headcount is being allocated to Service Reliability Engineers (SREs) who can take on the most nuanced, complicated service provisioning work. However, their bandwidth is already heavily constrained across many tasks, so relying on SREs is an insufficient solution.

- The queue for service provisioning is already increasing in size as things are today. Barring some change, many services will not be provisioned in a timely fashion.

- Today, provisioning a new service takes about a week, with numerous round trips between the requesting team and the provisioning team. Missing and incorrect information between teams is the largest source of delay in provisioning services.

  If the provisioning team has all the necessary information, and it’s accurate, then a new service can be provisioned in about three to four hours of work across configuration in Puppet, metadata in Clusto, allocating ports, assigning the service to servers, and so on.

- There are few safeguards on port allocation, server assignment and so on. It is easy to inadvertently cause a production outage during service provisioning unless done with attention to detail.

  Given our rate of hiring, training the engineering organization to use this unsafe toolchain is an impractical solution: even if we train the entire organization perfectly today, there will be just as many untrained individuals in six months. Further, product engineering leadership has no interest in their teams being diverted to service provisioning training.

- It’s widely agreed across the infrastructure engineering team that essentially every component of service provisioning should be replaced as soon as possible, but there is no concrete plan to replace any of the core components. Further, there is no team accountable for replacing these components, which means the service provisioning team will either need to work around the current tooling or replace that tooling ourselves.

- It’s urgent to unblock development of new services, but moving those new services to production is rarely urgent, and occurs after a long internal development period. Evidence of this is that requests to provision a new service generally come with significant urgency and internal escalations to management. After the service is provisioned for development, there are relatively few urgent escalations other than one-off requests for increased production capacity during incidents.

- Another team within infrastructure is actively exploring adoption of Mesos and Aurora, but there’s no concrete timeline for when this might be available for our usage. Until they commit to supporting our workloads, we’ll need to find an alternative solution.
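The request-rate projection in the diagnosis above follows from two stated assumptions: requests scale roughly linearly with the size of the engineering organization, and the organization doubles every six months. A short Python sketch of that arithmetic (the function name is ours, introduced only for illustration):

```python
def projected_weekly_requests(current_per_week, months_ahead, doubling_period_months=6):
    """Project the weekly request rate, assuming it tracks an organization
    that doubles in size every `doubling_period_months`."""
    return current_per_week * 2 ** (months_ahead / doubling_period_months)


# Two to three requests per week today, projected one year out.
low = projected_weekly_requests(2, months_ahead=12)
high = projected_weekly_requests(3, months_ahead=12)
print(low, high)  # two doublings: 8.0 and 12.0 requests per week
```

The same doubling is why one-time training does not help: six months after training everyone, half of the organization is new.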

Explore

Uber’s server and service infrastructure today is composed of a handful of pieces. First, we run servers on-prem within a handful of colocations. Second, we describe each server in Puppet manifests to support repeatable provisioning of servers. Finally, we manage fleet and server metadata in a tool named Clusto, originally created by Digg, which allows us to populate Puppet manifests with server and cluster appropriate metadata during provisioning. In general, we agree that our current infrastructure is nearing its end of lifespan, but it’s less obvious what the appropriate replacements are for each piece.

There’s significant internal opposition to running in the cloud, up to and including our CEO, so we don’t believe that will change in the foreseeable future. We do however believe there’s opportunity to change our service definitions from Puppet to something along the lines of Docker, and to change our metadata mechanism towards a more purpose-built solution like Mesos/Aurora or Kubernetes.

As a starting point, we find it valuable to read Large-scale cluster management at Google with Borg which informed some elements of the approach to Kubernetes, and Mesos: A Platform for Fine-Grained Resource Sharing in the Data Center which describes the Mesos/Aurora approach.

If you’re wondering why there’s no mention of Borg, Omega, and Kubernetes, it’s because it wasn’t published until 2016, a year after this strategy was developed.

Within Uber, we have a number of ex-Twitter engineers who can speak with confidence to their experience operating with Mesos/Aurora at Twitter. We have been unable to find anyone to speak with who has production Kubernetes experience operating a comparably large fleet of 10,000+ servers, although presumably someone is operating, or close to operating, Kubernetes at that scale.

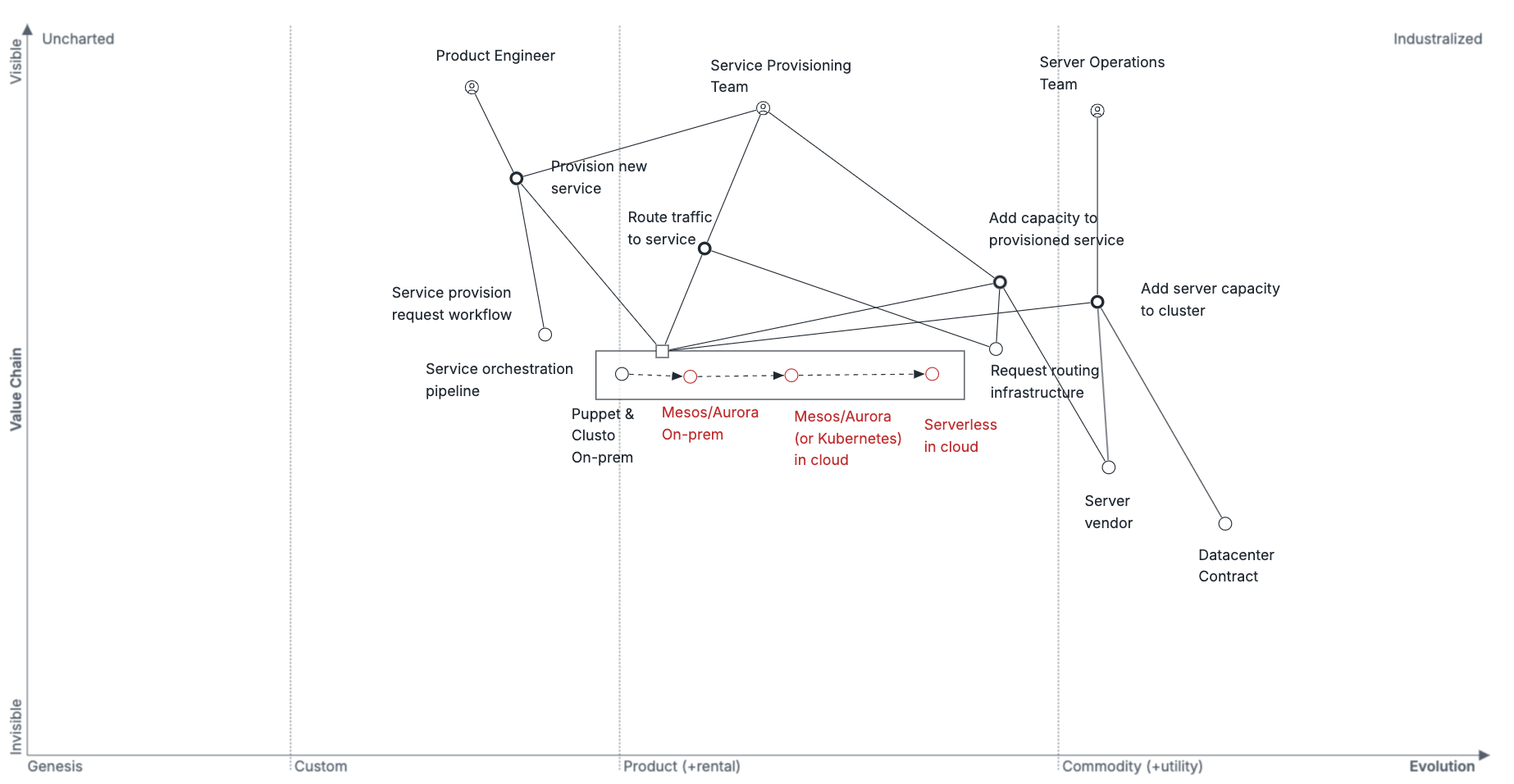

Our general belief about the evolution of the ecosystem at the time is described in this Wardley mapping exercise on service orchestration (2014).

One of the unknowns today is how the evolution of Mesos/Aurora and Kubernetes will look in the future. Kubernetes seems promising with Google’s backing, but there are few if any meaningful production deployments today. Mesos/Aurora has more community support and more production deployments, but the absolute number of deployments remains quite small, and there is no large-scale industry backer outside of Twitter.

Even further out, there’s considerable excitement around “serverless” frameworks, which seem like a likely future evolution, but canvassing the industry and our networks we’ve simply been unable to find enough real-world usage to make an active push towards this destination today.

Wardley mapping is introduced as one of the techniques for strategy refinement, but it can also be a useful technique for exploring a dynamic ecosystem like service orchestration in 2014.

Assembling each strategy requires exercising judgment on how to compile the pieces together most usefully, and in this case I found that the map fits most naturally in with the rest of exploration rather than in the more operationally-focused refinement section.

Service onboarding model for Uber (2014).

At the core of Uber’s service migration strategy (2014) is understanding the service onboarding process, and identifying the levers to speed up that process. Here we’ll develop a system model representing that onboarding process, and exercise the model to test a number of hypotheses about how to best speed up provisioning.

In this chapter, we’ll cover:

- Where the model of service onboarding suggested we focus our efforts

- Developing a system model using the lethain/systems package on GitHub. That model is available in the lethain/eng-strategy-models repository

- Exercising that model to learn from it

Let’s figure out what this model can teach us.

Learnings

Even if we model this problem with a 100% success rate (e.g. no errors at all), then the backlog of requested new services continues to increase over time. This clarifies that the problem to be solved is not the quality of service the service provisioning team is providing, but rather that the fundamental approach is not working.

Although hiring is tempting as a solution, our model suggests it is not a particularly valuable approach in this scenario. Even increasing the Service Provisioning team’s staff allocated to manually provisioning services by 500% doesn’t solve the backlog of incoming requests.

If reducing errors doesn’t solve the problem, and increased hiring for the team doesn’t solve the problem, then we have to find a way to eliminate manual service provisioning entirely. The most promising candidate is moving to a self-service provisioning model, which our model shows solves the backlog problem effectively.

Refining our earlier statement, additional hiring may benefit the team if we are able to focus those hires on building self-service provisioning, and are able to ramp their productivity faster than the increase of incoming service provisioning requests.

Sketch

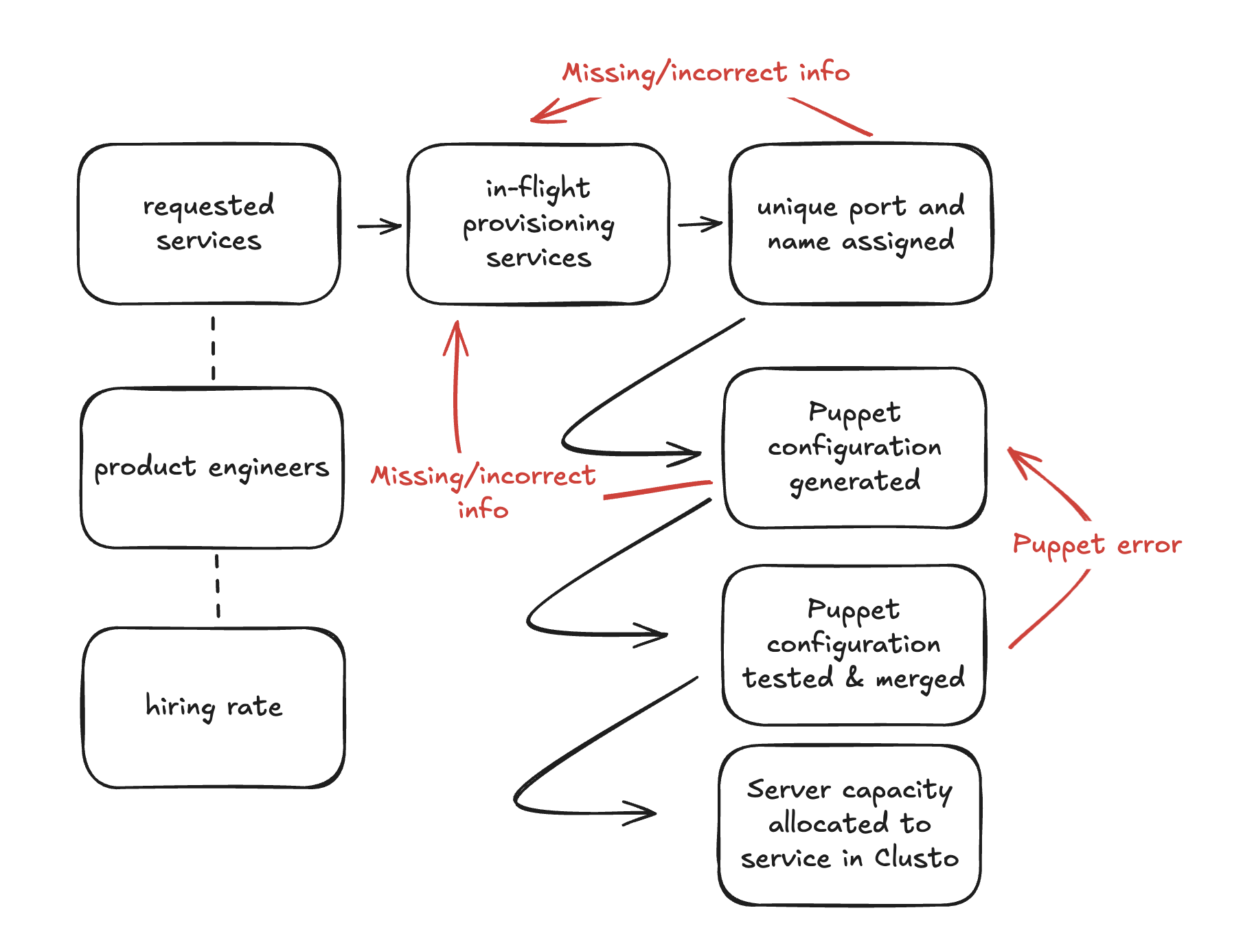

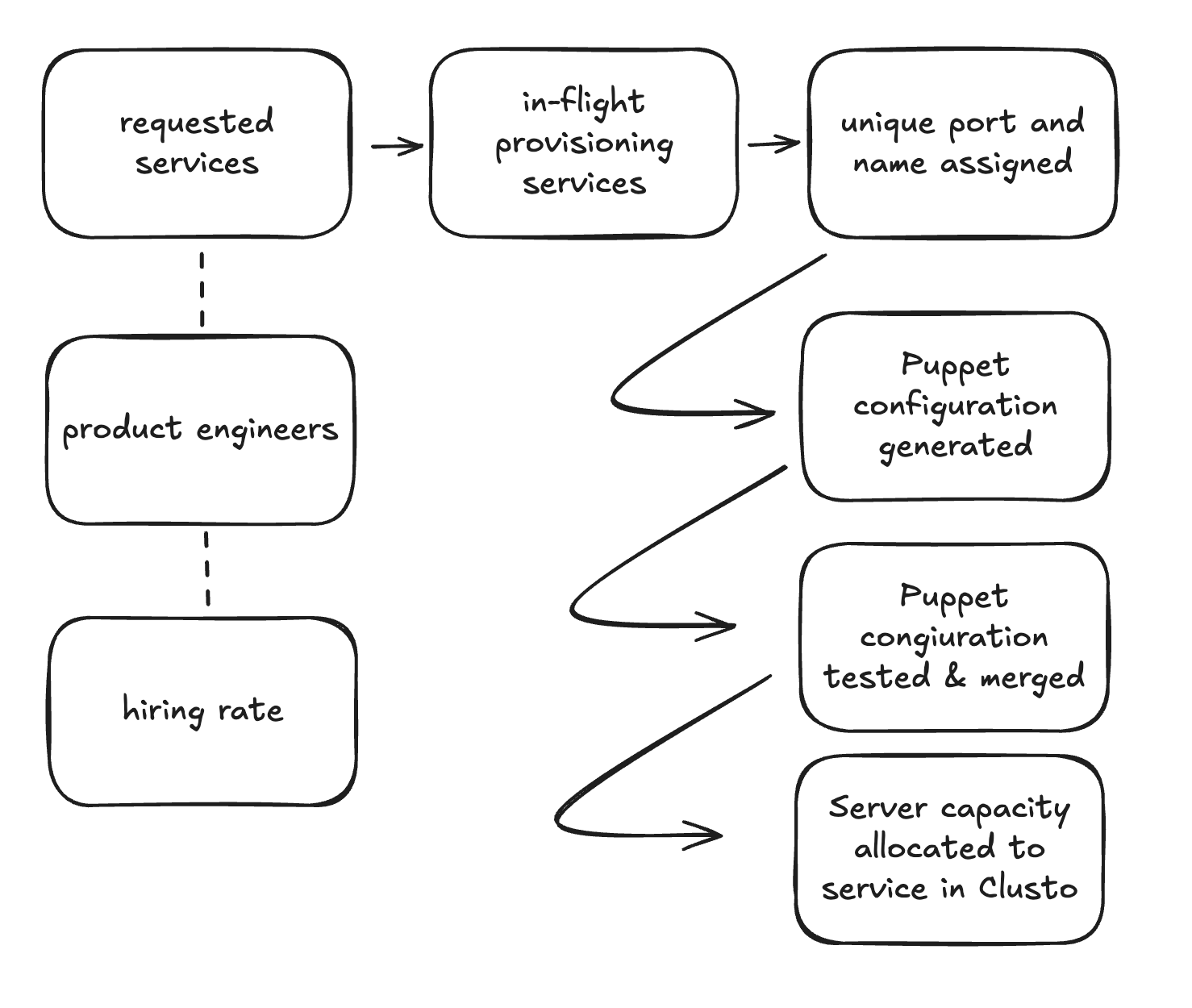

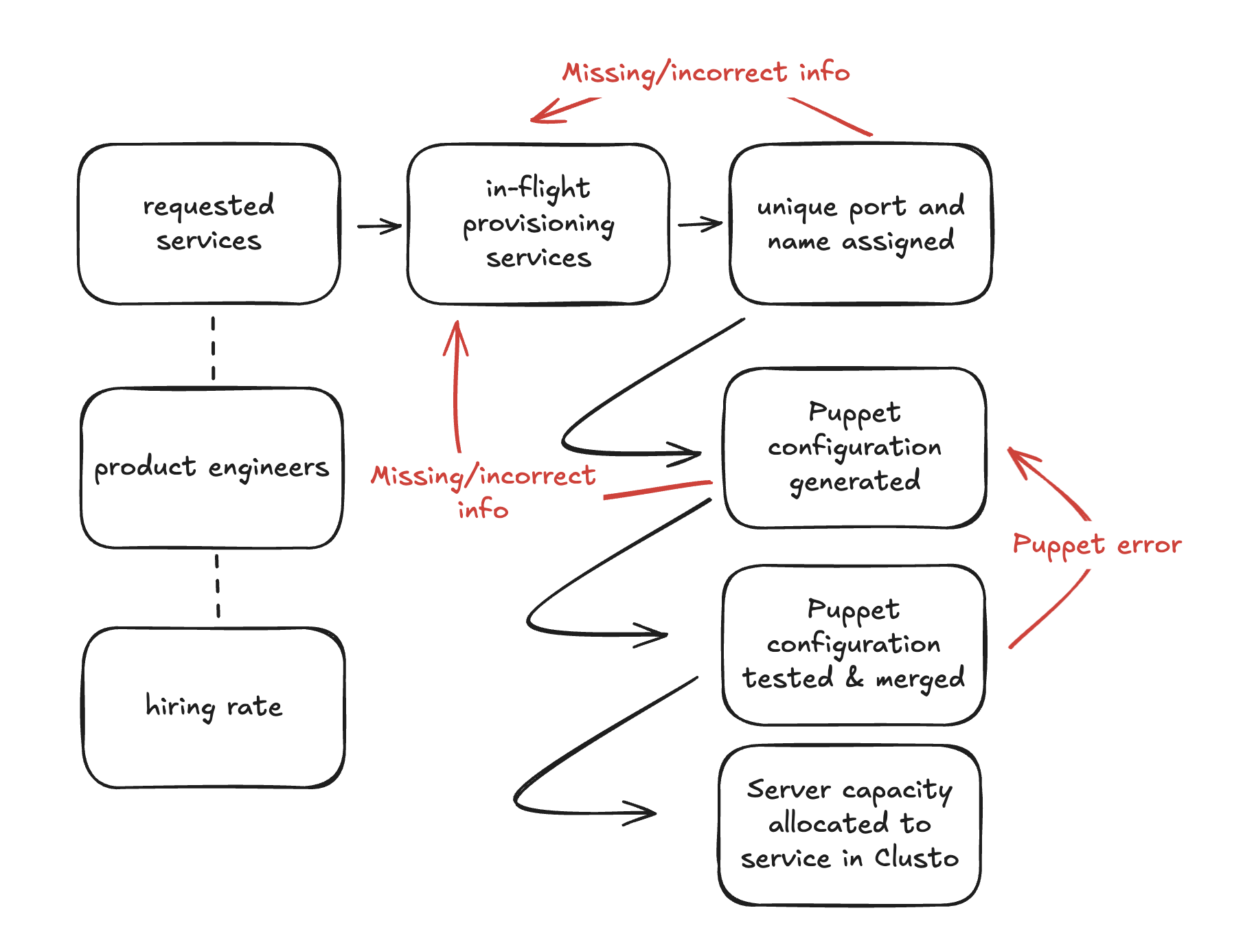

Our initial sketch of service provisioning is a simple pipeline starting with requested services and moving step by step through to server capacity allocated. Some of these steps are likely much slower than others, but it gives a sense of the stages and where things might go wrong. It also gives us a sense of what we can measure to evaluate if our approach to provisioning is working well.

One element worth mentioning is the pair of dotted lines from hiring rate to product engineers and from product engineers to requested services. These are called links, which are stocks that influence another stock, but don’t flow directly into it.

A purist would correctly note that links should connect to flows rather than stocks. That is true! However, as we’ll encounter when we convert this sketch into a model, there are actually several counterintuitive elements here that are necessary to model this system but make the sketch less readable. As a modeler, you’ll frequently encounter these sorts of tradeoffs, and you’ll have to decide what choices serve your needs best in the moment.

The biggest element missing from the initial model is error flows, where things can sometimes go wrong in addition to sometimes going right. There are many ways things can go wrong, but we’re going to focus on modeling three error flows in particular:

- Missing/incorrect information occurs twice in this model, and throws a provisioning request back into the initial provisioning phase where information is collected. When this occurs during port assignment, this is a relatively small trip backwards. However, when it occurs in Puppet configuration, this is a significantly larger step backwards.

- Puppet error occurs in the second to final stock, Puppet configuration tested & merged. This sends requests back one step in the provisioning flow.

Updating our sketch to reflect these flows, we get a fairly complete, and somewhat nuanced, view of the service provisioning flow.

Note that the combination of these two flows introduces the possibility of a service being almost fully provisioned, but then traveling from Puppet testing back to Puppet configuration due to Puppet error, and then backwards again to the initial step due to Missing/incorrect information. This means it’s possible to lose almost all provisioning progress if everything goes wrong.

There are more nuances we could introduce here, but there’s already enough complexity here for us to learn quite a bit from this model.

Reason

Studying our sketches, a few things stand out:

- The hiring of product engineers is going to drive up service provisioning requests over time, but there’s no counterbalancing hiring of infrastructure engineers to work on service provisioning. This means there’s an implicit, but very real, deadline to scale this process independently of the size of the infrastructure engineering team.

  Even without building the full model, it’s clear that we have to either stop hiring product engineers, turn this into a self-service solution, or find a new mechanism to discourage service provisioning.

- The size of the error rates is going to influence results a great deal, particularly those for Missing/incorrect information. This is probably the most valuable place to start looking for efficiency improvements.

- Missing information errors are more expensive than the model implies, because they require coordination across teams to resolve. Conversely, Puppet testing errors are probably cheaper than the model implies, because they should be solvable within the same team and consequently benefit from a quick iteration loop.

Now we need to build a model that helps guide our inquiry into those questions.

Model

You can find the full implementation of this model on GitHub if you want to see the entirety rather than these emphasized snippets.

First, let’s get the success states working:

HiringRate(10)

ProductEngineers(1000)

[PotentialHires] > ProductEngineers @ HiringRate

[PotentialServices] > RequestedServices(10) @ ProductEngineers / 10

RequestedServices > InflightServices(0, 10) @ Leak(1.0)

InflightServices > PortNameAssigned @ Leak(1.0)

PortNameAssigned > PuppetGenerated @ Leak(1.0)

PuppetGenerated > PuppetConfigMerged @ Leak(1.0)

PuppetConfigMerged > ServerCapacityAllocated @ Leak(1.0)

As we run this model, we can see that the number of requested services grows significantly over time. This makes sense, as we’re only able to provision a maximum of ten services per round.
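For readers who want to reproduce the shape of this result without installing anything, here is a plain-Python sketch of the same error-free pipeline. It is not the lethain/systems package and does not claim to match its exact round-by-round semantics; it assumes new requests arrive first each round, every stage then hands its contents to the next stage, and at most ten requests are admitted into InflightServices.

```python
STAGES = [
    "InflightServices",
    "PortNameAssigned",
    "PuppetGenerated",
    "PuppetConfigMerged",
    "ServerCapacityAllocated",
]


def simulate(rounds, inflight_cap=10):
    """Return (backlog per round, final stage contents) for the error-free model."""
    engineers, hiring_rate = 1000, 10
    requested = 10
    stocks = {name: 0 for name in STAGES}
    backlog = []
    for _ in range(rounds):
        # One new request per ten product engineers, then hiring grows the org.
        requested += engineers // 10
        engineers += hiring_rate
        # Advance from the last stage backwards so each request moves one step.
        for src, dst in reversed(list(zip(STAGES, STAGES[1:]))):
            stocks[dst] += stocks[src]
            stocks[src] = 0
        # Admit as many requests as the capped inflight stock has room for.
        admitted = min(requested, inflight_cap - stocks["InflightServices"])
        requested -= admitted
        stocks["InflightServices"] += admitted
        backlog.append(requested)
    return backlog, stocks


backlog, stocks = simulate(rounds=6)
print(backlog[:3])  # [100, 191, 283]
```

Under these assumptions roughly a hundred requests arrive per round while only ten are admitted, so the backlog climbs by about ninety each round.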

However, it’s also the best case, because we’re not capturing the three error states:

- Unique port and name assignment can fail because of missing or incorrect information

- Puppet configuration can also fail due to missing or incorrect information.

- Puppet configurations can have errors in them, requiring rework.

Let’s update the model to include these failure modes, starting with unique port and name assignment. The error-free version looks like this:

PortNameAssigned > PuppetGenerated @ Leak(1.0)

Now let’s add in an error rate, where 20% of requests are missing information and return to the requested services stock.

PortNameAssigned > PuppetGenerated @ Leak(0.8)

PortNameAssigned > RequestedServices @ Leak(0.2)

Then let’s do the same thing for Puppet configuration errors:

# original version

PuppetGenerated > PuppetConfigMerged @ Leak(1.0)

# updated version with errors

PuppetGenerated > PuppetConfigMerged @ Leak(0.8)

PuppetGenerated > InflightServices @ Leak(0.2)

Finally, we’ll make a similar change to represent errors made in the Puppet templates themselves:

# original version

PuppetConfigMerged > ServerCapacityAllocated @ Leak(1.0)

# updated version with errors

PuppetConfigMerged > ServerCapacityAllocated @ Leak(0.8)

PuppetConfigMerged > PuppetGenerated @ Leak(0.2)

Even with relatively low error rates, we can see that the throughput of the system overall has been meaningfully impacted by introducing these errors.
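To see the effect of the three error loops in isolation, the following plain-Python sketch applies the same error rate to each loop and compares throughput against the error-free case. It is a simplified stand-in rather than the lethain/systems implementation: arrivals are held constant at 100 per round (an assumption made to keep the comparison simple), and each request moves at most one stage per round.

```python
def simulate(rounds, error_rate, inflight_cap=10.0, arrivals=100.0):
    """Return (backlog, allocated) after `rounds` of a simplified pipeline."""
    ok = 1.0 - error_rate
    requested = 10.0
    inflight = port = generated = merged = allocated = 0.0
    for _ in range(rounds):
        requested += arrivals
        # Snapshot each stage so a request moves at most one step per round.
        i, p, g, m = inflight, port, generated, merged
        allocated += m * ok                  # PuppetConfigMerged > ServerCapacityAllocated
        merged = g * ok                      # PuppetGenerated > PuppetConfigMerged
        generated = p * ok + m * error_rate  # forward flow plus Puppet errors sent back
        port = i                             # InflightServices > PortNameAssigned
        inflight = g * error_rate            # missing info found during Puppet config
        requested += p * error_rate          # missing info found during port assignment
        # Returned work occupies inflight capacity before new requests are admitted.
        admitted = max(0.0, min(requested, inflight_cap - inflight))
        requested -= admitted
        inflight += admitted
    return requested, allocated


clean_backlog, clean_done = simulate(rounds=50, error_rate=0.0)
error_backlog, error_done = simulate(rounds=50, error_rate=0.2)
print(f"no errors:  {clean_done:.0f} provisioned, backlog {clean_backlog:.0f}")
print(f"20% errors: {error_done:.0f} provisioned, backlog {error_backlog:.0f}")
```

In this sketch the rework competes with new requests for the same ten inflight slots, which is why a 20% error rate at each step costs noticeably more than 20% of throughput.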

Now that we have the foundation of the model built, it’s time to start exercising the model to understand the problem space a bit better.

Exercise

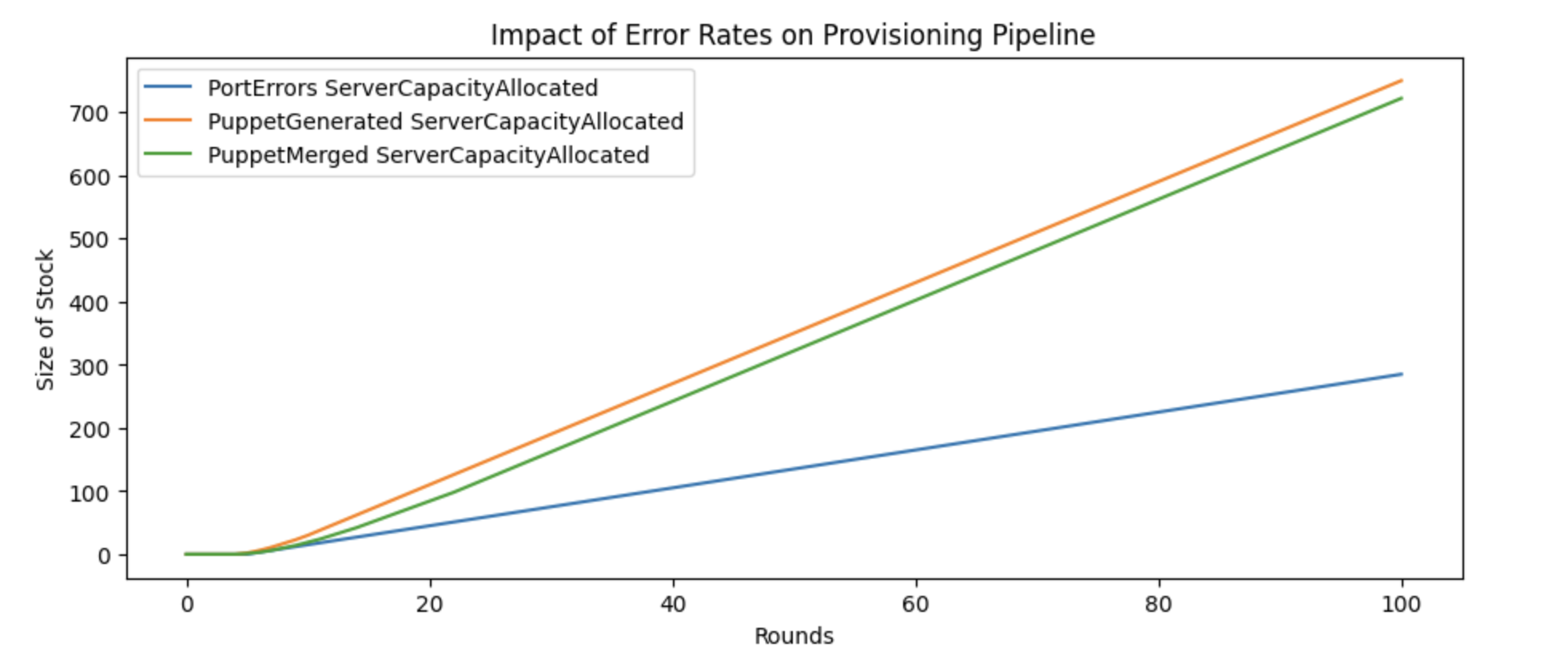

We already know the errors are impacting throughput, but let’s start by narrowing down which of the errors matter most by increasing the error rate for each of them independently and comparing the impact.

To model this, we’ll create three new specifications, each of which increases one error from a 20% error rate to a 50% error rate, and see how the overall throughput of the system is impacted:

# test 1: port assignment errors increased

PortNameAssigned > PuppetGenerated @ Leak(0.5)

PortNameAssigned > RequestedServices @ Leak(0.5)

# test 2: puppet generated errors increased

PuppetGenerated > PuppetConfigMerged @ Leak(0.5)

PuppetGenerated > InflightServices @ Leak(0.5)

# test 3: puppet merged errors increased

PuppetConfigMerged > ServerCapacityAllocated @ Leak(0.5)

PuppetConfigMerged > PuppetGenerated @ Leak(0.5)

Comparing the impact of increasing the error rates from 20% to 50% in each of the three error loops, we can get a sense of the model’s sensitivity to each error.

This chart captures why exercising is so impactful: we’d assumed during sketching that errors in Puppet generation would matter the most because they caused a long trip backwards, but it turns out a very high error rate early in the process matters even more because there are still multiple other potential errors later on that compound on its increase.

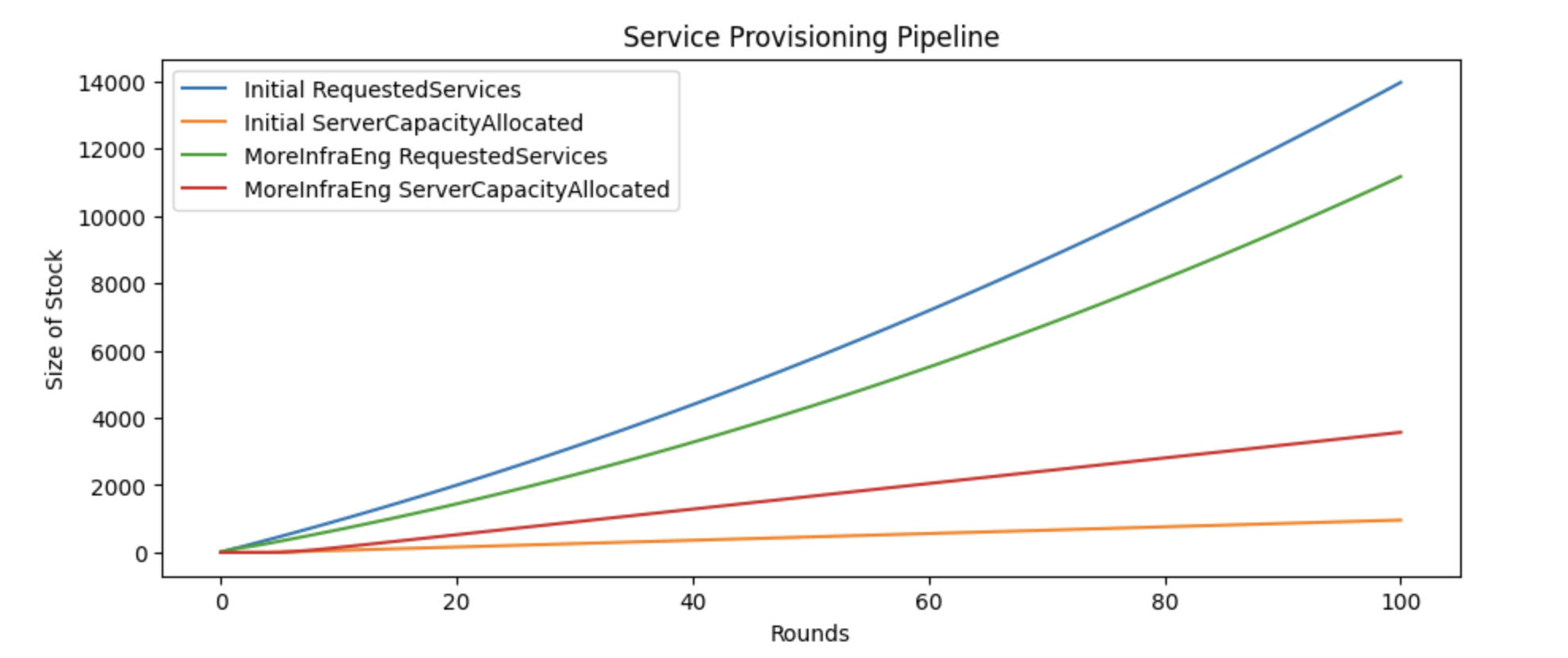

Next we can get a sense of the impact of hiring more people onto the service provisioning team to manually provision more services, which we can model by increasing the maximum size of the inflight services stock from 10 to 50.

# initial model

RequestedServices > InflightServices(0, 10) @ Leak(1.0)

# with 5x capacity!

RequestedServices > InflightServices(0, 50) @ Leak(1.0)

Unfortunately, we can see that even increasing the team’s capacity by 500% doesn’t solve the backlog of requested services.

There’s some impact, but not that much, and the backlog of requested services remains extremely high. We can conclude that more infrastructure hiring isn’t the solution we need, but let’s see if moving to self-service is a plausible solution.

We can simulate the impact of moving to self-service by removing the maximum size from inflight services entirely:

# initial model

RequestedServices > InflightServices(0, 10) @ Leak(1.0)

# simulating self-service

RequestedServices > InflightServices(0) @ Leak(1.0)

We can see this finally solves the backlog.
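The three capacity scenarios above (10, 50, and no maximum) can be compared side by side with a small plain-Python sketch. This is a simplification rather than the lethain/systems model: it ignores errors and collapses the downstream stages, on the assumption that with `Leak(1.0)` everywhere the inflight stock empties every round, so the only limit on admissions is the cap itself.

```python
def backlog_after(rounds, inflight_cap):
    """Backlog of requested services after `rounds`; `None` means no cap."""
    engineers, hiring_rate = 1000, 10
    requested = 10
    for _ in range(rounds):
        # One new request per ten product engineers, then hiring grows the org.
        requested += engineers // 10
        engineers += hiring_rate
        # Admissions are limited only by the size of the inflight stock.
        admitted = requested if inflight_cap is None else min(requested, inflight_cap)
        requested -= admitted
    return requested


for label, cap in [("initial model", 10), ("5x capacity", 50), ("self-service", None)]:
    print(f"{label}: backlog of {backlog_after(50, cap)} after 50 rounds")
```

Under these assumptions, quintupling capacity trims the backlog but leaves it growing every round, because arrivals start at roughly a hundred per round and keep rising with hiring. Only removing the cap keeps the backlog at zero.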

At this point, we’ve exercised the model a fair amount and have a good sense of what it wants to tell us. We know which errors matter the most to invest in early, and we also know that we need to make the move to a self-service platform sometime soon.

That's all for now! Hope to hear your thoughts on Twitter at @lethain!
