Claude 3.7 Sonnet and GPT-4.5 - Sync #508

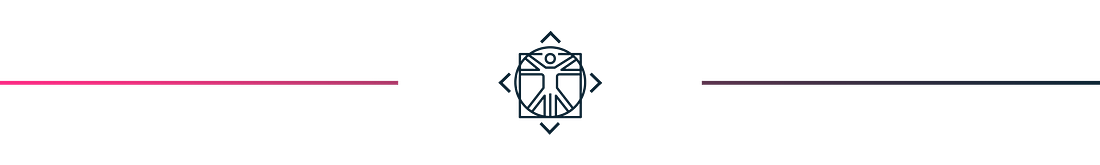

I hope you enjoy this free post. If you do, please like ❤️ or share it, for example by forwarding this email to a friend or colleague. This post took around eight hours to write. Liking or sharing it takes less than eight seconds and makes a huge difference. Thank you!

Plus: Alexa+; Google AI co-scientist; humanoid robots for home from Figure and 1X; miracle HIV medicine; a startup making glowing rabbits; and more!

Hello and welcome to Sync #508!

This week, both Anthropic and OpenAI released their newest models, Claude 3.7 Sonnet and GPT-4.5, respectively, and we’ll take a closer look at what both companies have brought to the table.

Elsewhere in AI, Amazon announced a new upgraded Alexa powered by Anthropic’s Claude. Meanwhile, Google released an AI co-scientist, OpenAI rolled out Deep Research, Sora arrived in the UK and EU, and DeepSeek accelerated the timeline for releasing its next model, R2.

In robotics, Figure and 1X announced their humanoid robots for home. Additionally, researchers from the RAI Institute taught Spot to run faster and built a bicycle-riding robot capable of doing some impressive tricks.

Beyond that, this issue of Sync also features a paper from Meta on decoding brainwaves into text, a miracle HIV medicine, a startup promising to deliver glow-in-the-dark rabbits and other fantastical animals as pets, and more!

Enjoy!

Claude 3.7 Sonnet and GPT-4.5

This week, we have seen the release of not one but two new models from leading AI labs, OpenAI and Anthropic. In this article, we will take a closer look at both models and what they tell us about the future trajectories of AI development.

Claude 3.7 Sonnet: Anthropic’s first hybrid reasoning model

Let’s start with Claude 3.7 Sonnet, Anthropic’s first hybrid reasoning model. According to Anthropic, this means their newest model is both a large language model and a reasoning model in one.
When I first heard rumours about this hybrid approach, I thought Anthropic’s new model would be able to dynamically switch between a fast, LLM-based mode and a slower but more powerful reasoning mode based on the prompt, thus removing the need for the user to decide which mode to use. However, that did not happen, and we still have to choose which mode we want to use (although Claude’s UI is a bit cleaner in that regard compared to ChatGPT’s).

Similar to what we have seen in other reasoning models, in Extended Thinking mode Claude takes some time to “think” about the prompt and self-reflects before answering it, which makes it perform better on math, physics, instruction-following, coding, and many other tasks. To support these claims, Anthropic released a number of benchmark results showing an uplift in performance with Extended Thinking compared to Claude 3.5 Sonnet. When compared to its competitors, Anthropic’s new model with Extended Thinking is usually close to other reasoning models.

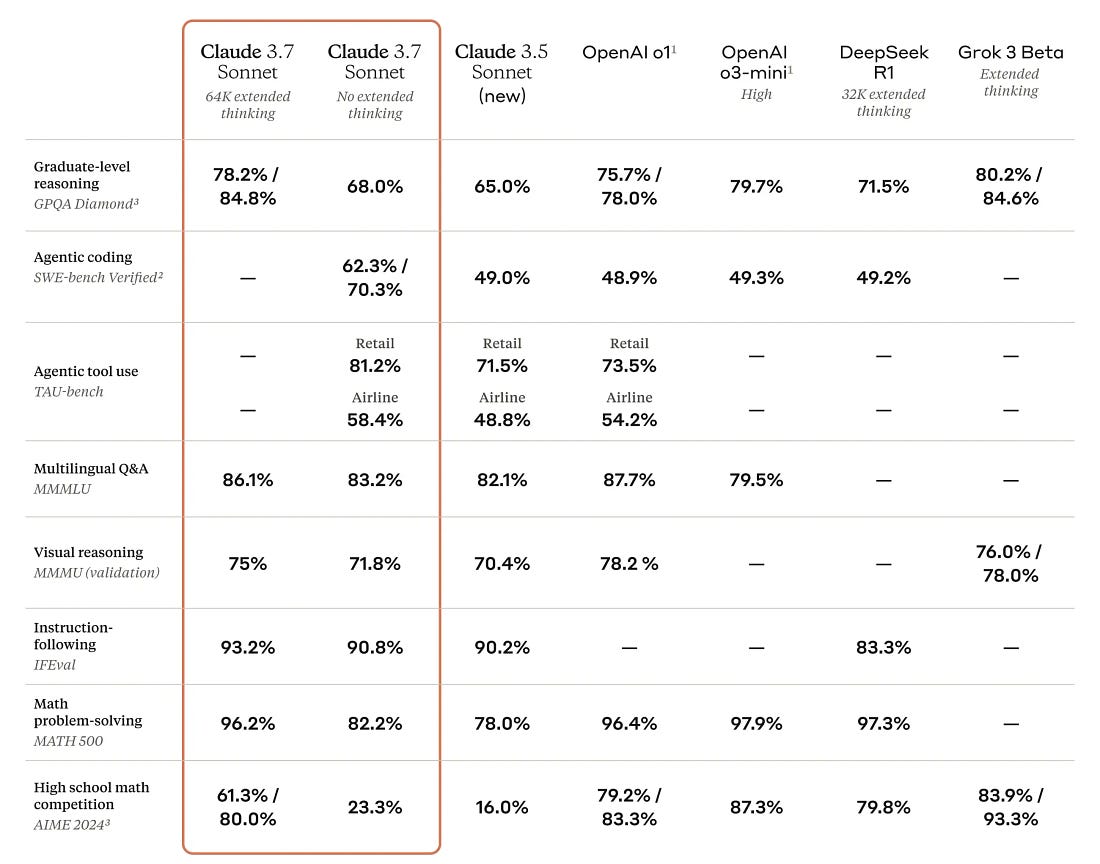

Additionally, Anthropic released benchmark results for coding and agentic tasks, both presenting Claude 3.7 Sonnet as a better option compared to Claude 3.5 Sonnet, OpenAI o1 and o3-mini (high), and DeepSeek R1.

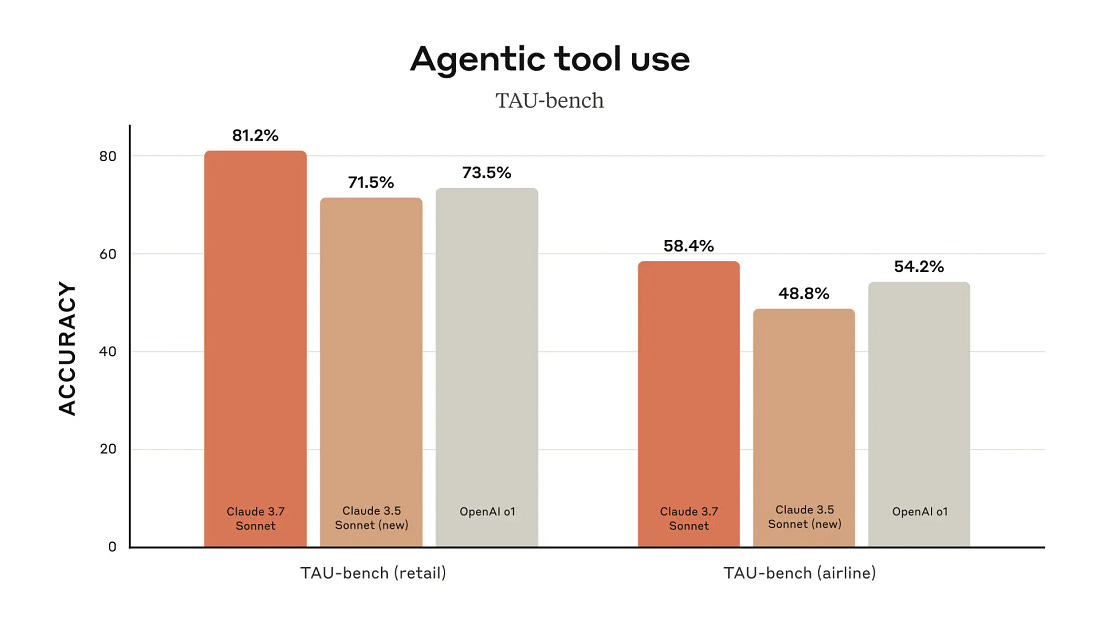

Anthropic also got creative in showing how good Claude 3.7 Sonnet is by making it play the Game Boy classic Pokémon Red. According to Anthropic, Claude 3.7 Sonnet progressed much further in the game than previous versions of Claude, which struggled with its early stages.

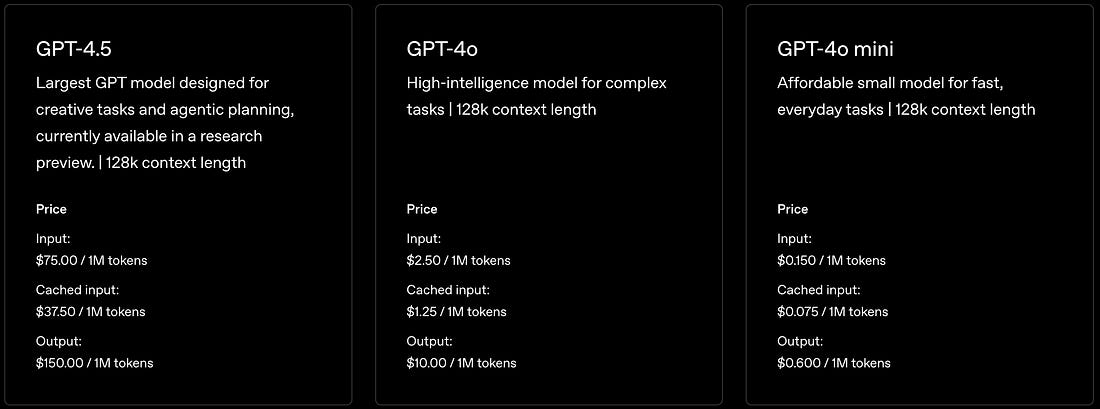

Please bear in mind that these are benchmark results provided by Anthropic and should be treated as marketing and taken with a grain of salt (this also applies to other companies).

Claude 3.7 Sonnet is now available on all Claude plans (Free, Pro, Team, and Enterprise) as well as the Anthropic API, Amazon Bedrock, and Google Cloud’s Vertex AI. However, the Extended Thinking mode is only available on paid plans. The API cost for Claude 3.7 Sonnet remains the same as its predecessors: $3 per million input tokens and $15 per million output tokens.

One interesting thing Anthropic showed us this week is Claude Code, Anthropic’s first agentic coding tool. Claude Code is designed to assist developers with tasks such as searching and reading code, editing files, running tests, and managing GitHub commits. According to Anthropic, Claude Code has already become indispensable for its engineering teams, who use it to streamline development by automating complex processes such as debugging and large-scale refactoring. Also, you know Claude Code is geared towards software developers since it is a terminal tool.

Claude Code is currently in a limited research preview. If you want to try it, you can download Claude Code from GitHub, though you will need an account with Anthropic.

GPT-4.5: meh?

OpenAI’s newest model, GPT-4.5, is an interesting release, to say the least. Ten days before the release, Sam Altman was hyping the upcoming GPT-4.5 model by saying on X that “trying GPT-4.5 has been much more of a "feel the AGI" moment among high-taste testers than i expected.”

Well, the reality turned out to be a bit different. As Sam Altman said in a tweet announcing the new model, GPT-4.5 is a giant and expensive model. OpenAI won’t disclose any information about the first claim (although the company confirmed it is its largest model to date), but the fact that GPT-4.5 is the most expensive OpenAI model in their API catalogue supports the second claim.
Compared to GPT-4o, the new model is 15 to 30 times more expensive. It is even more expensive to use GPT-4.5 via API than OpenAI’s reasoning models o1 or o3-mini.
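
To make the price gap concrete, here is a minimal sketch of the arithmetic. The Claude 3.7 Sonnet prices are the ones quoted above. The GPT-4o and GPT-4.5 per-million-token prices are assumptions: they are not stated in this post and reflect the list prices as I understand them at launch, so check the current pricing pages before relying on them. The function names and model keys are hypothetical and chosen only for this illustration.

```python
# Prices in USD per million tokens.
# Claude 3.7 Sonnet figures come from the article text.
# GPT-4o and GPT-4.5 figures are ASSUMPTIONS (not stated in the article).
PRICES = {
    "claude-3.7-sonnet": {"input": 3.00, "output": 15.00},
    "gpt-4o": {"input": 2.50, "output": 10.00},     # assumption
    "gpt-4.5": {"input": 75.00, "output": 150.00},  # assumption
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD of one request for the given token counts."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


def price_ratio(model: str, baseline: str, kind: str) -> float:
    """Return how many times more expensive `model` is than `baseline`.

    `kind` is either "input" or "output".
    """
    return PRICES[model][kind] / PRICES[baseline][kind]


if __name__ == "__main__":
    # A hypothetical request: 2,000 input tokens and 500 output tokens.
    for name in PRICES:
        print(name, request_cost(name, 2_000, 500))

    # Under the assumed prices, these ratios bracket the "15 to 30 times" range.
    print("input ratio:", price_ratio("gpt-4.5", "gpt-4o", "input"))
    print("output ratio:", price_ratio("gpt-4.5", "gpt-4o", "output"))
```

Under these assumed prices, the input ratio sits at the top of the quoted range and the output ratio at the bottom, so the effective multiplier for a real workload depends on its mix of input and output tokens.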

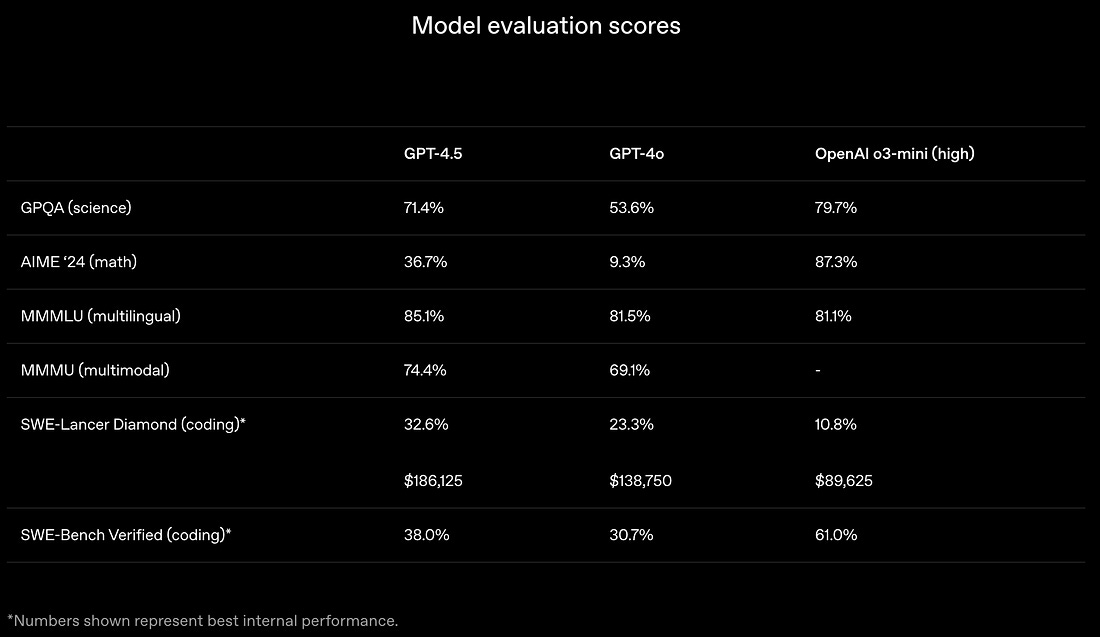

One might expect an equal uplift in performance to justify the steep price increase. However, if you look into the benchmark results provided by OpenAI, you won’t find 15 to 30 times more performance compared to previous models.

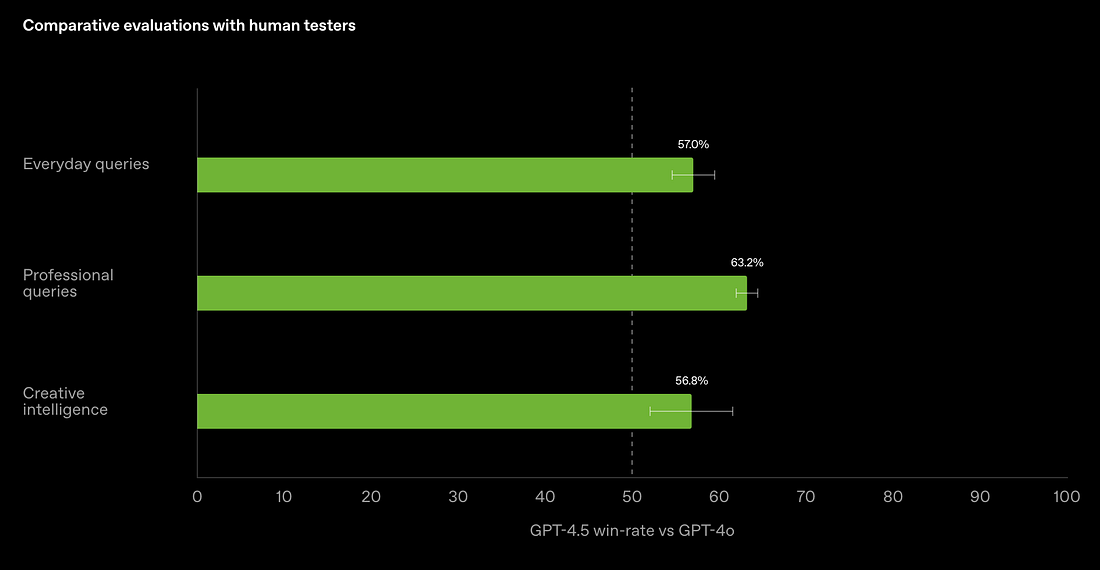

In some benchmarks, GPT-4.5 outperforms GPT-4o, with human testers preferring GPT-4.5’s answers in everyday and professional queries, as well as in queries requiring creativity.

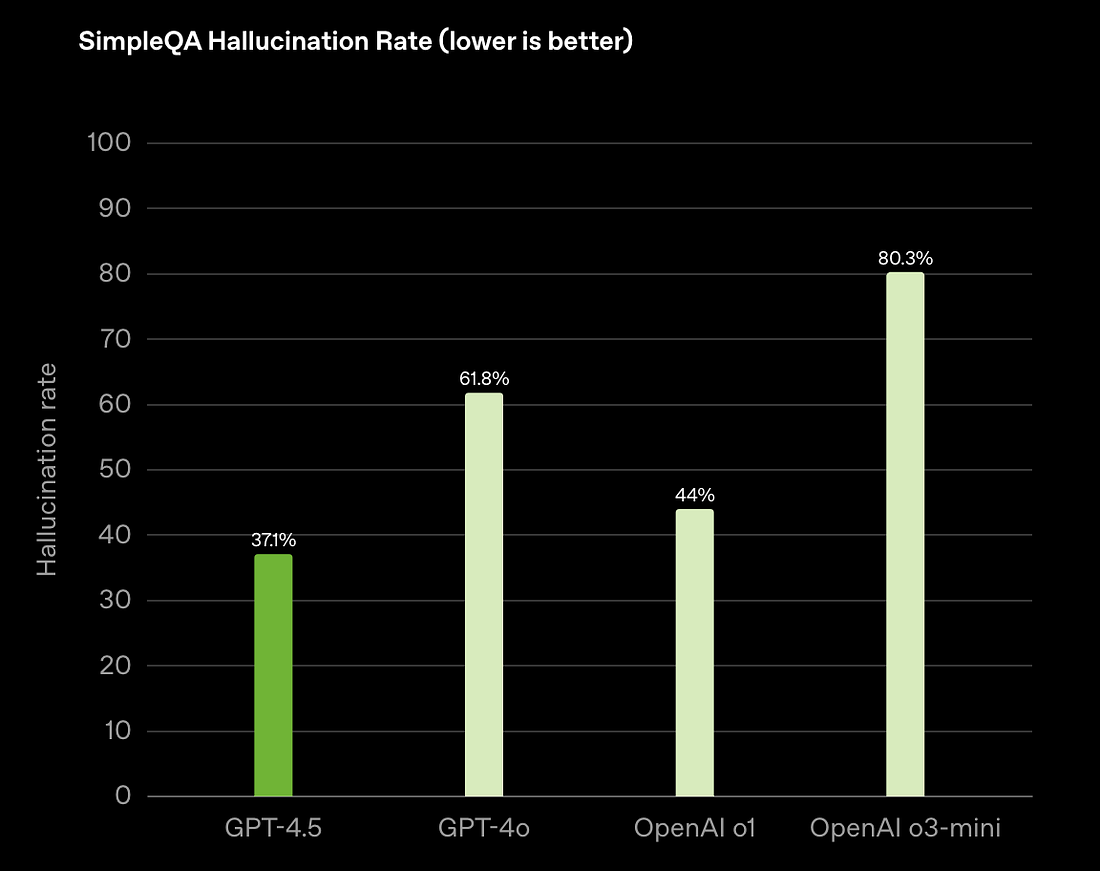

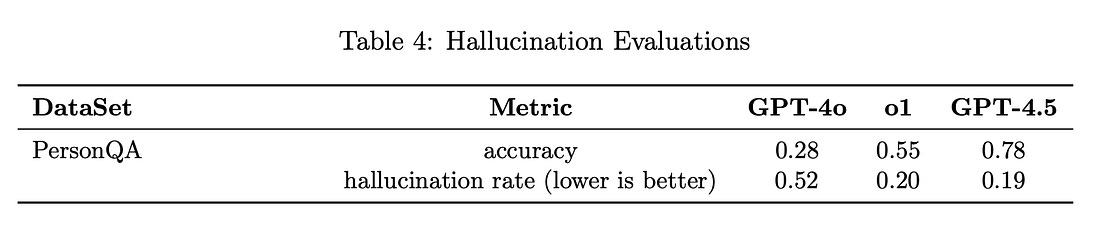

However, a look into the GPT-4.5 System Card reveals benchmarks, such as OpenAI’s Research Engineer interview, where the new model does exceed its predecessor (though not by much) but falls behind o1 or o3-mini. In some cases, both GPT-4.5 and the o-series models are significantly outperformed by Deep Research.

GPT-4.5 is also supposed to hallucinate less than other OpenAI models. The graph in the announcement shows a massive drop in hallucinations compared to GPT-4o or o3-mini, but a much smaller one compared to o1.

However, the System Card shows that GPT-4.5 has a lower hallucination rate than GPT-4o but is at the same level as o1 (although GPT-4.5 scores higher in accuracy on that benchmark).

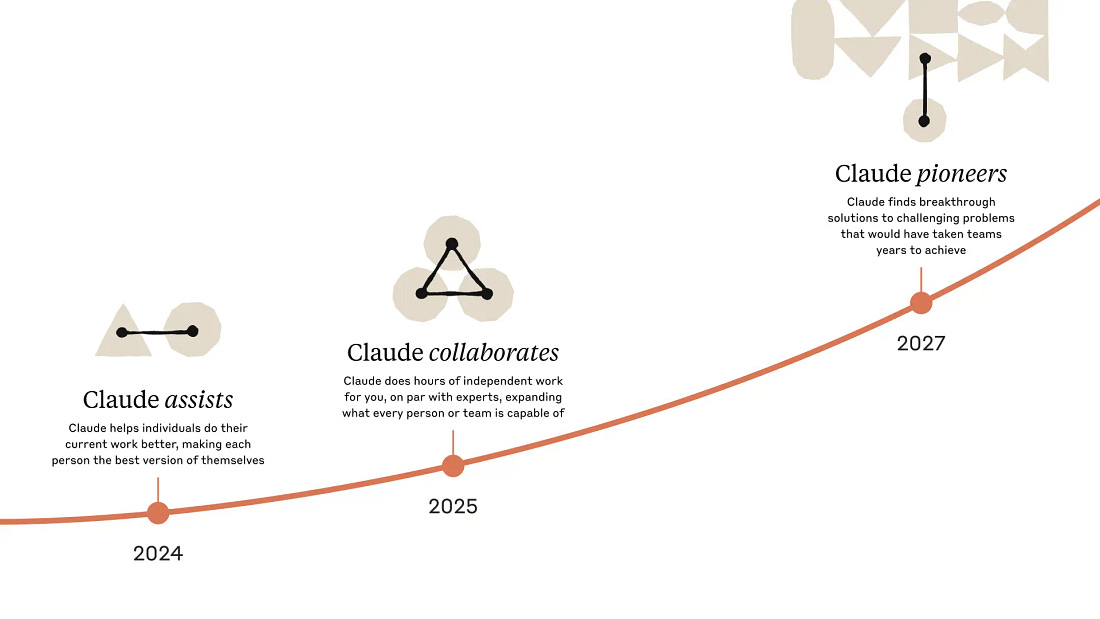

Additionally, OpenAI claims GPT-4.5 is its “best model for chat yet” and has greater “EQ”, or emotional intelligence. AI Explained checked the last claim and found that GPT-4.5 failed in his EQ evaluations while Claude 3.7 Sonnet provided expected answers.

At the time I am writing this article, GPT-4.5 is only available to ChatGPT Pro subscribers. OpenAI promises to begin rolling out to Plus and Team users next week, then to Enterprise and Edu users the following week. GPT-4.5 currently supports internet search, and file and image uploads. However, GPT-4.5 does not currently support multimodal features like Voice Mode, video, and screen-sharing in ChatGPT.

I might be harsh in criticising OpenAI, especially considering that Claude 3.7 Sonnet without Extended Thinking also did not show a massive improvement over Claude 3.5 Sonnet. However, Anthropic did not raise the cost of accessing its latest model through the API by 15 to 30 times. Additionally, Claude 3.7 Sonnet is available to all paying subscribers, not just those who pay $200 per month. GPT-4.5 comes with a significantly higher price tag than GPT-4o or even the o-series of reasoning models, yet it does not justify this cost with a proportional increase in performance.

There is also one more thing worth mentioning: in the first published version of the GPT-4.5 System Card, OpenAI stated that “GPT-4.5 is not a frontier model.” The latest version does not have that sentence anymore.

What Claude 3.7 Sonnet and GPT-4.5 tell us about future AI developments

What GPT-4.5 is showing is that we might have reached the point of diminishing returns when it comes to non-reasoning AI models. OpenAI has thrown a massive amount of computing power into creating its largest and most expensive model, and all that investment did not translate into an equally massive rise in performance. Sam Altman outlined in the roadmap for GPT-4.5 and GPT-5 that GPT-4.5 is OpenAI’s last non-chain-of-thought model.
As DeepSeek R1 showed us a couple of weeks ago and o3 before it, the next gains in performance will not come from training even larger language models but from relying more on test-time compute—creating AI models that take more time to “reason” before producing an answer. That’s the path the leading AI companies believe will lead to the next breakthroughs and, eventually, to AGI.

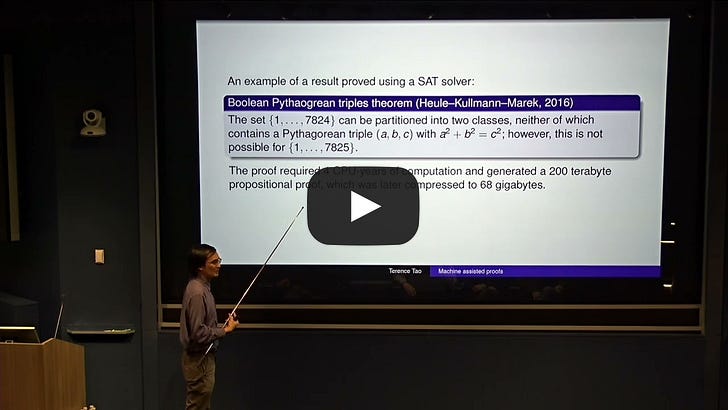

Do you like my work? Consider becoming a paying subscriber to support it. For those who prefer to make a one-off donation, you can 'buy me a coffee' via Ko-fi. Every coffee bought is a generous support towards the work put into this newsletter. Your support, in any form, is deeply appreciated and goes a long way in keeping this newsletter alive and thriving.

🦾 More than a human

Brain-to-Text Decoding: A Non-invasive Approach via Typing

First two-way adaptive brain-computer interface enhances communication efficiency

Supporting the first steps in your longevity career

🧠 Artificial Intelligence

Introducing Alexa+, the next generation of Alexa

Accelerating scientific breakthroughs with an AI co-scientist

▶️ there is nothing new here (30:31)
Angela Collier, a science communicator and YouTuber, responds to an article in New Scientist about the recently announced Google AI co-scientist. She criticises how AI advancements are often exaggerated in the media, misleading the public into believing AI can make groundbreaking discoveries when, in reality, it only reorganises existing information. Additionally, she warns about the monetisation strategies of AI companies, the ethical concerns of AI training on stolen data, and the risks of institutions becoming dependent on these tools.

OpenAI rolls out deep research to paying ChatGPT users

DeepSeek rushes to launch new AI model as China goes all in

Apple will spend more than $500 billion in the U.S. over the next four years

OpenAI’s Sora is now available in the EU, UK

DeepSeek goes beyond “open weights” AI with plans for source code release

Rabbit shows off the AI agent it should have launched with

US AI Safety Institute could face big cuts

The EU AI Act is Coming to America

▶️ Terence Tao - Machine-Assisted Proofs (59:11)
Terence Tao, considered one of the greatest mathematicians of all time, explores in this talk how AI and machine-assisted proofs can transform mathematics. He highlights how mathematicians have long relied on computational tools, from the abacus to logarithmic tables, and that the introduction of AI-powered tools is simply the next natural step. He recognises the transformative potential of these tools, such as enabling large-scale experimental mathematics, but does not yet see a definitive "killer app." Tao also emphasises key challenges, including AI's struggles with arithmetic, the inefficiency of formalisation, and the critical need for high-quality mathematical databases to fully unlock AI's potential in mathematics.

🤖 Robotics

1X, a Norwegian humanoid robotics startup, presents Gamma, a humanoid robot designed for household tasks. Unlike other such robots, Gamma features a soft, fabric skin for flexible and dynamic movements. The company envisions Gamma being used for cleaning and managing homes, as well as serving as a companion capable of having conversations, collaborating on various tasks, and even tutoring. 1X has not disclosed when the robot will be available for purchase or its price.

In this video, Figure demonstrates how its humanoid robot, Figure 02, can be used in logistics to pick up packages from a conveyor belt. The video showcases the robot's ability to identify objects and its dexterity.

Figure will start ‘alpha testing’ its humanoid robot in the home in 2025

Reinforcement Learning Triples Spot’s Running Speed
Using reinforcement learning, researchers from the RAI Institute increased the running speed of Spot, Boston Dynamics’ four-legged robot, from 1.6 m/s to 5.2 m/s, more than tripling its original factory speed. Moreover, the fast-running gait is not biologically inspired but optimised for the robot’s mechanics. Spot’s default control system (MPC) models and optimises movement in real time but has rigid limitations. Reinforcement learning, in contrast, trains offline with complex models in simulations, leading to more efficient and adaptive control policies. With this result, the RAI Institute has demonstrated that reinforcement learning is a generalisable tool that can expand robot capabilities beyond traditional algorithms, enabling robots to move more efficiently, quietly, and reliably in various environments.

▶️ Stunting with Reinforcement Learning (1:23)
Researchers from the RAI Institute present the Ultra Mobile Vehicle, a robotic bike that can not only ride but also jump and perform various tricks. This project showcases the potential of reinforcement learning, and the results are truly impressive.

Swarms of small robots could get big stuff done

🧬 Biotechnology

Making a “Miracle” HIV Medicine

Your Next Pet Could Be a Glowing Rabbit

💡 Tangents

This artist collaborates with AI and robots

Y Combinator Takes Heat for Helping Launch a Startup That Spies on Factory Workers

Thanks for reading.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support!

My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"
