Takeaways

Gatekeeping is usually done to preserve individual status rather than group status, and should be avoided

Cost of capital and illiquidity spirals are linked in a feedback loop

Feature post by Vicki Boykis on how we should pay attention to the man behind the AI curtain

1. Gatekeeping for group vs individual status

You've seen this before.

A beginner, starry eyed and bushy tailed, will come looking for advice on how to get started in a subject.


And a host of experts will emerge from the depths to tell them it can't be done, that they should go back and spend years learning the prerequisites, and they should be embarrassed for asking the question in the first place. "The nerve of some people, thinking they could avoid paying their dues."

With some "experts" even finding things to complain about when others launch courses to help beginners do just that.


We encounter gatekeeping all the time, and it's mostly done to preserve status. There are some valid forms of gatekeeping, and I'll come to that in a bit. But it's nearly always done to exclude people and be mean. Funnily enough, the gatekeepers never seem to realise that they could be excluded too.

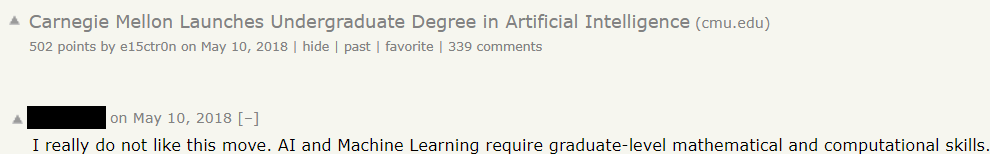

For example, you could say that you can't start machine learning unless you learn calculus, statistics, and linear algebra, just like the commenter above.


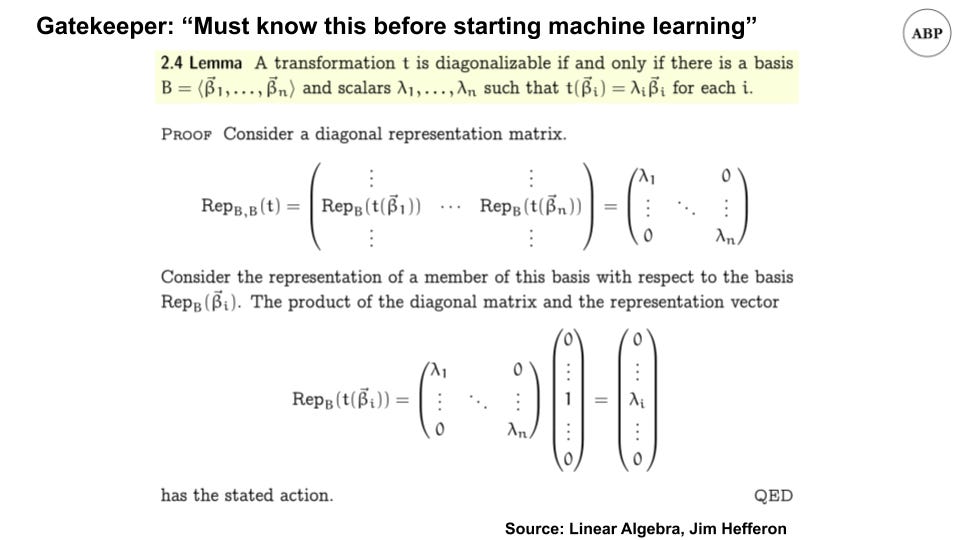

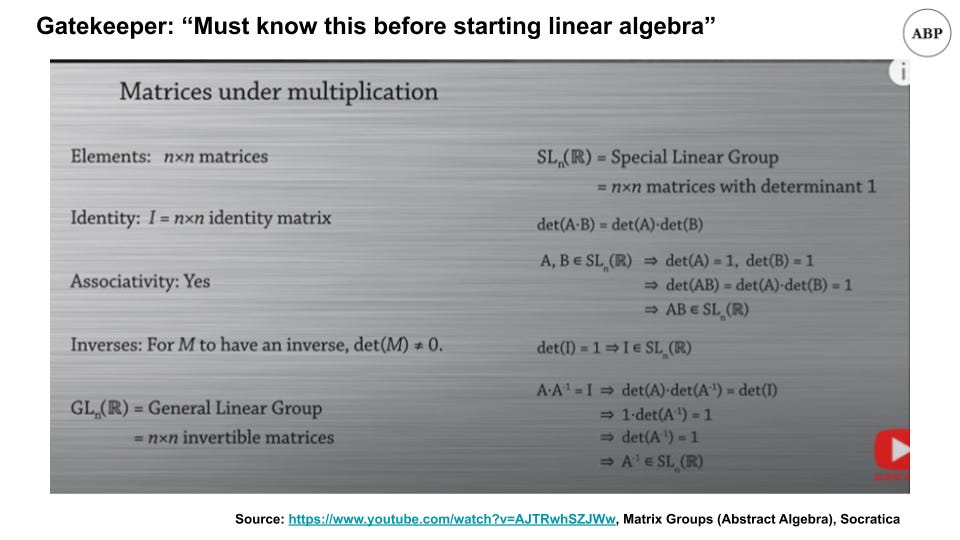

And you could also say that you can't start linear algebra unless you learn group theory, how matrices are a ring, and when to work with linear groups or not [1]


And you could gatekeep further and say that the above depends on set theory, Peano axioms, and philosophy. Wonder how much of that the commenter spent his undergrad studying.

If we wanted to, we could gatekeep anything.

We'd also never get anywhere since we'd never get started.

There are valid forms of gatekeeping. If you're excluding someone because they would be harmful to the community, that's reasonable. For example, if you're building a group of video gamers, and you get a membership request from someone who wants to ban video games, it probably doesn't make sense to accept her in.

More often though, gatekeeping is an attempt by individuals in the "in-group" to maintain their status, as if they somehow lose out the more people know what they know. It comes from a place of insecurity; from people afraid to let others realise what they do can be done by others too. As I wrote about last month, that's like shorting the lottery, and probably a bad idea. For example, the impressionist painters were all gatekept when they first started, but look at their influence in art now [2].

Now, note that the gatekeepers aren't totally wrong. In fact, their suggestions often make sense. For example, it would be tremendously helpful to know linear algebra while studying machine learning. And if you want to become an expert, you have to master all the math required [3]. But artificially preventing people from starting a subject doesn't help anyone. A better response would have been "Yes, here are some simpler courses to get started, come back and revisit the fundamentals after." Enable rather than disable.


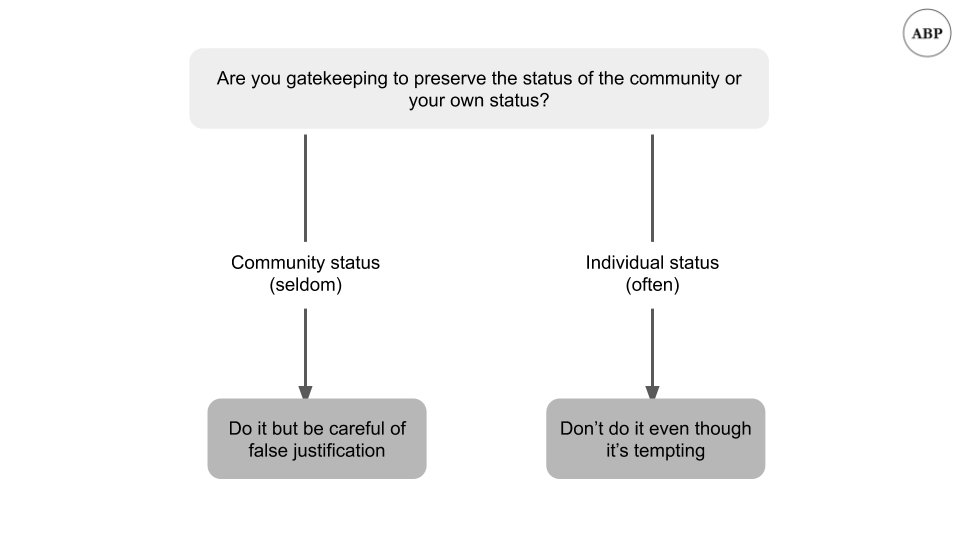

If you've been biased towards gatekeeping, I'd encourage thinking about whether you're helping the community, or helping yourself [4]. If you've self-selected into reading this newsletter, you can do better.

Fields are large enough that the more people pursuing them the better. It's rarely, if ever, a zero-sum game, and more of an infinite game. By being open-minded and accepting of beginners, we can grow the entire pie, and have more for ourselves. That's how we can drive progress as individuals; how we have our cake and eat it too.

Open more gates, and be kind.

2. Liquidity spirals

You've also seen this before.

A market is humming along, doing what markets do: balancing, and matching buyers and sellers. Then suddenly a shock occurs, the market freezes up, and it becomes "illiquid."

For example, how flour was in short supply a while back [5].

Or how the 2008 crisis caused runs on banking institutions, bringing some to bankruptcy.

And recall earlier this year when we discussed that liquidity was the cause of crises, not capital.

Today we'll look at highlights of the paper "Market Liquidity and Funding Liquidity" by Markus Brunnermeier and Lasse Pedersen. The paper's long and mostly mathematical [6], but we can still review the conclusions.

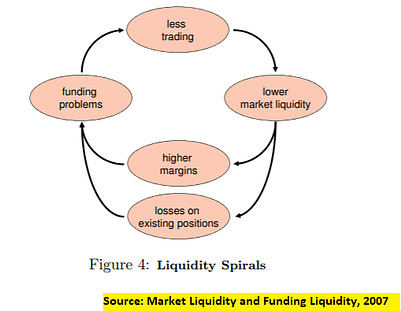

Markus and Lasse look at what causes these illiquidity spirals, finding that cost of capital [7] works in a feedback loop with liquidity. Harder to get funds, lower liquidity, higher volatility.

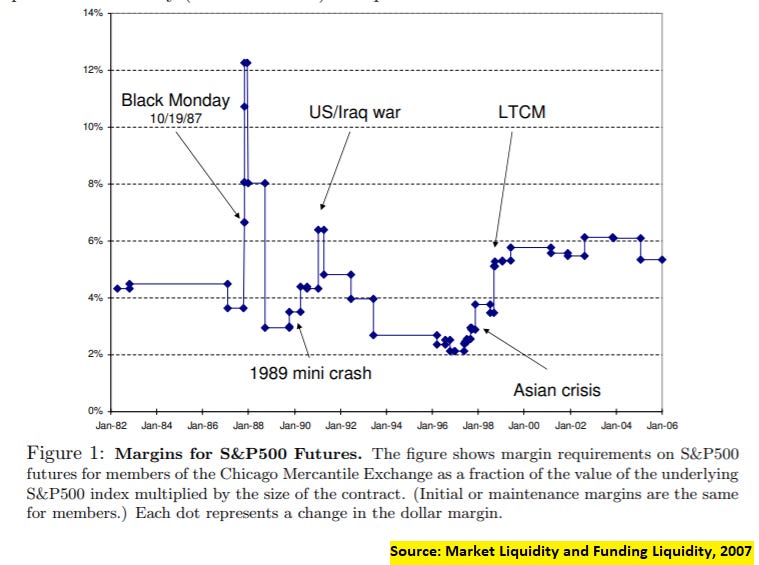

They first look at margin requirements [8], and note how they change in response to crises. As expected, margins (taking the role of costs here) rise when there's more uncertainty, which tightens funding and makes markets less liquid, and fall when there's less uncertainty.
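To make the margin idea concrete, here's a minimal arithmetic sketch. It assumes the definition the paper gives: the margin is the gap between a security's price and its collateral value, and the trader funds that gap with their own capital. The function names and the numbers are made up for illustration.

```python
def margin_per_unit(price, collateral_value):
    """Margin = the part of the price the trader cannot borrow against."""
    margin = price - collateral_value
    if margin <= 0:
        raise ValueError("collateral value must be below the price")
    return margin


def max_units(capital, price, collateral_value):
    """Largest position a trader can fund with their own capital."""
    return capital / margin_per_unit(price, collateral_value)


# Hypothetical numbers: same trader, same price, different lender mood.
calm = max_units(capital=1000, price=100, collateral_value=80)      # margin of 20
stressed = max_units(capital=1000, price=100, collateral_value=60)  # margin of 40

print(calm, stressed)  # prints 50.0 25.0
```

Doubling the margin halves the position the same capital can support, which is why higher margins in a crisis translate into less liquidity.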


Another way to reduce liquidity is to reduce capital of the participants:

Importantly, any equilibrium selection has the property that small speculator losses can lead to a discontinuous drop of market liquidity. This “sudden dry-up” or fragility of market liquidity is due to the fact that with high levels of speculator capital, markets must be in a liquid equilibrium, and, if speculator capital is reduced enough, the market must eventually switch to a low-liquidity/high-margin equilibrium

Which can lead to illiquidity spirals two ways:


first, a “margin spiral” emerges if margins are increasing in market illiquidity because a reduction in speculator wealth lowers market liquidity, leading to higher margins, tightening speculators’ funding constraint further, and so on

Second, a “loss spiral” arises if speculators hold a large initial position that is negatively correlated with customers’ demand shock

The first seems self explanatory; what the second means is that if you're a forced seller, the price is going to drop a lot, and you're going to have to sell more.
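Here's a toy sketch of how the two spirals feed each other. To be clear, this is not the model from the paper: the linear price impact, the margin rule, and every parameter name below are my own simplifying assumptions, chosen only to show the feedback loop.

```python
def simulate_spiral(capital, units, price, margin, shock,
                    impact=0.05, margin_sens=0.5, max_rounds=50):
    """Toy forced-selling loop (illustrative assumptions, not the paper's model).

    margin is the fraction of position value funded with own capital.
    Returns one (units, price, margin, capital) tuple per round of forced selling.
    """
    history = []
    capital -= shock  # the initial loss that starts everything
    for _ in range(max_rounds):
        required = margin * units * price
        if capital >= required or units <= 0:
            break  # funding constraint holds, or nothing left to sell
        # Forced sale: shrink the position to what the capital can support.
        affordable = max(capital, 0.0) / (margin * price)
        sold = max(units - affordable, 0.0)
        sold_fraction = sold / units
        units = affordable
        # Assumed linear price impact: selling pushes the price down.
        new_price = price * (1 - impact * sold_fraction)
        # Loss spiral: the remaining holdings lose value.
        capital -= units * (price - new_price)
        # Margin spiral: financiers raise margins as liquidity worsens.
        margin = min(1.0, margin * (1 + margin_sens * sold_fraction))
        price = new_price
        history.append((units, price, margin, capital))
    return history


# Hypothetical starting point: the funding constraint is exactly binding.
start = dict(capital=20.0, units=100.0, price=1.0, margin=0.2, shock=1.0)
with_feedback = simulate_spiral(**start, margin_sens=0.5)
without_feedback = simulate_spiral(**start, margin_sens=0.0)

print("final price, margins react:", with_feedback[-1][1])
print("final price, margins fixed:", without_feedback[-1][1])
```

In this sketch the same small loss pushes the price lower when margins react to the selling than when they stay fixed. That's the margin spiral stacked on top of the loss spiral.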

This leads them to conclusions for both investors and central banks:

For investors, they raise the importance of having a margin of safety, which seems obvious:

Finally, the risk alone that the funding constraint becomes binding limits speculators’ provision of market liquidity. Our analysis shows that speculators’ optimal (funding) risk management policy is to maintain a “safety buffer.”

More interesting is what they conclude about central banks and monetary policy.

central banks can help mitigate market liquidity problems by controlling funding liquidity. If a central bank is better than the typical financiers of speculators at distinguishing liquidity shocks from fundamental shocks, then the central bank can convey this information and urge financiers to relax their funding requirements

Central banks can also improve market liquidity by boosting speculator funding conditions during a liquidity crisis, or by simply stating the intention to provide extra funding during times of crisis

Which is what you're seeing in today's markets. Note that there are three separate actions a central bank can take here: 1) convey information, 2) provide funding, 3) just say they may provide funding; they might not even need to do so in the end. In the current crisis, markets recovered after (3), even though the actual funding provided was small.

Even the Fed has realised it shouldn't be the gatekeeper of last resort.

3. Pay attention to the man behind the curtain

This month's guest post is from Vicki Boykis of Normcore Tech, "a newsletter about making tech less sexy, more boring, and anything adjacent to tech that the mainstream media isn't covering." Vicki's excellent for anything data related, and the one memory that sticks out the most for me is when she predicted Netflix would introduce a "top 10 most popular" row.


You've not seen this before, and here's Vicki (any emphasis mine):

If you've ever seen a Boston Dynamics video, it's easy to be convinced that algorithm-wielding robots are going to transform our society into an unrecognizable, metallic, cyber dystopia.

Boston Dynamics, now a subsidiary of SoftBank, became famous for their work on robots like BigDog - elegant machines that can walk and move with terrifying anthropomorphic flexibility. Early videos featured hilarious failures and stilted movements. But the company's latest robots are eerily approaching the uncanny valley of robotic-human movement by doing flips, navigating deftly around furniture, and opening doors with nightmarish neck-claws.

News stories tell us to respect the awesome capabilities of artificial intelligence, or the ability for computers to autonomously solve problems on the same level as humans. Our relationship as a society with AI has been not unlike Dorothy's with the Wizard of Oz. We've been told we need to make the long, dangerous, exhausting trip to find a powerful, omnipotent being who can grant all of our wishes.

The public response to Boston Dynamics robots is the clearest evidence of that. Blaring headlines call their progress "astounding and terrifying." These headlines seem scary because there's an element of powerful, smart, unknowable machines deciding the fate of humans, for the worse. But if we peel back the layers, we find that the real problem is not machines. The problem is people.

Take, for example, a 2018 case where, during an Uber autonomous vehicle pilot program, a self-driving car struck and killed a pedestrian during tests in Arizona. A Volvo SUV was "driving" in Tempe, with an operator in the driver's seat. She was not driving, but was monitoring the vehicle. It was almost ten p.m. The pedestrian was crossing the road, unanticipated, with a bicycle, in a place where there was no crosswalk, and the car detected the pedestrian only 5.6 seconds before impact.

The initial media and public response was one of shock, disbelief, and fear that robots were taking over the roads, and that this was a bleak path forward for humanity. However, after months of local and federal investigation, a different and very complicated picture emerged. Recently, the National Transportation Safety Board conducted an investigation and released the detailed case incident, and the report paints a complex picture of many interlaced factors going wrong at the same time.

There were several issues at play. First, in the seconds right before the collision, the operator was looking at her cell phone, and not at the road. Second, with the way the car was built, there was no way for the AI system to activate the brakes. Only the human could do so, but because she was distracted, she didn't. Finally, the system itself didn't recognize that there was a pedestrian on the road until five seconds before it was too late, because it wasn't built to recognize jaywalkers.

There are a lot of illuminating lessons here. First, the algorithm developed for steering the car was missing some key information. In order for a car to be able to recognize specific objects, it has to be fed lots of other pictures of those same objects to infer from. In machine learning this is known as a training set. How were the engineers and data scientists who created the training set to know that they should have included lots of pictures of people jaywalking, at night? Moreover, even if they had included them, what other scenarios would they have to anticipate, in order to cover the whole range of things that can happen when a car is driving? Presumably, here, they should have been working with safety experts and law enforcement officials before the car was ever on the road.

But often, in corporations where there are tight loops between R&D and putting products out into the market, such as there is in the competitive self-driving vehicle industry, machine learning and research teams are constantly under pressure to perform and put out new, impressive results, with little time to reflect, and with little input from anyone other than immediate managers and executives.

Ultimately, if leadership says cars have to go out as soon as possible, there's no time for engineers to think through all of the ramifications of algorithms, and certainly no time, or incentive, to include outside stakeholders.

Which is why, if the algorithm was imperfect, the manual operator and other safety systems should have helped. But, as the report noted, Uber Advanced Technologies Group, at the time, had "an inadequate safety culture." That is, the group had no personnel with backgrounds in safety management and no risk assessment mechanisms within the physical car itself. And, because there was no culture of safety, there was no way for the algorithm to engage the brakes.

So, if the algorithm failed and the autonomous braking system failed, the operator should have been the safety valve and been easily able to stop what was happening. But, she wasn't paying attention.

And so it wasn't the algorithm itself that was the core issue, but a number of very human pressures: Uber's corporate safety culture at the time, go-to-market pressures, the lack of domain experts involved in reviewing the algorithm, the lack of safety valves in the car, the lack of operator preparedness, and the unpredictability of a pedestrian crossing a multi-lane highway late at night.

And if Uber couldn't (or wouldn't) get this right, then how can other companies operating under the same market pressures and with the same people issues be expected to? Having worked on a range of machine learning projects, I can say with confidence that technical issues usually pale in comparison to problems of corporate politics, and of multiple, compounded problems that no one saw coming in the system. AI systems in and of themselves are very complicated technologies, with many moving parts and many places where they can fail. But when you add people on top, it becomes almost impossible to police.

People rush work due to internal deadlines. People misunderstand metrics. And perhaps most importantly, executives look mainly at immediate financial incentives and deadlines as opposed to examining the holistic picture that may result in short-term losses, but long-term gains.

Show me the company's financial incentives, and I'll show you how and why it acts the way it does. In another example, Netflix recently changed its recommendation algorithm, which picks what to show you on the home screen, so that the "viewing" metric went from counting anyone who "watched 70 percent of a single episode of a series" to anyone who "chose to watch and did watch for at least 2 minutes."
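A quick sketch of how much a definition change like that can move the numbers. The sessions below are made up, the function names are hypothetical, and this is certainly not Netflix's actual code. It only applies the two quoted definitions to the same viewing data.

```python
from dataclasses import dataclass


@dataclass
class Session:
    minutes_watched: float
    episode_minutes: float


def is_view_old(session):
    """Old definition: watched 70 percent of a single episode."""
    return session.minutes_watched >= 0.7 * session.episode_minutes


def is_view_new(session):
    """New definition: chose to watch and watched at least 2 minutes."""
    return session.minutes_watched >= 2


# Hypothetical sessions for one 45-minute episode.
sessions = [
    Session(minutes_watched=3, episode_minutes=45),
    Session(minutes_watched=40, episode_minutes=45),
    Session(minutes_watched=1, episode_minutes=45),
    Session(minutes_watched=10, episode_minutes=45),
]

old_views = sum(is_view_old(s) for s in sessions)
new_views = sum(is_view_new(s) for s in sessions)

print(old_views, new_views)  # prints 1 3
```

Same audience, same behaviour, three times the "views." The algorithm didn't get smarter; the yardstick got shorter.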

What this probably means in reality is that the market for streamable content is becoming extremely saturated. Previously, Netflix was in the lead, but now it has to compete with a number of services, including Disney+. It's under pressure to perform well with its original content, which it spent a lot of time and money on, which means changing the numbers to try to better reflect that.

It's usually these incentives and pressures, more so than the actual components of AI algorithms, that we have to think about when we read and evaluate stories of AI dystopian woe in the news. While the algorithms are, for sure, hard to get right, what's even harder is to predict and dig into the behaviors of these algorithms in conjunction with the systems of people who shepherd them out into the larger world. And that, not the robots, is the concerning part.

When Toto pulls back the curtain on the wizard, the wizard is angered, and shouts, "Pay no attention to the man behind the curtain," as he frantically tries to turn the knobs on the great machine he's built.

In reality, it turns out that the Wizard of Oz is not unlike most AI systems: a great, terrifying machine, propped up entirely by people with their own motivations. But, just like companies need to improve their accountability, so too, we the public, need to start being more like Toto, more critically reading news stories and pulling back the curtain on what's truly going on behind the scenes instead of working off knee-jerk reactions. And, the more media offers us these second-level insights on the people systems behind the AI systems, the better equipped for the robotic future we'll all be.

Questions for Vicki

1. What are you practicing deliberately? E.g. in the same way a football player would train drills to consciously improve

Trying not to read Twitter before bed. I was able to do this for 2 weeks before I reinstalled the app on my phone. I’m going to try again next month. I hate that I am dependent on my dopamine reactions to the news and need to get better about it.

And another one is knee-jerk reacting to news events as they happen. I’ve gotten better about not quote-tweeting stuff or posting about it as it happens and letting things unspool for a week or so, so I can digest and cover in my newsletter.

And a third is taking a walk every day.

2. What are the top frameworks you use to understand the world?

Fix the internet by writing good stuff and being nice to people

A group of people on the internet will try to tear each other apart

3. What do you wish more people ask you about?

What it's like to be a working parent, particularly mom, during COVID. There is an enormous swath of women who are dropping out of the workforce to now handle their kids' education and it's not an issue we're paying enough attention to

Shoutouts

Over 16,000 innovative startup and tech professionals subscribe to Charlie O’Donnell’s weekly newsletter, which started over ten years ago, before newsletters were cool. He shares info on fundraising and startup strategy, and maintains a huge list of high-quality weekly events for anyone working on either the next big thing or just their next big thing. Sign up here: www.nycweeklynewsletter.com

I spoke with the founder of Spendyt, a simple way to track your budget in an email-optional way

I caught up with the founder of Explo, a YC company that enables companies to build user-facing analytics, like dashboards and reports, seamlessly integrated into your web app

I chatted with the CEO of Savitude, which is using AI to design clothes faster

Other

"Colour blindness is an inaccurate term". As a colour blind person this was cool to read, especially since it had new info

"The long tail turns out to be a major cause of the economic challenges of building AI businesses"

Intro to abstract algebra and group theory by Socratica (youtube series). Recommended, suitable for beginners.

Survey

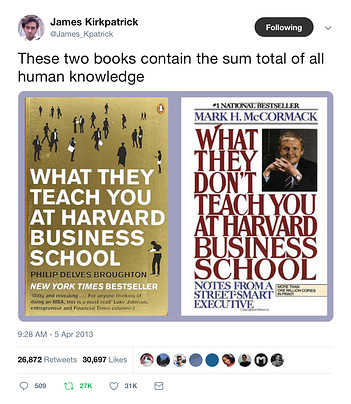

It's not too late to take the 2 min reader survey! So far I've gotten responses describing the newsletter as "The most bizarre interesting discussion on popular topics" (a glowing review in my books) to "Not sure, honestly not differentiated" (not so great), reminding me of the tweet below. More responses are much appreciated!


Footnotes

[1] Having not known about group theory until this year, I'd have to say it's the most fascinating thing I've learnt about the entire year. It really helped in giving me intuition behind why we define algebra "things" and "operations" the way we do. Why is matrix multiplication not commutative, for example?

[2] Impressionists first got their name from critics ridiculing them for their "unfinished" artwork that were mere "impressions"

[3] To be clear - I agree that in order to become good at machine learning, you'll want to be good at calculus, stats, linear algebra etc. The commenter is right in that regard. I disagree that we should gatekeep people for years because they haven't learnt all that's needed

[4] I've skipped discussion about safety issues e.g. you should gatekeep someone from free soloing if they've never climbed anything before. I trust in the reader to have common sense here; hopefully that's not too much of an ask

[5] You'll notice I didn't use toilet paper as an example. That's because I have a strong opinion against a popular TP medium article that went viral a while ago which I believe to be mostly inaccurate. I'm planning to write about it and those kinds of "here's a counterintuitive explanation" articles that are not counterintuitive but just wrong; haven't had time for it yet.

[6] Also, full disclosure that I don't fully understand the math in the paper. Particularly, there's a speculator skewed return conclusion that I don't quite follow.

[7] For readers unaware of what cost of capital means, think of it as the cost of funding. I wrote about it more previously for paid subs here

[8] "When a trader — e.g. a dealer, hedge fund, or investment bank — buys a security, he can use the security as collateral and borrow against it, but he cannot borrow the entire price. The difference between the security’s price and collateral value, denoted as the margin, must be financed with the trader’s own capital"