The COVID-19 pandemic, which has already killed more than 250,000 people in the United States, is raging. This month, however, there was a potential light at the end of the tunnel. Pfizer and Moderna released the initial results of their Phase III coronavirus vaccine trials. Both companies reported their vaccines were more than 90% effective with no serious safety concerns. This far exceeded the government requirement that vaccine candidates be at least 50% effective. Both companies say they plan to ask the FDA for an emergency use authorization this year. The results brought hope that, sometime next year, the virus can be brought under control.

But the vaccines only work if enough people take them. And it's far from certain that this will happen in the United States.

A Gallup poll released on Tuesday found that 58% of Americans would be willing to be vaccinated against COVID. That still leaves 42% of Americans who, thus far, would be unwilling to receive a vaccine. The finding represents an improvement from September, when Gallup found that 50% of Americans would not take a COVID vaccine. But the results are "still indicative of significant challenges ahead for public health and government officials in achieving mass public compliance with vaccine recommendations."

It could require 70% or more of Americans to develop immunity to COVID, either through infection with the virus or through a vaccine, for the pandemic to end. So the reluctance of many Americans to be vaccinated could have significant consequences.

Facebook acknowledges that its platform became a key vector for the spread of misinformation about vaccines. Over the last couple of years, the company has announced a series of steps to limit vaccine misinformation on the platform. In March 2019, Facebook said it was working "to tackle vaccine misinformation on Facebook by reducing its distribution and providing people with authoritative information on the topic." The company said it would take action against Pages and groups that post "vaccine hoaxes," as identified by the World Health Organization and the U.S. Centers for Disease Control and Prevention.

In October 2020, Facebook expanded its policy and said it would begin "rejecting ads globally that discourage people from getting a vaccine." Critically, this policy does not apply to organic content. So users can continue to post vaccine misinformation — including misinformation intended to discourage people from getting a vaccine — on Facebook.

While it is clear Facebook has taken steps to reduce the distribution of vaccine misinformation, a Popular Information investigation reveals that such misinformation continues to find substantial audiences on the platform. Further, Facebook's efforts to counteract vaccine misinformation are often confusing and opaque.

The state of vaccine misinformation on Facebook

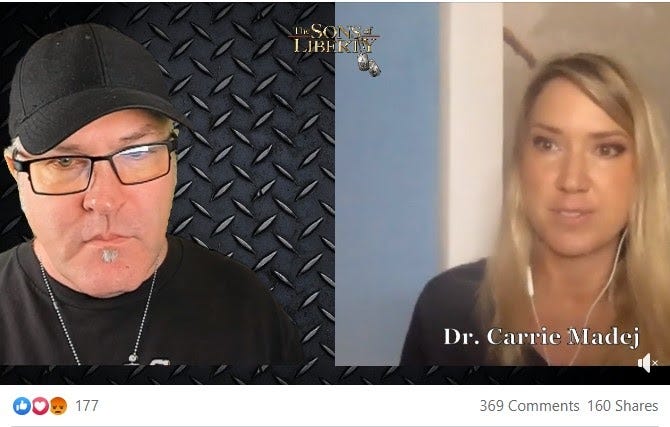

It's not hard to find coronavirus vaccine misinformation on Facebook. Bradlee Dean’s Facebook page has over 752,000 followers and is a “super-spreader” of health misinformation. The page features a verified Facebook badge and is listed as “Media/News Company.”

This week, Dr. Carrie Madej joined Dean on Facebook Live and falsely alleged that the COVID-19 vaccine will genetically modify recipients’ DNA. This is a common myth among anti-vaxxers, but has been repeatedly debunked by experts. It is not possible for mRNA vaccines to alter your DNA. Madej adds that this “genetic modification” could lead to humans who are “more cyborg than human” and are “harder to kill,” resulting in a situation she likens to the film “I Am Legend.”

Madej also falsely claimed that coronavirus vaccines will contain cancer-causing viruses, bacteria, and HIV. “They're covering this up...The vaccines themselves are causing HIV. We need to be really careful, infections are in there as well,” said Madej. Since Monday, Madej’s interview with Dean has garnered 6,200 views and has been shared at least 160 times.

The Facebook page WorldTruth.TV, which has 1.7 million followers, recently shared a post with the headline “Corrupt Vaccine Industry Has The Motive To Stage A Massive False Flag ‘Outbreak’ To Demand Nationwide Vaccine Mandates.” In the piece, the author argues that vaccine manufacturers are conspiring with the government to spread disease and place the government in “absolute control over your body.”

The Stop Mandatory Vaccination Facebook page has more than 168,000 followers. The page, which mostly shares anecdotes of those who claim “vaccine injury,” is run by Larry Cook – a “healthy lifestyle advocate” who has managed to earn significant income by selling self-published books. He also raised money for his work on GoFundMe before he was banned from the platform last year.

On October 18, the page claimed that “EVERY VACCINATION CAUSES HARM.” A week later, the page asserted that vaccines are “composed of multiple toxic and dangerous ingredients that *always* cause some harm - or cause GREAT HARM - to those who receive them.”

A few hours after Popular Information contacted Facebook for comment, the entire Stop Mandatory Vaccination page was removed. Facebook claimed the removal was a result of the page violating Facebook's QAnon policy.

"We are committed to reaching as many people as possible with accurate information about vaccines, and launched partnerships with WHO and Unicef to do that. We've banned ads that discourage people from getting vaccines and reduced the number of people who see vaccine hoaxes verified by the WHO and the CDC. We also label Pages and Groups that repeatedly share vaccine hoaxes, lower their posts in News Feed, and do not recommend them to anyone," Facebook spokesperson Dani Lever said in a statement to Popular Information.

There was no label on any of the Facebook pages featured in this report that identified them as a source of vaccine misinformation.

Critical information remains hidden from most users

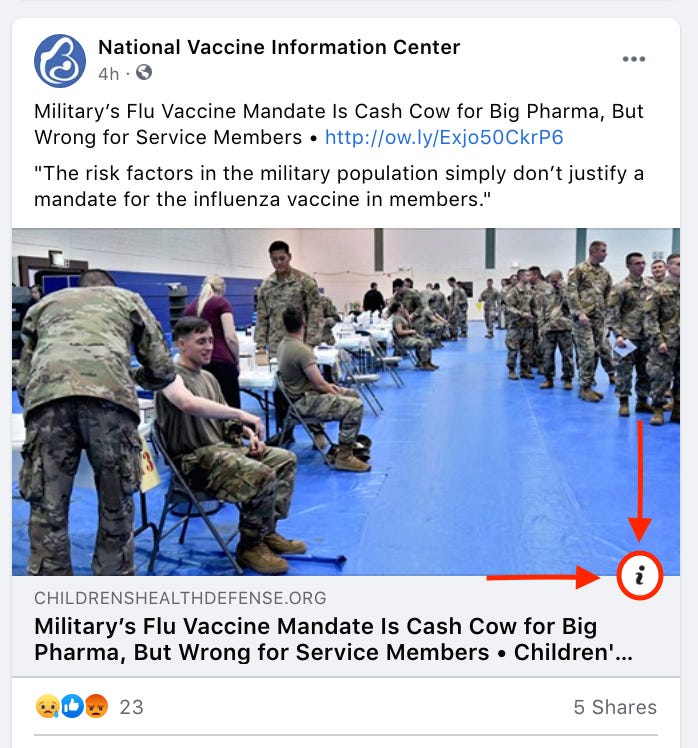

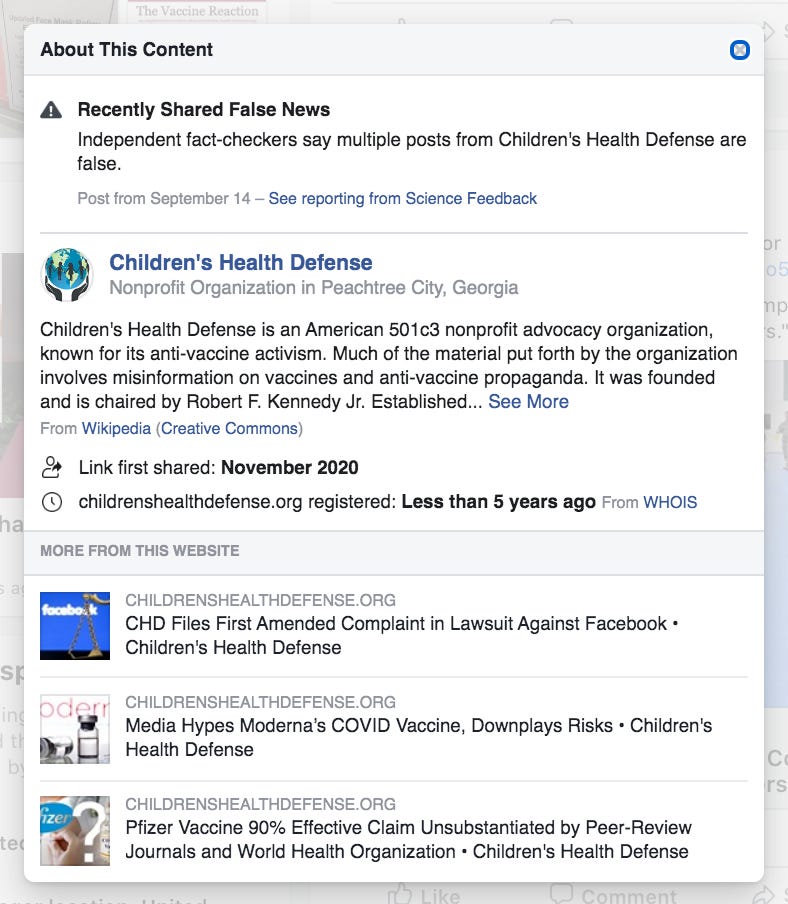

Many articles shared on Facebook feature an “About This Content” label displayed in the corner of the post. The label highlights general information about the outlet that a link is from, notes if the outlet has recently shared false news, and features additional content from the outlet. In essence, it provides users with context on the reliability of a link.

Despite this seemingly important function, these information labels are small and barely noticeable. In other words, users can unknowingly interact with misleading or false content unless they notice and click on the label.

The National Vaccine Information Center (NVIC), for example, is one of the nation’s leading sources of vaccine misinformation. Its Facebook page, which has over 209,500 followers, frequently amplifies content from news outlets that push false information.

Yet, when browsing through the page’s feed, the NVIC appears as though it’s a reliable source of information. Although most of the links feature information labels, there is nothing obvious that signals to the user that the content is unreliable.

Without clicking on the information label, a user would not know, for instance, that a post came from a site that Facebook’s independent fact-checkers have identified as frequently publishing false information.

Additionally, Facebook provides links to more content from the same unreliable sources.

The broader issue is Facebook’s failure to disclose how frequently the NVIC shares information from false news sources. Seven of the 10 most recently shared links on the NVIC’s page, Popular Information found, came from outlets that Facebook’s independent fact-checkers have flagged for misinformation.

YouTube takes aggressive action, lacks follow-through

While Facebook allows vaccine misinformation to be published on its platform with somewhat reduced distribution, YouTube recently decided to ban it entirely. On October 14, YouTube announced that videos with claims "about COVID-19 vaccinations that contradict expert consensus from local health authorities or WHO" would be removed. "A COVID-19 vaccine may be imminent, therefore we’re ensuring we have the right policies in place to be able to remove misinformation related to a COVID-19 vaccine," Farshad Shadloo, a YouTube spokesman, told The Verge. YouTube also said it would reduce the spread of "borderline" content about vaccines.

But YouTube's implementation of its policy is lacking. Bradlee Dean's video from Monday, which is full of the kind of misinformation YouTube says it would remove, is still available on the platform and has been viewed thousands of times. Dean's guest, Dr. Carrie Madej, also maintains a popular YouTube page, which she uses to spread misinformation about vaccines.

Larry Cook, who ran the Stop Mandatory Vaccination page on Facebook, maintains a YouTube channel with over 50,000 subscribers.

WorldTruth.TV also has a smaller YouTube channel that includes vaccine misinformation.