Avoid boring people - Entropy and information entropy

Takeaway

In physics, entropy measures disorder; in information, entropy measures the average amount of information.

Book club announcement

I announced the ABP book party a few weeks ago and the response has been overwhelming. That’s awesome, but it unfortunately also means I can’t accept everyone who applied. I thought about doing multiple sessions, but that still wouldn’t be feasible¹, so here’s what I’m planning (shoutout to some readers for the suggestions):

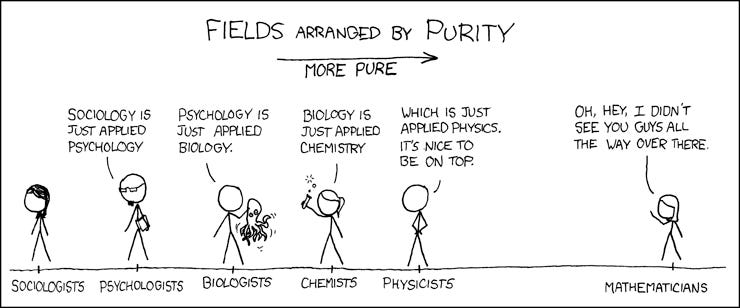

Singapore announcement

Separately, I’m in Singapore for the next two weeks; let me know if you want to meet in person!

There’s a framework I want to discuss, and also add more rigour to. Hence, over the next few weeks⁴ I’ll be talking about some ideas to build up to it.

Frameworks on how the world works all have some number of assumptions, whether implied or stated explicitly. Assumptions can vary in terms of how rigorous they are, as shown in the xkcd comic below. The more rigorous they are, the more likely they are to hold for general situations rather than just specific ones. For example, if your framework requires a sociological assumption such as “humans tend to be pro-social”, that holds up less well than a math assumption such as “one human plus one human gives me two humans.”⁵

I believe that the more rigorous and “fundamental” a framework’s assumptions are, the more applicable it is, and the more likely it is to be accurate. This is debatable, since it’s not necessarily the case that a feature of one “lower level” system will apply to a “higher level” system⁶, but I’ll be proceeding with this assumption.

Put another way, I want to break down frameworks into the key assumptions required. And the closer those assumptions are to pure math and science laws, the more convinced I’ll be that the framework is accurate⁷.

Today I’ll do a brief overview of entropy, as well as the related concept of information entropy. It’s been a while since I’ve had to formally learn them, so do let me know if I make any errors⁸.

Entropy

This MIT video defines entropy as “a measure of the ways energy can be distributed in a system.” A layman definition might be something like “entropy measures disorder of the system.”⁹ The more possibilities there are, the higher the entropy. For example, flipping 100 coins has far more possible outcomes than flipping just 1 coin. Similarly, an object at a higher temperature has more possible states than the same object at a lower temperature.

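To make the coin example a little more concrete, here is a minimal Python sketch. It assumes fair, two-sided coins and treats "number of possible outcomes" as a rough stand-in for entropy; the function names are hypothetical and not from any source cited here.

```python
import math


def count_outcomes(num_coins: int) -> int:
    """Number of distinct heads/tails sequences for num_coins two-sided coins."""
    return 2 ** num_coins


def log2_outcomes(num_coins: int) -> float:
    """Base-2 log of the outcome count: a simple 'how many possibilities' score."""
    return math.log2(count_outcomes(num_coins))


def ways_to_get_heads(num_coins: int, num_heads: int) -> int:
    """Number of sequences that contain exactly num_heads heads."""
    return math.comb(num_coins, num_heads)


if __name__ == "__main__":
    for n in (1, 10, 100):
        print(n, "coins:", count_outcomes(n), "outcomes, log2 =", log2_outcomes(n))

    # A "mixed" result can happen in far more ways than an "ordered" one.
    print("all heads (100 coins):", ways_to_get_heads(100, 100), "way")
    print("50 heads  (100 coins):", ways_to_get_heads(100, 50), "ways")
```

The last two lines hint at why "disorder" is the layman shorthand: there is only one way to get all heads, but an enormous number of ways to get an even mix.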
We needed this new term for the Second Law of Thermodynamics, which states that “the state of entropy of the entire universe, as an isolated system, will always increase over time.” The layman interpretation is that the disorder of a system will increase over time. This explains why “heat” flows from something hot to something cold, why your earphone wires always seem to get tangled, and why some people believe the universe will eventually end.

Note that this is a fundamental physics law, and not something up for debate. If you’ve found something that you believe violates the second law, it’s you who is wrong, not the universe.

I’m going to make a small logical leap now, and claim that the second law implies that things staying constant are “unnatural.” If we want something to stay the same, we need to exert effort to keep it that way¹⁰. Change is a fundamental physics law that we cannot escape from. I’ll come back to this in a few weeks.

Information entropy

A related concept to the entropy of physics is the entropy of information; they use the same equations¹¹. In the 1940s, the brilliant Claude Shannon¹² was working on how to communicate messages effectively, culminating in his landmark paper creating the field of information theory. Information theory deals with how information can be stored and transmitted, and is the foundation of most of our digital interaction today.

Entropy in the context of information theory is “the average amount of information conveyed by an event, when considering all possible outcomes.” This is more confusing than before, so let’s think back to our coin flips. Suppose we knew the coin was rigged and actually had heads on both sides. Even after it is flipped, we don’t get any new information, since we know beforehand what the outcome is going to be. In this case, we get low (no) information from the flip. See this video for a longer explanation¹³.

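The rigged-coin example can be checked with a short Python sketch of the standard Shannon entropy formula, H = sum of p * log2(1/p) over all outcomes, measured in bits. The function name and the example probabilities are illustrative assumptions, not taken from the sources mentioned above.

```python
import math


def shannon_entropy(probabilities):
    """Average information per event, in bits, for a discrete distribution.

    Outcomes with probability 0 contribute nothing, by the usual convention.
    """
    if not math.isclose(sum(probabilities), 1.0):
        raise ValueError("probabilities must sum to 1")
    return sum(p * math.log2(1 / p) for p in probabilities if p > 0)


if __name__ == "__main__":
    fair_coin = [0.5, 0.5]    # heads, tails
    two_headed = [1.0, 0.0]   # the rigged coin: always heads
    biased_coin = [0.9, 0.1]  # hypothetical: mostly heads

    print(shannon_entropy(fair_coin))    # 1.0 bit per flip
    print(shannon_entropy(two_headed))   # 0.0 bits: the flip tells us nothing
    print(shannon_entropy(biased_coin))  # somewhere between 0 and 1 bit
```

A fair coin gives the most information per flip because the outcome is least predictable; the more lopsided the coin, the less each flip tells you.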
A key result of information theory is what Shannon called the “fundamental theorem”¹⁴: there is a speed limit on information transmission. As summarised by The Dream Machine, this means that if you keep under the limit, you will be able to send signals with perfect reliability, at no information loss. Conversely, if you go faster than the speed limit, you will lose information, and there is no way around this. Again, I’ll come back to this in a few weeks.

1 Unlike what some people imagine, I do have a full time job. Also, best to start small and see what works this first time round. I thought about doing an in-person Manhattan one and then a virtual only one, but that’s also too much work for now.

2 Yep, there’s still time to sign up, since I did say I’d keep the link open till end of May.

3 So the first book will be announced early June, and the group will meet end of June.

4 Or months, we’ll have to see. I’m also still working on part 2 of the NFT article, so that will come somewhere in between.

5 Some smartypants might object that there are fundamental limits to logic, which is true; we’ll be getting to that as well.

6 One example is how quantum phenomena do not generalise to things on a larger scale.

7 The tradeoff here is that something extremely rigorous might not be useful. If you want to predict an economic trend, and all you allow yourself to assume is basic math functions, you’re not likely to be able to do anything.

8 As always, please do let me know if I’m wrong.

9 Some sources I’ve seen state that this isn’t precise but don’t say why, so I’m leaving it for now.

10 Note that this still increases the entropy of the larger system, which includes yourself.

11 As Mitchell Waldrop notes in The Dream Machine, this was one of the reasons why von Neumann told Claude Shannon to name the field information entropy.

12 Shannon also connected boolean algebra with logic gates in the 1930s, which was the foundation for how computing is done.

13 Information in this context measures how much you actually learn from a communication. If you learn nothing, there’s no information. If you learn a lot, there’s a lot of information.

