Platformer - Biden seeks to rein in AI

Here’s this week’s free column: a report from my trip to Washington, DC, where I met with Biden administration officials to discuss the president’s new executive order on AI. Also in this edition: our first ad, which I discussed in our third-anniversary post last month. Showing a single ad underneath the column in the free weekly edition of Platformer will bring us closer to our goal of hiring a new reporter in 2024 and help us monetize the roughly 94 percent of you who don’t pay anything to read the newsletter. You can read our advertising policy here. Do you value independent reporting on the Biden administration’s efforts to regulate AI? If so, your support would mean a lot to us. Upgrade your subscription today and we’ll email you first with all our scoops, like our recent interview with a former Twitter employee whom Elon Musk fired over a tweet.

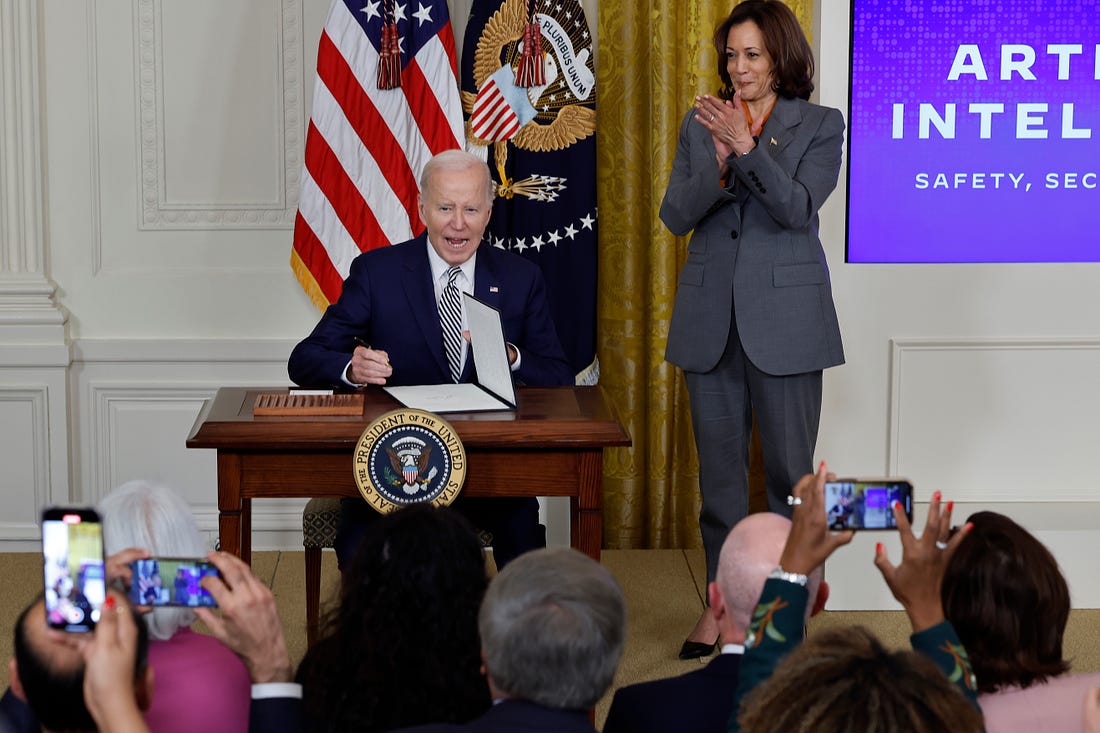

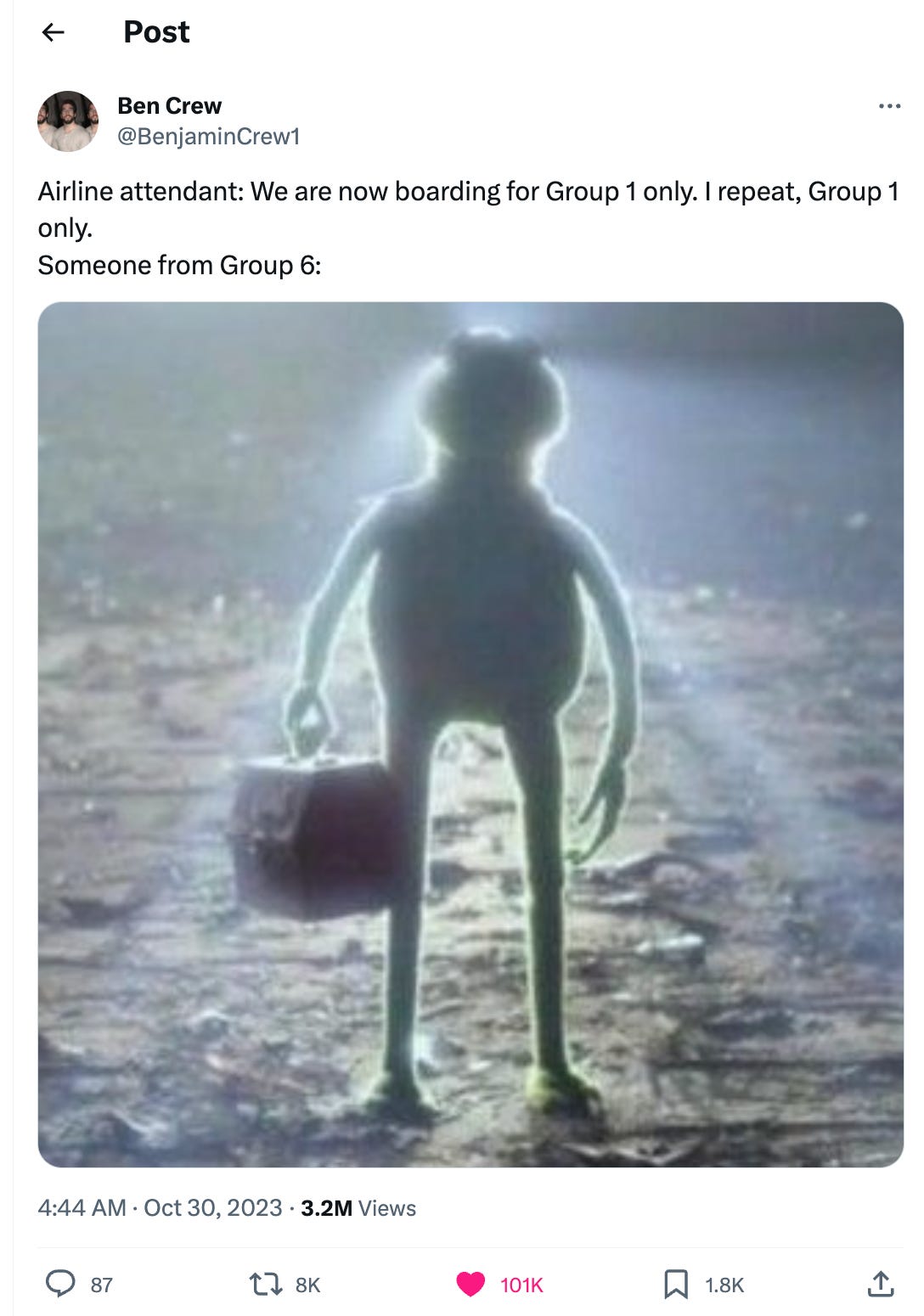

Biden seeks to rein in AI

An executive order gives AI companies the guardrails they asked for. Will the US go further?

Vice President Kamala Harris looks on as President Joe Biden signs a new executive order guiding his administration's approach to artificial intelligence during an event in the East Room of the White House on Monday. (Chip Somodevilla / Getty Images)

I.

WASHINGTON, DC — Moments before signing a sweeping executive order on artificial intelligence on Monday at the White House, President Biden deviated from his prepared remarks to talk about his own experience of being deepfaked. Reflecting on the times he has seen synthetic media simulating his voice and image, he said he had sometimes been fooled by the hoax. “When the hell did I say that?” he said, to laughter.

The text of the remarks stated that even a three-second recording of a person’s voice could fool their family. Biden amended it to say that such a recording could fool the victim, too. “I swear to God — take a look at it,” he said, as the crowd laughed again. “It’s mind-blowing.”

The crowd’s laughter reflected both the seeming improbability of 80-year-old Biden browsing synthetic media of himself and the nervous anticipation that more and more of us will find ourselves the subject of fakes over time. The AI moment had fully arrived in Washington. And as I listened from the audience in the East Room, Biden offered a plan to address it.

Over the course of more than 100 pages, Biden’s executive order on AI lays the groundwork for how the federal government will attempt to regulate the field as more powerful and potentially dangerous models arrive. It makes an effort to address present-day harms like algorithmic discrimination while also planning for worst-case future AI tools, such as those that would aid in the creation of novel bioweapons.

As an executive order, it doesn’t carry quite the force that a comprehensive package of new legislation on the subject would have. The next president can simply reverse it, if they like. At the same time, Biden has invoked the Defense Production Act, meaning that so long as the order is in effect, it carries the force of law, and companies that shirk their new responsibilities could find themselves in legal jeopardy.

Biden’s announcement came ahead of a global AI summit in the United Kingdom on Wednesday and Thursday.
The timing reflects a desire on the part of US officials to be seen as leading AI safety initiatives at the same time US tech companies lead the world in AI development. The executive order “is the most significant action any government anywhere in the world has ever taken on AI safety, security, and trust,” Biden said. For once, the president's remarks implied, the United States would compete with its allies in regulation as well as in innovation.

II.

So what does the order do?

The first and arguably most important thing it does is impose new safety requirements on models based on the computing power used to train them. Models trained using more than 10 to the 26th power floating-point operations, or flops, are subject to the rules. That’s beyond the computing power required to train the current frontier models, including GPT-4, but should pertain to the next-generation models from OpenAI, Google, Anthropic, and others.

Companies whose models fall under this rubric must perform safety tests on their models and share the results of those tests with the government before releasing the models to the public. This mandate, which builds on voluntary commitments that 15 big tech companies have signed on to over the past few months, creates baseline transparency and safety requirements that should limit the ability of a rogue (and extremely rich) company to build an extremely powerful model in secret. And it sets a norm that as models grow more powerful over time, the companies that build them will have to be in dialogue with the government about their potential for abuse.

Second, the order identifies some obvious potential harms and instructs the federal government to get ahead of them. Responding to the deepfake issue, for example, the order puts the Commerce Department in charge of developing standards for digital watermarks and other means to establish content authenticity.
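The order's compute trigger, more than 10 to the 26th power operations, is easier to picture with a back-of-the-envelope estimate. A minimal sketch follows, assuming the common rule of thumb that training a dense transformer takes roughly 6 × parameters × training tokens floating-point operations. That heuristic, the function names, and the model sizes are illustrative assumptions, not anything specified in the executive order or disclosed by any company.

```python
# Rough check against the executive order's 10^26-operation threshold.
# ASSUMPTION: training compute ~= 6 * parameters * tokens, a common rule of
# thumb for dense transformers. The order itself counts operations actually used.

THRESHOLD_FLOPS = 1e26  # threshold cited in the executive order


def estimated_training_flops(parameters: float, tokens: float) -> float:
    """Approximate total training operations with the 6*N*D heuristic."""
    return 6 * parameters * tokens


def exceeds_threshold(parameters: float, tokens: float) -> bool:
    """True if the estimated training compute is above 10^26 operations."""
    return estimated_training_flops(parameters, tokens) > THRESHOLD_FLOPS


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only to illustrate the arithmetic.
    examples = {
        "70B params, 2T tokens": (70e9, 2e12),
        "1T params, 20T tokens": (1e12, 20e12),
    }
    for name, (n, d) in examples.items():
        flops = estimated_training_flops(n, d)
        print(f"{name}: {flops:.1e} flops, covered={exceeds_threshold(n, d)}")
```

Under this assumption, a hypothetical 70-billion-parameter model trained on 2 trillion tokens lands around 8.4 × 10^23 operations, more than 100 times below the line, while a hypothetical trillion-parameter model trained on 20 trillion tokens would cross it.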
The order also forces makers of advanced models to screen them for their ability to aid in the development of bioweapons, and orders agencies to assess the risks AI poses related to chemical, biological, radiological, and nuclear weapons.

And third, the order acknowledges various ways AI could improve government services: building AI tools to defend against cyberattacks, developing cheaper life-saving drugs, and exploring the potential for personalized AI tutors in education. Ben Buchanan, special adviser for AI at the White House, told me that AI’s potential for good is reflected in technologies like AlphaFold, the system developed by Google DeepMind to predict a protein's three-dimensional structure from its amino acid sequence. The technology is now used to discover new drugs. More advances are on the near horizon, he said.

“I look at something like micro-climate forecasting for weather prediction, reducing the waste in the electricity grid, or accelerating renewable energy development,” said Buchanan, who is on leave from Georgetown University, where he teaches about cybersecurity and AI. “There’s a lot of potential here, and we want to unlock that as much as we can.”

III.

For all that the executive order touches, there are still some subjects that it avoids. For one, it does almost nothing to require new transparency in AI development. “There is a glaring absence of transparency requirements in the EO — whether pre-training data, fine-tuning data, labor involved in annotation, model evaluation, usage, or downstream impacts,” note Arvind Narayanan, Sayash Kapoor, and Rishi Bommasani at AI Snake Oil, in their excellent review of the order.
For another, it sidesteps questions around open-source AI development, which some critics worry could lead to more misuse.

Whether it is preferable to build open-source models, as Meta and Stability AI are doing, or closed ones, as OpenAI and Google are doing, has become one of the most contentious issues in AI. Andrew Ng, who co-founded Google’s deep learning research division in 2011 and now teaches at Stanford, raised eyebrows this week when he accused closed companies like OpenAI (and presumably Google) of pursuing gatekeeping industry regulation in the cynical hope of eliminating their open-source competitors.

“There are definitely large tech companies that would rather not have to try to compete with open source [AI], so they’re creating fear of AI leading to human extinction,” Ng told the Australian Financial Review. “It’s been a weapon for lobbyists to argue for legislation that would be very damaging to the open-source community.”

Ng’s sentiments were echoed over the weekend in X posts from Yann LeCun, Meta’s pugnacious chief scientist and an outspoken open-source advocate. LeCun accused OpenAI, Anthropic, and DeepMind of “attempting to perform a regulatory capture of the AI industry.”

“If your fear-mongering campaigns succeed, they will *inevitably* result in what you and I would identify as a catastrophe: a small number of companies will control AI,” LeCun wrote, addressing a critic on X. “The vast majority of our academic colleagues are massively in favor of open AI R&D. Very few believe in the doomsday scenarios you have promoted.”

DeepMind chief Demis Hassabis responded on Tuesday by politely disagreeing with LeCun and saying that at least some regulations were needed today. “I don’t think we want to be doing this on the eve of some of these dangerous things happening,” Hassabis told CNBC. “I think we want to get ahead of that.”

At the White House on Monday, I met with Arati Prabhakar, director of the Office of Science and Technology Policy.
I asked her whether the federal government had taken a view yet on the open-versus-closed debate.

“We’re definitely watching to see what happens,” Prabhakar told me. “What is clear to all of us is how powerful open-source is — what an engine of innovation and business it has been. And at the same time, if a bedrock issue here is that these technologies have to be safe and responsible, we know that that is hard for any AI model because they're just so richly complex to begin with. And we know that the ability of this technology to spread through open source is phenomenal.”

Prabhakar said that views of open-source AI can vary depending on your job. “If I were still in venture capital, I'd say the technology is democratizing,” said Prabhakar, whose long history in tech and government includes five years running the Defense Advanced Research Projects Agency (DARPA). “If I were still at the Defense Department, I would say it's proliferating. I mean, this is just the story of AI over and over — the light side and dark side. … And the open source issue is one that we definitely continue to work on and hear from people in the community about, and sort of figure out the path ahead.”

IV.

I left Washington this morning with two main conclusions.

One is that AI is being regulated at the right time. The government has learned its lesson from the social media era, when its near-total inattention to hyper-scaling global platforms left it blindsided by the inevitable harms once they arrived.

AI industry players deserve at least some credit for this. Unlike some of their predecessors, executives like OpenAI’s Sam Altman and Anthropic’s Dario Amodei pushed early and loudly for regulation. They wanted to avoid the position of social media executives sitting before Congress, asking for forgiveness rather than permission.
And to Biden’s credit, the administration took them seriously, and developed enough expertise at the federal level that it could write a wide-ranging but nuanced executive order that should mitigate at least some harms while still leaving room for exploration and entrepreneurship. In a country that still hasn’t managed to regulate social platforms after eight years of debate, that counts for something.

That leads me to my second conclusion: for better and for worse, the AI industry is being regulated largely on its own terms. The executive order creates more paperwork for AI developers, but it doesn’t really create any hurdles. For a set of regulations, it comes across as intending neither to accelerate nor to decelerate. It allows the industry to keep building more or less as it already is, while letting the White House call it (in Biden’s words) “the most significant action any government anywhere in the world has ever taken on AI safety, security, and trust.”

If that is true, I suspect it won't be for long. The European Union’s AI Act, which among other things calls for ongoing monitoring of large language models, could take effect next year. When it does, AI developers may find that the United States is once again their most permissive market.

Still, what White House officials told me this week feels true: that the potential to do good here appears roughly equal to the potential to do harm. AI companies now have the opportunity to demonstrate that the trust the administration has granted them is well placed. And if they don’t, Biden’s order may ensure that they feel the consequences.

Sponsored

Give your startup an advantage with Mercury Raise. Mercury lays the groundwork to make your startup ambitions real with banking* and credit cards designed for your journey. But we don’t stop there. Mercury goes beyond banking to give startups the resources, network, and knowledge needed to succeed.
Mercury Raise is a comprehensive founder success platform built to remove roadblocks that often slow startups down. Eager to fundraise? Get personalized intros to active investors. Craving the company and knowledge of fellow founders? Join a community to exchange advice and support. Struggling to take your company to the next stage? Tune in to unfiltered discussions with industry experts for tactical insights. With Mercury Raise, you have one platform to fundraise, network, and get answers, so you never have to go it alone. *Mercury is a financial technology company, not a bank. Banking services provided by Choice Financial Group and Evolve Bank & Trust®; Members FDIC. Platformer has been a Mercury customer since 2020. This sponsorship gets us 5% closer to our goal of hiring a reporter in 2024.

Those good posts

For more good posts every day, follow Casey’s Instagram stories.

Talk to us

Send us tips, comments, questions, and AI regulations: casey@platformer.news and zoe@platformer.news.

Sponsor Platformer

By design, the vast majority of Platformer readers never pay anything for the journalism it provides. But you made it all the way to the end of this week’s edition, maybe not for the first time. Want to support more journalism like what you read today?
