Not Boring by Packy McCormick - The Goldilocks Zone

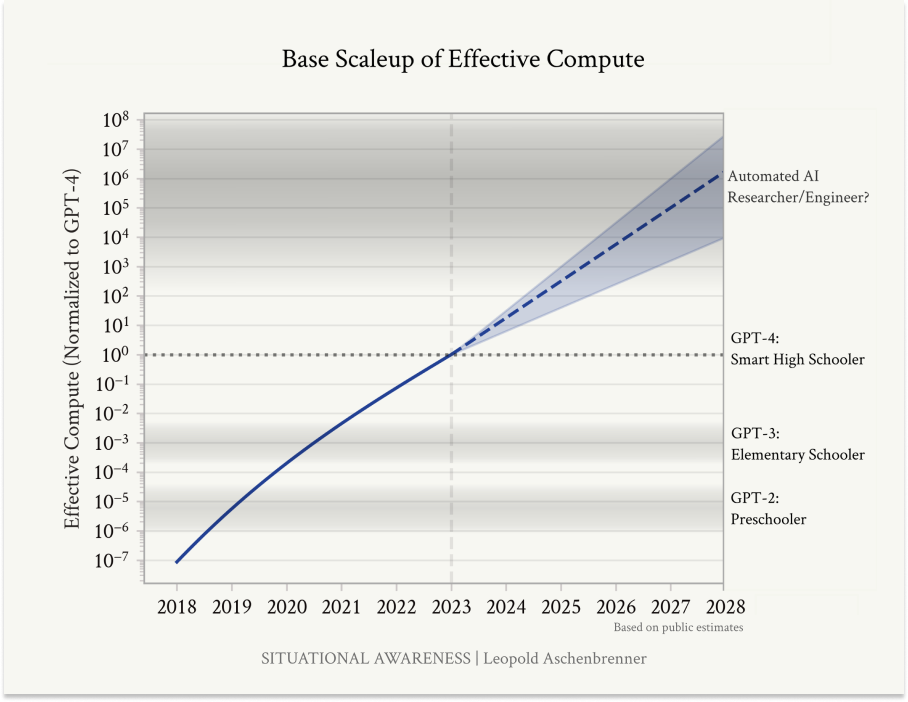

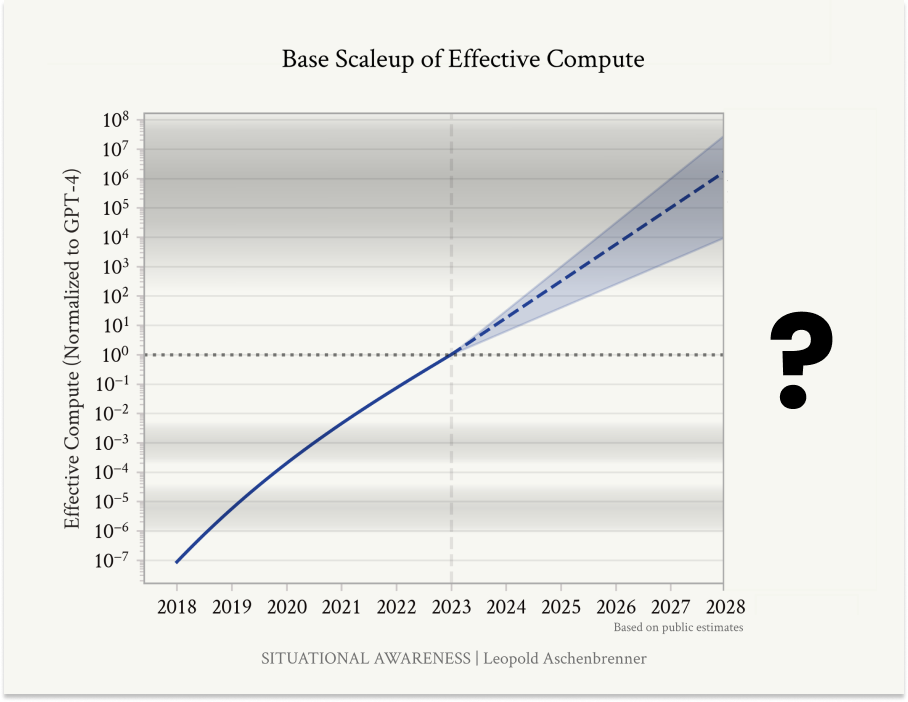

Welcome to the 919 newly Not Boring people who have joined us since our last essay! If you haven’t subscribed, join 227,597 smart, curious folks by subscribing.

Today’s Not Boring is brought to you by… Tegus

If the name Tegus sounds familiar, it might be because you’ve been reading Not Boring and know that it’s my favorite way to get up to speed on a new industry from the insider perspective. It might also be because you read that, just last week, AlphaSense acquired the company for $930 million. I told you their insights were valuable!

Tegus is full of transcripts of conversations with insiders and executives in any industry you can think of. It boasts 75% of the world’s private market transcripts. For example, if you want the inside view on what’s happening in AI, without the hype, you can read expert perspectives on companies like OpenAI, Anthropic, and Mistral, plus a long tail of transcripts covering every topic you can imagine. With Tegus, you gain access to the pulse of the private markets – with perspectives and detailed financials you won’t find anywhere else.

There’s a reason the world’s best private market investors use Tegus, and as a smart, curious Not Boring reader, you can get access to the Tegus platform today.

Hi friends 👋,

Happy Tuesday and welcome back to Not Boring! Happy belated Father’s Day to all the Not Boring Dads out there.

Over the past couple of weeks, I’ve been thinking a lot about Leopold Aschenbrenner’s Situational Awareness, in which he argues that just continuing to scale effective compute at the same pace AI labs have been scaling it will lead to AGI by 2027 and ASI soon thereafter. A lot of people have taken the paper very seriously and many others have been quick to dismiss it, but I think there’s a very optimistic middle path that also happens to be the most likely. This essay is about that path.

Let’s get to it.

The Goldilocks Zone

Humans are astronomically lucky.
That our planet, Earth, exists in what astronomers call a Goldilocks Zone – just the right distance from the Sun to be habitable – is one of a million factors that needed to go just right for us to be here, monkeys on the precipice of creating thinking machines. We are so lucky that I’ve wondered whether something – god, a simulation creator, a loving universe, capitalism, take your pick – wants to see us win. As one of a billion examples of our fortune, I wrote that the timing of the energy transition is almost too perfect: without the climate crisis, we may have ended up in a much more devastating energy crisis.

There are those who believe our luck is about to run out. Billions of years of evolution, poof, once we create AI that is better than us in every way. At best, we will be economically useless passengers.

I don’t think that’s right. Humans are unimaginably lucky until proven otherwise, and believing that we are about to get luckier than ever just requires believing in straight lines on a graph.

Look, I’m too dumb to grok all of the cutting-edge AI research papers, and I’m not deep in the weeds of the AI conversation. This is just my left-curve, outsider, common-sense take, based on good ol’ fashioned human intuition, and much smarter people than me may disagree.

I’m going to make the case that the data points to an AI Goldilocks Zone: assistants that continue to get more and more capable the more data and compute we feed them, and that have absolutely no desire or ability to overtake us.

AI is more like Oil than God. It’s an economically useful commodity that can be scaled and refined to act as a multiplier on everything we do. Gasoline didn’t replace the horse and buggy; it let buggies carry humans further, faster.

If AI is God, governments should lock it down. But if it’s Oil, they should do everything they can to promote its development and unleash their countries’ entrepreneurial vigor on refining it into more and more useful products.
If AI is God, you may as well just give up. But if it’s Oil, you can celebrate each advance as another tool at your disposal, and plan for the very real disruptions and opportunities those advances will inevitably create.

Believing AI will become God is nihilistic. Believing it is Oil is optimistic.

This whole rabbit hole started a couple of weeks ago with another Goldilocks Zone of sorts. I had the rare long drive with the kids asleep in the backseat and without Puja in the front. If she’d been there, she never would have let me listen to four hours of Dwarkesh Patel and Leopold Aschenbrenner, understandably.

Leopold is a young genius (he graduated top of his Columbia class at 19) who was recently let go from the now-defunct OpenAI Superalignment team. He just released a paper, Situational Awareness: The Decade Ahead, in which he argues that on our current trajectory, we are likely to have AGI in 2027 and Superintelligence (ASI) not long after. “That doesn’t require believing in sci-fi,” he tweeted, “it just requires believing in straight lines on a graph.”

In the paper, and on the podcast, Leopold makes the case that between more compute, more efficient algorithms, and “unhobblings,” we will scale “effective compute” by 3-6 orders of magnitude (“OOMs,” each a 10x increase) in the next three years, a leap as big as the one from GPT-2 to GPT-4. If that leap took the model from a Preschooler to a Smart High Schooler, might this next one carry it from Smart High Schooler to a PhD in any field? Wouldn’t that be AGI?

Listening, and then reading the paper, I felt a cognitive dissonance: I agreed with Leopold’s logic, but the conclusion felt off. After thinking about it a bunch, I think I figured out why. Where I agree with Leopold is that we should trust the curve. Where I disagree is that GPT-4 is a Smart High Schooler.

So this essay will do three things:

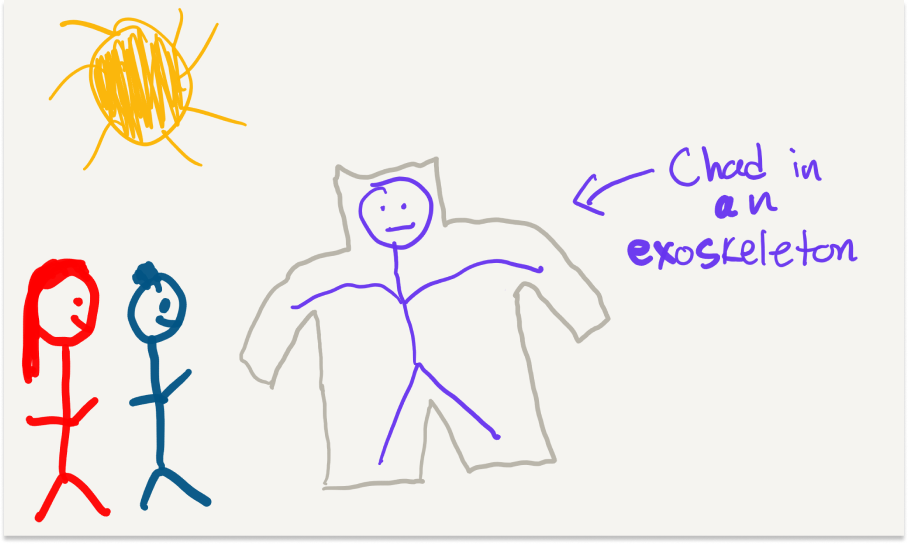

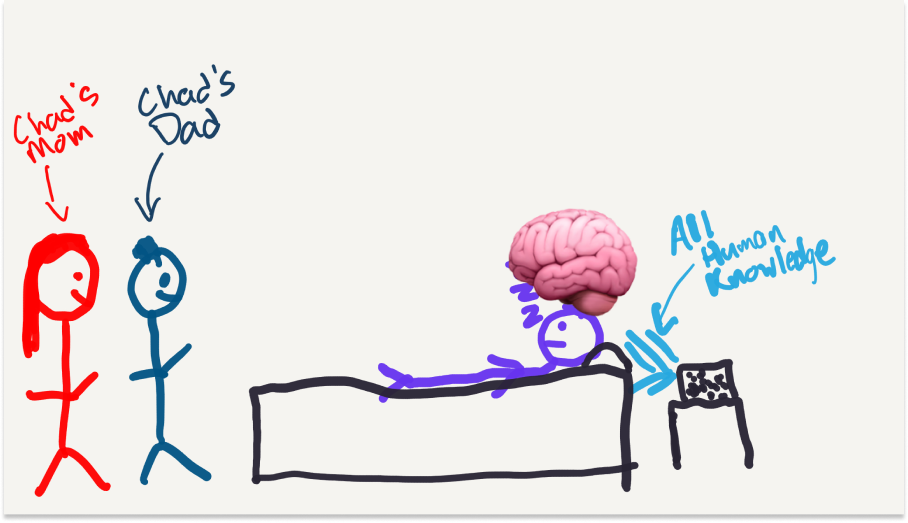

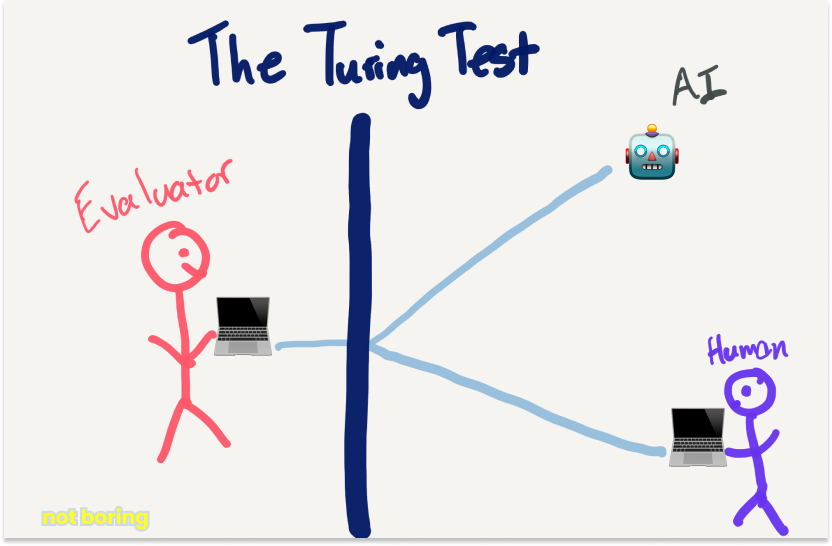

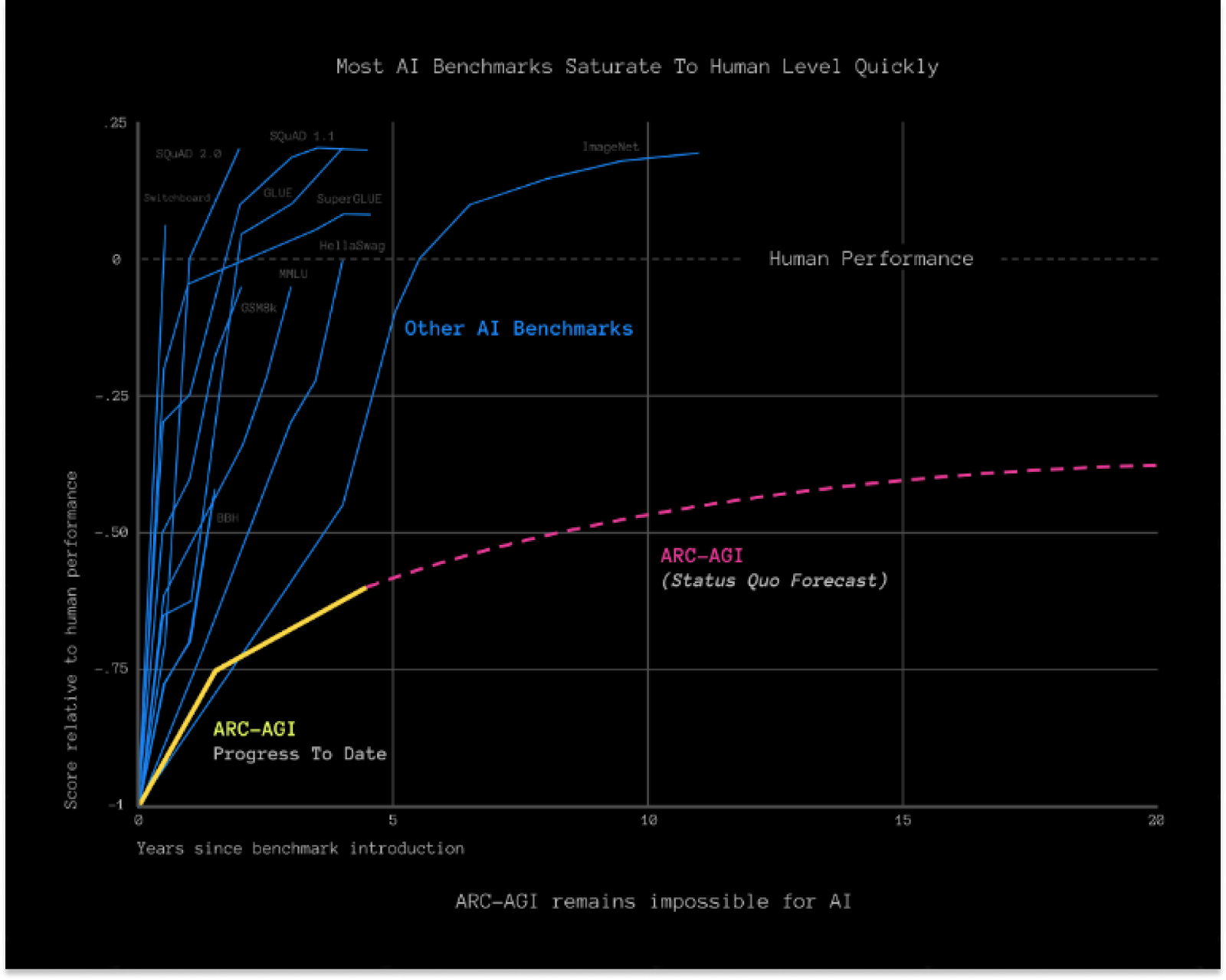

GPT-4 isn’t the same thing as a Smart High Schooler, but if we’re going to anthropomorphize, let’s anthropomorphize.

Meet Chad

Imagine a Smart High Schooler. Let’s call him Chad.

Chad is absolutely listless. No motivation, no goals, no drive. Most of the time, he lies in bed asleep while his parents blast all of human knowledge on little speakers next to his bed at super high speed. Somehow, Chad absorbs and memorizes all of it.

Occasionally, Chad’s parents wake him up and ask him questions. When he was younger, he’d ramble pretty incoherently, but you could tell he was kind of close. Now that he’s older, and he’s absorbed so much more information, he can go on and on, and he usually gets things right no matter what his parents ask him.

If you were Chad’s parents, you’d be like, “Ho-leeee shit, it’s actually working.” You’d get your hands on all of the audio knowledge you could to feed through that speaker. You’d play it at different volumes. You’d weed out all the bad information. You’d feed him superfoods that the doctors suggest might help make his brain even bigger. You’d pay smart people to record detailed instructions about how they think through really hard problems, so that he learns the how and not just the what. You’d hold his eyes open while you beamed all of YouTube at him. You might even buy Chad an exoskeleton he can wear to get him out of that bed and out into the real world, where he can do physical things and absorb even more information about how the world works.

And it all kind of works! Chad keeps learning more and more. He can move heavy boxes in his exoskeleton, even hand you an apple when you ask him for something healthy. When the neighbors ask him questions, he gets them right, even when they’re really hard questions, like “Can you help us with this protein folding problem?” one day, and “Can you please write me a complex legal agreement for this M&A deal I’m working on?” the next.
Chad is an extremely Smart High Schooler, and you are very proud.

But deep down, you’re a little worried. Because no matter what you do, no matter how much or what kind of information you play him, no matter how nice an exoskeleton you buy, Chad doesn’t seem to care. He doesn’t seem to want anything. Your parental intuition tells you that Chad is very different from the other High Schoolers.

Heck, in some ways, he’s very different from the Preschoolers. The Preschoolers are idiots, relatively speaking. They can hardly form a sentence. They can’t do basic multiplication. But they know what they want. They wake up in the morning and wake their parents up to play. They go to school and play with the other kids; they even invent their own games and languages without anyone telling them to. They get sad when someone is mean to them, and overjoyed when someone gives them an ice cream cone. They have agency and goals, even if they’re little goals: get ice cream, get a new toy, stay up past bedtime, whatever.

That agency and those goals scale as the kids get older. The High Schoolers who would be Chad’s classmates if he could get out of bed and go to school are wild. On the weekend, they sneak out, steal their parents’ cars, and go to parties, where they try to kiss other wild High Schoolers. During the week, they’re starting to figure out what they want to do with their lives – where they want to go to college, what fascinates them, what kind of impact they want to have on the world. They’re nowhere near as smart as Chad, but they have a vivacity you’ve never once seen in your very smart boy.

If Chad is ever really going to make something of himself, you’re going to need to figure out how to get him to be more like those other kids: to have his own goals and desires, even just to get out of bed of his own volition in the morning and start doing things without anyone having to ask.
But no matter what you try, you can’t, so you focus on what you can do: making Chad really fucking smart. Maybe, you hope, if he gets smart enough, at some point he’ll see all the other kids wanting things and doing things and start to want and do things himself.

In the meantime, though, your neighbors start to see how smart Chad is getting, and they start to ask him for help with all sorts of things. “Hey Chad, can you help me write this marketing copy?” asks neighbor Jill, and Chad does it. “Chad, my boy, how about helping me develop some drugs?” asks neighbor Jim, and he does.

The neighbors are so happy with Chad’s help that they start paying you a lot of money, and, loving your boy, you spend that money on more, and more sophisticated, education, in the hope that at some point he might start acting more like the normal kids, and in the knowledge that, either way, the smarter he gets, the more your neighbors will pay him.

And it works, kind of: Chad keeps getting smarter and smarter. He’s smarter than every college student, then every PhD, when you ask him to be. He can do more and more things. Eventually, practically any time a neighbor asks him to do something, mental or physical, he can do it, as long as someone, somewhere in everything he’s listened to, has done something like it before.

This is fantastic news for your town. It becomes the richest town in the world, the richest town in history, as your neighbors use Chad to do more and more incredible things. The only thing limiting your town’s growth is the creativity of the questions your neighbors ask Chad to help with.

But poor Chad, he just stays in bed, listless, waiting to be woken up to help out with the next request, not caring whether anyone wakes him up ever again.

Is Chad a Real Boy?

There are a bunch of ways people have come up with to determine whether an AI is AGI.
The most famous is the Turing Test: an evaluator chats with both a human and an AI through a computer, and if the evaluator can’t tell which is which, the AI has demonstrated human-like intelligence. A modern fine-tuned LLM could easily pass the Turing Test, but it doesn’t pass the Smell Test. The Smell Test is something like: do I really think that thing across from me is actually a human?

Call it AGI (Artificial General Intelligence), an AI that can match human general intelligence across all intellectual tasks, or ASI (Artificial Superintelligence), an AI that exceeds human intelligence and is capable of self-improvement. Either way, the implication, and the fear, is something so powerful that humans are no longer needed for the vast, vast majority of things we do today.

So people keep moving the goalposts, testing LLMs against the SATs, International Math Olympiads, and more. On another Dwarkesh episode, the most recent, Google AI researcher Francois Chollet argued that LLMs are not the path to AGI, because they memorize but don’t reason. He proposed a test – the ARC-AGI Benchmark – that gives models simple problems unlike anything they’ve seen before as the real test of AGI.

But whether Chad can ace the SATs or solve ARC, I still don’t think Chad passes the Smell Test. You can just picture the townsfolk whispering behind his back, “There’s something not right with that kid.” He’s smart, but they can smell it.

(Note: I wrote that sentence before 2:12 PM yesterday, when Ryan Greenblatt used LLMs to get to 71% on ARC-AGI. I maintain that solving any particular test isn’t the same as passing the Smell Test.)

There’s a lot to love about the argument Leopold makes in Situational Awareness. There’s a beautiful simplicity in trusting the curve. I love trusting the curve. I wrote a whole essay on the beauty of exponentials in tech. In the battle of a long-arcing curve versus human analysis, I take the curve.

But as the old saying goes: trust (the curve), but verify (the mappings).
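Trusting the curve is, at bottom, arithmetic on orders of magnitude. The short Python sketch below is an illustration, not Leopold’s forecasting method: it converts between OOMs and growth multipliers. Since each OOM is a 10x increase, 3-6 OOMs over roughly three years works out to 1,000x to 1,000,000x more effective compute, or about 10x to 100x per year if the growth compounds evenly. The even-compounding assumption and the function names are mine. What the arithmetic can’t tell you is the mapping: which capability a given amount of effective compute buys.

```python
import math


def ooms(multiplier: float) -> float:
    """Orders of magnitude in a growth multiplier (each OOM is a 10x increase)."""
    return math.log10(multiplier)


def multiplier(n_ooms: float) -> float:
    """Total growth multiplier implied by n_ooms orders of magnitude."""
    return 10 ** n_ooms


def annual_factor(n_ooms: float, years: float) -> float:
    """Per-year growth factor, assuming (hypothetically) that the OOMs
    compound evenly over `years`."""
    return 10 ** (n_ooms / years)


if __name__ == "__main__":
    # The range from the essay: 3-6 OOMs of effective compute in ~3 years.
    for n in (3, 6):
        print(f"{n} OOMs = {multiplier(n):,.0f}x total, "
              f"~{annual_factor(n, 3):,.0f}x per year")
    # The curve stops here: mapping these numbers to a capability level
    # (Preschooler, Smart High Schooler, PhD) is a separate assumption.
```

Run as-is, it prints the total and per-year multipliers for both ends of the range. The labels “Smart High Schooler” and “PhD” appear only in a comment. Attaching them to a point on the curve is the mapping step, and that is the part that needs verifying.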
Trust but Verify

There’s this great post that William Hertling wrote on Brad Feld’s blog in 2012, How to Predict the Future. It’s all about trusting the curve, specifically, about trusting hardware curves.

In 1996, Hertling plotted modem speeds to predict that streaming video would be possible in 2005. Nine years later, in February 2005, YouTube was born. He predicted that music streaming would come in 1999 or 2000. Napster arrived in 1999.

In that 2012 post, he tracked millions of instructions per second (MIPS) over time to predict that:

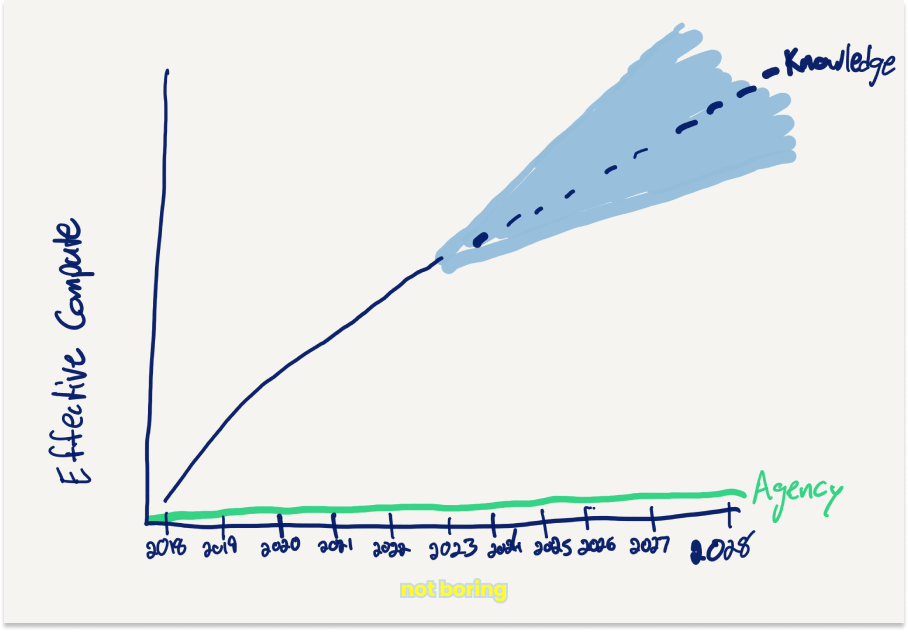

And, depending on which estimate of the complexity of human intelligence you select, he pretty much nailed it. Whether or not AI is intelligent, GPT-4 (2023) does a pretty damn good job of simulating it.

Hertling described the three simple steps he uses to predict the future (emphasis mine):

Step 1: Calculate the annual increase

Step 2: Forecast the linear trend

Step 3: Mapping non-linear events to linear trend

In other words, plot the annual increases that have happened so far, extrapolate those increases out into the future, and then predict what new capabilities become possible at various points along the way.

That’s exactly what Leopold did, and I think he nails the first two. Thinking about AI progress in terms of OOMs of effective compute is a really useful contribution, and forecasting OOMs lets you predict a lot of useful things. While things like $100 billion, even $1 trillion, clusters sound crazy, they’re decidedly on the curve. Who am I to disagree? I buy his argument that large companies will make enough money from AI to justify such large investments, and while challenges like getting enough data and power to keep the curves going are real, capitalism finds a way. As Hertling wrote, “Limits are overcome, worked around, or solved by switching technology.”

We’re going to have increasingly powerful AI at our fingertips, and it’s worth preparing for that future. There will be great things, terrible things, and things that just need to be figured out.

That said, I disagree with his mapping. In some ways, GPT-4 is much smarter than a Smart High Schooler, just like Chad is. In other, important ways, GPT-4 isn’t even on the same curve as a Preschooler, or even a baby. LLMs are listless. They don’t have agency. They don’t have drive. Continuing to scale knowledge won’t magically scale agency. We’re not even on the agency curve. It’s a different curve.

Look, I’m using knowledge and agency a little loosely.
LLMs can do more than just regurgitate knowledge; even if you grant them reasoning, though, it still doesn’t feel like humanity emerges from LLM scale-up. They have no curiosity, and don’t ask their own questions.

Seven years ago, Kevin Kelly wrote, “Intelligence is not a single dimension, so ‘smarter than humans’ is a meaningless concept.” It bears repeating. GPT-4 is a Smart High Schooler on one dimension, and a bottom-percentile High Schooler on others. It’s dangerous to assume that strengthening the strong dimension will strengthen the others. No A+ physics student ever became the star quarterback by becoming an A++ physics student.

Kelly even anticipated the Leopold chart, writing:

That’s not to say the Smell Test version of AGI and ASI will never happen – I’m sure it will at some point, on some path – just that it seems like we’ll need new paths to get there, and that those may be much further away. Put another way, you can believe that solar is going to continue to get much cheaper and more abundant without believing that solar panels will start producing fusion reactions themselves.

This might sound like an overly pessimistic take coming from me, but it’s one of the most optimistic things I’ve ever written. If I’m right, we’re going to end up in the Goldilocks Zone once again.

The Goldilocks Zone

There are two extreme paths ahead for AI:

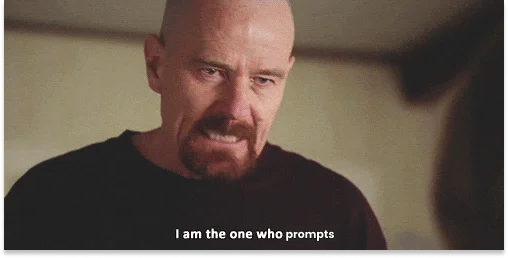

But there is a third way, the Goldilocks Zone: we get increasingly smart and capable helpers without eliminating the need for humans, or birthing beings that have any desire to eliminate humans.

That is the implication of agreeing with Leopold’s curve but disagreeing with the mapping. It’s what you get when you believe in straight lines on a graph without believing in sci-fi.

In this view of the world, humans have a big role to play: we are the ones who prompt. We are the ones who ask the questions, who string together agents in ways that are socially and economically valuable, who have goals and desires, who ask Chad to help us with the problems that we choose to solve. We are the ones with vitality and drive, who use the bounty of new capabilities that increasingly capable AIs provide.

I’m not an AI researcher. I’m not in the lab. I don’t know what Ilya saw. But from the outside, I haven’t seen anything that makes me believe otherwise. GPT-4 is no more ambitious than GPT-2. My Preschoolers are still more ambitious than both.

Beyond that, we are the ones who deal with the new. There was one other very interesting point that Francois made in the Dwarkesh conversation that’s relevant here. He said:

Who knows if he’s right. I have no technical reason to believe Francois over Leopold. But that last point is very interesting: “You need intelligence the moment you have to deal with change, novelty, and uncertainty.” In my interpretation, that’s a deeply beautiful idea: even if we could get an AI to solve all of the problems that exist in the current moment, to answer all of the hard questions that we currently know to ask, there will always be new problems (and new opportunities), new questions to ask, and new areas of knowledge to pursue.

That’s not to say ever more powerful AI will be a perfect, harmless thing. The more powerful the tool, the more harm a bad guy can do with it. The only way to stop a bad guy with an AI isn’t an AI; it’s a good guy with an AI.

So I strongly agree with Leopold that we need to stay ahead of China. I strongly disagree that we need to nationalize AI to do so. That path presents the biggest risk. Not just that we would be trusting the government with the most powerful tool ever created, which it may well be, but that by taking it out of the hands of regular people and assuming that AI will become a God, we let our own abilities atrophy.

Because if Francois is right, there’s a path down which we assume that LLMs will always be smarter than us and will solve all of the really hard problems in ways we could never understand, and we stop pushing ourselves as if our species depended on it. It might work for a while, until LLMs reach the limits of what they can do with the information we’ve trained them on, and they’re stuck, and we’re no longer able to ask new questions or devise new theories.

Instead of deifying LLMs based on faith that superintelligence will emerge from enough knowledge, we should trust the curves. They say that LLMs are getting incredibly capable, incredibly fast. They don’t say the AI will suddenly self-direct.
We might be able to thread the needle, to build something that looks almost like a God but is willing to do whatever we ask it to do. Cure cancer. Build factories that build billions of robots. Accelerate progress towards Warp Drives and O’Neill Cylinders and Dyson Spheres.

And also: Help people whose jobs are displaced by AI find new work and meaning. Fight deepfakes. Make our smartest people smarter so we can continue to push into new frontiers. Solve old problems, and the new ones that AI will undoubtedly help create, always moving upwards.

What if you could hire armies of assistants who kept getting smarter and more capable, who multiplied your own effectiveness and expanded your ambition? Wouldn’t the perfect employee be one who is much smarter than you but, for some reason, has no desire to take your job?

Technology has always been a force multiplier for humanity. The simple, left-curve bet is that it will continue to be. That it will look less like Terminator, and more like Oil.

AI is the New Oil

In 2006, a British data scientist named Clive Humby coined the phrase, “Data is the new oil.” It has been drilled to death ever since. But while data is as valuable as it’s ever been, the real new oil may be AI itself.

I’ve been reading Daniel Yergin’s Pulitzer Prize-winning book, The Prize: The Epic Quest for Oil, Money, and Power, which is the best book on the history of oil ever written. Read it.

Since Drake’s 1859 discovery in Titusville, PA, oil has won and lost wars, made fortunes, incited conflict, increased the world’s GDP, and made things previously unimaginable entirely possible. It’s been the preeminent priority for countless government administrations, and the primary non-sentient contributor to our current standard of living.

But oil is a commodity. What’s made oil so valuable is the people who have discovered it, drilled it, refined it, distributed it, and figured out what to do with it.
I think the oil boom of the 1940s to 1970s may be the best comp for what’s coming. Private companies are investing tens of billions of dollars in discovery. Intense competition is causing prices to fall and models to commoditize. There is international competition between adversaries (US and USSR then, US and China now), and a race among even friendly companies to develop their own resources.

As Yacine pointed out on X, “These companies will become national assets of the countries they reside in. Openai's board getting spooky wasn't an accident. Canada has cohere. France has mistral. Japan has sakana.”

Just as countries need to control their own oil supply by any means necessary in order to compete and grow, countries will also need to control their access to frontier models. Not necessarily because ASI will make its host country omnipotent, but because frontier models will be key to a nation’s growth, progress, and yes, security.

Then, oil animated previously lifeless machines. The car, washing machine, airplane, and air conditioner roared to life when infused with black gold. But a car was no more likely to drive itself with more or better-refined oil than it was with an empty tank.

Now, AI imbues those machines with the ability to learn, act, and adapt. But a car is no more likely to plan a road trip for its own enjoyment with bigger or better-trained models than it was without them. Oil and AI both allow us to get more out of machines when we ask them to do something for us.

So if AI is the new oil, what might we expect the coming decade to look like? As I was reading The Prize over the weekend, I came across this passage, which struck me as a crystal ball. I’m going to share the entire section, because I think it might be a decent analogy for the period we’re entering, as we do to intelligence (non-human, of course) what the post-war oil boom did to energy:

The world is undoubtedly going to change dramatically, but I’m just dumb enough to believe it’s going to change for the much better, in much the same way it did as humans discovered, refined, and scaled oil. AI is a fuel.

This isn’t based on deep scientific research, or a review of the available literature. I’m not in SF at the parties where people casually share billion-dollar training secrets over nootropic cocktails. This is a gut feeling, and I think you probably feel it too. AI is incredibly useful software, but it’s nowhere near human-like. You’ve met humans. You’ve used AI. Do they feel remotely the same to you?

Or how about this: if the OpenAI team really believed they were on the cusp of birthing God, would they have agreed to ship a chained version with every new iPhone? That feels like the final leg in the common pattern in oil: discover, drill, refine, distribute.

It’s interesting. As I kept reading after writing this section, more parallels kept popping up, like the competition among the oil companies to market their products. Owning the wellhead (where the oil is pumped) was half the battle, but owning distribution became equally important. Oil companies were selling a miracle product, but it was a commodity miracle. Yergin writes:

Does that sound familiar?

Oil did reshape the world in extravagant ways. It led to suburbs and motels and commercial air travel and all sorts of things that John D. Rockefeller himself never could have predicted. AI will do the same. But it’s important to consider that the leading AI labs might be racing hard to outdo one another in extravagant claims in order to distribute a (super amazing) commodity product.

Why does it matter?

One of the things I asked myself as I listened to both the Leopold and Francois conversations was: why does it matter? Who cares how exactly we define AGI, or whether LLMs are the path there? Is this whole debate just a nerd snipe circle jerk?

I’ve largely avoided writing AI thought pieces because I’ve been unsure about the answer to that question, but as I’ve written this, I’ve come to think it matters for a couple of reasons.

First, these conversations impact how governments approach AI. If AI is a God, or at least a Superweapon the likes of which the world has never seen, governments should nationalize it, lock it down, and regulate it to death. If it’s Oil, they should do whatever they can to encourage and promote its development, to ensure that their country gets the economic and security benefits that come with access to a powerful commodity and the entrepreneurial creativity around its many applications.

Second, they impact how people see their place in the world going forward. If AI is a God, or at least a Superintelligence that’s superior to us on all measures, it’s easy to give up and assume that whatever work we do to better ourselves now will be for naught. This seems crazy, but people really are deciding not to have kids because of climate change; imagine what they’ll decide when they think the future will be owned by Superintelligent Machines. If it’s Oil, people should get to work thinking about all of the ways they can use it to fuel the things that they do.
Oil didn’t spontaneously grow suburbs; humans like William Levitt realized that gas-powered cars meant more people could live in houses with a yard and a white picket fence. The American Dream as we picture it has oil to thank, and with a new oil, humans can dream up new American Dreams.

Oil came with its own challenges. People lost jobs. Countries fought wars. Oil upended the old way of life and replaced it with a new one. AI will likely do the same. But challenges are surmountable, as long as people believe the situation isn’t hopeless. Recognizing that AI is Oil means shedding hyperbole so that people can address the very real challenges it will bring.

Human creativity is the greatest resource. We discovered and refined oil. We created AI. Despite what people competing to distribute their brand of AI tell you, I haven’t seen any evidence that AI will match, let alone replace, that creative spark any time soon.

This isn’t to say AI is not making incredibly fast progress. It is. Yesterday, DeepSeek took the top spot on a coding leaderboard, Runway dropped its stunning Gen-3 Alpha video generator, and Ryan Greenblatt made the aforementioned progress on ARC-AGI using LLMs. YESTERDAY.

It’s just to say that that is great. Progress means growth. We will need rack after rack of chips and gigawatt after gigawatt of new energy capacity. That’s a lot of good jobs.

So bring on the models that can solve physics, that can reason on problems they’ve never seen, that can help cure diseases that have stumped scientists using old tools; hell, bring on the models that can make this entire argument better than I can, when I ask them to. Let humans keep pushing the boundaries of new knowledge, pushing the frontiers of our ambitions, and setting ever-greater goals to match our ever-more-capable tools.

I bet we’re going to look back on this period from 2071 the way people in 1971 looked back on the discovery of oil.
And hey, it’s an easy bet to make: if I’m wrong, if AGI builds ASI that kills us all, you won’t be around to rub it in my face. Find me an AI clever enough to make that bet.

Thanks to my AI editor, Claude Opus, for giving me feedback when I asked it to.

That’s all for today. We’ll be back in your inbox with a Weekly Dose on Friday.

Thanks for reading,

Packy
