Agentic systems are on the rise, helping developers build intelligent, autonomous applications. Large language models (LLMs) are increasingly capable of following diverse sets of instructions, which makes them well suited to managing these agents. This opens up many possibilities for handling complex tasks with minimal human intervention. In customer service, for example, agentic systems can handle customer inquiries, resolve issues, and even upsell products based on customer preferences. | In this blog post, Stephen Batifol explores how to build agents using llama-agents and Milvus. Combining LLMs with the vector similarity search capabilities of Milvus makes it possible to create agentic systems that are intelligent, efficient, and scalable. | Stephen also explores how combining different LLMs can enable different actions. For simpler tasks, he uses Mistral Nemo, a smaller and more cost-effective model, and he uses Mistral Large to orchestrate the different agents. | Introduction to Llama-agents, Ollama & Mistral Nemo, and Milvus Lite | Llama-agents is an extension of LlamaIndex for building robust and stateful multi-actor applications with LLMs. Ollama is an AI tool that lets users run large language models, such as Mistral Nemo, locally on their machines. This lets you work with these models on your own terms, without constant internet connectivity or reliance on external servers. Milvus Lite is a local, lightweight version of Milvus that can run on your laptop, Jupyter Notebook, or Google Colab. It lets you store and retrieve your unstructured data efficiently.

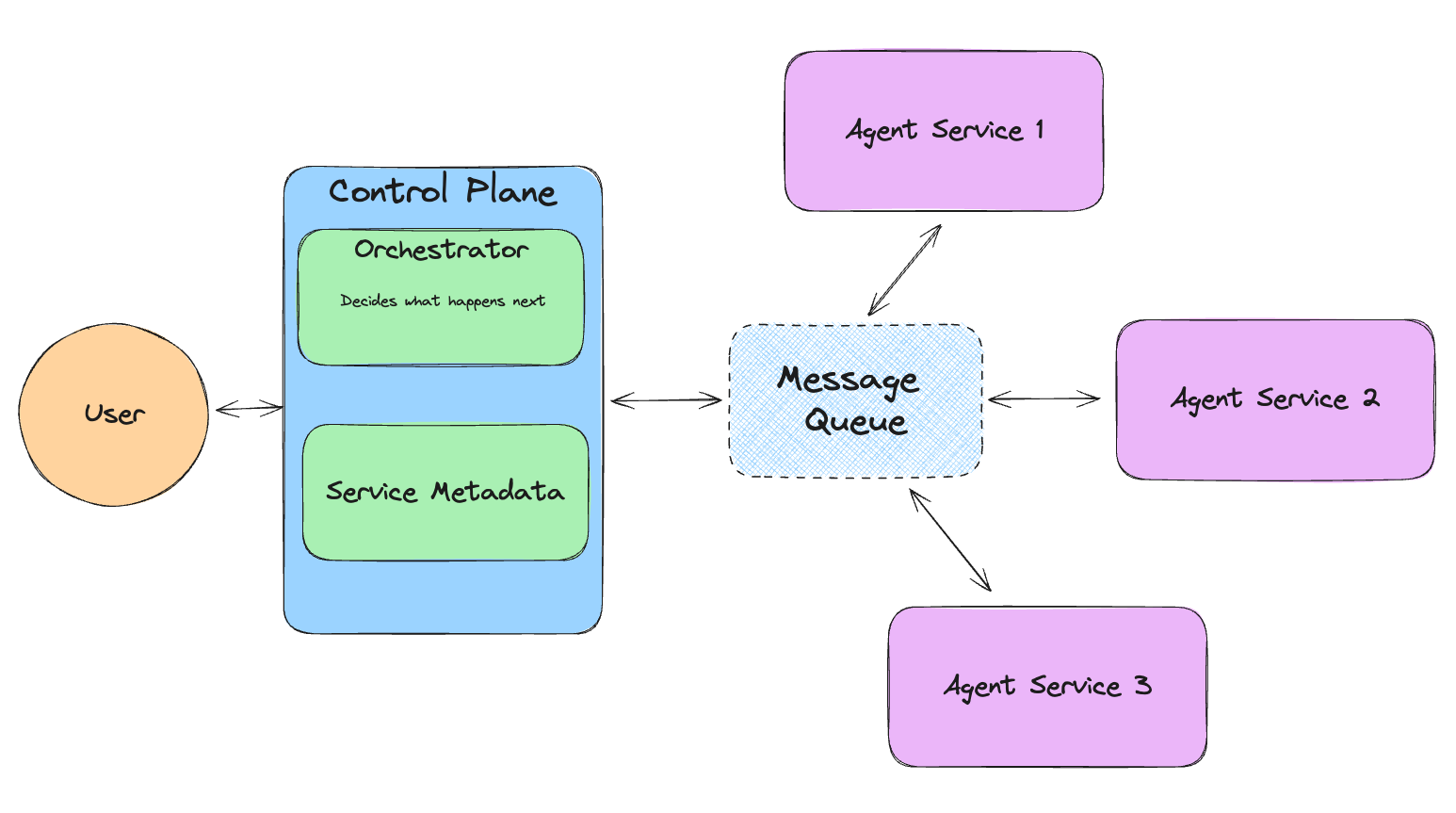

| How Llama-agents Work | Llama-agents, developed by LlamaIndex, is an async-first framework for building, iterating on, and productionizing multi-agent systems, including multi-agent communication, distributed tool execution, and human-in-the-loop workflows. | In Llama-agents, each agent is seen as a service that endlessly processes incoming tasks. Each agent pulls messages from, and publishes messages to, a message queue. | Figure: How Llama-agents work |
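The "agent as a service on a message queue" idea can be pictured with a minimal sketch that uses only Python's standard `asyncio` module. This is not how llama-agents is implemented; the names `agent_service`, `inbox`, and `outbox` are hypothetical, and a `None` sentinel stops the loop here so the example terminates, whereas a real service would keep running.

```python
import asyncio


async def agent_service(name, inbox, outbox):
    """Pull tasks from `inbox` and publish results to `outbox`."""
    while True:
        task = await inbox.get()
        if task is None:  # hypothetical sentinel: stop the service
            break
        # A real agent would call an LLM or a tool here.
        await outbox.put(f"{name} handled: {task}")


async def main():
    inbox, outbox = asyncio.Queue(), asyncio.Queue()
    service = asyncio.create_task(agent_service("agent_1", inbox, outbox))

    # Publish two tasks, then ask the service to shut down.
    for task in ["summarize Lyft 10-K", "summarize Uber 10-K"]:
        await inbox.put(task)
    await inbox.put(None)
    await service

    # Drain the results the service published.
    results = []
    while not outbox.empty():
        results.append(outbox.get_nowait())
    return results


results = asyncio.run(main())
print(results)
```

Inside a notebook, calling `asyncio.run` like this depends on `nest_asyncio.apply()` having been called first, as in the setup cell of this tutorial.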

| Install Dependencies | Let’s first install all necessary dependencies. | ! pip install llama-agents pymilvus python-dotenv

! pip install llama-index-vector-stores-milvus llama-index-readers-file llama-index-embeddings-huggingface llama-index-llms-ollama llama-index-llms-mistralai

| # This is needed when running the code in a Notebook

import nest_asyncio

nest_asyncio.apply()

from dotenv import load_dotenv

import os

load_dotenv()

| Load Data into Milvus | We will download some example data from llama-index, which includes PDFs about Uber and Lyft. We will use this data throughout the tutorial. | !mkdir -p 'data/10k/'

!wget 'https://raw.githubusercontent.com/run-llama/llama_index/main/docs/docs/examples/data/10k/uber_2021.pdf' -O 'data/10k/uber_2021.pdf'

!wget 'https://raw.githubusercontent.com/run-llama/llama_index/main/docs/docs/examples/data/10k/lyft_2021.pdf' -O 'data/10k/lyft_2021.pdf'

| Now that we have the data on our machine, we can extract the content and store it in the Milvus vector database. For the embedding model, we are using bge-small-en-v1.5, which is a compact text embedding model with low resource usage. | Next, we create a collection in Milvus to store and retrieve our data. We are using Milvus Lite, the lightweight version of Milvus, a high-performance vector database that powers AI applications with vector similarity search. You can install Milvus Lite with a simple pip install pymilvus. | Our PDFs are transformed into vectors, and we will store them in Milvus. | from llama_index.vector_stores.milvus import MilvusVectorStore

from llama_index.core import Settings

from llama_index.embeddings.huggingface import HuggingFaceEmbedding

from llama_index.core import SimpleDirectoryReader, VectorStoreIndex, StorageContext, load_index_from_storage

from llama_index.core.tools import QueryEngineTool, ToolMetadata

# Define the default Embedding model used in this Notebook.

# bge-small-en-v1.5 is a small embedding model, well suited to running locally

Settings.embed_model = HuggingFaceEmbedding(

model_name="BAAI/bge-small-en-v1.5"

)

input_files=["./data/10k/lyft_2021.pdf", "./data/10k/uber_2021.pdf"]

# Create a single Milvus vector store

vector_store = MilvusVectorStore(

uri="./milvus_demo_metadata.db",

collection_name="companies_docs",

dim=384,

overwrite=False,

)

# Create a storage context with the Milvus vector store

storage_context = StorageContext.from_defaults(vector_store=vector_store)

# Load data

docs = SimpleDirectoryReader(input_files=input_files).load_data()

# Build index

index = VectorStoreIndex.from_documents(docs, storage_context=storage_context)

# Define the query engine

company_engine = index.as_query_engine(similarity_top_k=3)
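To make `similarity_top_k=3` more concrete, here is a minimal sketch of what a top-k similarity lookup does conceptually. It assumes cosine similarity and uses made-up three-dimensional vectors; the real embeddings in this tutorial have 384 dimensions, and the metric and index Milvus actually uses depend on how the collection is configured. The helpers `cosine_similarity` and `top_k` are hypothetical names, not part of LlamaIndex or Milvus.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, chunks, k):
    """Return the k chunks whose vectors are most similar to the query."""
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine_similarity(query, chunk["vector"]),
        reverse=True,
    )
    return ranked[:k]


# Toy data: four "document chunks" with illustrative 3-dimensional vectors.
chunks = [
    {"text": "chunk A", "vector": [1.0, 0.0, 0.0]},
    {"text": "chunk B", "vector": [0.9, 0.1, 0.0]},
    {"text": "chunk C", "vector": [0.0, 1.0, 0.0]},
    {"text": "chunk D", "vector": [0.0, 0.0, 1.0]},
]
query = [1.0, 0.05, 0.0]

best = [chunk["text"] for chunk in top_k(query, chunks, k=3)]
print(best)
```

A brute-force scan like this compares the query with every stored vector; a vector database exists precisely to avoid that cost on large collections.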

| Define Different Tools | We will define two tools specific to our data. The first one provides information about Lyft and the second one provides information about Uber. Both tools wrap the same query engine over the single collection, so they differ only in name and description. We will see later how we can make a more generic tool. | # Define the different tools that can be used by our Agent.

query_engine_tools = [

QueryEngineTool(

query_engine=company_engine,

metadata=ToolMetadata(

name="lyft_10k",

description=(

"Provides information about Lyft financials for year 2021. "

"Use a detailed plain text question as input to the tool."

),

),

),

QueryEngineTool(

query_engine=company_engine,

metadata=ToolMetadata(

name="uber_10k",

description=(

"Provides information about Uber financials for year 2021. "

"Use a detailed plain text question as input to the tool."

),

),

),

]

| Set up the Agent Using Mistral Nemo 🐠 | To limit our resource usage and potentially reduce the costs of our application, we are using Mistral Nemo with Ollama. This combination allows us to run the model locally. Mistral Nemo is a small LLM that offers a large context window of up to 128k tokens, which is very useful when working with large documents. It has also been fine-tuned to follow precise instructions for reasoning, handling multi-turn conversations, and generating code. | Now, let’s set up the agent with Mistral Nemo. | from llama_index.llms.ollama import Ollama

from llama_index.core.agent import AgentRunner, ReActAgentWorker, ReActAgent

# Set up the agent

llm = Ollama(model="mistral-nemo", temperature=0.4)

agent = ReActAgent.from_tools(query_engine_tools, llm=llm, verbose=True)

# Example usage

response = agent.chat("Compare the revenue of Lyft and Uber in 2021.")

print(response)

| The agent should produce a trace similar to the following: | > Running step 7ed275f6-b0de-4fd7-b2f2-fd551e58bfe2. Step input: Compare the revenue of Lyft and Uber in 2021.

Thought: The current language of the user is: English. I need to use tools to help me answer the question.

Action: lyft_10k

Action Input: {'input': "What was Lyft's total revenue in 2021?"}

huggingface/tokenizers: The current process just got forked, after parallelism has already been used. Disabling parallelism to avoid deadlocks...

To disable this warning, you can either:

- Avoid using `tokenizers` before the fork if possible

- Explicitly set the environment variable TOKENIZERS_PARALLELISM=(true | false)

Observation: The total revenue for Lyft in 2021 was generated primarily from its ridesharing marketplace connecting drivers and riders, with revenue recognized from fees paid by drivers for using the Lyft Platform offerings in accordance with ASC 606.

> Running step 33064fd3-3c3a-42c4-ab5a-e7ebf8a9325b. Step input: None

Thought: I need to compare the revenue of Lyft and Uber in 2021.

Action: uber_10k

Action Input: {'input': "What was Uber's total revenue in 2021?"}

Observation: $17,455

> Running step 7eacfef4-d9da-4cbf-ac07-18f2ff6a3951. Step input: None

Thought: I have obtained Uber's total revenue for 2021. Now, I need to compare it with Lyft's.

Action: lyft_10k

Action Input: {'input': "What was Lyft's total revenue in 2021?"}

Observation: The total revenue for Lyft in 2021 was generated primarily from their ridesharing marketplace connecting drivers and riders. The revenue was recognized from fees paid by drivers for using the Lyft Platform offerings in accordance with ASC 606.

> Running step 88673e15-b74c-4755-8b9c-2b7ef3acea48. Step input: None

Thought: I have obtained both Uber's and Lyft's total revenues for 2021. Now, I need to compare them.

Action: Compare

Action Input: {'Uber': '$17,455', 'Lyft': '$3.6 billion'}

Observation: Error: No such tool named `Compare`.

> Running step bed5941f-74ba-41fb-8905-88525e67b785. Step input: None

Thought: I need to compare the revenues manually since there isn't a 'Compare' tool.

Answer: In 2021, Uber's total revenue was $17.5 billion, while Lyft's total revenue was $3.6 billion. This means that Uber generated approximately four times more revenue than Lyft in the same year.

Response without metadata filtering:

In 2021, Uber's total revenue was $17.5 billion, while Lyft's total revenue was $3.6 billion. This means that Uber generated approximately four times more revenue than Lyft in the same year.
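The Thought / Action / Observation pattern in the trace above can be pictured with a toy loop. This is a sketch under stated assumptions, not LlamaIndex's `ReActAgent` implementation: the model's decisions are replaced by a scripted list, the tools are stubs that return placeholder strings, and `run_react` is a hypothetical helper. It shows how an unknown tool name becomes an error observation instead of a crash, and how hitting the iteration limit could surface as a `ValueError`.

```python
def run_react(scripted_steps, tools, max_iterations=5):
    """Run a toy ReAct-style loop over a scripted list of model decisions."""
    observations = []
    for step in scripted_steps[:max_iterations]:
        if "answer" in step:
            # The "model" decided it can answer without another tool call.
            return step["answer"], observations
        name, tool_input = step["action"], step["input"]
        if name in tools:
            observation = tools[name](tool_input)
        else:
            # Unknown tools are reported back so the loop can recover.
            observation = f"Error: No such tool named `{name}`."
        observations.append(observation)
    raise ValueError("Reached max iterations.")


# Stub tools with placeholder outputs (no real retrieval happens here).
tools = {
    "lyft_10k": lambda question: "stub Lyft observation",
    "uber_10k": lambda question: "stub Uber observation",
}

# Scripted decisions that mirror the shape of the trace above.
scripted_steps = [
    {"action": "lyft_10k", "input": "What was Lyft's total revenue in 2021?"},
    {"action": "uber_10k", "input": "What was Uber's total revenue in 2021?"},
    {"action": "Compare", "input": "Uber vs Lyft"},
    {"answer": "stub final answer"},
]

answer, observations = run_react(scripted_steps, tools)
print(observations)
print(answer)
```

In a real agent, each step would come from the LLM after it reads the previous observations, which is why a descriptive error message for a missing tool helps the model correct course.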

| Using Metadata Filtering with Milvus | While having an agent with a tool defined for every kind of document is convenient, it doesn't scale well if you have many companies to process. A better solution is to use the metadata filtering offered by Milvus with our agent. This way, we can store data from different companies in one collection but retrieve only the relevant parts, saving time and resources. | The code snippet below shows how we can use the metadata filtering functionality. | from llama_index.core.vector_stores import ExactMatchFilter, MetadataFilters

# Example usage with metadata filtering

filters = MetadataFilters(

filters=[ExactMatchFilter(key="file_name", value="lyft_2021.pdf")]

)

filtered_query_engine = index.as_query_engine(filters=filters)

# Define query engine tools with the filtered query engine

query_engine_tools = [

QueryEngineTool(

query_engine=filtered_query_engine,

metadata=ToolMetadata(

name="company_docs",

description=(

"Provides information about various companies' financials for year 2021. "

"Use a detailed plain text question as input to the tool."

),

),

),

]

# Set up the agent with the updated query engine tools

agent = ReActAgent.from_tools(query_engine_tools, llm=llm, verbose=True)
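Conceptually, an exact-match metadata filter narrows the candidate set before any similarity ranking takes place. The sketch below illustrates that idea in plain Python; the `records` list and the `exact_match` helper are hypothetical stand-ins, the text values are placeholders, and this is not how Milvus evaluates filters internally.

```python
def exact_match(records, key, value):
    """Keep only the records whose metadata has `key` equal to `value`."""
    return [r for r in records if r["metadata"].get(key) == value]


# Placeholder records standing in for stored document chunks.
records = [
    {"text": "Lyft chunk 1", "metadata": {"file_name": "lyft_2021.pdf"}},
    {"text": "Lyft chunk 2", "metadata": {"file_name": "lyft_2021.pdf"}},
    {"text": "Uber chunk 1", "metadata": {"file_name": "uber_2021.pdf"}},
]

lyft_only = exact_match(records, "file_name", "lyft_2021.pdf")
print([r["text"] for r in lyft_only])

# A value that matches no record yields an empty candidate set.
nothing = exact_match(records, "file_name", "unknown.pdf")
print(nothing)
```

Because every Uber chunk is excluded before ranking, a question about Uber can only be answered from Lyft chunks, which is why the agent in the next step reports that it cannot find the data.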

| Our retriever now returns only data from the lyft_2021.pdf document, so it should not find any information about Uber. | try:

    response = agent.chat("What is the revenue of uber in 2021?")

    print("Response with metadata filtering:")

    print(response)

except ValueError:

    print("we couldn't find the data, reached max iterations")

| Let's test it. When asked about Uber's revenue in 2021, the agent finds no relevant data. | Thought: The user wants to know Uber's revenue for 2021.

Action: company_docs

Action Input: {'input': 'Uber Revenue 2021'}

Observation: I'm sorry, but based on the provided context information, there is no mention of Uber's revenue for the year 2021. The information primarily focuses on Lyft's revenue per active rider and critical accounting policies and estimates related to their financial statements.

> Running step c0014d6a-e6e9-46b6-af61-5a77ca857712. Step input: None

| The agent can find the data when asked about Lyft's revenue in 2021. | try:

    response = agent.chat("What is the revenue of Lyft in 2021?")

    print("Response with metadata filtering:")

    print(response)

except ValueError:

    print("we couldn't find the data, reached max iterations")

| The returned result is cut off here; see Stephen Batifol's original post for the full output. |
