Not Boring by Packy McCormick - Scale: Rational in the Fullness of Time

Welcome to the 1,160 newly Not Boring people who have joined us since Monday! Join 58,051 smart, curious folks by subscribing.

🎧 To get this essay straight in your ears: listen on Spotify or Apple Podcasts

Today’s Not Boring is brought to you by… Fundrise

Listen, the stock market can be great. Sometimes everything’s green and you look like a genius. But the stock market can also be unpredictable. YOLO’ing all of your money isn’t a strategy. You should give yourself some peace of mind by diversifying your portfolio.

That’s where Fundrise comes in. They make it easy to invest in private market real estate, an alternative to the stock market, but one that used to only be accessible to the ultra-wealthy. Fundrise changed that. Now, you can access real estate’s historically strong, consistent, and reliable returns with just a $500 minimum investment. Diversification is a beautiful thing. Start diversifying with Fundrise today.

Hi friends 👋,

Happy Monday! Hot Vax Summer is in full swing, and while all of you are out trying to remember how to party, I’m sitting in a basement doing my darndest to expose myself as a fraud.

My job is to go out to the edges and try to explain things that seem crazy and complex, first to myself and to all of you in the process. I’m not writing as an expert, but as someone who’s taking you along on my own exploration. I’ll get some things wrong, and you’ll call me out, and hopefully we all get a little smarter.

In the process, two overarching themes for Not Boring have emerged:

Today’s essay is about Scale AI, a company that started with a non-obvious wedge into a large market that’s growing and evolving rapidly, and that will one day grow and evolve faster than humans can comprehend. It’s a company you should know about:

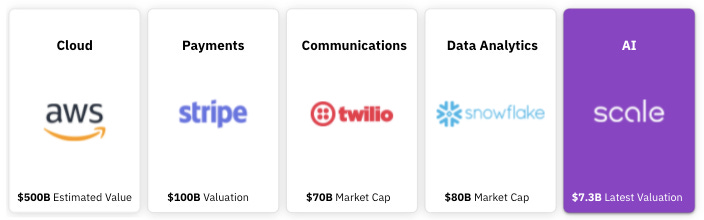

I’m certainly not an AI/ML expert. I will certainly get some things wrong and welcome feedback. I’m just taking you along on my learning and diligence.

A note: Not Boring occupies a unique and tricky position. I write about the ideas and companies that fascinate me most and best fit the themes above, but I don’t want to sit passively and watch, so I often invest in the companies I see as best-positioned to bring about and capture value from the trends, too. If I were a journalist, this would be frowned upon. But I’m not. I’m just trying to dive in as deeply as I can to understand all of this better, bring back what I learn, expose my thought process (and potential conflicts) openly, and help shape the future in a small way.

Full disclosure: I’m an investor in Scale, but didn’t receive compensation from Scale for this piece.

Let’s get to it.

Scale: Rational in the Fullness of Time

On Thanksgiving last year, Alexandr Wang posted his first essay to Substack. The essay, Hire People Who Give a Shit, was good. So was the next one, Information Compression, which he wrote on December 5th. Both provided well-reasoned glimpses into the way Wang runs his company day-to-day.

But the essays weren’t the most illuminating thing about the Substack. The name he chose for it was: Rational in the Fullness of Time.

The name is fitting given Wang’s long-term focus at his company, Scale. Scale has spent its first half-decade focused on the unassuming first step of the machine learning (ML) development lifecycle -- data labeling or annotation -- with a belief that data is the fundamental building block in ML and artificial intelligence (AI). For a while, that has meant that the company has looked like a commodity services business.
Scale exists to “accelerate the development of AI applications” by “building the most data-centric infrastructure platform.” Its core belief, and the assumption on top of which the business is built, is that “Data is the New Code.” Scale wants to be to AI what AWS is to the cloud, Stripe is to payments, Twilio is to communications, or Snowflake is to Data Analytics. Now, of course Scale wants to be like those companies. Who doesn’t? But it’s on a credible path:

In a decade, if Scale is successful, any company that wants to build something using AI or ML will just stitch together five different Scale services like they stitch together AWS services to build something online today. Scale could collect or generate data for you, label it, train the machine learning model, test it, tell you when there’s a problem, continue to feed it fresh, labeled data, and on and on. Via Scale APIs, companies of any size will be able to build AI-powered products by writing a few lines of code.

Take a second to appreciate that: within a decade, AI, long the stuff of sci-fi writers’ imaginations, will be as easy to implement as accepting a credit card is today. That’s mind-blowing.

But that’s in the future. First, data.

When Wang and co-founder Lucy Guo founded Scale out of Y Combinator in 2016, the company was called Scale API and its value prop was essentially that it was a more reliable Mechanical Turk with an API. They started with the least sexy-sounding piece of an incredibly sexy-sounding industry: human-powered data labeling.

Customers sent Scale data, and Scale worked with teams of contractors around the world to label it. Customers sent Scale pictures, videos, and Lidar point clouds, and Scale’s software-human teams sent back files saying “that’s a tree, that’s a person, that’s a stop light, that’s a pothole.” By using ML to identify the easy stuff first and routing more difficult requests to the right contractors, Scale could provide more accurate data more cheaply than competitors. Useful, certainly, but it’s hard to see how a business like that … scales. (I’m sorry, but I also can’t promise that will be the last scale pun.)

Scale’s ambitions are obfuscated by its starting point: using humans to build a seemingly commodity product. A bet on Scale is a bet that data labeling is the right starting point to deliver the entire suite of AI infrastructure products.
If Wang is right, if data is the new code, the biggest bottleneck for AI/ML development, and the right insertion point into the ML lifecycle, then the brilliance of the strategy will unfold, slowly at first then quickly, over the coming years. It will all look rational in the fullness of time.

Scale has a high ceiling. It has the potential to be one of the largest technology companies of this generation, and to usher in an era of technology development so rapid that it’s hard to comprehend from our current vantage point. But it hasn’t been all clear skies to date, and the future won’t be easy either. It will face competition from the richest companies and smartest people in the world. It still has a lot to prove.

In either case, Scale is a company you need to know. It’s also an excellent excuse to dive into the AI and ML landscape and separate fact from science fiction. It’s looking increasingly likely that AI will find itself in the technology impact pantheon alongside the computer, the internet, and potentially web3. The combination of all of those technologies will change the world in unpredictable ways, but one thing’s certain: the world only gets crazier.

We’re at an inflection point, so let’s get ready by studying:

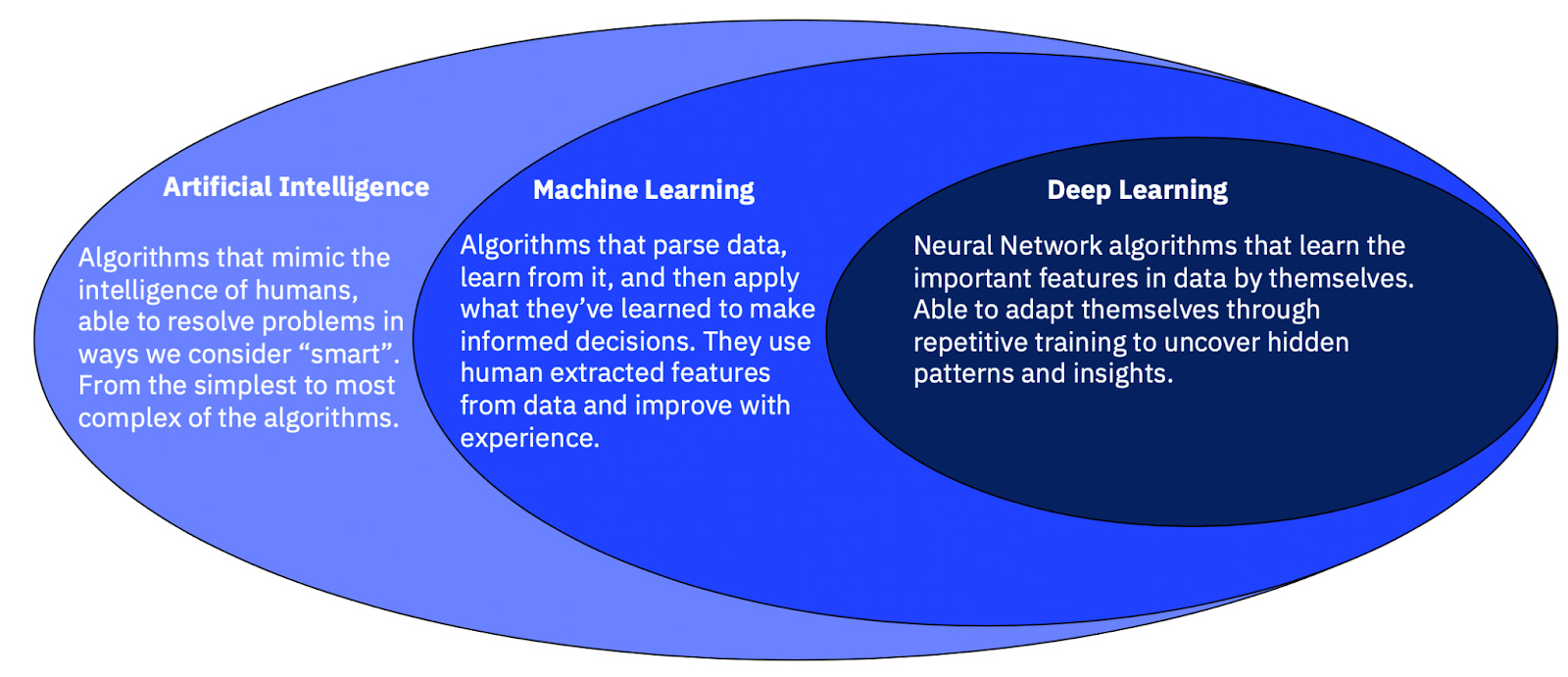

Before we get to Scale, though, we need to get on the same page with what AI and ML are.

The State of AI and ML

I’ll start with the punchline, and then get to the joke: whether you call it AI or ML, it’s useless without good data.

Now the joke. There’s a set of jokes among technical people whose premise is that machine learning is just a fancy way of saying linear regression and that artificial intelligence is just a fancy way of saying machine learning. No one made the joke better than this guy:

The idea is that machine learning is the real thing, written in the Python programming language, and AI is just a hype-y way of saying ML that people use to fundraise.

There are differences, though. I asked my friend Ben Rollert, the CEO of Composer and my smartest data scientist friend, how he would define the differences between AI and ML. His response seems pretty representative of the general conversation: AI is a broad bucket of “algorithms that mimic the intelligence of humans,” some of which exist today in machine learning and deep learning, and some of which still live only in the realm of sci-fi.

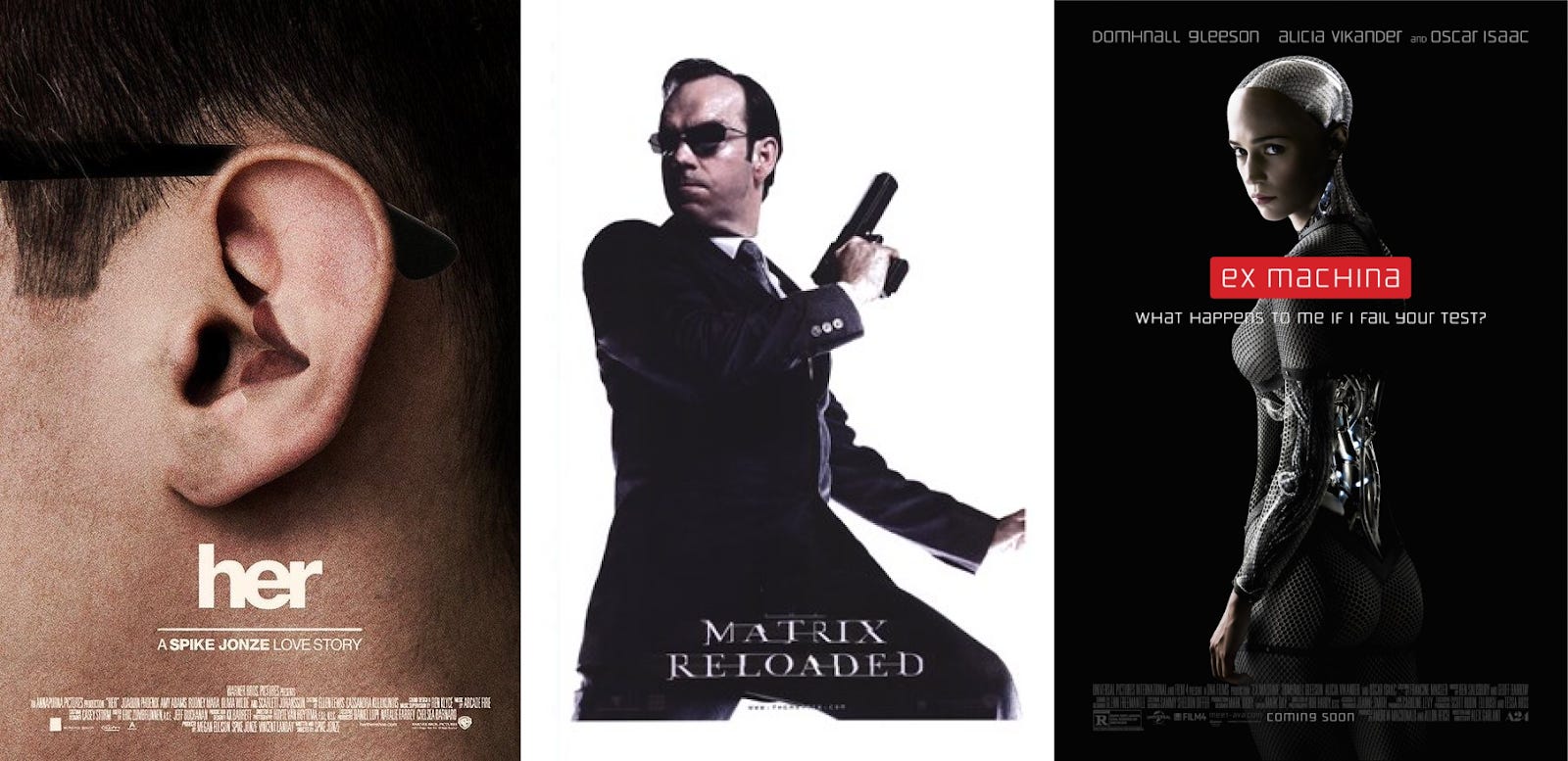

AI is split broadly into two groups: Artificial Narrow Intelligence (ANI) and Artificial General Intelligence (AGI). When we talk about AI applications today, we’re talking about ANI, or “weak” AI: algorithms that can outperform humans in a very specific subset of tasks, like playing chess or folding proteins. AGI, or “strong” AI, refers to the ability of a machine to learn or understand anything that a human can. This is the stuff of movies, like the voice assistant Samantha in Her, the Agents in The Matrix, or Ava in Ex Machina.

Most of the things that we call AI today fit into the subset of AI known as machine learning. ML, according to Ben, is:

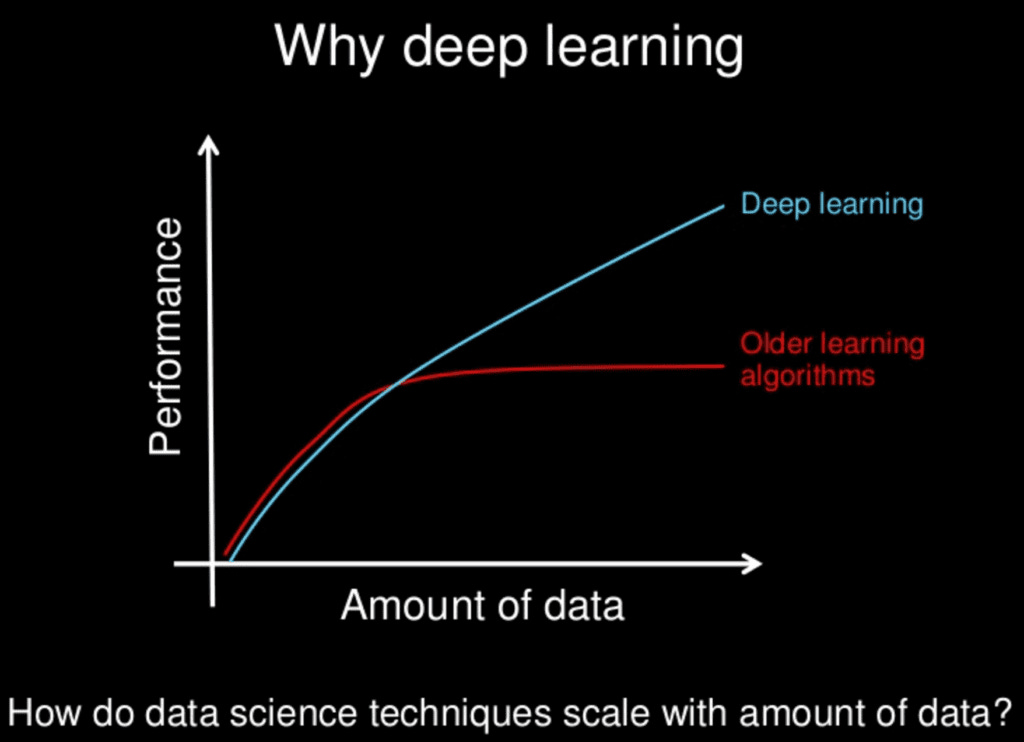

ML has been around since the 1990s, but over the past decade, a subfield within ML called deep learning has ignited ML and AI application development. According to Andrew Ng, a co-founder of Coursera and Google Brain, deep learning uses brain simulations, called artificial neural networks, to “make learning algorithms much better and easier to use” and “make revolutionary advances in machine learning and AI.” In a 2015 talk, Ng said that the revolutionary thing about deep learning is that it “is the first class of algorithms … that is scalable. Performance just keeps getting better as you feed them more data.”

Major improvements in ML and AI seem to come from step function changes in the amount of data a model can ingest. In 2017, researchers at Google and the University of Toronto developed a new type of neural network called the Transformer, which can be parallelized in ways that previous neural networks couldn’t, allowing it to handle significantly more data. Recent advancements in AI/ML like OpenAI’s GPT-3, which can write longform text given a prompt, or DeepMind’s AlphaFold 2, which solved the decades-old protein-folding problem, use Transformers to do so. GPT-3 has 175 billion parameters.

These advancements have led to a renaissance in the applications of AI and ML. I asked Twitter for some recent examples, and many of them are truly mind-blowing:

For all of the technological advancements, though, it all comes down to data. In his 2015 Extract Data Conference speech, Ng included this slide that highlights the benefit of deep learning:

What deep learning solved, and Transformers expanded on, is allowing models to continue to scale performance with more data. The question then becomes: how do you get more good data?
Getting to Scale

Scale has all the stuff that Silicon Valley darlings are made of: acronyms like AI, API, and YC, huge ambitions, young, brilliant college dropout founders, and an insight born of personal experience: AI needed more and better data.

Alexandr Wang was born in 1997 in Los Alamos, New Mexico, the son of two physicists at Los Alamos National Lab. Alexandr is spelled sans second “e” because his parents wanted his name to have eight letters for good luck. Whether through luck or genetics, Wang was gifted. He attended MIT, where he received a perfect 5.0 in a courseload full of demanding graduate coursework, before dropping out after freshman year.

Wang worked at tech companies Addepar and Quora, and did a brief stint at Hudson River Trading. In 2016, Wang (then 19) joined forces with Lucy Guo (then 21), a fellow college dropout (Carnegie Mellon) and a Thiel Fellow, and entered Y Combinator’s Spring 2016 batch.

They didn’t quite know what they were going to build when they entered, but Wang, like so many founders, hit upon a problem through personal experience. He told Business of Business that at MIT:

So Wang and Guo built Scale during YC, and launched it at the end of the program, in June 2016. Before it was Scale AI, they called it Scale API.

To learn about Scale’s evolution from API to AI, Hot-Dog/Not-Hot-Dog, How Scale is (actually) like Stripe, the Scale Bear Case, and the Scale Bull Case…

Thanks to Dan for editing, and to Alex, Ben, Neil, Henrique and others for the input.

Thanks for reading and see you on Thursday,

Packy
