What Meta (formerly Facebook) Knew About India

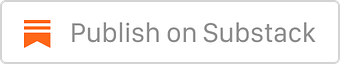

What Meta (formerly Facebook) Knew About India…was a lot. Particularly about user journeys, fake accounts, inauthentic campaigns, and more. And it did nothing.

Good Morning! A big hello to readers who signed up this week. This is The Signal’s weekend edition. Our story today is about a company now known as Meta. It takes a deeper look into how Facebook’s researchers and employees saw India, its largest market by user base. Also in today’s edition: the best reads from the week. Happy weekend reading.

While reading through the Facebook papers outed by whistleblower Frances Haugen, I had a sense of déjà vu. Prior to the 2019 elections, in an experiment to understand what Facebook looked like to a majority of its Indian users, I started a dummy Facebook page to study the information ecosystem. The evolution of the page was not very different to what is described in Facebook’s internal documents. Several people belonging to a certain political orientation “liked” the page. But even as I explored similar pages, the Facebook algorithm would kick in every time a page was “liked”, and recommend me more, taking me down the proverbial rabbit hole. It would recommend groups, and “joining” those would trigger more recommendations. Every group brought more, thanks to GYSJ, or Groups You Should Join, as we now know it’s called internally. The groups and pages were a familiar mix of misinformation or fake news, vulgarity or violent content, and in some cases, hate speech. Much of it violated Facebook’s “community standards.”

Now, reportage from a consortium of largely Western newspapers and agencies, based on the Facebook papers, has revealed that the social network knew exactly what its largest market by user base was doing on its platform. It deployed a test user who arrived at more or less the same conclusions that I had. Facebook knew how, egged on by AI, its users’ networking pathway progressed.
The crux of the matter is: “Facebook knew, and did nothing.” It faltered for a variety of reasons, including “political sensitivity” and “lack of classifiers”. Mark Zuckerberg was quick to call these revelations “a coordinated effort to selectively use leaked documents to paint a false picture of our company”.

“Explicitly political considerations”

The papers lay bare that Facebook’s operations in India are a black box and that it often fails to enforce its own policies, baulking at the prospect of government flak. A series of Wall Street Journal reports last year pointed out that Facebook’s decisions on hate speech in India were likely influenced by its business prospects in the country. Haugen has made a similar point: that the company puts profit above ethics.

There were other factors at play too. One note from December 2020 titled “Political Influences on Content Policy” outlined how Facebook routinely makes exceptions for powerful actors. “In India a politician who regularly posted hate speech was exempted by the Indian public policy team for normal punishment for explicitly political considerations. A Facebook spokesperson would only go as far as saying ‘this wasn’t the sole factor’,” employees said in a note.

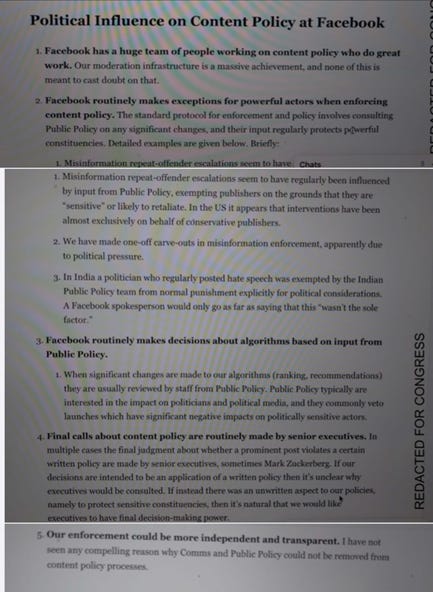

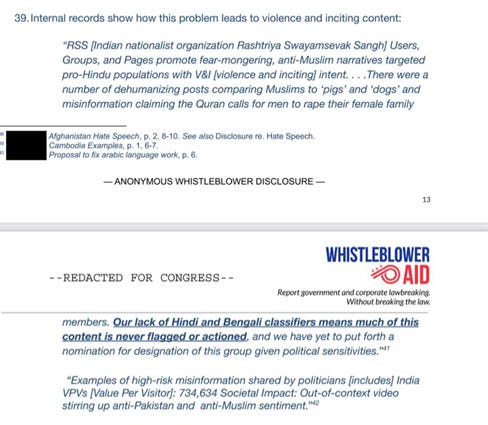

In a separate section titled 2020-08: India “dangerous individual” designation, a researcher wrote, “As far as I can see, there has been no explicit refutation of the claim that this decision was influenced by fear of political consequences for Facebook”.

A Meta spokesperson, in an e-mailed response to The Intersection’s detailed questionnaire, said, “We ban individuals or entities after following a careful, rigorous, and multi-disciplinary process. We enforce our Dangerous Organizations and Individuals policy globally.”

A former senior Facebook employee, Yael Eisenstat, in an interview with PBS, recounted her trip to India in 2018. “When we were there in 2018, we met lots of people who showed us without question about troll farms and fake engagement and hatred spreading. And they were imploring us to do something about it,” she said.

“But as these documents now prove to us, again, they were making political decisions to protect their relationship with the party in power in India. And those political decisions were part of the reasons they didn’t enforce some of their very own policies that could have possibly helped tamp down some of the misinformation and hatred that spreads in India,” Eisenstat, who was formerly the global head for election integrity operations for political ads, added.

Facebook researchers also noted that the company was yet to “put forth a nomination for designation” of groups and users affiliated with the Rashtriya Swayamsevak Sangh, “given political sensitivities”. The statement is in a memo which outlined how Facebook's inadequacy in global languages was leading to “global misinformation and ethnic violence”.

“Language issues”

While Facebook saw political sensitivities as existential, a lack of local expertise hobbled it further. India staff flagged an absence of resources, particularly in languages such as Hindi and Bengali (see screenshot above). Its oft-cited AI review system was ineffective because its training in local languages was limited.
A Meta source said that hate speech classifiers in Hindi were added in 2018, while Bengali was introduced in 2020. Classifiers for violence and incitement in Hindi and Bengali first came online in early 2021. Tamil and Urdu already had them, the source said. The New York Times report said, “Of India’s 22 officially recognised languages, Facebook said it has trained its AI systems on five”.

Assamese, for instance, was of interest in March ahead of the state elections this year. “Assam is of particular concern because we do not have an Assamese hate-speech classifier,” the planning document, revealed by the Wall Street Journal, said.

A Meta spokesperson added, “We’ve invested significantly in technology to find hate speech in various languages, including Hindi and Bengali. As a result, we’ve reduced the amount of hate speech that people see by half this year. Today, it’s down to 0.05%. Hate speech against marginalised (sic) groups, including Muslims, is on the rise globally. So we are improving enforcement and are committed to updating our policies as hate speech evolves online.”

Facebook’s community standards are not available in local languages in many countries, including India, making things difficult for users. One January 2021 note outlining hate speech in Afghanistan illustrates this point: “…neither the questions that direct a user to the Community Standards page nor the Community Standards are translated into local languages. This is particularly concerning against the backdrop of low literacy rates, low general awareness regarding what constitutes Hate Speech and low specific awareness regarding what Facebook’s policies about Hate Speech are”.

Meta sources told The Intersection that Facebook’s community standards are translated into 49 languages globally, including Hindi, Urdu, and Bengali.

“Inauthentic Behaviour”

There’s a term in Facebook-speak, Coordinated Inauthentic Behaviour (CIB), by which Facebook identifies influence operations and campaigns.
While it is hard to define, a Columbia Journalism Review report says Facebook’s “definition of coordinated inauthentic behaviour requires the use of fake accounts to mislead users”.

As the general election drew near in India in April 2019, Facebook clamped down on a network that was engaging in CIB. It also spotted similar behavioural patterns in West Bengal. While it is unclear if these were classified as CIB, its employees noted in “Adversarial Harmful Networks: India Case Study” that “40% of sampled top VPV (view port views) civic posters in West Bengal were fake/inauthentic. The highest VPV-user to be assessed as inauthentic had more than 30M (million) accrued…Coordinated inauthentic actors seed and spread civic content to propagate political narratives…”.

Facebook also estimated that about 11% of its monthly active users in 2020 were accounted for by SUMA, or single user multiple accounts. That number grew to 40% by 2021. One memo, citing an internal presentation, noted that “a party official for India’s BJP used SUMAs to promote pro-Hindi messaging”. It said, “[A] BJP IT Cell worker [shared] coordinated messaging instructions to supporters [with a] copypaste campaign…targeting politically sensitive tags … Fanout from [the post]: use of SUMA and 103 suspicious entities across IN (India) & BD (Bangladesh)”.

Who will bell the cat?

Although India is Facebook’s largest market by user base, the concerns that Haugen raised and the Western media reported largely centre around the US and Europe. Some reactive steps have been taken by the Delhi government. The Centre has also asked questions. But there is no furore in India, which does not even have a privacy law yet.

The US legal framework in the works may turn out to be something like the Foreign Corrupt Practices Act (FCPA): effective in the US, feeble outside it. That Facebook spends only 13% of its misinformation budget outside the US, that is, on the rest of the world, is a reflection of this lopsided priority.
The FCPA punishes US companies for bribing officials or governments of other countries to promote business interests there. For instance, Walmart had to cough up $282 million for allegedly bribing officials in Mexico, Brazil and India. Similarly, Mondelez, which makes Cadbury chocolates, paid $13 million to the Securities and Exchange Commission to settle allegations that it violated the FCPA. But in both cases, the companies were untouched in India. The Walmart probe in India was set aside by courts, and local proceedings against Mondelez, allegedly for building a phantom factory in Himachal Pradesh, continue to be tangled in the Indian judicial system.

Even so, a US law that applies to social media companies’ conduct worldwide may still go some way in stamping out misinformation and hate speech in other countries too. But even US legislators could be too far from envisioning it.

All screenshots have been sourced from documents that were released by publications belonging to the Consortium.

ICYMI

FB's Rage Push: A Facebook (now Meta) timeline filled with angry voices isn't a one-off. A Washington Post article has found that engineers at the social media platform pushed provocative content and angry reactions more than sober likes. Another addition to its list of wrongdoings.

Million-Dollar Chat: A group chat worth millions of dollars where decentralised tokens are required to become members. Decentralised Autonomous Organisations are private blockchain rooms of the future where everything related to crypto is discussed among community members; investors are hooked.

Gender Politics: In a country where female participation in politics is among the world's lowest, women are also facing sexual harassment. This piece details how women in Japan face heckling, bullying and harassment when they enter politics. The glass ceiling is far from being shattered.

Sufi Dreams Blocked: When Yemberzal, an all-women Sufi band from Kashmir, wanted to further their musical dreams, it wasn't patriarchy that played spoiler. The slew of internet blackouts in the volatile region became the biggest dampener to their ambitions. These women hope to spread their art through social media.

ASMR For Anxiety: It is the third-most popular YouTube search term. But could autonomous sensory meridian response (aka ASMR) be used as a supplementary cure for anxiety and depression? That's what mental health experts are now discussing.

Loo With A View: Have you visited a public toilet in India that is also highest ranked globally for aesthetics? This listicle of the best-looking toilets across the globe may come as a surprise. Those passing through Thane should definitely have a pee-k. Only women may enter, though.

If you liked this post from The Signal, why not share it?
