Not Boring by Packy McCormick - Evolving Minds

Welcome to the 1,546 newly Not Boring people who have joined us since last Monday! If you haven’t subscribed, join 201,790 smart, curious folks on our journey to 1 million.

Today’s Not Boring is brought to you by… Pesto

Global sourcing + AI-based screening & matching

Pesto is building the most effective way to scale global engineering teams, bringing efficiency and predictability to your hiring process. Here’s how:

Pesto is trusted by hyper-growth companies such as Pulley, Snorkel AI, Alloy and hundreds of high quality startups and scaleups. Whether you're building a team from scratch, developing a new AI product, or supercharging your existing team, Pesto has you covered. When you talk to them, tell them Not Boring sent you and get 25% off your first hire!

Evolving Minds

The two most important intellectual turning points in history followed a similar pattern: A new knowledge transfer technology unlocked radical new modes of human thought.

The phonetic alphabet preceded the creation of math, science, and philosophy in Ancient Greece. Humans began to view the things that happened on earth and in the heavens through the lens of natural laws instead of the whims of the gods. The phonetic alphabet enabled the composability of ideas. It broadened access to reading and writing beyond professional scribes, who had an interest in maintaining the status quo, and allowed any intelligent (upper class) person to express and share complex ideas more easily.

The printing press preceded the creation of the scientific method, Heliocentricity, Newtonian physics, and calculus during the Scientific Revolution. Humans began to systematically investigate and understand the world around them using empirical evidence, logical reasoning, and mathematical tools, challenging long-held beliefs and assumptions. The printing press enabled the composability of science. As SlateStarCodex wrote, “If a scientist discovers something, he can actually sent [sic] his work to other scientists in an efficient way, who can then build upon it. This was absolutely not the case for previous scientists, which is why not much happened during those periods.”

In both cases, we used new tools to better understand the world so that we might better control it.
I’m writing about this now on the off chance that we’re in the beginning of a third intellectual revolution, one that enables the composability of knowledge and complex problem-solving. Large Language Models (LLMs) shrink the gap between thought and execution, giving anyone with good ideas access to conversational formats in which to test them against humanity’s accumulated knowledge and to bring them to life in code.

The pattern goes something like this. Humans create scattered knowledge, a knowledge transfer technology comes along that lets us harness and spread that knowledge, some humans use that technology and generate a fresh insight about how to better understand the world, and we create better knowledge until the next major knowledge transfer technology comes along, which, paired with a fresh human insight, allows us to view and work with all of the prior knowledge in new ways.

Of course, it remains to be seen if LLMs (or some new AI architecture) are the third major intellectual advance. I would have guessed the internet would be. And if it is, it’s impossible to predict what new leap in human thinking it unlocks. If I knew, I’d be up there with Anaximander and Copernicus. That’s for one of you to figure out.

What I do know is that it won’t be LLMs that make the next counterintuitive leap. It will be humans. That’s our role in this dance. I suspect that leap will require that we use new tools to push the limits of our minds’ unique capabilities.

“We shape our tools, and thereafter our tools shape us.” This John M. Culkin quote, often misattributed to his more famous contemporary, Marshall McLuhan, captures the two-way relationship between humans and technology that I think is missing from the current dialogue about humans’ role in an AI-powered future. I certainly hadn’t given it enough thought. Too frequently, those explorations, mine included, view human performance as a static thing.
We can retreat ever further into the remaining “uniquely human” skills like creativity and empathy, while we wait to see if AI can outdo us at those skills, too.

But humans and technology coevolve and contribute different pieces to progress. The phonetic alphabet facilitated the growth and dissemination of knowledge, and, if writing sharpens thought, changed how we think. But the phonetic alphabet couldn’t challenge the prevailing belief, shared by all cultures to that point, that the gods were behind everything that happened on earth. Not even today’s most advanced LLM could do that. Making that leap required a radical human insight, one that came from knowledge, experience, and intuition.

That’s the argument I’m going to make today. If AI is the third inflection point in humanity’s intellectual history, it will be through a combination of the powerful new capabilities at our fingertips and those unique, transformative insights that only human minds have demonstrated thus far.

Those world-shifting insights are harder to come by today than they were when people thought the earth was a flat thing floating on water or held up by turtles, or when knowledge was acquired through passed-down truths and deductive reasoning. But we can get smarter, too, and I expect that we will through a combination of training with AI, a renewed focus on mind-expanding techniques like meditation, breathwork, psychedelics, lucid dreaming, and sleep, networked collaboration, hands-on experiences, and even biological and technological enhancements. Far from rolling over and letting AI do our thinking, we’ll need to use all of the tools at our disposal to ensure that our contributions to human-technology collaboration keep pace.

To make the argument, we’ll cover:

Technology alone won’t be enough. To make counterintuitive leaps requires new perspectives and fresh insights. The kind that Anaximander made with the help of the phonetic alphabet.

Anaximander and the Phonetic Alphabet

Anaximander is the most underappreciated thinker in human history, and maybe the most important. That’s the premise of Italian quantum physicist Carlo Rovelli’s new book, Anaximander: And the Birth of Science, and the book is convincing. We’ll explore Anaximander’s contributions, and how he arrived at them, to better understand the interplay between new knowledge transfer technologies and new kinds of human thoughts.

Born around 610 BCE in Miletus, an ancient Greek city located in what is now modern-day Turkey, Anaximander was a pre-Socratic Greek philosopher. Anaximander’s contributions were vast: he set the earth floating in space, explained the weather without blaming the gods, surmised that humans evolved from fish, proposed a substrate that we can’t see, and even came up with something like the Big Bang. 2,600 years ago!

According to Rovelli, “His is the first rational view of the natural world. For the first time, the world of things and their relations is seen as directly accessible by the investigation of thought.” That’s a monumental shift that’s hard to appreciate from today’s perspective. But let’s try.

Imagine that everyone you know believes that the earth is a flat thing surrounded by water, and that Zeus sits above the earth throwing lightning bolts down whenever he gets mad. Your parents, your friends, your wife, your priest – they all believe that and talk about it as if it’s just a simple fact. But you don’t think that sounds quite right. It doesn’t fit with your experience. You’ve never actually seen Zeus, but you have seen the clouds crash into each other during thunderstorms. And if the earth is a flat thing surrounded by water, then where do the stars go when they dip below the horizon?
The mental leap it must have taken to realize that everything everyone you know believes is untrue, and that maybe things have naturalistic explanations, is nearly impossible to fathom. How was Anaximander able to make the leap? Rovelli cites three things that made Miletus particularly fertile intellectual ground during Anaximander’s time:

In Rovelli’s telling, democracy seems to have been born of the same factors that led to naturalistic thinking – an informed citizenry, healthy debate, and criticism among equals. Cultural crossbreeding, particularly with the Egyptians, may have shown Thales, upon whose work Anaximander expanded, and Anaximander himself, the limits of their ideas. Importantly, the Egyptians could have shown them that it was even possible for their ideas to be wrong.

But the phonetic alphabet seems to me to be the most impactful of the three on the contributions Anaximander would make. Prior to the eighth century BCE, the world’s writing systems all used hieroglyphs, cuneiform, logograms, or consonants. As a result, reading and writing “remained the domain of professional scribes for millennia.”

The Phoenician alphabet that the Greeks adopted in 750 BCE had no vowels and seven more consonants than the Greeks needed to capture their consonantal sounds. No vowels meant that you couldn’t just listen to a word and write down the sounds, or conversely, couldn’t just sound out words by reading them. At some point, in the process of adopting the Phoenician alphabet, a Greek or group of Greeks had an idea: turn those extra Phoenician consonants into Greek vowels (α, ε, η, ι, ο, υ, ω) to capture all the sounds their voices make when speaking.

That was an enormous unlock. “Instead of recognizing the written word, one could simply pronounce it and recognize it by the sound, even without preliminary knowledge of the particular written word in the text,” Rovelli writes. “The first technology in human history capable of preserving a copy of the human voice was born.”

Correlation may not equal causation, but as Rovelli observes:

And came Anaximander, who changed the way we see the world. I suspect that the phonetic alphabet contributed to his radical insights in a few ways:

Anaximander’s writings are lost to time, and even less is known about his thought process, so this part is guesswork. I can imagine, though, Anaximander sitting down with a wax tablet and working through his ideas in private before committing them to parchment and the critical eyes of a wider audience. You don’t want to bring out your half-baked idea that the gods aren’t that powerful, actually, without a little sharpening.

Whatever the specifics, it’s likely that without the phonetic alphabet, Anaximander would not have been able to form or share the ideas that would give birth to science. It’s equally unlikely, though, that any technology, even the best we have today, would have been able to make the mental leap that Anaximander did.

Anaximander saw the sun, moon, and stars move in the heavens with his own eyes. He felt the rain on his skin after feeling the sun beat down on his head. He heard the rumble of the thunder, and when he looked up, he saw the clouds crashing in the sky. He had never met Zeus. He must have felt in his bones that something was amiss in the explanations he’d been given, and in his mind, he must have felt that nagging curiosity familiar to all of us when something doesn’t seem quite right.

His was a very human insight, aided by the best technology available at the time. But even today’s best technology couldn’t have made the leap Anaximander did. I asked ChatGPT, and it admitted that, if fed everything written and said to that point, it couldn’t have explained things via natural laws.

Anaximander’s story is useful in its simplicity: one technology, one man’s radical insight, many discoveries. We could tell similar stories from the Scientific Revolution with more characters: Copernicus, Galileo, Bacon, Newton.
Copernicus was born just 33 years after the invention of the printing press, and it’s not hard to imagine that he was one of the first sufficiently genius humans to have access to the works of Aristarchus of Samos, Ptolemy, Islamic astronomers, and Neoplatonic philosophers. He reinterpreted the astronomical data from Ptolemy’s Almagest through the lens of his radical new insight: what if the sun were at the center and the earth orbited around it?

Francis Bacon was born into the Renaissance, a period that saw a resurgence of interest in ancient Greek philosophies, including Aristotle’s advocacy for empirical observation and logical reasoning. He planted the seeds of the scientific method in a critique of the existing Aristotelian approach, arguing instead for empirical observation paired with inductive reasoning and experimentation.

Both heliocentricity and the scientific method required counterintuitive leaps off of the accumulated knowledge made broadly available by the printing press, and in turn, the printing press enabled the spread of Copernicus’ and Bacon’s ideas for further refinement by other thinkers, which shaped them into the forms that survive to this day.

If all of the radical mental leaps that need to be made to understand the universe and our own minds have been made, if there’s no need for further Anaximanderian or Copernican insights, if the rest is just experiment and calculation, then AI might solve the remaining mysteries of the universe with or without our help.

That may be the case, but I find it highly unlikely. Anaximander’s contemporaries didn’t suspect that godless explanations were within the realm of possibility. Physics was pretty much settled after Newton, until it wasn’t. My overwhelming suspicion is that AI will help uncover new discontinuities that will require fresh human insights, and that at the outer edges of science, humans will make new leaps that we can explore with the help of AI.
In that case, I don’t think the framing of AI as simply a tool that we’ll use to do things for us is quite right. I think we’ll use what we learn building AI to better understand how our minds work, define the things that humans are uniquely capable of, and use AI as part of a basket of techniques we use to enhance our uniquely human abilities. Even as AI gets smarter, it will fuel us to get smarter and more creative, too.

Chess and Go provide useful case studies.

Chess and Go

Games are a testing ground for AI. With clearly defined rules and goals, even the most complex games are “tame problems” as compared to the “wicked problems” of carrying on nuanced conversations, pushing the frontiers of scientific knowledge, and understanding the human mind. So it’s instructive to look at how humans responded when AI captured those first frontiers to understand how we might respond as it captures more.

As AI became superior at chess and then Go, instead of giving up, we got better, too.

In 1997, IBM’s chess-playing computer, Deep Blue, faced off against humanity’s best chess player, Garry Kasparov, in a six-game rematch. The year prior, in their first matchup, man had defeated machine. And then the machine got smarter, upgraded with faster hardware and better training.

There was a bit of hysteria around the event, as often happens. Washington Post staff writer Joel Achenbach captured the sentiment in the lede of his mid-contest article: “The greatest chess player the world has ever known is struggling to defeat a machine. It's another wonderful opportunity for the human race, as a species, to engage in collective self-loathing.”

Achenbach went on to categorize the dire proclamations made by competitor publications:

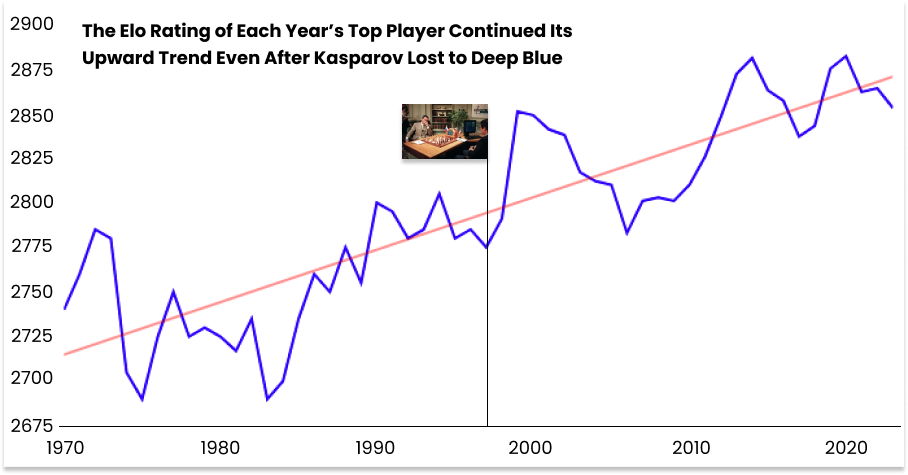

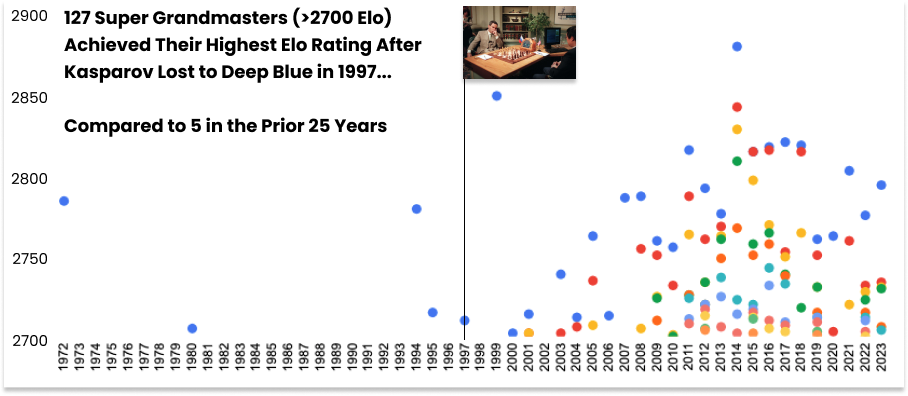

Sound familiar?

You know how the match turned out. Deep Blue beat Kasparov 3.5 to 2.5. Kasparov had failed to defend humankind from the inexorable advance of artificial intelligence. Our species was defined as losers. The Brain lost its Last Stand. Early indications of how well our species might maintain its identity, let alone its superiority, in the years and centuries to come were not good.

Some commentators even questioned whether there was a point to playing chess anymore. What did it mean to be the best in the world at chess if computers would always be better?

But something funny happened after Deep Blue. Chess grew in popularity, and its players grew in skill. Looking at the Elo rating of the top chess players each year, the upward trend continues unbroken after Kasparov’s defeat. The best kept getting better – that’s interesting¹.

What’s more interesting is how many people played great chess before and after Deep Blue. Only five Super Grandmasters, players with Elo ratings above 2700, achieved their highest rating in the 25 years from 1972 to 1997 (inclusive). In the 26 years since, 127 Super Grandmasters have broken the 2700 mark. Again, this data isn’t perfect², but the shift is so dramatic that even if the data isn’t pristine, it’s directionally correct. Not only were the best getting better, many, many more people were getting very, very good.

Inflation aside, the explosion in the number of Super Grandmasters can be explained by a combination of more players, from anywhere in the world, training with improving chess engines – engines good enough to beat the world’s best player, now handily – and honing their skills online in competitions against each other and the machines.

This doesn’t mean that it’s now easy to be a great chess player, or that everyone is now a Super Grandmaster. You can’t bring the chess engine to a competition (and if you try to have it send you messages via anal beads, you’ll get in trouble).
But it means that more people with the requisite talent and the willingness to put in the work can get great at chess than before. The machines have pushed the humans to get better, and helped make them better.

Whenever AI achieves something, though, the goalposts move. Of course a computer can beat a human in chess, it’s just brute force calculations. Now Go, there’s a complex game.

Go is an ancient Chinese strategic board game in which two players take turns placing black and white stones on a grid with the objective of capturing territory by surrounding their opponent's stones and controlling more of the board. There are roughly 10¹⁷⁰ possible Go board positions, dwarfing the number of atoms in the universe and a whole googol (10¹⁰⁰) more complicated than chess. We lost chess, but surely computers couldn’t beat us in Go, right?

You know this one too. In 2015, DeepMind’s AlphaGo beat the top European player, Fan Hui, five games to zero. The next year, it beat the world’s top player, Lee Sedol, four to one. There’s a great documentary on the whole thing available for free on YouTube.

As with chess, instead of discouraging human players, AlphaGo led to an increase in both popularity and human skill. As the dramatic contest made people aware of the game, Go boards sold out worldwide. And AlphaGo pushed the best to get better. Here’s Lee Sedol:

The documentary ends with Fan Hui walking through a vineyard, daughter on shoulders, reflecting on playing against AlphaGo:

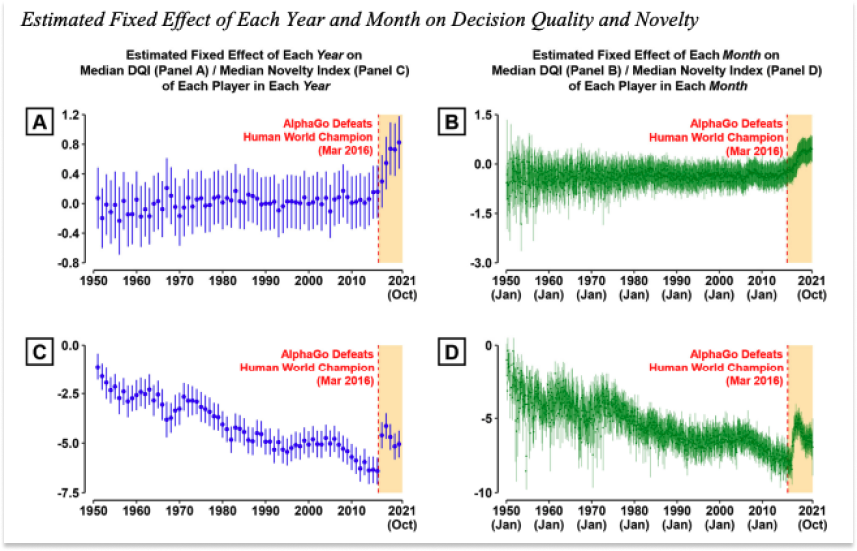

AlphaGo also improved the quality and novelty of human play dramatically beyond the greats. In a March 2023 paper, Superhuman Artificial Intelligence Can Improve Human Decision Making, a team led by Minkyu Shin analyzed 5.8 million move decisions made by human Go players over the past 71 years for quality and novelty. See if you can spot where AlphaGo beat the human world champion for the first time.

Those charts are beautiful. They show that not only have human players gotten better at Go because of AlphaGo, they’ve also gotten more creative.

What’s interesting to me is that, while AI has improved the level of play in both chess and Go, it’s had a more significant impact on creativity in Go, because, according to ChatGPT, “Go is a more complex game with a larger search space and more possibilities, which allows for a greater scope of innovation and creativity.”

That should be cause for optimism as the things AI gets good at become increasingly complex, with larger search spaces and more possibilities, as it moves from tame problems like games to wicked ones like conversation, scientific research, and understanding the human mind.

Doubling Down on Our Minds

If there’s one key message I want you to take away from reading this, it’s that new knowledge transfer technologies unlock new and better ways of thinking.

Human brains are not static; they’re dynamic, and capable of improving. New technologies can spur those improvements. The phonetic alphabet and the printing press didn’t just help spread ideas, they made us smarter in the process. While we’re still early in the development of AI, chess and Go provide early proof points that we’re not done improving.

Genetic evolution is a slow process, but our minds haven’t evolved through genetics alone for millennia.
As Daniel Dennett highlights in From Bacteria to Bach and Back, our brains have coevolved with memes, “words striving to reproduce.” New information – new words, ideas, arguments, images – can be “downloaded to your necktop,” where it mixes and mashes with all of the other memes you’ve downloaded and becomes part of your personal reasoning capabilities. Phonetic language, the printing press, and AI serve to distribute memes further and faster, so that we can upgrade our minds and provide fresh new insights. Dennett addresses AI directly in the closing paragraphs of his 2017 book, writing:

This separation of powers and codependence is what I’m talking about. It mirrors the phonetic alphabet and the printing press. Give them more of the heavy cognitive lifting so that we might focus on our minds’ top-down reasoning abilities, on the rare and novel sparks of insight that people like Anaximander were able to conjure.

I don’t know what those insights will be. What I do know, what I have a strong human intuition about, at least, is that if we are at the foot of one of these technological/human insight-powered turning points, people are going to spend a lot of time and effort training their minds in order to generate those insights.

Far from giving up on learning because AI is smarter than us, pushing our brains is only going to get more popular, and we’re only going to get better, just like we did in chess and Go.

By pushing what AI can do and learning how it thinks, we’ll learn more about how our own minds work, and what makes them unique. We’ll come up with wild theories, like this one from Kevin Kelly – “That our brains tend to produce dreams at all times, and that during waking hours, our brains tame the dream machine into perception and truthiness.” He came up with it while playing with generative AI, by noticing similarities between AI’s hallucinations and our dreams and making a uniquely human leap based on his intuition.

Wild theories based on facts, experience, and intuition (which we’ll then subject to the scientific method, of course) will be at a premium. I suspect that we’re going to see an explosion in the popularity of things like meditation, breathwork, psychedelics, lucid dreaming, ongoing education, sleep, nootropics, writing, walks in nature, tutoring, exercise, alcohol-cutting, in-person group experiences, debates, and all sorts of ways that might assist in their creation.
We can even use AI to help us train in new, personalized ways, the way Greg Mushen used ChatGPT to get himself addicted to running.

This tweet is a good metaphor, because while AI can assist in our training, we still have to go out and run the miles ourselves. Part of what makes human insight valuable and different is the hands-on experience of doing, of feeling the frustration when something doesn’t quite make sense, of feeling the joy of figuring something out after a long struggle. We’ve all worked for middle managers too far removed from the day-to-day to add much value; we’re at risk of something similar happening if we hand too much of our thinking over to AI. Intuition is earned.

Some people will be happy letting AI do the grunt work. Not everyone will see AI’s advance as a challenge to meet, just as I have not taken advantage of chess and Go engines to become better at either of those games. But I think, as in chess and Go, more people will get smarter. The process will produce more geniuses, broadly defined, ready to deliver the fresh, human insights required to ignite the next intellectual revolution.

If we can walk the line, the result, I think, will be a deeper understanding of the universe and ourselves, and a meaningful next leg in the never-ending quest to understand and shape our realities.

Thanks to Dan and Puja for editing!

That’s all for today! We’ll be back in your inbox on Friday with a Weekly Dose of Optimism, and we mayyy drop a little extra in there on Thursday, too.

Thanks for reading,
Packy

1 Note that this data isn’t perfect – it’s surprisingly hard to find a list of the top Elo rating achieved each year, and I didn’t want to waste time going through year by year here, so I went with ChatGPT and spot checked. It’s close enough and the trend is correct. That 1999 spike is real though – it seems as if losing to Deep Blue gave Kasparov a jolt of motivation.
2 There’s an ongoing debate over whether there’s been inflation in the Elo ratings over the past few decades. For an explanation of how the Elo rating is calculated and whether there has been rating inflation, I asked ChatGPT to summarize. You can see the conversation here.
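
For readers who want the mechanics behind footnote 2, here is a minimal sketch of the standard Elo formulas: the expected score E_A = 1 / (1 + 10^((R_B - R_A) / 400)) and the update R_A' = R_A + K * (S_A - E_A). The function names and the default K-factor of 10 are assumptions chosen for illustration; federations apply different K values to different players, and this is not a reproduction of the linked conversation.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score for player A against player B (between 0 and 1)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def updated_rating(rating_a: float, rating_b: float,
                   score_a: float, k: float = 10.0) -> float:
    """New rating for player A after one game.

    score_a is 1.0 for a win, 0.5 for a draw, 0.0 for a loss.
    k is the K-factor; the default of 10 is an illustrative assumption.
    """
    return rating_a + k * (score_a - expected_score(rating_a, rating_b))


if __name__ == "__main__":
    # Two hypothetical players on either side of the 2700 mark.
    a, b = 2720.0, 2680.0
    print(f"Expected score for A: {expected_score(a, b):.3f}")
    print(f"A's rating after a win:  {updated_rating(a, b, 1.0):.1f}")
    print(f"A's rating after a loss: {updated_rating(a, b, 0.0):.1f}")
```

Because each game moves a rating only by K times the gap between the actual and expected result, a favorite gains little for winning and loses more for losing, which is part of why comparing ratings across decades is contested.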
