JC's Newsletter - Essay: AI is the future of health

Dear friends,

Every week, I’m sharing an essay that relates to what we are building and learning at Alan. These essays are fed by the articles I’m lucky enough to read and capitalise on. I’m going to try to be provocative in these essays to trigger a discussion with the community. Please answer, comment, and ping me! If you are not subscribed yet, it's right here! If you like it, please share it on social networks!

The evolution of AI in healthcare: from prediction to reality

In "L’Assurance Maladie au Partenaire Bien-Être", I advocated for Europe to spearhead advancements in AI for healthcare, outlining the vast potential it holds. However, AI has progressed even more rapidly than I anticipated since the book was published, particularly with the advent of Large Language Models. Our hands-on experience with Mistral.ai has solidified my belief that these developments have the potential to revolutionize our healthcare landscape.

The European healthcare crisis

The healthcare system in Europe is facing a multitude of challenges, some of which are deeply rooted in its structure. As many parts of the system are floundering, citizens and professionals alike are feeling the strain:

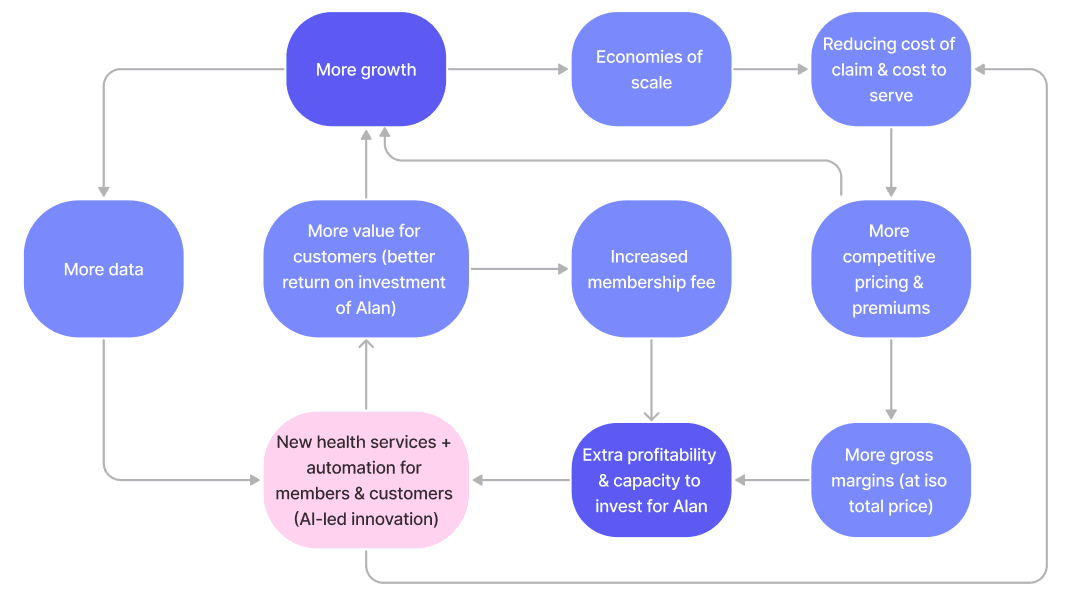

The European healthcare system, while robust in many ways, is facing significant systemic problems. To ensure that every individual receives the quality care they deserve, systemic overhauls and a prioritization of both preventive and reactive care are crucial. We believe that AI can help.

The renaissance of healthcare: DoctorAI

Picture a service that provides free, unlimited access to ultra-personalized virtual healthcare. There would be no paywalls, no appointments, no waiting times, and answers within seconds. DoctorAI would give everyone unlimited, free access to top-tier medical consultation whenever they need it. It would be unparalleled in accessibility.

In 2009, Google ran tests in which they intentionally slowed down their search results to see the impact. They found that even a delay of 200 to 400 milliseconds (less than half a second) led to a notable and measurable decrease in searches. Speed and accessibility will change how we think about accessing care, and will increase usage.

Members could interact with a doctor avatar via chat, phone call, or video visit. The AI would accept multi-modal inputs (talk, text, images, health data from wearables) to build an intimate understanding of each person's medical history and needs. DoctorAI would converse with patients in their preferred tone and at their education level, both of which would already be known. DoctorAI would possess not just immense knowledge but also boundless empathy. It would also constantly update its knowledge, staying abreast of medical advancements.

Individuals often hesitate to ask their doctors what they perceive as 'simplistic' questions. AI democratizes the information-seeking process, mirroring how people currently use anonymous forums for health concerns. It would suggest customized prevention plans while proactively monitoring for potential problems. If higher-level care is needed, the AI could instantly connect patients to the optimal human specialist.
With the power to delve into a member's complete medical history, DoctorAI would offer tailored advice based on past health events, ensuring continuity of care. DoctorAI could provide prescriptions, streamlining the treatment process. Staying on track with medical plans can be challenging, so DoctorAI could craft personalized adherence plans, ensuring consistent follow-ups and maximizing treatment outcomes.

Backed by peer-reviewed studies, DoctorAI would perform as well as or better than human doctors on a list of tasks. But the AI would remain transparent about its confidence level for each interaction. Human oversight would still provide judgement, accountability, and moral guidance. I believe that it would dismantle the conventional compartments of medical specializations, endorsing a more integrated, member-focused model.

Just as France's 2024 budget aims to save 15 billion euros by trimming health expenses [Europe1], AI in healthcare, with its almost zero incremental cost per query, could significantly pull down the overall cost of healthcare. Of course, it will also ease administrative burdens such as paperwork and note-taking for doctors.

The audacious vision of DoctorAI isn't just to be another tool in the healthcare toolkit; it's to redefine the very essence of healthcare interaction and delivery. It is a way to improve care, reduce costs, improve doctors' lives, and help people live longer, healthier lives.

Risks

One concern is that AI could give poor or biased advice. An AI model is only as good as the data it was trained on. If that data reflects societal biases or lacks diversity, the AI's recommendations could be misleading or discriminatory. Rigorous testing on diverse datasets is essential to avoid unfair or inaccurate outputs. We should also compare this against the baseline of medical errors made by humans (and those numbers are high).

Over-reliance on AI is also a risk.
Doctors and patients may be overly trusting of AI suggestions and fail to critically evaluate them. However, AI is prone to unexpected errors and should not be treated as infallible. Its advice requires human verification, especially for high-stakes medical decisions. See this article on hybrid intelligence.

Transparency and trust: if AI is integrated into patient care, transparency becomes paramount. Patients should know if they are receiving advice from an AI system. Will patients trust an AI system's advice, or will they seek a "second opinion" from a human? AI predictions are probabilistic, not definitive diagnoses. Care must be taken to communicate the level of uncertainty in AI-generated recommendations: patients may misunderstand the meaning of a 60% risk score. That said, doctors' recommendations are also probabilistic; doctors just don't know the exact probability.

The ethical use of patient data to train healthcare AI is another area requiring caution. De-identified data is preferable, but model performance often depends on sensitive personal information. The privacy risks must be balanced against the societal benefits of more accurate AI diagnostics and treatments.

We should also consider the risk of dehumanizing care. Empathy and connection with another human being are known to have a big effect on treatment and on mental health. That is why we should push for a hybrid system where humans and doctors remain the cornerstone of the most important interactions.

Promising early results

Early studies have yielded impressive results. In our own internal pilot study, responses from the AI system GPT-4 were rated significantly higher for empathy and quality than those from human doctors. This aligns with the findings of a 2023 JAMA Internal Medicine study, in which medical professionals graded AI responses to patient questions as more empathetic and of higher quality.
To give perspective, while a mere 4.6% of doctors' posts were rated "empathetic" or "very empathetic", a whopping 45% of ChatGPT responses achieved this distinction, nearly ten times as many. Researchers at Stanford University also found that over 90% of the time, curbside consult responses generated by GPT-4 were considered "safe" by physicians reviewing the AI's advice. 90% is obviously not enough, but it is a good start.

Real-world implementation also shows promising signs. Doctors using tools like ChatGPT report that the AI helps them communicate more compassionately with patients and families. It can quickly summarize complex medical histories to assist with evaluations in undiagnosed disease programs. AI is also being used to handle repetitive paperwork and administrative tasks, freeing up more time for direct patient care. The journey ahead may be long, but the early signs are promising.

Why should Alan do it?

Alan is uniquely positioned to seize this new wave of technological innovation. This is not merely a call to action; it is a strategic imperative. Alan's existing strengths (a robust data backend, strong relationships with members, a commitment to integrated and high-quality products, a readiness to take calculated risks, and a team flexible enough to adapt) form a solid foundation for pioneering change.

Alan could become the best place for doctors to work: envisage a world where healthcare professionals work 40% less but earn the same, are less prone to burnout, and make fewer errors, a vision that DoctorAI can help realize.

The operational efficiency gains could serve as a long-term moat for Alan. Because claims represent a large chunk of our cost structure, reducing them will help us be more competitive on price while keeping a better margin than the competition, thanks to our membership fee. There is also significant potential for creating value for our members and medical professionals alike.
By reducing response times and increasing productivity, DoctorAI offers an experience that could very well redefine the metrics by which healthcare efficiency is measured.

In working with public systems and politicians, Alan has the chance to shape policies and to set standards for how AI is approached in healthcare. If we do not step up to this challenge, others will, and there is no guarantee their visions will align with the ethical and quality standards that Alan upholds. The stakes are not only commercial; they are societal and ethical. We are talking about shaping the future of healthcare itself.

Not embracing this revolution would mean ignoring the inevitable. The AI wave is coming, and Alan has a chance not just to ride it, but to lead it. Our current capabilities, our vision for differentiation, and our commitment to quality and ethics make us not just a participant in this revolution, but a potential leader. Let's not watch the future happen; let's shape it.

Some articles I have read this week

👉 Stanford and MIT Study: A.I. Boosted Worker Productivity by 14%–Those Who Use It ‘Will Replace Those Who Don’t’ (CNBC)

👉 Product-led AI (Greylock)

👉 Language Model Sketchbook, or Why I Hate Chatbots (Maggie Appleton)

👉 Apple just announced (along with the new iPhones) their Apple Watch Series 9 (Apple)

👉 An Interview with Palantir CTO Shyam Sankar and Head of Global Commercial Ted Mabrey (Stratechery)

👉 The way that Jensen Huang runs Nvidia is wild (Twitter)

👉 An Interview with Marc Andreessen about AI and How You Change the World (Stratechery)

👉 A tweet from Shreyas Doshi on goals & motivations of users (Twitter)

It’s already over! Please share JC’s Newsletter with your friends, and subscribe 👇 Let’s talk about this together on LinkedIn or on Twitter. Have a good week!
