👉 Good Product Manager / Bad Product Manager (Ben Horowitz & David Weiden). ❓ Why am I sharing this article? Being a good product manager is so hard that most product managers at most companies fail to be good, and instead are bad. Because product management is a highly leveraged position, a bad product manager leads to many other bad consequences, generally including the wrong product being built, which generally has a significant impact on revenue, morale, and reputation, of both the product manager and their company.

➡️ I like the focus not only on shipping, but on building a profitable business. How do we assess this in our hiring process for PMs?

CEO of the product:

➡️ We have a collaborative approach, but at the end of the day, I agree that PMs (and other stakeholders in a crew) must do whatever it takes to make a product successful.

Bad product managers have lots of excuses: not enough funding, the engineering manager is an idiot, Microsoft has 10 times as many engineers working on it, I'm overworked, I don't get enough direction. Once bad product managers fail, they point out that they predicted they would fail.

Good product managers balance all important factors.

➡️ Knowing our members and customers very well, and having a clear view of the problems we are solving.

Competition: Good product managers understand the architectural and business capabilities of the competition and know where the competitors can go easily and can't go at all. Good product managers know they must be better or different or they're dead. Note that "different" can mean things other than product differences, like integration or distribution.

➡️ It is important to note that differences can be broad, and PMs are expected to think about them as a whole: business x technology. Do our PMs have an exhaustive view of what the competition is doing in their area? And what assets do we have to win?

Bad product managers ask customers leading questions and get biased answers. Bad product managers blindly listen to the loudest customers, and define a product that addresses yesterday's needs of a handful of companies.

➡️ PMs should build conviction about what the future looks like.

Clear, written communication with product development: Good product managers define a clear product vision and target that empowers engineering to fill in the details that are difficult to specify or anticipate. As part of this, good product managers also explain why. A good test of a product manager is for someone outside the product team to ask 5 different people in engineering, QA, and doc what their product is supposed to do and why and get the same answer.

➡️ A good test to run with our crews: clear goals and clear advantages.

➡️ That is why I think product management and product marketing should be one.

Focus on the sales force and customers: Good product managers are loved by the salesforce. A good product manager will be known personally or by reputation by at least half the salesforce. Good product managers know that salespeople have a choice of products to sell and, at a higher level, companies to work for, and selling a particular product manager's product is optional. Good product managers know that if the sales force doesn't like their product, they will fail.

Knowledgeable of what actually happens in the field: nothing turns off a salesperson more than a product manager who rambles on about their product features and seems to have no idea of the salesperson's actual situation. Good product managers know whether the salesperson understands what the product does (and if not, they start with easy-to-understand basics) and whether customers do (if not, they explain why a customer would care more than what the product does), etc. Good product managers speak from experience: "When I helped Bob close this deal..."

Around: they've been out in the field, been to sales training, been to SE training, been to pitches, etc.

➡️ How much time do our PMs spend with customers? Do they help close deals?

Marketing & communication: Product management requires an understanding of, and proficiency in (though not deep expertise in), a wide array of marketing functions. For example, good product managers should be able to work effectively with PR and press and analysts, understand how to execute a product launch, develop collateral, staff a tradeshow, train the salesforce, etc.

In particular, a product manager should make sure a core set of updated collateral exists (annotated presentation, written positioning, primary silver bullets). If your primary competitor is abc and the most recent competitive positioning on abc is nine months old and refers to the last release of their product, this is indicative of a bad product manager. Also, good product managers take competition into account in developing their messages, but are not a slave to what the competition does.

👉 Tobi Lutke - Embrace the Unexpected (Join Colossus) ❓ Why am I sharing this article? Good quotes about long-term thinking and focus. I’d like us to use the one about “making decisions they assume the company 10 years from now wishes they would've done” more often. I think it is very good. I like the way they framed the different projects and their status. Should we be even more explicit?

People should make decisions based on the decision they assume the company 10 years from now wishes they would've done, but sometimes you've got to just look at what's there and be very, very practical. In the end, I think I stopped about 60% of what we were working on. None of that was because people made incorrect choices. Shopify is very bottom up. People can write proposals for every opportunity they see, and these go into a system called GSD, which stands for get shit done. There are phases: a proposal phase, a prototype phase, a build phase, and a release phase, and this system allows everyone in the company to see everything that's going on. To plan all of this, once a year I write product themes for the company, things that we want to make true over the year, and they then decompose into different projects. As proposals are submitted for transition to the prototype or build phase, we can have great conversations about: is this a not yet? Is this a hell yes? Where does this go in the priority stack? And I think building this out has been incredibly clarifying and very, very good for the company.

Companies can get very, very distracted in a lot of ways when they allow themselves to do things that aren't the mission. This is especially true in a world of product. Again, if you move into adjacencies, I don't think you will have a world-class product in those adjacencies. You're not going to outcompete someone's main mission with your side quest.

👉 Set “Non-Goals” and Build a Product Strategy Stack – Lessons For Product Leaders (First Round Review) ❓ Why am I sharing this article? Not a fan of OKRs? Try NCTs for clearer goal-setting.

Introducing NCTs: Narratives, Commitments, Tasks.

Narrative: “The narrative is similar to the objective in an OKR, but teams are specifically recommended to make it longer. Describe the strategic narrative that the team wants to accomplish in at least a few sentences. Create a really clear linkage between the goal that’s being set, and the strategy that goal ladders up to.”

Commitment: “Each narrative will have 3-5 objectively measurable commitments. The word commitment is used very deliberately here — you should plan on achieving 100% of those commitments so that your team knows exactly what they’re expected to accomplish at the end of the quarter. Commitments are the evidence that the team has made progress on the narrative.”

Tasks: “What work needs to be done in order for the team to achieve its commitments and make progress on the narrative? Tasks are the most fungible piece of a team’s NCT — the idea is to give teams a warm start on making progress on their goals. If at the end of a quarter a team has achieved all of its commitments, but none of the tasks — that’s a successful quarter. On the other hand, if a team completes all of their tasks but none of their commitments, that’s just checking the box. It’s not a successful quarter.”

Here’s a sample NCT using the previous Tripadvisor example:

Narrative: Today, travelers who navigate Tripadvisor using Google are often leaving and re-entering Tripadvisor multiple times in a day. We can build a more retentive user experience by enabling them to organize, share and access all their trip content, no matter what device they are on, or where they are in their planning journey. As a first step, we’ll increase awareness and usage of the “saves” feature and new trip plan feature.

Commitments:
- Increase the number of unique savers from X to Y by the end of Q1.
- Increase saves per saver from W to Z by end of Q1.
- Increase 7-day repeat rate for overall traffic (due to increased save usage).

Tasks:
- Launch new backend saves service.
- Launch saves funnel health metrics dashboard.
- Test suggesting defaulting trip names.
- Single-factor test save icon.

👉 Thread by GergelyOrosz: A common mistake of many first-time CTOs or VPs of Engineering (PingThread). ❓ Why am I sharing this article? I found this article interesting because it challenges some of my views. Having experienced them (and having seen structures without them), I believe that some mechanisms produce much better outputs. On the other hand, I really believe in increasing autonomy while keeping radical transparency and accountability. What should we edit/change?

Mandating that all engineering teams in their organization start working {the same way using methodology X}. They usually feel this is a big win for them, and the company. In reality: it's almost always a disaster.

Real-world examples: “All teams will run 1-week sprints and report whether weekly goals were hit or not.” “We are mandating Scrum with 2-week sprints, reporting on velocity.”

👉 Complexity and Strategy (Hackernoon). ❓ Why am I sharing this article? I found the notion of features that interact with many others, and thereby slow down the development of all features, very interesting. Do we identify them well? What are our “bottleneck features”? The focus on clear, isolated, simple components is also interesting. Overall, I believe it is a great read that should trigger discussions about how we approach this at our scale.

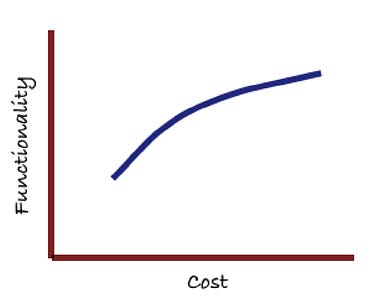

➡️ Do we have similar features that interact with many other features?

When the Word team went to estimate the cost of these features, they came back with estimates that were many times larger than PowerPoint’s estimates. The bulk of the growth in estimates was because Word’s feature set interacted in ways that made the specification (and hence the implementation) more complex and more costly. How would the feature interact with spanning rows and spanning columns? How about running table headers? How should it show up in style sheets? How do you encode it for earlier versions of Word? Features interact, intentionally, and that makes the cost of implementing the N+1 feature closer to N than 1.
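One way to make that last sentence concrete is a back-of-the-envelope model. Its assumptions are ours, not the article's: each feature costs a fixed $c_0$ to build on its own, every pair of features interacts, and each interaction adds a roughly constant $c_1$ to specification and implementation. The total cost of $N$ features and the marginal cost of feature $N+1$ are then:

$$
C(N) = c_0 N + c_1 \frac{N(N-1)}{2}, \qquad C(N+1) - C(N) = c_0 + c_1 N
$$

Once the interaction term dominates ($c_1 N \gg c_0$), the marginal cost grows in proportion to $N$ instead of staying constant. That is the "closer to N than 1" effect. In practice not every pair of features interacts, so real growth sits somewhere between the linear case (no interactions) and the quadratic case (all pairs interact).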

If essential complexity growth is inevitable, you want to do everything you can to reduce ongoing accidental or unnecessary complexity. Of course, the difference between accidental and essential complexity is not always so clear when you are embedded in the middle of the project and to some extent is determined by the future evolution of the product. When we started the OneNote project (first shipped in Office 2003), we seriously considered whether to build it on top of the very rich Word editing surface. The OneNote team eventually decided to “walk away” from Word’s complexity (and rich functionality) because they believed they needed to be free to innovate in a new direction without paying the cost of continually integrating with all Word’s existing functionality. They would settle for a much smaller initial “N” in order to be able to innovate in a new direction. That is a decision the team never regretted.

Potential solutions? Bill wanted (still wants) a breakthrough in how we build rich productivity apps. He expected that the shape of that breakthrough would be to build a highly functional component that would serve as the core engine for all the applications. That model leads you to invest in building a more and more functional component that represents more and more of the overall application (and therefore results in more of the cost of building each application being shared across all applications). This view that I have described here of increasing feature interaction causing increasing essential complexity leads to the conclusion that such a component would end up suffering from the union of all the complexity and constraints of the masters it needs to serve. Ultimately it collapses of its own weight.

The alternate strategy is to emphasize isolating complexity, creating simpler functional components, and extracting and refactoring sharable components on an ongoing basis. That approach is also strongly influenced by the end-to-end argument and a view that you want to structure the overall system in a way that lets applications most directly optimize for their specific scenario and design point. That sounds a little apple pie and in practice is a lot messier to achieve than it is to proclaim. Determining which components are worth isolating, getting teams to agree and unify on them rather than letting “a thousand flowers bloom” is hard ongoing work. It does not end up looking like a breakthrough; it looks like an engineering team that is just getting things done. That always seemed like a worthy goal to me.

👉 Thread by Ken Kocienda (ex Human Interface @ Apple for 16 years) (PingThread) ❓ Why am I sharing this article? Given the power of Figma and of our design library, could a lot more of our ideas be shown as demos? Hackathons are a nice place for them, but I think demos should also be used when we want to make product decisions or allocate new resources. How can we evolve to have more demos internally?

Steve Jobs insisted on concrete and specific demos that showed what the product we were trying to make would be like. Not documents, plans, slides, or hand-wavy abstract talk. Demos. The demos had to be perfect too, to the extent of what they included. If a detail was shown, it had to be an exact proposal for what we might ship. Nobody produced useless content that wasn’t the product. We went directly at the problems we were trying to solve and the products we were trying to make. He insisted on it. Compare this method to any product manager you’ve ever worked with. Ask yourself if they help to save time, are clear in their communication, focus effort on the essentials, and catalyze the work that produces great results.

👉 Can developer productivity be measured? (The Overflow) ❓ Why am I sharing this article? Measuring engineering productivity is a big and hard question. I like the approach described in the article, especially the notion of “force multipliers”. Still, I’d like us to be able to ask the right questions about how we ship good products fast!

Long story short, measuring inputs is a deficient technique because software development is not an equation and code cannot be built by assembly line. Some have famously fallen into the trap of thinking that the work output of software development is lines of code or commits in version control. Strictly speaking, a line of code that doesn’t solve a problem is worse than no code at all.

➡️ Very true.

For example, some team members are force multipliers for the rest of their team: they may not accomplish a lot on their own, but their teammates would be significantly less productive without their help and influence.

➡️ Make sure we recognise them!

Team performance, on the other hand, is far more visible. Perhaps the best way to track it is to ask: does this team consistently produce useful software on a timescale of weeks to months? A team that produces useful software on a regular basis is productive. A team that doesn’t should be asked why not. Team productivity can be measured at an organizational scale with simple, holistic observations. And since teammates tend to be well aware of each other’s contributions (whether measurable or not), any serious failings in individual productivity can be discovered by means of good organizational habits.

Many organizations use velocity as their preferred metric for team productivity, and when done right, this can be a useful tool for understanding the software development process. Velocity is an aggregate measure of tasks completed by a team over time, usually taking into account developers’ own estimates of the relative complexity of each task. It answers questions like, “how much work can this team do in the next two weeks?”

Many organizations thrive without any hard-and-fast measures at all. In organizations where useful software is well understood to be both the goal and the primary (albeit hard-to-quantify) measured result of development work, and inputs are correspondingly deprioritized, there are profound and far-reaching implications. Developers are liberated to do their best work, whenever and wherever they’re most productive.
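The velocity metric can be made concrete with a minimal sketch. Several choices here are illustrative assumptions and are not prescribed by the article: the task model, the story-point scale, and the three-sprint averaging window. `Task`, `sprint_points` and `velocity` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class Task:
    points: int  # the team's own relative-complexity estimate (hypothetical scale)
    done: bool   # True if the task was completed within the sprint


def sprint_points(tasks: list[Task]) -> int:
    """Sum the estimates of the tasks actually completed in one sprint."""
    return sum(t.points for t in tasks if t.done)


def velocity(sprints: list[list[Task]], window: int = 3) -> float:
    """Average completed points over the most recent `window` sprints.

    Used as a rough answer to "how much work can this team do in the next sprint?"
    """
    if not sprints:
        raise ValueError("need at least one sprint of history")
    recent = sprints[-window:]
    return float(mean([sprint_points(s) for s in recent]))


# Hypothetical history of three two-week sprints.
history = [
    [Task(3, True), Task(5, True), Task(2, False)],  # 8 points completed
    [Task(8, True), Task(1, True)],                  # 9 points completed
    [Task(5, True), Task(3, False), Task(2, True)],  # 7 points completed
]

print(velocity(history))  # average of 8, 9 and 7 completed points
```

Only completed tasks count in this sketch. Because the estimates are each team's own, a velocity number is only meaningful within that team and not as a comparison across teams.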

➡️ I agree that the best indicator is: is it a good product? Does it break often? Are we happy with the pace of shipping it?

👉 David Fialkow (co-founder of General Catalyst) - Paint Outside the Lines (Join Colossus) ❓ Why am I sharing this article? Because I don’t think it only applies to founders. If you are a PM or a leader of a product, I think you should think that way too.

Number one, does that founder love their product and is willing to do anything to get people to use their product? And will run through walls to make sure the world... Number two, do they know how to sell? And selling means: can they storytell? Can they make it clear to others how important this product and this mission are? The third thing is that they absolutely have to have some form of modesty so that they listen to other people. The smartest people in the world are smart because they listen to others. Now they may not always follow the advice of others, but they listen.

👉 Brain Food: The Passions (Farnam Street) ➡️ Spend more time building than forecasting.

👉 Inside Apple Park: first look at the design team shaping the future of tech (Wallpaper) ❓ Why am I sharing this article? I loved the depth of the Apple design team’s work, and how they dig very deep to build convictions. I also liked the fact that they have co-leads and use collaborative debate to work together. No action needed for us, but I found it interesting.

Working side by side to guide this division are Evans Hankey, Apple’s VP of industrial design, and Alan Dye, VP of human interface design. Both close colleagues, confidants and friends of Jony Ive, they effectively took the helm of the Design Team after his departure from the chief design officer role in 2019.

➡️ I love this notion of co-leads, forcing people to really work together.

‘[The Apple Design Team] can share the same studio,’ Ive told Wallpaper* in 2017. ‘We can have industrial designers sat next to a font designer, sat next to a sound designer, who is sat next to a motion graphics expert, who is sat next to a colour designer, who is sat next to somebody who is developing objects in soft materials.’

Having one central Design Team across all Apple products.

Take the Human Factors Team, which blends experts in ergonomics, cognition and behavioural psychology. When AirPods’ development began a decade or so ago, human factors researcher Kristi Bauerly found herself researching the ‘crazily complex’ human ear. ‘We moulded and scanned ears, worked with nearby academics, focusing on outer ears for the earbud design and inner ears for the acoustics,’ she says. Thousands of ears were scanned, and only by bringing them all together did the company find the ‘design space’ to work within. ‘I think we’ve assembled one of the largest ear libraries anywhere,’ Hankey says. ‘The database is where the design starts,’ Bauerly continues, ‘and then we iterate and reiterate.’

The ability to sketch, model and prototype in-house creates a fluid workflow that is integral to product evolution. Prototyping is hugely important, covering everything from scale and interaction down to materials, colours, textures, and surfaces. At the heart of it all is Apple Park. Hankey and Dye enthuse about the qualities of the space. ‘It’s been designed for serendipitous meetings as well as collaboration.’

👉 A Letter To A New Product Manager (Brian Armstrong) ❓ Why am I sharing this article? Are PMs talking to customers enough? How often? I’d like PM and PMM to converge more (PM == PMM), with PMs able to communicate changes externally and act as real external owners and voices of their products. I love the focus on developing a true vision, and we are really getting there in every part of the business.

Understand the customer deeply: this means user studies; you have to go talk to the users every week and spend a lot of time with them to hear their pain and get inside their head. Be metrics driven: you should be using time series data to give you insight into what people are doing with the product and see if the changes you are making are improving things. If you haven’t instrumented the app and chosen a metric you want to improve, you are just guessing. The product manager is responsible for prioritizing the product roadmap and communicating it to the team. You need to be a communication hub, both when building consensus internally on priorities/roadmap and when communicating changes externally to customers. Users need to see that the product is continually improving by hearing about it from you. Develop product vision (conviction about where things are going in the future, making the hard calls about what to eliminate, etc.) and strive to make something truly great. You can think about it not just as eliminating customer pain, but as actually creating customer delight.

👉 Thread by Gergely Orosz on engineering performance (PingThread) ❓ Why am I sharing this article? Interesting how some large tech companies roll out tools to track the number of PRs merged per engineer, visible to everyone. There’s no target, but it incentivises people to do small and frequent diffs. EMs like it as they have a tool to notice when someone has a change of pace. When I was at Uber, 80% of performance issues on my team started with someone not landing code for, e.g., weeks. Also, before this tool was universally available at Uber, better managers manually collected stats on diffs and code reviews, brought them to calibration, then used them to get their top people better ratings. Lots of replies assume this is now a target (when it’s not) or that it’s used for perf reviews (def not at Meta; AFAIK zero plans at Uber). My point is it’s interesting, and the goal is clearly to keep small diffs & moving fast top of mind.

It’s already over! Please share JC’s Newsletter with your friends, and subscribe 👇 Let’s talk about this together on LinkedIn or on Twitter. Have a good week!